In recent years, language models have been pushed to handle increasingly long contexts, and this demand has exposed inherent problems in standard attention. Full attention scales quadratically with sequence length, so it quickly becomes a bottleneck on long inputs: memory traffic and compute grow rapidly, which makes long-context applications such as multi-turn dialogue and complex reasoning expensive to run in practice. Moreover, while sparse attention methods promise theoretical savings, they often fail to translate them into real-world speedups.

Many of these challenges arise from a disconnect between theoretical efficiency and practical implementation. Reducing computational overhead without losing essential information is not a simple task. This has led researchers to rethink attention mechanisms so that they can better balance performance with efficiency. Addressing these issues is a crucial step toward building models that are both scalable and effective.

DeepSeek AI researchers introduce NSA (Native Sparse Attention), a hardware-aligned and natively trainable sparse attention mechanism for ultra-fast long-context training and inference. NSA combines algorithmic innovations with hardware-aligned optimizations to reduce the cost of processing long sequences. It follows a dynamic hierarchical approach. First, it compresses groups of consecutive tokens into summarized block representations. Next, it computes importance scores and keeps only the most relevant blocks of tokens at full resolution. Finally, a sliding window branch preserves local context. Together, these three branches (compression, selection, and sliding window) produce a condensed representation that still captures both global and local dependencies.
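To make the three-branch idea concrete, here is a minimal pure-Python sketch of how per-branch attention outputs can be merged for a single query. As we read the paper, each branch's output is weighted by a gate in [0, 1] produced by a small learned network with a sigmoid; in this sketch the gate logits are hypothetical fixed numbers, and the names `attend`, `gated_branch_output`, and the branch labels are our own illustrative choices, not the paper's code.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attend(q, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = 1.0 / math.sqrt(len(q))
    scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
    weights = softmax(scores)
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def gated_branch_output(q, branches, gate_logits):
    """Sum each branch's attention output, weighted by a sigmoid gate.

    branches:    name -> (keys, values) that the branch exposes to this query
    gate_logits: name -> scalar; hypothetical fixed values standing in for
                 the output of a learned gating network
    """
    out = [0.0] * len(next(iter(branches.values()))[1][0])
    for name, (keys, values) in branches.items():
        gate = sigmoid(gate_logits[name])
        for j, x in enumerate(attend(q, keys, values)):
            out[j] += gate * x
    return out


# Toy example: each branch exposes a single key/value pair, so each branch's
# attention output is just that value, and every gate is sigmoid(0) = 0.5.
q = [1.0, 0.0]
branches = {
    "compressed": ([[1.0, 0.0]], [[1.0, 0.0]]),
    "selected": ([[0.0, 1.0]], [[0.0, 1.0]]),
    "window": ([[1.0, 1.0]], [[2.0, 2.0]]),
}
out = gated_branch_output(q, branches, {"compressed": 0.0, "selected": 0.0, "window": 0.0})
print(out)  # [1.5, 1.5]
```

Because every branch attends over far fewer keys than the full sequence, the cost per query is driven by the size of the three condensed key sets rather than by the raw context length.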

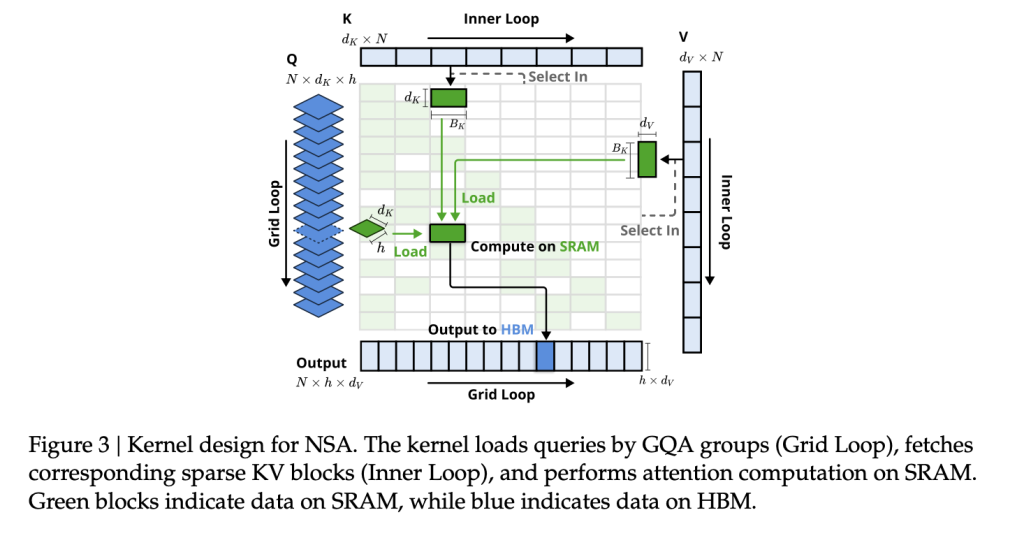

The design of NSA is also mindful of hardware constraints. By implementing specialized kernels optimized for modern GPUs, NSA achieves reduced latency in both inference and training. This careful blend of algorithmic strategy and hardware alignment makes NSA a promising candidate for improving long-context modeling.

Technical Details and Benefits

NSA’s architecture rests on two main pillars: a hardware-aware design and a training-friendly algorithm. The compression mechanism uses a learnable multilayer perceptron (MLP) to aggregate blocks of consecutive tokens into block-level representations. These coarse summaries capture high-level patterns without requiring every token to be processed at full resolution.
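The compression step can be sketched as a sliding block of `block_len` tokens that advances by `stride` and is mapped to one vector by a small MLP `phi`. This is a minimal illustration under stated assumptions: the weights below are hypothetical (chosen so that `phi` reduces to mean pooling), any position information a real implementation may add is omitted, and `make_mlp` and `compress` are names we made up. In NSA the same kind of mapping is applied to keys and to values separately.

```python
def make_mlp(w1, b1, w2, b2):
    """Two-layer MLP phi over a flattened block: W2 @ relu(W1 @ x + b1) + b2."""
    def phi(block):
        x = [v for token in block for v in token]  # concatenate the block's token vectors
        hidden = [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(w1, b1)]
        return [sum(w * h for w, h in zip(row, hidden)) + b for row, b in zip(w2, b2)]
    return phi


def compress(tokens, block_len, stride, phi):
    """Map each window of block_len consecutive tokens (advancing by stride) to one vector.

    With stride < block_len the blocks overlap. Applied separately to keys
    and to values, this yields the coarse sequence the compression branch attends to.
    """
    if len(tokens) < block_len:
        return []
    num_blocks = (len(tokens) - block_len) // stride + 1
    return [phi(tokens[i * stride : i * stride + block_len]) for i in range(num_blocks)]


# Hypothetical weights: one hidden unit that averages a 4-token block of 1-d vectors,
# so phi reduces to mean pooling. A trained phi would have learned weights instead.
phi = make_mlp(w1=[[0.25] * 4], b1=[0.0], w2=[[1.0]], b2=[0.0])
keys = [[float(i)] for i in range(1, 9)]  # eight 1-d "keys": 1.0 ... 8.0
print(compress(keys, block_len=4, stride=2, phi=phi))  # [[2.5], [4.5], [6.5]]
```

Eight tokens become three summary vectors here; in general a sequence of length N yields roughly N / stride compressed entries, which is where the savings of the coarse branch come from.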

Following compression, the token selection module operates blockwise. Rather than picking individual tokens, it selects contiguous blocks of tokens, exploiting the observation that neighboring tokens tend to receive similar attention scores; block importance is derived from the attention scores already computed by the compression branch. Selecting contiguous blocks also avoids random memory access. The sliding window branch handles local context. By keeping local and global information in separate branches, NSA preserves the fine-grained details that many tasks depend on.

On the hardware side, NSA is designed to make efficient use of GPU resources. Queries belonging to the same group (in grouped-query attention) are loaded into SRAM together, and because those heads share the same selected key-value blocks, each block is fetched once per group rather than once per head, minimizing redundant transfers. These optimizations yield noticeable speedups in both the forward and backward passes: experiments show improvements of up to 9× in forward propagation and 6× in backward propagation on long sequences, compared with full attention.
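The following minimal sketch illustrates blockwise selection shared across a query-head group, plus the sliding-window positions. It rests on a simplifying assumption of ours: selection blocks line up one-to-one with compression blocks, so each head's attention probabilities over the compressed keys can be used directly as block importance. Summing those probabilities across the heads of a group means every head picks the same blocks, which is what lets a kernel load each selected key-value block once per group. All function names are hypothetical.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def group_block_importance(group_queries, compressed_keys):
    """Block importance shared by all query heads in one GQA group.

    Each head's attention probabilities over the compressed keys are summed, so
    every head in the group ends up selecting the same key-value blocks.
    Simplifying assumption: selection blocks line up one-to-one with compression blocks.
    """
    scale = 1.0 / math.sqrt(len(compressed_keys[0]))
    importance = [0.0] * len(compressed_keys)
    for q in group_queries:
        scores = [scale * sum(a * b for a, b in zip(q, k)) for k in compressed_keys]
        for i, p in enumerate(softmax(scores)):
            importance[i] += p
    return importance


def top_blocks(importance, n):
    """Indices of the n highest-scoring blocks, in ascending (memory) order."""
    ranked = sorted(range(len(importance)), key=lambda i: importance[i], reverse=True)
    return sorted(ranked[:n])


def block_token_indices(block_ids, block_size, seq_len):
    """Expand selected blocks into contiguous runs of token positions."""
    positions = []
    for b in block_ids:
        start = b * block_size
        positions.extend(range(start, min(start + block_size, seq_len)))
    return positions


def window_token_indices(t, window):
    """Positions covered by the sliding window ending at query position t."""
    return list(range(max(0, t - window + 1), t + 1))


# Two query heads in one group, four compressed blocks of 4 tokens each (16 tokens).
compressed_keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
group_queries = [[2.0, 0.0], [1.0, 1.0]]
importance = group_block_importance(group_queries, compressed_keys)
chosen = top_blocks(importance, n=2)
print(chosen)                                # [0, 1]
print(block_token_indices(chosen, 4, 16))    # [0, 1, 2, 3, 4, 5, 6, 7]
print(window_token_indices(t=15, window=4))  # [12, 13, 14, 15]
```

Returning the chosen block IDs in ascending order keeps the gathered tokens in contiguous, increasing memory ranges, which mirrors the motivation for blockwise rather than tokenwise selection.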

Core components of NSA:

- Dynamic hierarchical sparse strategy

- Coarse-grained token compression

- Fine-grained token selection

Results and Insights

The research presents a careful evaluation of NSA across various tasks. On benchmarks such as MMLU, GSM8K, and DROP, NSA achieves performance comparable to, or even better than, traditional full attention models. The design also proves effective in long-context scenarios, where maintaining both global awareness and local precision is critical.

One interesting observation is NSA’s high retrieval accuracy in needle-in-a-haystack tasks with sequences as long as 64k tokens. This is largely due to its hierarchical design that blends coarse global scanning with detailed local selection. The results also show that NSA’s decoding speed scales well with increasing sequence length, thanks to its reduced memory access footprint. These insights suggest that NSA’s balanced approach—combining compression, selection, and sliding window processing—offers a practical way to handle long sequences efficiently without sacrificing accuracy.
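Decoding is largely memory-bound, so the number of key-value entries read per generated token is a reasonable proxy for decoding latency. The sketch below counts those entries for full attention and gives an upper bound for NSA's three branches (an upper bound because selected blocks and the sliding window may overlap). The hyperparameter values are illustrative assumptions of ours, not quoted from the paper, and the function names are hypothetical.

```python
def full_attention_tokens(seq_len):
    """Full attention reads every cached key/value at each decoding step."""
    return seq_len


def nsa_tokens_upper_bound(seq_len, cmp_block, cmp_stride, sel_block, sel_count, window):
    """Upper bound on key/value entries read per decoding step across NSA's three branches.

    It is an upper bound because selected blocks and the sliding window may overlap.
    """
    compressed = (seq_len - cmp_block) // cmp_stride + 1 if seq_len >= cmp_block else 0
    selected = min(sel_count * sel_block, seq_len)
    local = min(window, seq_len)
    return compressed + selected + local


# Illustrative hyperparameters (our assumption, not quoted from the paper).
cfg = dict(cmp_block=32, cmp_stride=16, sel_block=64, sel_count=16, window=512)
for n in (8_192, 65_536):
    nsa = nsa_tokens_upper_bound(n, **cfg)
    print(n, full_attention_tokens(n), nsa, round(full_attention_tokens(n) / nsa, 1))
# 8192 8192 2047 4.0
# 65536 65536 5631 11.6
```

Under these assumptions only the compressed branch grows with context length (at roughly 1/16 of the full rate), while the selected and window branches stay fixed, so the gap relative to full attention widens as sequences get longer.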

Conclusion

NSA marks a thoughtful step forward in the design of sparse attention mechanisms. By integrating trainability with hardware-aligned optimizations, NSA addresses the dual challenges of computational efficiency and effective long-context modeling. Its three-tiered approach, which includes token compression, selective attention, and sliding window processing, reduces computational overhead while preserving important context.

The post DeepSeek AI Introduces NSA: A Hardware-Aligned and Natively Trainable Sparse Attention Mechanism for Ultra-Fast Long-Context Training and Inference appeared first on MarkTechPost.
