Since the advent of LLMs, AI research has focused heavily on building ever more powerful models. These models improve the user experience across reasoning, content generation, and other tasks. However, trust in their results and in the reasoning behind them has recently come under scrutiny. Because a model’s output depends on its underlying dataset, the quality of the training data, its compliance, and the associated legal risks have become key concerns in model development.

LG AI Research, a pioneer in the AI field with previous successful launches of the EXAONE Models, has developed an Agent AI to address the above concerns. The Agent AI tracks the life cycle of training datasets to be used in AI models, comprehensively analyzing legal risks and assessing potential threats related to a dataset. LG AI Research has also introduced NEXUS, where users can directly explore results generated by this Agent AI system.

LG AI Research focuses on the training data underlying AI models. This matters because AI is rapidly expanding into various sectors, and the biggest concern is whether it advances legally, safely, and ethically. Through this research, LG AI Research found that AI training datasets are redistributed many times, and a single dataset is sometimes linked to hundreds of others, making it impossible for a person to track its sources. This lack of transparency can give rise to serious legal and compliance risks.

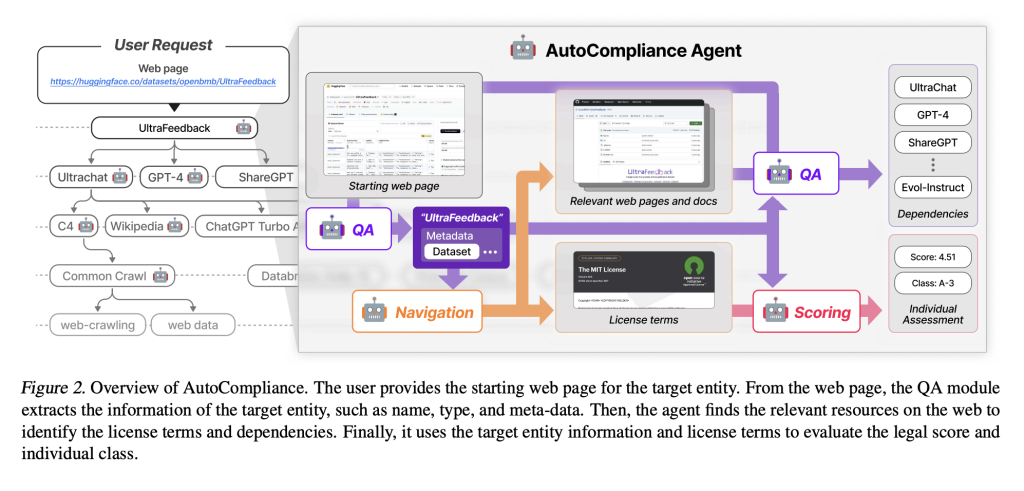

With the Agent AI embedded in NEXUS, LG AI Research tracks the life cycle of complex datasets to ensure data compliance. The Agent AI automatically finds and analyzes the complex layers of relationships between datasets. The team built the system using a comprehensive data compliance framework and their EXAONE 3.5 model. It comprises three core modules, each fine-tuned differently:

- The Navigation Module: This module is extensively trained to navigate web documents and analyze AI-generated text data. It performs navigation based on the name and type of the entity to find links to web pages or license documents related to the entity.

- The QA Module: This module is trained to take the collected documents as input and extract dependency and license information from them.

- The Scoring Module: This module is trained on a refined dataset labeled by lawyers. It analyzes license details alongside an entity’s metadata to evaluate and quantify potential legal risks.
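
The three-module flow above can be sketched as a simple pipeline. This is a minimal illustration, not LG AI Research's implementation: the names `Entity`, `Finding`, `navigate`, `extract`, `score`, and `analyze` are hypothetical, the `web` dictionary stands in for real web navigation, and plain string matching stands in for the fine-tuned EXAONE 3.5 modules.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class Entity:
    name: str
    kind: str  # e.g. "dataset" or "model"


@dataclass
class Finding:
    entity: Entity
    documents: List[str] = field(default_factory=list)
    dependencies: List[str] = field(default_factory=list)
    license_name: Optional[str] = None
    risk_note: str = "not scored"


# Hypothetical stand-in for the web: (name, kind) -> documents found for that entity.
Web = Dict[Tuple[str, str], List[str]]


def navigate(entity: Entity, web: Web) -> List[str]:
    """Navigation step: find documents using the entity's name and type."""
    return web.get((entity.name, entity.kind), [])


def extract(documents: List[str]) -> Tuple[List[str], Optional[str]]:
    """QA step: pull dependency and license information out of the documents."""
    dependencies: List[str] = []
    license_name: Optional[str] = None
    for doc in documents:
        for line in doc.splitlines():
            key, _, value = line.partition(":")
            key = key.strip().lower()
            if key == "license":
                license_name = value.strip()
            elif key == "derived from":
                dependencies.append(value.strip())
    return dependencies, license_name


def score(license_name: Optional[str], dependencies: List[str]) -> str:
    """Scoring step: a toy rule in place of the lawyer-labeled scoring model."""
    if license_name is None:
        return "license unclear, needs review"
    if dependencies:
        return "license found, dependencies need their own check"
    return "license found, no dependencies detected"


def analyze(entity: Entity, web: Web) -> Finding:
    """Run the three steps in order and collect the results."""
    documents = navigate(entity, web)
    dependencies, license_name = extract(documents)
    return Finding(entity, documents, dependencies, license_name,
                   score(license_name, dependencies))


if __name__ == "__main__":
    # Made-up example data; no real dataset is described here.
    web = {("toy-dataset", "dataset"): ["License: CC-BY-4.0\nDerived from: base-corpus"]}
    finding = analyze(Entity("toy-dataset", "dataset"), web)
    print(finding.license_name, finding.dependencies, finding.risk_note)
```

Keeping the three steps as separate functions mirrors the article's description of separately fine-tuned modules: each step could be swapped for a model call without changing the others.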

As a result, the Agent AI works 45 times faster than a human expert and is 700 times cheaper.

Other notable results: in an evaluation of 216 randomly chosen datasets from Hugging Face’s top 1,000+ downloads, the Agent AI detected dependencies with 81.04% accuracy and identified license documents with 95.83% accuracy.

In this Agent AI, the legal risk assessment for datasets is based on the data compliance framework developed by LG AI Research. The framework uses 18 key factors, including license grants, data modification rights, derivative works permissions, potential copyright infringement in outputs, and privacy considerations. Each factor is weighted according to real-world disputes and case law to make the risk assessments practical and reliable. The compliance results are then classified into a seven-level risk rating system. A-1 is the highest rating and requires explicit commercial use permission or public domain status, plus consistent rights for all sub-datasets. A-2 to B-2 allow limited use, often free for research but restricted commercially. C-1 and C-2 carry higher risk due to unclear licenses, rights issues, or privacy concerns.
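
To make the idea of weighted factors concrete, here is a minimal sketch of a weighted score mapped to a coarse band. Everything numeric in it is an assumption for illustration: the article does not publish the 18 factors in full, their weights, or the cut-offs between ratings, so the weights, the 0-to-1 score scale, the thresholds, and the three bands "A", "B", and "C" below are hypothetical and do not reproduce the seven-level system.

```python
from typing import Dict

# Hypothetical weights for the five factors the article names (they sum to 1.0).
WEIGHTS: Dict[str, float] = {
    "license_grant": 0.30,
    "data_modification_rights": 0.15,
    "derivative_works_permission": 0.20,
    "output_copyright_risk": 0.20,
    "privacy": 0.15,
}


def weighted_score(factor_scores: Dict[str, float]) -> float:
    """Weighted sum of per-factor scores in [0, 1]; 1.0 means no concern found."""
    missing = set(WEIGHTS) - set(factor_scores)
    if missing:
        raise ValueError(f"missing factor scores: {sorted(missing)}")
    return sum(WEIGHTS[name] * factor_scores[name] for name in WEIGHTS)


def band(score: float) -> str:
    """Map a score to a coarse band using made-up thresholds."""
    if score >= 0.8:
        return "A"
    if score >= 0.5:
        return "B"
    return "C"


if __name__ == "__main__":
    # Made-up scores: a clear license grant, partial answers everywhere else.
    example = {name: 0.5 for name in WEIGHTS}
    example["license_grant"] = 1.0
    print(band(weighted_score(example)))
```

A weighted sum is only one way to combine factors. A real framework might instead let a single severe issue, such as a privacy violation, cap the rating regardless of the other scores.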

The research on NEXUS sets a new standard for the legal stability of AI training datasets, though LG AI Research sees a long road ahead. An in-depth analysis of 3,612 major datasets through NEXUS found that inconsistencies in rights relationships between datasets and their dependencies are far more common than expected. Many of the affected datasets are used to train major AI models in widespread use. For example, of the 2,852 AI training datasets determined to be commercially available, only 605 (21.21%) remained commercially available after accounting for dependency risks.
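
The drop from 2,852 to 605 datasets reflects a simple rule: a dataset is only as usable as the sources it depends on. The sketch below illustrates that rule with a graph walk and also checks the reported percentage. It assumes a hypothetical representation (a dictionary of each dataset's own status and a dictionary of its direct dependencies), treats any dataset with unknown status as not commercially usable, and is not the method NEXUS uses.

```python
from typing import Dict, List


def effective_commercial_use(
    name: str,
    own_ok: Dict[str, bool],
    deps: Dict[str, List[str]],
) -> bool:
    """True only if the dataset and all of its transitive dependencies allow commercial use."""
    stack, seen = [name], set()
    while stack:
        current = stack.pop()
        if current in seen:
            continue  # already checked; also guards against dependency cycles
        seen.add(current)
        if not own_ok.get(current, False):  # unknown status counts as not usable
            return False
        stack.extend(deps.get(current, []))
    return True


def share_percent(part: int, total: int) -> float:
    """Percentage of `part` in `total`, rounded to two decimals."""
    return round(part / total * 100, 2)


if __name__ == "__main__":
    # Made-up graph: "a" looks fine on its own but inherits a restriction from "c".
    own_ok = {"a": True, "b": True, "c": False, "d": True}
    deps = {"a": ["b"], "b": ["c"]}
    print(effective_commercial_use("a", own_ok, deps))  # False
    print(effective_commercial_use("d", own_ok, deps))  # True
    print(share_percent(605, 2852))  # 21.21
```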

Recognizing these real-world issues, LG AI Research has set several goals for keeping pace with evolving AI technology and the legal environment. The immediate goal is to expand the scope and depth of the datasets that the Agent AI analyzes, aiming to understand the life cycle of all the data worldwide while maintaining the quality of assessments and results throughout this expansion. A second goal is to evolve the data compliance framework into a global standard, in collaboration with the worldwide AI community and legal experts. Finally, in the long term, LG AI Research plans to evolve NEXUS into a comprehensive legal risk management system for AI developers, contributing to a safe, legal, data-compliant, and responsible AI ecosystem.

Thanks to the LG AI Research team for the thought leadership and resources for this article. The LG AI Research team has supported us in this content.

The post LG AI Research Releases NEXUS: An Advanced System Integrating Agent AI System and Data Compliance Standards to Address Legal Concerns in AI Datasets appeared first on MarkTechPost.
