Large Language Models (LLMs) have advanced significantly in natural language processing, yet reasoning remains a persistent challenge. While tasks such as mathematical problem-solving and code generation benefit from structured training data, broader reasoning tasks—like logical deduction, scientific inference, and symbolic reasoning—suffer from sparse and fragmented data. Traditional approaches, such as continual pretraining on code, often embed reasoning signals implicitly, making it difficult for models to generalize. Even text-to-code generation methods remain constrained by syntax-specific learning, limiting their applicability beyond programming-related tasks. A more structured approach is needed to expose LLMs to fundamental reasoning patterns while preserving logical rigor.

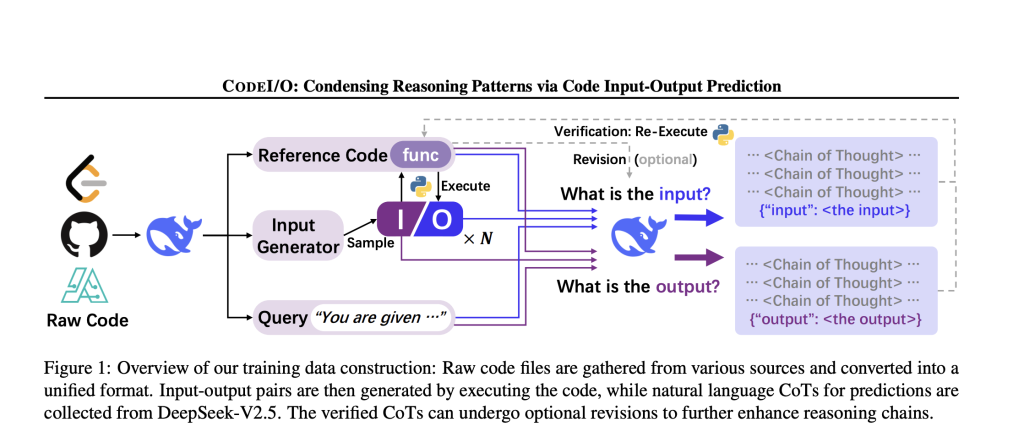

DeepSeek AI Research presents CODEI/O, an approach that converts code-based reasoning into natural language. By transforming raw code into an input-output prediction format and expressing reasoning steps through Chain-of-Thought (CoT) rationales, CODEI/O allows LLMs to internalize core reasoning processes such as logic flow planning, decision tree traversal, and modular decomposition. Unlike conventional methods, CODEI/O separates reasoning from code syntax, enabling broader applicability while maintaining logical structure.

Technical Overview and Benefits

CODEI/O follows a structured data processing pipeline:

- Collecting Raw Code Files: Over 450K functions were gathered from multiple sources, including algorithm repositories and educational programming datasets.

- Standardizing the Data: The collected code was refined using DeepSeek-V2.5, ensuring clarity and execution compatibility.

- Generating Input-Output Pairs: Functions were executed with varying inputs to create structured training examples across diverse reasoning tasks.

- Generating Chain-of-Thought Reasoning: Using models like DeepSeek-V2.5, natural language explanations were generated to provide structured reasoning.

- Verification and Refinement: Predictions were validated through execution, with incorrect responses revised iteratively to improve reasoning accuracy.
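The input-output pair step above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' pipeline: `binary_search`, `sample_input`, `make_io_pairs`, and `to_prompts` are hypothetical names, and the prompt wording is invented for the example. It shows a reference function being executed on sampled inputs, with each recorded pair turned into an output-prediction prompt and an input-prediction prompt.

```python
import json
import random


def binary_search(sorted_values, target):
    """Hypothetical reference function standing in for one collected code sample."""
    lo, hi = 0, len(sorted_values) - 1
    while lo <= hi:
        mid = (lo + hi) // 2
        if sorted_values[mid] == target:
            return mid
        if sorted_values[mid] < target:
            lo = mid + 1
        else:
            hi = mid - 1
    return -1


def sample_input(rng):
    """Hypothetical input generator: a sorted list of unique ints plus a target."""
    values = sorted(rng.sample(range(100), k=rng.randint(1, 8)))
    target = rng.choice(values + [rng.randrange(100)])
    return {"sorted_values": values, "target": target}


def make_io_pairs(func, generator, n, seed=0):
    """Execute func on n sampled inputs and record each (input, output) pair."""
    rng = random.Random(seed)
    pairs = []
    for _ in range(n):
        kwargs = generator(rng)
        pairs.append({"input": kwargs, "output": func(**kwargs)})
    return pairs


def to_prompts(pair, description):
    """Turn one pair into an output-prediction and an input-prediction prompt."""
    given_in = json.dumps(pair["input"])
    given_out = json.dumps(pair["output"])
    return {
        "predict_output": f"{description}\nGiven input: {given_in}\nPredict the output.",
        "predict_input": f"{description}\nGiven output: {given_out}\nPredict a feasible input.",
    }


pairs = make_io_pairs(binary_search, sample_input, n=3)
prompts = to_prompts(pairs[0], "Find the index of target in sorted_values, or -1.")
print(prompts["predict_output"])
```

Because the recorded outputs come from actually running the function, every pair carries a ground truth that a later verification step can check against.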

Key Features of CODEI/O:

- Transformative Learning: Converts diverse code patterns into natural language CoT rationales, making reasoning transferable beyond programming contexts.

- Syntax-Decoupled Learning: Separates logical reasoning from code syntax, improving adaptability across reasoning tasks.

- Multi-Task Improvement: Enhances performance across symbolic, scientific, logical, mathematical, and commonsense reasoning domains.

- Verifiability: Predictions can be validated through cached ground-truth matching or re-execution.

- Iterative Refinement: A refined version, CODEI/O++, employs multi-turn revision to enhance reasoning accuracy.
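The two verification routes mentioned above (cached ground-truth matching and re-execution) might look roughly like the following. This is a hedged sketch rather than the project's code: the function names, the `count_vowels` reference function, and the feedback wording are all assumptions made for illustration. Output predictions are compared with the stored result, while input predictions are re-executed, since several different inputs can produce the same output.

```python
def verify_output_prediction(predicted_output, cached_output):
    """Output prediction: compare against the ground truth cached at generation time."""
    return predicted_output == cached_output


def verify_input_prediction(func, predicted_input, target_output):
    """Input prediction: re-execute, because many inputs can map to the same output."""
    try:
        return func(**predicted_input) == target_output
    except Exception:
        # An input the function cannot run on is treated as incorrect.
        return False


def revision_feedback(is_correct):
    """Hypothetical feedback turn appended before a second attempt is requested."""
    if is_correct:
        return "Feedback: the prediction is correct."
    return "Feedback: the prediction is incorrect. Please revise your reasoning and answer again."


def count_vowels(text):
    """Hypothetical reference function used only for this example."""
    return sum(ch in "aeiou" for ch in text.lower())


cached = count_vowels("Reasoning")  # ground truth stored when the pair was created
first_try = verify_output_prediction(3, cached)
print(revision_feedback(first_try))
print(verify_input_prediction(count_vowels, {"text": "audio"}, cached))
```

In a multi-turn setup such as the one described for CODEI/O++, the feedback string would be appended to the conversation and the model asked to answer again; how many turns to allow is a design choice this sketch leaves open.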

Empirical Results and Performance

The impact of CODEI/O was tested across four base models (ranging from 7B to 30B parameters) on 14 reasoning benchmarks covering logic, symbolic inference, mathematics, scientific deduction, and commonsense reasoning.

Findings:

- Consistent Improvements: CODEI/O training led to higher scores across reasoning benchmarks compared to traditional pretraining methods.

- Generalization Across Tasks: Unlike existing approaches that improve specific tasks but degrade performance elsewhere, CODEI/O showed balanced enhancements.

- Comparison to Baselines: Models trained on CODEI/O outperformed those trained on datasets such as OpenMathInstruct2, OpenCoder-SFT-Stage1, and WebInstruct.

- Effectiveness of Multi-Turn Refinement: CODEI/O++ further improved results by iteratively refining incorrect responses, leveraging execution feedback for better reasoning quality.

For instance, in logical and symbolic reasoning benchmarks such as BBH and CruxEval, CODEI/O led to notable performance gains. In math reasoning tasks (GSM8K, MATH, and MMLU-STEM), it demonstrated improvements over existing baselines. Even in commonsense reasoning, where code-based methods typically struggle, CODEI/O maintained robust results.

Conclusion

CODEI/O presents a structured way to enhance LLMs’ reasoning by leveraging input-output transformations from real-world code. Instead of focusing on isolated reasoning tasks, it extracts general reasoning patterns and translates them into natural language explanations. This structured learning approach is intended to help models acquire reasoning skills that carry over across different domains.

The introduction of multi-turn revision (CODEI/O++) further refines reasoning accuracy, demonstrating that iterative learning from execution feedback enhances model reliability. By making predictions verifiable, CODEI/O provides a scalable and reliable method for improving LLM reasoning.

By bridging code-based and natural language reasoning, CODEI/O offers a promising direction for enhancing LLMs’ cognitive abilities beyond programming-related tasks.

Check out the Paper and GitHub Page. All credit for this research goes to the researchers of this project.

The post DeepSeek AI Introduces CODEI/O: A Novel Approach that Transforms Code-based Reasoning Patterns into Natural Language Formats to Enhance LLMs’ Reasoning Capabilities appeared first on MarkTechPost.
