Diffusion models generate images by progressively refining noise into structured representations. However, the computational cost associated with these models remains a key challenge, particularly when operating directly on high-dimensional pixel data. Researchers have been investigating ways to optimize latent space representations to improve efficiency without compromising image quality.

A critical problem in diffusion models is the quality and structure of the latent space. Traditional approaches use Variational Autoencoders (VAEs) as tokenizers that regularize the latent space so that the learned representations are smooth and structured. However, VAEs often struggle to achieve high pixel-level fidelity because of the constraints that this regularization imposes. Plain Autoencoders (AEs), which do not employ variational constraints, can reconstruct images with higher fidelity but often produce an entangled latent space that hinders the training and performance of diffusion models. Addressing these challenges requires a tokenizer that provides a structured latent space while maintaining high reconstruction accuracy.

Previous research efforts have attempted to tackle these issues using various techniques. VAEs impose a Kullback-Leibler (KL) constraint to encourage smooth latent distributions, whereas representation-aligned VAEs refine latent structures for better generation quality. Some methods utilize Gaussian Mixture Models (GMM) to structure latent space or align latent representations with pre-trained models to enhance performance. Despite these advancements, existing approaches still encounter computational overhead and scalability limitations, necessitating more effective tokenization strategies.

A research team from Carnegie Mellon University, The University of Hong Kong, Peking University, and AMD introduced a novel tokenizer, Masked Autoencoder Tokenizer (MAETok), to address these challenges. MAETok employs masked modeling within an autoencoder framework to develop a more structured latent space while ensuring high reconstruction fidelity. The researchers designed MAETok to leverage the principles of Masked Autoencoders (MAE), optimizing the balance between generation quality and computational efficiency.

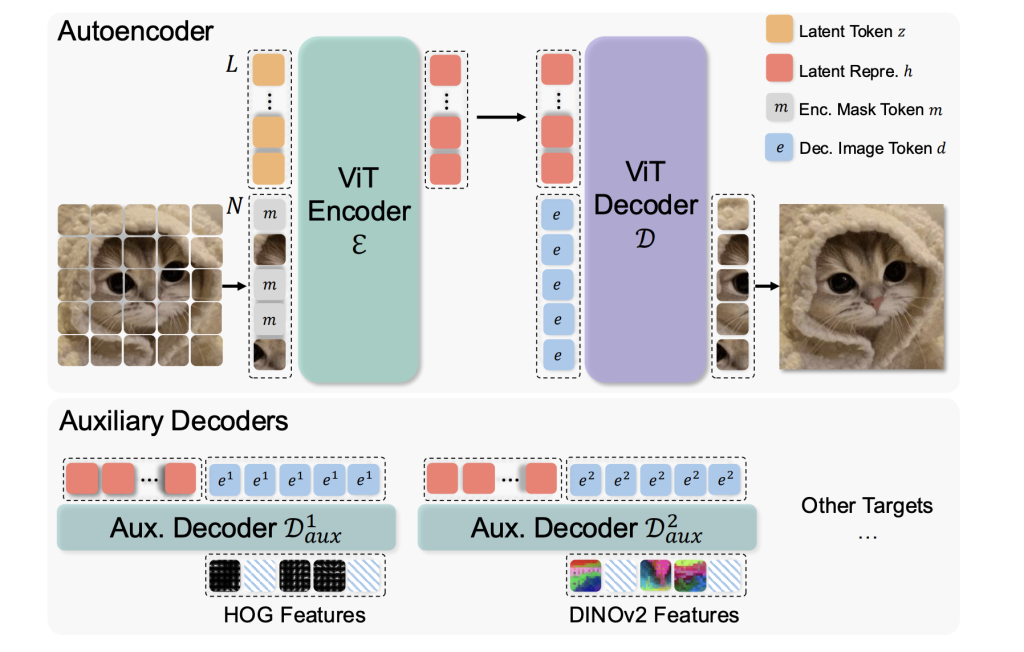

The methodology behind MAETok involves training an autoencoder with a Vision Transformer (ViT)-based architecture, incorporating both an encoder and a decoder. The encoder receives an input image divided into patches and processes them along with a set of learnable latent tokens. During training, a portion of the input tokens is randomly masked, forcing the model to infer the missing data from the remaining visible regions. This mechanism enhances the ability of the model to learn discriminative and semantically rich representations. Additionally, auxiliary shallow decoders predict the masked features, further refining the quality of the latent space. Unlike traditional VAEs, MAETok eliminates the need for variational constraints, simplifying training while improving efficiency.
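The masking step described above can be sketched in a few lines of plain Python. This is a minimal illustration, not the authors' implementation: the function names, the `MASK` placeholder, the 50% mask ratio, and the choice to replace masked patches with a placeholder (rather than drop them) are all assumptions made for the example.

```python
import random

# Hypothetical stand-in for a learnable mask embedding (assumption).
MASK = "<mask>"


def random_mask(num_patches, mask_ratio, rng):
    """Pick a random subset of patch indices to hide from the encoder."""
    if not 0.0 <= mask_ratio <= 1.0:
        raise ValueError("mask_ratio must be in [0, 1]")
    num_masked = int(num_patches * mask_ratio)
    return set(rng.sample(range(num_patches), num_masked))


def build_encoder_input(patches, masked, latent_tokens):
    """Replace masked patches with MASK, then append the latent tokens."""
    patch_tokens = [MASK if i in masked else p for i, p in enumerate(patches)]
    return patch_tokens + list(latent_tokens)


rng = random.Random(0)                           # fixed seed for repeatability
patches = [f"patch_{i}" for i in range(16)]      # e.g. a 4x4 grid of patches
latents = [f"latent_{j}" for j in range(4)]      # learnable latent tokens
masked = random_mask(len(patches), 0.5, rng)     # illustrative ratio only
tokens = build_encoder_input(patches, masked, latents)
```

In a real model each string would be an embedding vector, and the decoder would be trained to recover the hidden patches (and auxiliary features) from the latent tokens alone.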

The researchers evaluated MAETok on ImageNet generation benchmarks, where it reached state-of-the-art performance while significantly reducing computational requirements. Using only 128 latent tokens, it achieved a generative Fréchet Inception Distance (gFID) of 1.69 on 512×512 images. The authors also report 76× faster training and 31× higher inference throughput than conventional methods. Their analysis showed that a latent space with fewer Gaussian mixture modes produced lower diffusion loss, leading to improved generative performance. The diffusion model trained on MAETok latents was SiT-XL with 675M parameters, and it outperformed previous state-of-the-art models, including those trained with VAEs.
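For readers unfamiliar with the metric, the Fréchet Inception Distance is conventionally computed as the Fréchet distance between two Gaussians fitted to Inception-network features of real and generated images. The symbols below are the standard ones and are not taken from the article: $\mu_r, \Sigma_r$ are the mean and covariance of the real-image features, and $\mu_g, \Sigma_g$ those of the generated images.

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \mathrm{Tr}\left(\Sigma_r + \Sigma_g - 2\,(\Sigma_r \Sigma_g)^{1/2}\right)
$$

Lower values indicate that the generated feature distribution is closer to the real one.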

This research highlights the importance of structuring the latent space effectively in diffusion models. By integrating masked modeling, the researchers struck a better balance between reconstruction fidelity and representation quality, demonstrating that the structure of the latent space is a crucial factor in generative performance. The findings provide a foundation for further advances in diffusion-based image synthesis, offering an approach that improves scalability and efficiency without sacrificing output quality.

Check out the Paper and GitHub Page. All credit for this research goes to the researchers of this project.

The post This AI Paper Introduces MAETok: A Masked Autoencoder-Based Tokenizer for Efficient Diffusion Models appeared first on MarkTechPost.
