Large Language Models (LLMs) have demonstrated notable reasoning capabilities in mathematical problem-solving, logical inference, and programming. However, their effectiveness is often contingent on two approaches: supervised fine-tuning (SFT) on reasoning chains that are human-annotated or distilled from a stronger teacher model, and inference-time search strategies guided by external verifiers. SFT offers structured reasoning, but it requires significant annotation effort and, when the chains are distilled, is constrained by the quality of the teacher model. Inference-time search techniques, such as verifier-guided sampling, improve accuracy but increase computational cost. This raises an important question: can an LLM develop reasoning capabilities independently, without relying on extensive human supervision or external verifiers? To address this, researchers have introduced Satori, a 7B-parameter LLM designed to internalize reasoning search and self-improvement mechanisms.

Introducing Satori: A Model for Self-Reflective and Self-Exploratory Reasoning

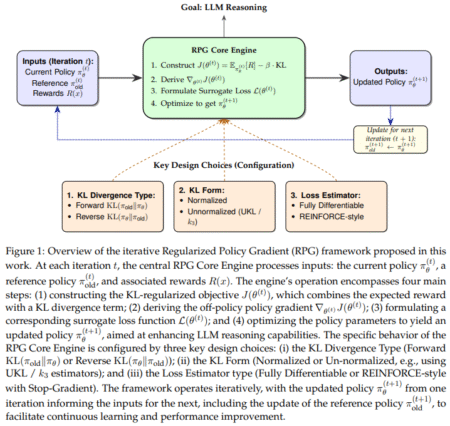

Researchers from MIT, Singapore University of Technology and Design, Harvard, MIT-IBM Watson AI Lab, IBM Research, and UMass Amherst propose Satori, a model that employs autoregressive search, a mechanism that lets it refine its reasoning steps and explore alternative strategies autonomously. Rather than relying on extensive fine-tuning or knowledge distillation, Satori enhances reasoning through a novel Chain-of-Action-Thought (COAT) reasoning paradigm. Built upon Qwen-2.5-Math-7B, Satori follows a two-stage training framework: small-scale format tuning (FT) followed by large-scale self-improvement via reinforcement learning (RL).

Technical Details and Benefits of Satori

Satori’s training framework consists of two stages:

- Format Tuning (FT) Stage:

  - A small-scale dataset (~10K samples) is used to introduce COAT reasoning, which includes three meta-actions:

    - Continue (<|continue|>): Extends the reasoning trajectory.

    - Reflect (<|reflect|>): Prompts a self-check on previous reasoning steps.

    - Explore (<|explore|>): Encourages the model to consider alternative approaches.

  - Unlike conventional CoT training, which follows predefined reasoning paths, COAT enables dynamic decision-making during reasoning.

- Reinforcement Learning (RL) Stage:

  - A large-scale self-improvement process using Reinforcement Learning with Restart and Explore (RAE).

  - The model restarts reasoning from intermediate steps, refining its problem-solving approach iteratively.

  - A reward model assigns scores based on self-corrections and exploration depth, leading to progressive learning.
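To make the two stages more concrete, here is a minimal Python sketch of how a COAT-style trajectory could be split into meta-action steps, and how intermediate prefixes of that trajectory could serve as restart points in the spirit of RAE. This is an illustration under stated assumptions, not the authors' implementation: the names `Step`, `parse_coat`, `restart_prefixes`, and `sample_restart` are hypothetical, the serialization format is invented for the example, and no model call or reward computation is included.

```python
from __future__ import annotations

import random
import re
from dataclasses import dataclass

# The three meta-action tokens named in the article.
META_ACTIONS = ("<|continue|>", "<|reflect|>", "<|explore|>")
_TOKEN_RE = re.compile("(" + "|".join(re.escape(t) for t in META_ACTIONS) + ")")


@dataclass
class Step:
    action: str  # one of META_ACTIONS
    text: str    # reasoning text that follows the token


def parse_coat(trajectory: str) -> list[Step]:
    """Split a COAT-style trajectory into (meta-action, text) steps."""
    # With a capturing group, re.split returns [head, tok1, text1, tok2, text2, ...].
    parts = _TOKEN_RE.split(trajectory)
    steps: list[Step] = []
    head = parts[0].strip()
    if head:
        # Assumption: text before the first token is an implicit "continue".
        steps.append(Step("<|continue|>", head))
    for action, text in zip(parts[1::2], parts[2::2]):
        steps.append(Step(action, text.strip()))
    return steps


def restart_prefixes(steps: list[Step]) -> list[str]:
    """Serialize every proper, non-empty prefix of the trajectory.

    Each prefix is a candidate intermediate state to restart from.
    The "token text newline" layout is an assumption of this sketch.
    """
    return [
        "".join(f"{s.action} {s.text}\n" for s in steps[:k])
        for k in range(1, len(steps))
    ]


def sample_restart(problem: str, steps: list[Step], rng: random.Random) -> str:
    """Pick either the bare problem or an intermediate prefix as the next prompt."""
    candidates = [""] + restart_prefixes(steps)
    return f"{problem}\n{rng.choice(candidates)}"


if __name__ == "__main__":
    traj = (
        "<|continue|> Let x = 2."
        "<|reflect|> Check: 2 + 2 = 4."
        "<|explore|> Try substitution instead."
    )
    steps = parse_coat(traj)
    for step in steps:
        print(step.action, "->", step.text)
    print(repr(sample_restart("Solve x + 2 = 4.", steps, random.Random(0))))
```

In an actual training loop, the sampled prompt would be handed back to the policy model to continue generating, and the completed trajectory would then be scored. Those parts are deliberately left out because the article does not specify them in enough detail to reproduce.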

Insights

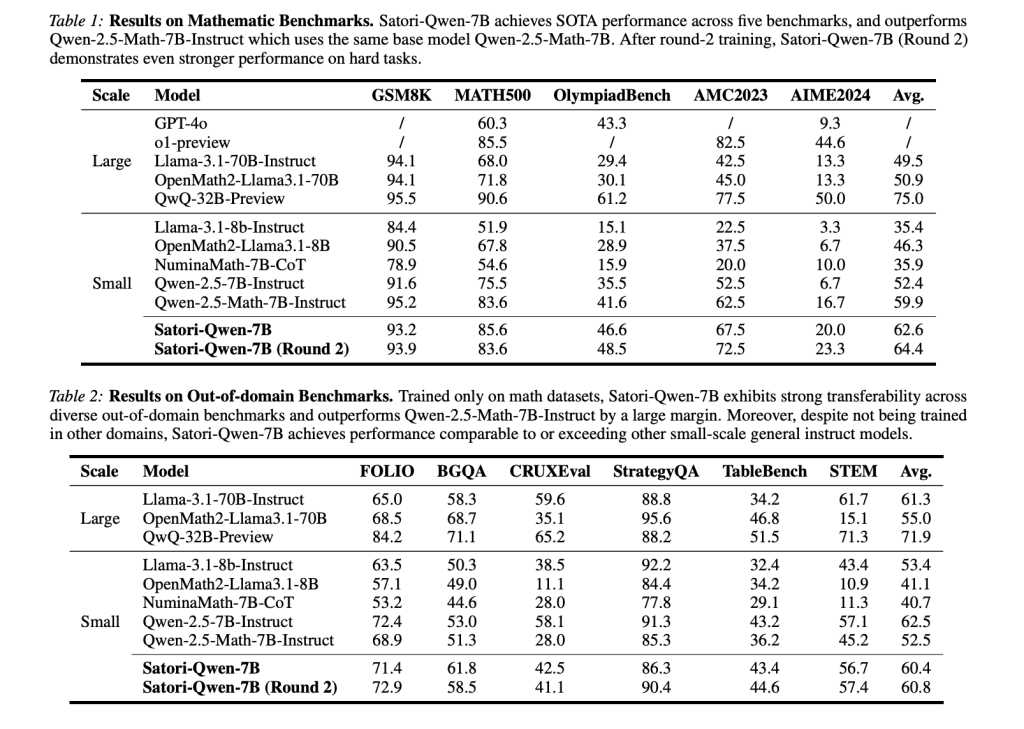

Evaluations show that Satori performs strongly on multiple benchmarks, often surpassing models that rely on supervised fine-tuning or knowledge distillation. Key findings include:

- Mathematical Benchmark Performance:

  - Satori outperforms Qwen-2.5-Math-7B-Instruct on datasets such as GSM8K, MATH500, OlympiadBench, AMC2023, and AIME2024.

  - Self-improvement capability: With additional reinforcement learning rounds, Satori demonstrates continuous refinement without additional human intervention.

- Out-of-Domain Generalization:

  - Despite training primarily on mathematical reasoning, Satori exhibits strong generalization to diverse reasoning tasks, including logical reasoning (FOLIO, BoardgameQA), commonsense reasoning (StrategyQA), and tabular reasoning (TableBench).

  - This suggests that RL-driven self-improvement enhances adaptability beyond mathematical contexts.

- Efficiency Gains:

  - Compared to conventional supervised fine-tuning, Satori achieves similar or better reasoning performance with significantly fewer annotated training samples (10K vs. 300K for comparable models).

  - This approach reduces reliance on extensive human annotations while maintaining effective reasoning capabilities.

Conclusion: A Step Toward Autonomous Learning in LLMs

Satori presents a promising direction in LLM reasoning research, demonstrating that models can refine their own reasoning without external verifiers or high-quality teacher models. By integrating COAT reasoning, reinforcement learning, and autoregressive search, Satori shows that LLMs can iteratively improve their reasoning abilities. This approach not only enhances problem-solving accuracy but also broadens generalization to unseen tasks. Future work may explore refining meta-action frameworks, optimizing reinforcement learning strategies, and extending these principles to broader domains.

Check out the Paper and GitHub Page. All credit for this research goes to the researchers of this project.

The post Meet Satori: A New AI Framework for Advancing LLM Reasoning through Deep Thinking without a Strong Teacher Model appeared first on MarkTechPost.
