The rapid development of wireless communication technologies has expanded the use of automatic modulation recognition (AMR) in areas such as cognitive radio and electronic countermeasures. With their diverse modulation types and signal variations, modern communication systems pose significant obstacles to maintaining AMR performance in dynamic environments.

Deep learning-based AMR algorithms have emerged as the leading approach to wireless signal recognition thanks to their superior performance and automatic feature extraction. Unlike earlier techniques, deep learning models excel at handling complex signal inputs while maintaining high recognition accuracy. However, these models are vulnerable to adversarial attacks, in which small perturbations to the input signal can cause misclassification. Defenses such as detection-based methods and adversarial training have been investigated to improve the resilience of deep learning models to such attacks and make them more dependable in practical applications.
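To make the idea of a small but damaging perturbation concrete, here is a minimal sketch of the Fast Gradient Sign Method (FGSM), one of the attacks used later in the paper's experiments. It uses a toy logistic classifier rather than an AMR network, and the weights, input, and `eps` value are hypothetical values chosen only for illustration.

```python
import math

def predict(w, b, x):
    """Probability of class 1 under a toy logistic model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def sign(g):
    """Return -1, 0, or 1 depending on the sign of g."""
    return (g > 0) - (g < 0)

def fgsm(w, b, x, y, eps):
    """One FGSM step: x + eps * sign(dLoss/dx) for binary cross-entropy."""
    p = predict(w, b, x)
    # For logistic regression with BCE loss, dLoss/dx_i = (p - y) * w_i.
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]

# Hypothetical weights and input, not taken from the paper.
w, b = [2.0, -1.5, 0.5, 1.0], 0.0
x, y = [0.1, -0.1, 0.05, 0.05], 1   # clean sample with true label 1
x_adv = fgsm(w, b, x, y, eps=0.15)

print(predict(w, b, x) > 0.5)       # clean prediction is class 1
print(predict(w, b, x_adv) > 0.5)   # perturbed prediction flips to class 0
```

Each input component moves by at most `eps`, yet the predicted class changes, which is the failure mode that defenses such as adversarial training try to prevent.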

Adversarial training, while effective, increases computational cost, risks reduced performance on clean data, and may lead to overfitting in complex models such as Transformers. Balancing robustness, accuracy, and efficiency remains a key challenge for ensuring reliable AMR systems in adversarial scenarios.

In this context, a Chinese research team recently published a paper introducing a novel method called Attention-Guided Automatic Modulation Recognition (AG-AMR) to address these challenges. This innovative approach incorporates an optimized attention mechanism within the Transformer model, enabling the extraction and refinement of signal features through attention weights during training.

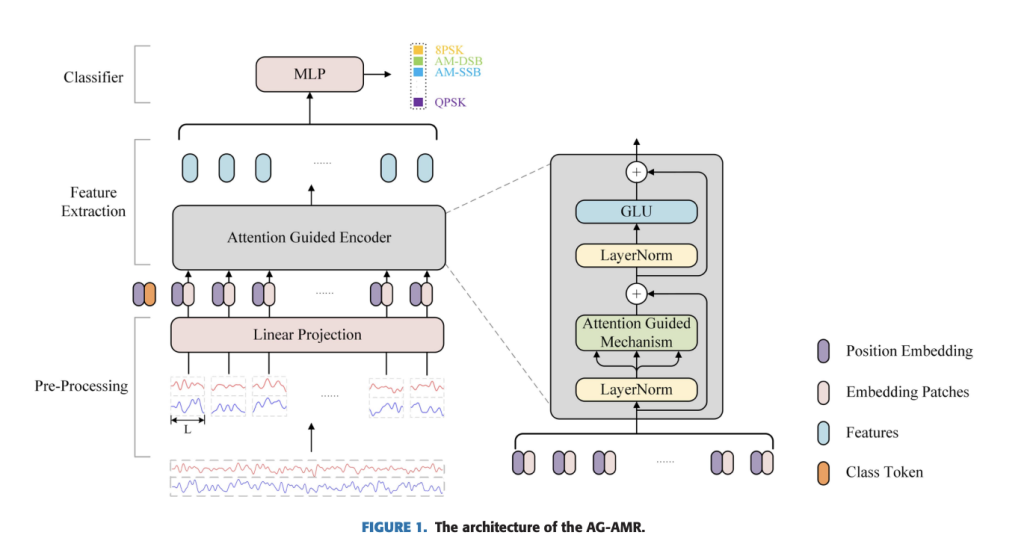

Concretely, the proposed AG-AMR technique tackles modulation recognition by combining an Attention-Guided Encoder (AG-Encoder) with enhanced data preprocessing and feature embedding. The approach converts input signals into two-channel images representing their real and imaginary parts, and it relies on the Transformer’s ability to model long-range dependencies, avoiding the local-feature limitations of CNNs and RNNs. The signals are segmented, normalized, and framed into sequences, with positional embeddings and a class token added to preserve temporal and global information. The AG-Encoder uses a Multi-Head Self-Attention (MSA) mechanism and a Gated Linear Unit (GLU) to enhance feature extraction. The MSA dynamically assigns weights so that the model focuses on essential input regions while ignoring noise; its output is formed by concatenating the per-head results, each an attention-weighted combination of values, and applying a linear transformation. Meanwhile, the GLU, which replaces the traditional feed-forward network, modulates the information flow through gates, improving the processing of temporal tasks. The combined framework efficiently extracts relevant features, reduces computational complexity, and improves robustness to adversarial perturbations by filtering out redundant or irrelevant data while preserving critical signal information.
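The pipeline described above can be sketched in a few dependency-free Python functions. This is an illustrative approximation, not the authors' implementation: the peak normalization, the `[real..., imag...]` frame layout, the zero-valued class token, the sinusoidal positional encoding, and the single-head attention without learned projections are all assumptions made to keep the example short, and every function name is hypothetical.

```python
import math

def iq_to_frames(signal, frame_len):
    """Split complex samples into frames of [real..., imag...] features."""
    # Peak normalization is an assumed choice; the paper's scheme may differ.
    peak = max(abs(s) for s in signal) or 1.0
    norm = [s / peak for s in signal]
    frames = []
    for start in range(0, len(norm) - frame_len + 1, frame_len):
        chunk = norm[start:start + frame_len]
        frames.append([s.real for s in chunk] + [s.imag for s in chunk])
    return frames

def add_class_token_and_positions(frames):
    """Prepend a class token and add sinusoidal positional encodings."""
    dim = len(frames[0])
    tokens = [[0.0] * dim] + frames  # zero vector stands in for a learned token
    out = []
    for pos, tok in enumerate(tokens):
        enc = [math.sin(pos / 10000 ** (i / dim)) if i % 2 == 0
               else math.cos(pos / 10000 ** ((i - 1) / dim))
               for i in range(dim)]
        out.append([t + e for t, e in zip(tok, enc)])
    return out

def self_attention(tokens):
    """Single-head scaled dot-product attention with Q = K = V = tokens."""
    scale = math.sqrt(len(tokens[0]))
    out = []
    for q in tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / scale for k in tokens]
        top = max(scores)                      # subtract max for stability
        exps = [math.exp(s - top) for s in scores]
        weights = [e / sum(exps) for e in exps]
        out.append([sum(wt * v[i] for wt, v in zip(weights, tokens))
                    for i in range(len(q))])
    return out

def glu(x, w_value, w_gate):
    """Gated Linear Unit: (W_value x) * sigmoid(W_gate x), elementwise."""
    def matvec(w, v):
        return [sum(wij * vj for wij, vj in zip(row, v)) for row in w]
    value, gate = matvec(w_value, x), matvec(w_gate, x)
    return [v / (1.0 + math.exp(-g)) for v, g in zip(value, gate)]

# Eight samples of a toy QPSK-like signal (hypothetical data).
signal = [complex(1, 1), complex(-1, 1), complex(-1, -1), complex(1, -1)] * 2
frames = iq_to_frames(signal, frame_len=4)
tokens = add_class_token_and_positions(frames)
attended = self_attention(tokens)
print(len(frames), len(frames[0]), len(tokens))  # 2 frames of 8 features, 3 tokens
print(glu([1.0, -2.0], [[1, 0], [0, 1]], [[0, 0], [0, 0]]))  # [0.5, -1.0]
```

In the last line the gate weights are all zero, so every gate evaluates to sigmoid(0) = 0.5 and the GLU simply halves its input. With learned gate weights, the same mechanism lets the network pass some features through and suppress others.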

The authors' experiments evaluate the proposed AG-AMR method for automatic modulation recognition in detail. The method is benchmarked against several models, including MCLDNN, LSTM, GRU, and PET-CGDNN, on two public datasets: RML2016.10a and RML2018.01a. These datasets cover diverse modulation types, channel conditions, and signal-to-noise ratios, offering a challenging environment for model evaluation. Various adversarial attack techniques, such as FGSM, PGD, C&W, and AutoAttack, are applied to assess robustness against adversarial samples. The impact of key parameters, including frame length and network depth, is also analyzed, revealing that deeper networks with optimized frame lengths enhance recognition accuracy. Performance metrics, including training time, accuracy, and model complexity, are systematically compared across datasets, showcasing AG-AMR’s superior resilience and classification performance under adversarial conditions.
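Of the attacks listed, PGD is essentially an iterated gradient-sign attack with a projection step, and a short sketch shows the mechanics. The example below runs it against a toy logistic classifier rather than an AMR network; the weights, input, and the `eps`, `alpha`, and `steps` values are hypothetical and do not reflect the paper's experimental settings.

```python
import math

def predict(w, b, x):
    """Probability of class 1 under a toy logistic model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def pgd(w, b, x, y, eps, alpha, steps):
    """Projected gradient descent attack within an L-infinity ball of radius eps."""
    x_adv = list(x)
    for _ in range(steps):
        p = predict(w, b, x_adv)
        # Gradient of binary cross-entropy with respect to the input.
        grad = [(p - y) * wi for wi in w]
        # Take a small signed step that increases the loss.
        x_adv = [xa + alpha * ((g > 0) - (g < 0)) for xa, g in zip(x_adv, grad)]
        # Project back so no component drifts more than eps from the original.
        x_adv = [min(max(xa, xi - eps), xi + eps) for xa, xi in zip(x_adv, x)]
    return x_adv

# Hypothetical weights and input, not taken from the paper.
w, b = [2.0, -1.5, 0.5, 1.0], 0.0
x, y = [0.1, -0.1, 0.05, 0.05], 1
x_adv = pgd(w, b, x, y, eps=0.15, alpha=0.05, steps=10)

print(predict(w, b, x) > 0.5)      # clean prediction is class 1
print(predict(w, b, x_adv) > 0.5)  # adversarial prediction flips to class 0
```

Robustness under such an attack is then typically reported as the accuracy a model retains on the perturbed inputs, compared with its accuracy on clean ones.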

To summarize, the AG-AMR technique represents a substantial advance in automatic modulation recognition by incorporating an improved attention mechanism into the Transformer model. It addresses critical difficulties in dynamic wireless communication scenarios, including signal complexity and vulnerability to adversarial attacks. Extensive experiments show that AG-AMR outperforms existing models in resilience, accuracy, and efficiency, making it a promising solution for real-world applications such as cognitive radio and electronic countermeasures.

Check out the Paper. All credit for this research goes to the researchers of this project.


The post Transformer-Based Modulation Recognition: A New Defense Against Adversarial Attacks appeared first on MarkTechPost.
