Three topics currently dominate applied AI: large language models (LLMs), retrieval-augmented generation (RAG), and vector databases. Together they let us build systems tailored to a specific use case. A system that combines a vector database with AI-generated responses has applications across many industries. In customer support, AI chatbots retrieve knowledge base answers dynamically. The legal and financial sectors benefit from AI-driven document summarization and case research. Healthcare AI assistants help doctors with medical research and drug interactions. E-learning platforms provide personalized corporate training. Journalism uses AI for news summarization and fact-checking. Software development leverages AI for coding assistance and debugging. Scientific research benefits from AI-driven literature reviews. In each case, the approach improves knowledge retrieval, automates content creation, and personalizes user interactions.

In this tutorial, we will create an AI-powered English tutor using RAG. The system integrates a vector database (ChromaDB) to store and retrieve relevant English language learning materials and AI-powered text generation (Groq API) to create structured and engaging lessons. The workflow includes extracting text from PDFs, storing knowledge in a vector database, retrieving relevant content, and generating detailed AI-powered lessons. The goal is to build an interactive English tutor that dynamically generates topic-based lessons while leveraging previously stored knowledge for improved accuracy and contextual relevance.

Step 1: Installing the necessary libraries

    !pip install PyPDF2
    !pip install groq
    !pip install chromadb
    !pip install sentence-transformers
    !pip install nltk
    !pip install fpdf
    !pip install torch
    !pip install python-dotenv

PyPDF2 extracts text from PDF files, making it useful for handling document-based information. groq is the client library for Groq's AI API, which provides the text generation capabilities. ChromaDB is a vector database that stores embeddings and retrieves text by similarity. sentence-transformers generates the text embeddings used to store and retrieve information by meaning. nltk (Natural Language Toolkit) is a well-known NLP library for text preprocessing, tokenization, and analysis. fpdf is a lightweight library for creating PDF documents, allowing generated lessons to be saved in a structured format. torch is the deep learning framework that sentence-transformers runs on. python-dotenv provides the load_dotenv function imported in Step 4.
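The workflow mentions extracting text from PDFs, but the steps in this tutorial do not show that part. The following is a minimal sketch of how it might look with PyPDF2's PdfReader. The function names extract_pdf_text and join_page_texts and the file name in the usage comment are hypothetical and are not taken from the original notebook.

```python
def join_page_texts(page_texts):
    """Join per-page strings, skipping pages that yielded no text."""
    cleaned = [t.strip() for t in page_texts if t and t.strip()]
    return "\n".join(cleaned)


def extract_pdf_text(pdf_path):
    """Read every page of a PDF and return its text as one string."""
    import PyPDF2  # imported lazily so the helper above works without it

    with open(pdf_path, "rb") as handle:
        reader = PyPDF2.PdfReader(handle)
        # extract_text() can return an empty string for scanned or image-only pages
        return join_page_texts(page.extract_text() for page in reader.pages)


# Hypothetical usage; "english_grammar.pdf" is a placeholder file name:
# text = extract_pdf_text("english_grammar.pdf")
```

The returned string could then be passed to the add_text method defined in Step 6.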

Step 2: Downloading NLP Tokenization Data

    import nltk
    nltk.download('punkt_tab')

The call nltk.download('punkt_tab') fetches the Punkt data that NLTK requires for sentence tokenization. Tokenization splits text into sentences or words, which is crucial for breaking down large bodies of text into manageable segments for processing and retrieval.
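To make the idea concrete, here is a deliberately naive sentence splitter built on a regular expression. It only illustrates what sentence tokenization produces. It is not the Punkt algorithm behind NLTK's sent_tokenize, and it will mis-split abbreviations such as "Dr." that Punkt is designed to handle. The function name naive_sent_split is hypothetical.

```python
import re


def naive_sent_split(text):
    """Split after '.', '!' or '?' whenever whitespace follows."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


sample = "Nouns name things. Verbs describe actions! Is this clear?"
for sentence in naive_sent_split(sample):
    print(sentence)
```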

Step 3: Setting Up NLTK Data Directory

    import os  # needed here because this step runs before the imports in Step 4

    working_directory = os.getcwd()
    nltk_data_dir = os.path.join(working_directory, 'nltk_data')
    nltk.data.path.append(nltk_data_dir)
    nltk.download('punkt_tab', download_dir=nltk_data_dir)

We set up a dedicated directory for NLTK data. The os.getcwd() function returns the current working directory, and os.path.join builds the path to an nltk_data folder inside it. The nltk.data.path.append(nltk_data_dir) call adds that folder to the list of locations NLTK searches for resources, and the download_dir argument tells nltk.download to save the punkt_tab dataset there.

Step 4: Importing Required Libraries

    import os
    import torch
    from sentence_transformers import SentenceTransformer
    import chromadb
    from chromadb.utils import embedding_functions
    import numpy as np
    import PyPDF2
    from fpdf import FPDF
    from functools import lru_cache
    from groq import Groq
    import nltk
    from nltk.tokenize import sent_tokenize
    import uuid
    from dotenv import load_dotenv

Here, we import all the libraries used throughout the notebook. os is used for file system operations and environment variables. torch handles deep learning-related tasks. sentence_transformers provides an easy way to generate embeddings from text. chromadb and its embedding_functions module store and retrieve relevant text. numpy is a numerical library for arrays and computations. PyPDF2 extracts text from PDFs. fpdf generates PDF documents. lru_cache caches function outputs to avoid repeated work. Groq is the client class for calling Groq's text generation API. nltk provides NLP functionality, and sent_tokenize is imported specifically to split text into sentences. uuid generates unique IDs, and load_dotenv loads environment variables from a .env file.

Step 5: Loading Environment Variables and API Key

    load_dotenv()
    api_key = os.getenv('api_key')
    os.environ["GROQ_API_KEY"] = api_key
    # or manually retrieve a key from https://console.groq.com/ and add it here

The code above loads environment variables from a .env file. The load_dotenv() function reads the file and makes its variables available within the Python environment. The key is then retrieved with os.getenv('api_key'), so it never has to be hardcoded in the script. Finally, the key is stored in os.environ["GROQ_API_KEY"], which is where the Groq client looks for it by default. Note that this assignment raises a TypeError if api_key is None, which happens when the .env file has no api_key entry.
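Because os.getenv returns None for a missing variable, it can help to fail early with a readable message. The sketch below shows one way to do that using only the standard library. The helper name require_env is hypothetical, and the usage comment simply mirrors the variable names used in this step.

```python
import os


def require_env(name):
    """Return the value of an environment variable or raise a clear error."""
    value = os.getenv(name)
    if not value:
        raise RuntimeError(
            f"Environment variable {name!r} is not set; add it to your .env file."
        )
    return value


# Hypothetical usage, mirroring the names used in this step:
# os.environ["GROQ_API_KEY"] = require_env("api_key")
```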

Step 6: Defining the Vector Database Class

    class VectorDatabase:
        def __init__(self, collection_name="english_teacher_collection"):
            # Persist the collection on disk so it survives restarts
            self.client = chromadb.PersistentClient(path="./chroma_db")
            self.encoder = SentenceTransformer('all-MiniLM-L6-v2')
            self.embedding_function = embedding_functions.SentenceTransformerEmbeddingFunction(model_name='all-MiniLM-L6-v2')
            self.collection = self.client.get_or_create_collection(name=collection_name, embedding_function=self.embedding_function)

        def add_text(self, text, chunk_size):
            # Split into sentences, group them into chunks, and store each chunk under a unique ID
            sentences = sent_tokenize(text, language="english")
            chunks = self._create_chunks(sentences, chunk_size)
            ids = [str(uuid.uuid4()) for _ in chunks]
            self.collection.add(documents=chunks, ids=ids)

        def _create_chunks(self, sentences, chunk_size):
            # Each chunk holds up to chunk_size consecutive sentences
            chunks = []
            for i in range(0, len(sentences), chunk_size):
                chunk = ' '.join(sentences[i:i+chunk_size])
                chunks.append(chunk)
            return chunks

        def retrieve(self, query, k=3):
            # Return the k stored chunks most similar to the query
            results = self.collection.query(query_texts=[query], n_results=k)
            return results['documents'][0]

This class defines a VectorDatabase that interacts with chromadb to store and retrieve text-based knowledge. The __init__() function initializes the database, creating a persistent chroma_db directory for long-term storage. The SentenceTransformer model (all-MiniLM-L6-v2) generates text embeddings, which convert textual information into numerical representations that can be efficiently stored and searched. The class also loads the same model directly as self.encoder, although the methods shown here do not use it. The add_text() function breaks the input text into sentences and groups them into chunks of chunk_size sentences before storing them in the vector database. The _create_chunks() function performs that grouping, which keeps each stored document small enough for retrieval to be effective. The retrieve() function takes a query and returns the k most relevant stored chunks based on similarity.
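The chunk-then-retrieve idea can be tried without ChromaDB or a model download. The sketch below is a toy under stated assumptions: word counts stand in for the all-MiniLM-L6-v2 embeddings, a plain list stands in for the collection, and the function names create_chunks, embed, cosine, and retrieve are hypothetical. It shows the mechanics only and says nothing about retrieval quality.

```python
import math
from collections import Counter


def create_chunks(sentences, chunk_size):
    """Group consecutive sentences into chunks of at most chunk_size sentences."""
    return [" ".join(sentences[i:i + chunk_size])
            for i in range(0, len(sentences), chunk_size)]


def embed(text):
    """Toy embedding: lowercase word counts (a stand-in for a real model)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(chunks, query, k=3):
    """Return the k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(embed(c), q), reverse=True)
    return ranked[:k]


sentences = [
    "A noun names a person, place, or thing.",
    "A verb describes an action.",
    "An adjective modifies a noun.",
    "An adverb modifies a verb.",
    "A pronoun replaces a noun.",
]
chunks = create_chunks(sentences, chunk_size=2)
print(len(chunks))
print(retrieve(chunks, "what is a verb", k=1))
```

With five sentences and a chunk size of two, the last chunk holds a single sentence. That is the same behavior as the _create_chunks method above.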

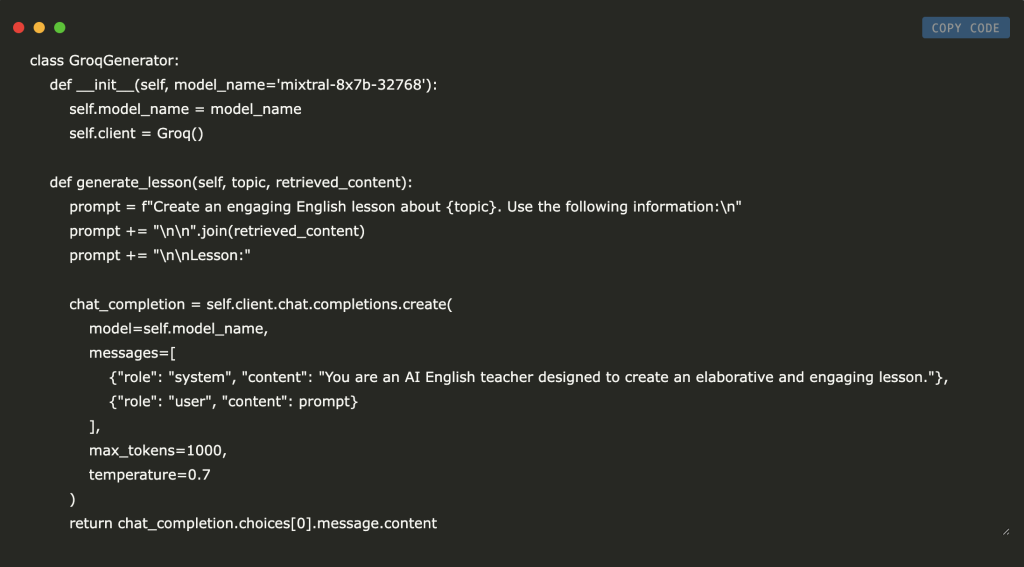

Step 7: Implementing AI Lesson Generation with Groq

    class GroqGenerator:
        def __init__(self, model_name='mixtral-8x7b-32768'):
            self.model_name = model_name
            # Groq() reads the API key from the GROQ_API_KEY environment variable
            self.client = Groq()

        def generate_lesson(self, topic, retrieved_content):
            # Build the prompt: instruction, retrieved passages, then a cue for the lesson
            prompt = f"Create an engaging English lesson about {topic}. Use the following information:\n"
            prompt += "\n\n".join(retrieved_content)
            prompt += "\n\nLesson:"
            chat_completion = self.client.chat.completions.create(
                model=self.model_name,
                messages=[
                    {"role": "system", "content": "You are an AI English teacher designed to create an elaborative and engaging lesson."},
                    {"role": "user", "content": prompt}
                ],
                max_tokens=1000,
                temperature=0.7
            )
            return chat_completion.choices[0].message.content

This class, GroqGenerator, is responsible for generating AI-powered English lessons. It interacts with the Groq AI model via an API call. The __init__() function initializes the generator with the mixtral-8x7b-32768 model by default. Groq's model lineup changes over time, so if the API rejects this ID, substitute a model listed in the Groq console. The generate_lesson() function takes a topic and the retrieved passages as input, formats a prompt, and sends it to the Groq API. It returns the text of the first completion, which can then be stored or displayed.
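It can be useful to inspect the prompt before spending an API call on it. The sketch below pulls the prompt assembly into a standalone function, assuming the retrieved passages are meant to be separated by blank lines. The function name build_lesson_prompt and the sample passages are hypothetical.

```python
def build_lesson_prompt(topic, retrieved_content):
    """Assemble the user prompt from a topic and a list of retrieved passages."""
    prompt = f"Create an engaging English lesson about {topic}. Use the following information:\n"
    prompt += "\n\n".join(retrieved_content)  # blank line between passages
    prompt += "\n\nLesson:"                   # cue for the model to start the lesson
    return prompt


passages = [
    "A verb describes an action.",
    "Tense shows when the action happens.",
]
print(build_lesson_prompt("verb tenses", passages))
```

Printing the prompt this way makes it easy to see how much retrieved text is being sent, which matters because the response is capped at 1000 tokens.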

Step 8: Combining Vector Retrieval and AI Generation

    class RAGEnglishTeacher:
        def __init__(self, vector_db, generator):
            self.vector_db = vector_db
            self.generator = generator

        @lru_cache(maxsize=32)
        def teach(self, topic):
            relevant_content = self.vector_db.retrieve(topic)
            lesson = self.generator.generate_lesson(topic, relevant_content)
            return lesson

The above class, RAGEnglishTeacher, integrates the VectorDatabase and GroqGenerator components to create a retrieval-augmented generation (RAG) system. The teach() function retrieves relevant content from the vector database and passes it to the GroqGenerator to produce a structured lesson. The lru_cache(maxsize=32) decorator caches up to 32 previously generated lessons to improve efficiency by avoiding repeated computations.
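The caching behavior can be checked without a database or an API key by swapping in stand-ins. In the sketch below, StubVectorDB and StubGenerator are hypothetical placeholders for VectorDatabase and GroqGenerator. The RAGEnglishTeacher class is repeated so the block runs on its own.

```python
from functools import lru_cache


class StubVectorDB:
    """Stand-in for VectorDatabase; returns a fixed passage."""

    def retrieve(self, query, k=3):
        return [f"Stored note about {query}."]


class StubGenerator:
    """Stand-in for GroqGenerator; counts calls instead of hitting an API."""

    def __init__(self):
        self.calls = 0

    def generate_lesson(self, topic, retrieved_content):
        self.calls += 1
        return f"Lesson on {topic} using {len(retrieved_content)} passage(s)."


class RAGEnglishTeacher:
    def __init__(self, vector_db, generator):
        self.vector_db = vector_db
        self.generator = generator

    @lru_cache(maxsize=32)
    def teach(self, topic):
        relevant_content = self.vector_db.retrieve(topic)
        return self.generator.generate_lesson(topic, relevant_content)


generator = StubGenerator()
teacher = RAGEnglishTeacher(StubVectorDB(), generator)
first = teacher.teach("articles")
second = teacher.teach("articles")  # same topic: served from the cache
print(first)
print(generator.calls)
```

Two consequences of this design are worth knowing. The cache key includes the teacher instance as well as the topic, so the cache keeps that instance alive. A cached lesson is also returned unchanged even if new material is added to the database later, until the entry is evicted or teach.cache_clear() is called.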

In conclusion, we successfully built an AI-powered English tutor that combines a Vector Database (ChromaDB) and Groq’s AI model to implement Retrieval-Augmented Generation (RAG). The system can extract text from PDFs, store relevant knowledge in a structured manner, retrieve contextual information, and generate detailed lessons dynamically. This tutor provides engaging, context-aware, and personalized lessons by utilizing sentence embeddings for efficient retrieval and AI-generated responses for structured learning. This approach ensures learners receive accurate, informative, and well-organized English lessons without requiring manual content creation. The system can be expanded further by integrating additional learning modules, improving database efficiency, or fine-tuning AI responses to make the tutoring process more interactive and intelligent.

Use the Colab Notebook here.

The post Creating an AI-Powered Tutor Using Vector Database and Groq for Retrieval-Augmented Generation (RAG): Step by Step Guide appeared first on MarkTechPost.
