Restricted access to high-quality reasoning datasets has limited open-source progress in AI-driven logical and mathematical reasoning. While proprietary models have leveraged structured reasoning demonstrations to enhance performance, these datasets and methodologies remain closed, restricting independent research and innovation. The lack of open, scalable reasoning datasets has created a bottleneck for AI development.

Recently, models such as Sky-T1, STILL-2, and DeepSeek-R1 have demonstrated that a relatively small set of high-quality reasoning demonstrations, on the order of hundreds of thousands, can substantially enhance a model’s ability to perform complex logical and mathematical reasoning tasks. Still, most reasoning datasets and the methodologies behind their creation remain proprietary, limiting access to resources needed for further exploration in the field.

The Open Thoughts initiative, led by Bespoke Labs and the DataComp community from Stanford, UC Berkeley, UT Austin, UW, UCLA, UNC, TRI, and LAION, is an open-source project that curates and develops high-quality reasoning datasets to address this gap. The team aims to provide publicly available, state-of-the-art reasoning datasets and data generation strategies that improve language models’ reasoning capabilities. As a first step, they have released the OpenThoughts-114k reasoning dataset and the associated OpenThinker-7B model. Let’s look at each in turn.

The OpenThoughts-114k Dataset: A New Standard in Open Reasoning Data

This dataset was designed to provide a large-scale, high-quality corpus of reasoning demonstrations to improve language models’ reasoning abilities. OpenThoughts-114k extends earlier datasets such as Bespoke-Stratos-17k, which contained only 17,000 examples. Scaling up to 114,000 reasoning examples has improved the performance of models trained on it across various reasoning benchmarks. OpenThoughts-114k was generated using reasoning distillation techniques inspired by DeepSeek-R1, which showed that synthetic reasoning demonstrations could be produced efficiently and at scale. The dataset covers diverse reasoning challenges, ranging from mathematical problem-solving to logical deduction, making it a useful resource for improving model robustness across multiple reasoning domains.
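Because the dataset is published on the Hugging Face Hub, it can be inspected with a few lines of Python. The following is a minimal sketch, not the project's own tooling. It assumes the `datasets` library is installed, that the repository id from the Sources list is correct, and that a `train` split exists. The helper names `preview` and `load_reasoning_dataset` are hypothetical and introduced only for illustration.

```python
from typing import Any, Dict

# Repository id taken from the Sources list of this article.
DATASET_ID = "open-thoughts/OpenThoughts-114k"


def preview(record: Dict[str, Any], max_chars: int = 200) -> Dict[str, str]:
    """Render every field of one record as a string, truncated to max_chars."""
    out: Dict[str, str] = {}
    for key, value in record.items():
        text = str(value)
        out[key] = text if len(text) <= max_chars else text[:max_chars] + "..."
    return out


def load_reasoning_dataset(split: str = "train"):
    """Download the dataset from the Hub (needs network access).

    The split name "train" is an assumption; check the dataset card.
    """
    # Imported lazily so that preview() can be used without the library.
    from datasets import load_dataset

    return load_dataset(DATASET_ID, split=split)
```

Calling `ds = load_reasoning_dataset()` and then printing `ds.column_names` and `preview(ds[0])` shows the schema and one truncated example without assuming any particular field names.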

OpenThinker-7B: A Model for Advanced Reasoning

Alongside the release of OpenThoughts-114k, the Open Thoughts team also introduced OpenThinker-7B, a fine-tuned version of Qwen2.5-7B-Instruct. The model was trained specifically on OpenThoughts-114k and improves substantially over its predecessors. Training ran for 20 hours on four 8xH100 nodes, using the Transformers 4.46.1 library and PyTorch 2.3.0 for compatibility with widely used ML frameworks.
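To put the training budget in perspective, the figures quoted above can be converted into GPU-hours. This is simple back-of-the-envelope arithmetic, assuming all four nodes (8 H100 GPUs each) ran for the full 20 hours; the function name `gpu_hours` is hypothetical.

```python
# Figures taken from the text: four nodes, 8 H100 GPUs per node, 20 hours.
NODES = 4
GPUS_PER_NODE = 8
WALL_CLOCK_HOURS = 20


def gpu_hours(nodes: int, gpus_per_node: int, hours: float) -> float:
    """Total GPU-hours, assuming every GPU is busy for the whole run."""
    return nodes * gpus_per_node * hours


total_gpus = NODES * GPUS_PER_NODE
total_gpu_hours = gpu_hours(NODES, GPUS_PER_NODE, WALL_CLOCK_HOURS)

print(f"GPUs used: {total_gpus}")            # 4 * 8 = 32
print(f"GPU-hours: {total_gpu_hours}")       # 32 * 20 = 640
```

Under these assumptions the run used 32 GPUs for roughly 640 H100 GPU-hours.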

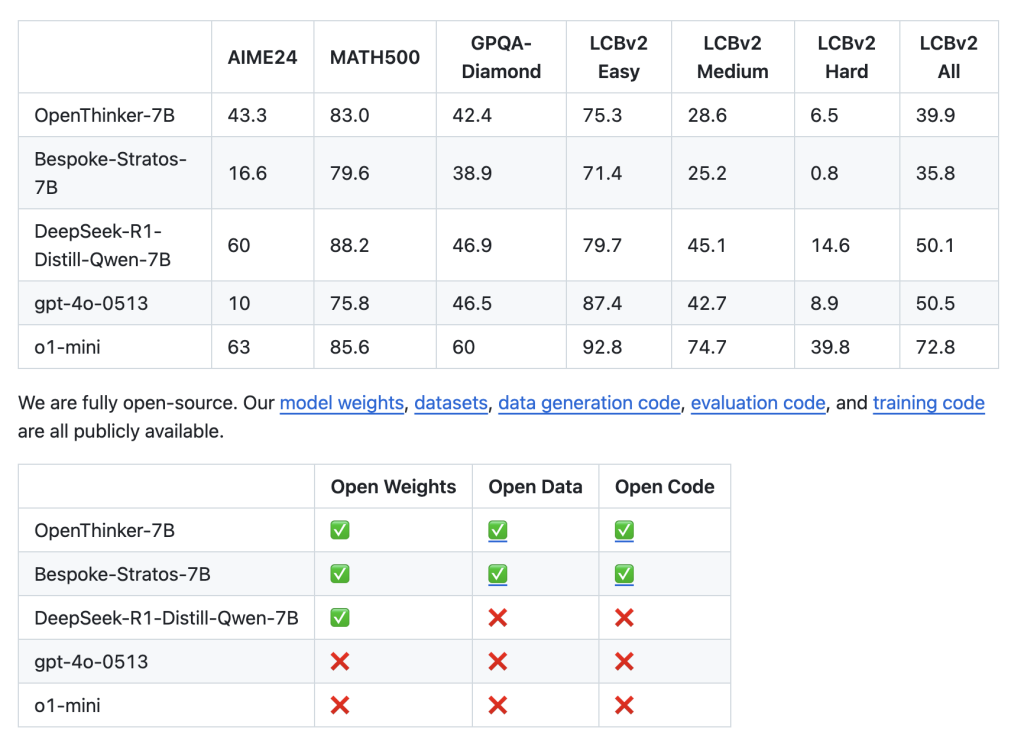

In some reasoning tasks, OpenThinker-7B outperforms comparable models such as Bespoke-Stratos-7B, DeepSeek-R1-Distill-Qwen-7B, and even GPT-4o. Benchmarked using Evalchemy, it scored 43.3% on AIME24, 83.0% on MATH500, 42.4% on GPQA-D, 75.3% on LCB Easy, and 28.6% on LCB Medium. These results indicate that OpenThinker-7B is a strong open-source alternative to proprietary reasoning models.

Fully Open-Source: Weights, Data, and Code

A defining feature of the Open Thoughts project is its commitment to full transparency. Unlike proprietary models such as GPT-4o and o1-mini, which keep their datasets and training methodologies closed, OpenThinker-7B and OpenThoughts-114k are entirely open-source. This means:

- Open Model Weights: The OpenThinker-7B model weights are publicly accessible, allowing researchers and developers to fine-tune and build upon the model.

- Open Data: The OpenThoughts-114k dataset is freely available for anyone to use, modify, and expand.

- Open Code: The data generation, evaluation, and training code for OpenThinker-7B are all hosted on GitHub, ensuring complete transparency and reproducibility.
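Since the weights are public, the model can be tried locally with the Hugging Face `transformers` library. The sketch below is one plausible way to do so, not the project's official inference code. It assumes `transformers` and PyTorch are installed, that the repository id from the Sources list is correct, that the tokenizer ships a chat template, and that enough memory is available for a 7B model. The helper names `build_messages` and `generate_answer` are hypothetical.

```python
from typing import Dict, List

# Repository id taken from the Sources list of this article.
MODEL_ID = "open-thoughts/OpenThinker-7B"


def build_messages(question: str) -> List[Dict[str, str]]:
    """Wrap a question in the chat-message format used by chat templates."""
    return [{"role": "user", "content": question}]


def generate_answer(question: str, max_new_tokens: int = 512) -> str:
    """Download the model and generate a response (needs network and memory)."""
    # Imported lazily so build_messages() works without the library installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID, torch_dtype="auto")

    # Assumption: the tokenizer provides a chat template for this model.
    prompt = tokenizer.apply_chat_template(
        build_messages(question), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Drop the prompt tokens so only the newly generated text is returned.
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

A call such as `generate_answer("What is the sum of the first 10 odd numbers?")` would return the model's reasoning and answer as text. Reasoning models tend to produce long outputs, so `max_new_tokens` may need to be raised.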

The Open Thoughts project is only in its early stages, with plans for further expansion. Some potential future directions include:

- Future iterations of OpenThoughts could incorporate millions of reasoning examples, covering a broader spectrum of cognitive challenges.

- OpenThinker-7B is an excellent starting point, but larger models fine-tuned on even more data could further push the boundaries of reasoning capabilities.

- The project could draw in more researchers, engineers, and AI enthusiasts to contribute to dataset creation, model training, and evaluation methodologies.

In conclusion, Open Thoughts represents a transformative effort to democratize AI reasoning. By launching OpenThoughts-114k and OpenThinker-7B as open-source resources, the project empowers the AI community with high-quality data and models to advance reasoning research. With continued collaboration and expansion, Open Thoughts has the potential to redefine how AI approaches logical, mathematical, and cognitive reasoning tasks.

Sources

- https://github.com/open-thoughts/open-thoughts

- https://huggingface.co/datasets/open-thoughts/OpenThoughts-114k

- https://huggingface.co/open-thoughts/OpenThinker-7B

- https://www.open-thoughts.ai/blog/launch
