The field of artificial intelligence is evolving rapidly, with increasing efforts to develop more capable and efficient language models. However, scaling these models comes with challenges, particularly regarding computational resources and the complexity of training. The research community is still exploring best practices for scaling extremely large models, whether they use a dense or Mixture-of-Experts (MoE) architecture. Until recently, many details about this process were not widely shared, making it difficult to refine and improve large-scale AI systems.

Qwen AI aims to address these challenges with Qwen2.5-Max, a large MoE model pretrained on over 20 trillion tokens and further refined through Supervised Fine-Tuning (SFT) and Reinforcement Learning from Human Feedback (RLHF). This approach fine-tunes the model to better align with human expectations while maintaining efficiency in scaling.

Technically, Qwen2.5-Max uses a Mixture-of-Experts architecture, which lets it activate only a subset of its parameters during inference. Because only the selected experts run for a given input, the compute cost per token is lower than that of a dense model with the same total parameter count, while the full parameter pool remains available to the model as a whole. The extensive pretraining phase provides a strong foundation of knowledge, while SFT and RLHF refine the model’s ability to generate coherent and relevant responses. These techniques help improve the model’s reasoning and usability across various applications.
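To make the idea of activating only a subset of parameters concrete, here is a minimal sketch of generic top-k expert routing in plain Python. It is not Qwen2.5-Max's implementation: the article does not describe the model's router, number of experts, or value of k, so the toy experts, the `router_weights` matrix, and the names `top_k_route` and `moe_forward` are all illustrative assumptions.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits, k):
    """Pick the k highest-scoring experts and normalize their weights."""
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_forward(x, experts, router_weights, k=2):
    """Run only the k selected experts and mix their outputs."""
    # Hypothetical linear router: one logit per expert.
    logits = [sum(w * v for w, v in zip(row, x)) for row in router_weights]
    routes = top_k_route(logits, k)
    output = [0.0] * len(x)
    for expert_index, weight in routes:
        expert_out = experts[expert_index](x)  # unselected experts never run
        output = [o + weight * e for o, e in zip(output, expert_out)]
    return output, routes


calls = []  # records which toy experts were actually executed


def make_expert(index, scale):
    """Toy expert (assumption): scales its input by a fixed factor."""
    def expert(x):
        calls.append(index)
        return [scale * v for v in x]
    return expert


experts = [make_expert(i, s) for i, s in enumerate((1.0, 2.0, 3.0, 4.0))]
router_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]

output, routes = moe_forward([2.0, 1.0], experts, router_weights, k=2)
print("experts used:", [i for i, _ in routes])
print("experts executed:", calls)
print("output:", output)
```

With four experts and k=2, only two expert functions are executed for this input, which is the source of the inference savings described above. Production MoE layers typically add details this sketch omits, such as batching tokens per expert and load-balancing objectives.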

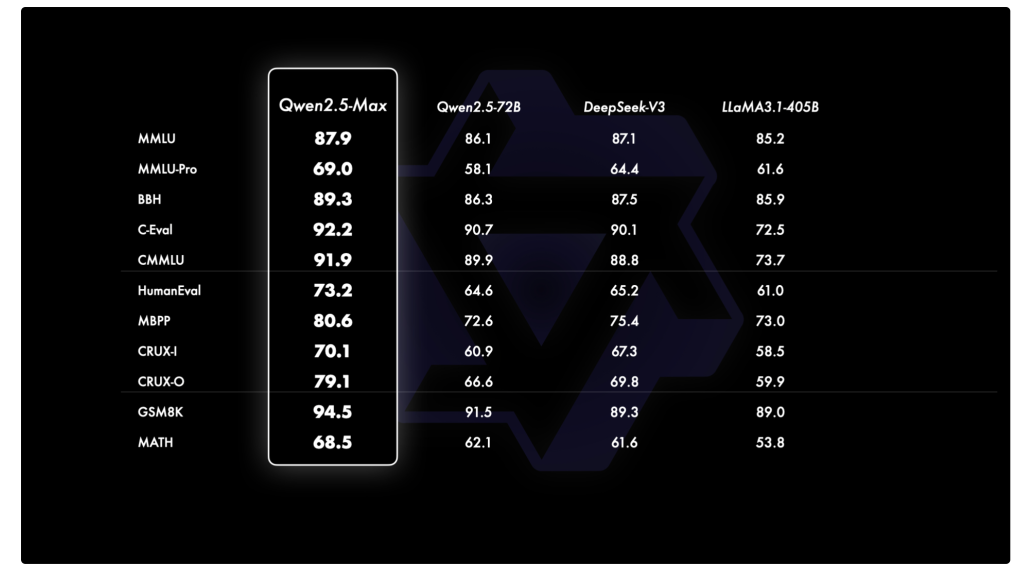

Qwen2.5-Max has been evaluated against leading models on benchmarks including MMLU-Pro, LiveCodeBench, LiveBench, Arena-Hard, and GPQA-Diamond. The results suggest it performs competitively, surpassing DeepSeek V3 on Arena-Hard, LiveBench, LiveCodeBench, and GPQA-Diamond. Its performance on MMLU-Pro is also strong, highlighting its capabilities in knowledge-intensive tasks, coding, and broader AI applications.

In summary, Qwen2.5-Max presents a considered approach to scaling language models while maintaining efficiency and performance. By combining an MoE architecture with targeted post-training methods, it addresses key challenges in AI model development. As AI research progresses, models like Qwen2.5-Max demonstrate how careful data use and training techniques can lead to more capable and reliable AI systems.

Check out the Demo on Hugging Face and the Technical Details. All credit for this research goes to the researchers of this project.

The post Qwen AI Introduces Qwen2.5-Max: A large MoE LLM Pretrained on Massive Data and Post-Trained with Curated SFT and RLHF Recipes appeared first on MarkTechPost.
