Amazon Neptune Analytics is a high-performance analytics engine designed to extract insights and detect patterns within vast amounts of graph data stored in Amazon Simple Storage Service (Amazon S3) or Amazon Neptune Database. Using built-in algorithms, vector search, and powerful in-memory processing, Neptune Analytics efficiently handles queries on datasets with tens of billions of relationships, delivering results in seconds.

Graphs allow for in-depth analysis of complex relationships and patterns across various data types. They are widely used in social networks to uncover community structures, suggest new connections, and study information flow. In supply chain management, graphs support route optimization and bottleneck analysis, whereas in cybersecurity, they highlight network vulnerabilities and track potential threats. Beyond these areas, graph data plays a crucial role in knowledge management, financial services, digital advertising, and more, for example by helping to identify money laundering schemes in financial networks and to forecast potential security risks.

With Neptune Analytics, you gain a fully managed experience that offloads infrastructure tasks, allowing you to focus on building insights and solving complex problems. Neptune Analytics automatically provisions the compute resources necessary to run analytics workloads based on the size of the graph. Initial benchmarks show that Neptune Analytics can load data from Amazon S3 up to 80 times faster than other AWS solutions.

Neptune Analytics now lets you import Parquet data (in addition to CSV) into a graph and export graph data as Parquet or CSV. Many data pipelines already write their output as Parquet files, so the new import capability makes it straightforward to create a graph from that output and start querying, analyzing, and exploring it. You can also load Parquet and CSV data with the new neptune.read() procedure, which reads Parquet and CSV data from Amazon S3 in any format and lets you read, insert, or update graph data using it. In the other direction, you can export graph data from Neptune Analytics as Parquet or CSV files, which lets you move data from Neptune Analytics into data lakes and Neptune Database. The export functionality also supports use cases that feed graph data into downstream data and machine learning (ML) platforms for exploring and analyzing the results.

In this two-part series, we show how you can import and export using Parquet and CSV to quickly gather insights from your existing graph data. In Part 1, we introduced the import and export functionalities, and walked you through how to quickly get started with them. In this post, we show how you can use the new data mobility improvements in Neptune Analytics to enhance fraud detection.

Solution overview

Imagine a financial institution facing an increasing number of sophisticated fraud schemes that are difficult to detect through traditional methods. The bank’s analysts are struggling to connect the dots between transactions, customer profiles, and external networks, because suspicious behaviors often involve complex relationships spread across multiple accounts and entities. Fraudulent activities, such as account takeovers, money laundering, and networked schemes, often evade detection because they form intricate patterns that require a more connected view of the data.

To tackle this challenge, the institution needs a robust fraud detection solution that can rapidly analyze vast amounts of transactional and customer relationship data. With Neptune Analytics, they can map out relationships and suspicious patterns within their data, which helps them spot anomalies and trace connections between entities. Using the built-in export capability, they can then write the results of the fraud detection analysis to their S3 bucket, merge them with data from their data lakes for further analysis, and act swiftly to prevent further losses. This solution helps the bank stay ahead of evolving fraud tactics by using graph analytics to surface hidden risks and take proactive measures.

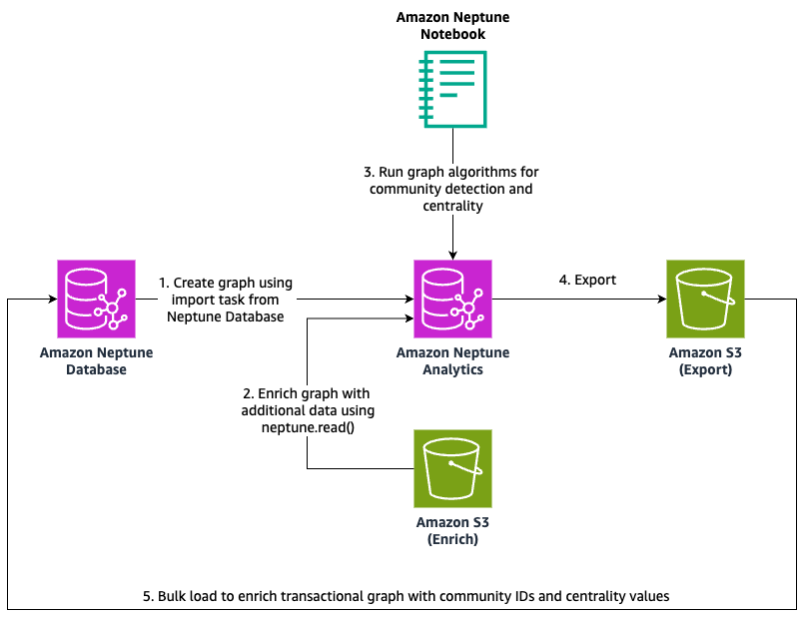

In the following sections, we guide you through building a fraud detection solution using a variation of the architecture introduced in Part 1 of this series. The key to enhancing our fraud detection solution is using the new import and export capabilities of Neptune Analytics. The following diagram illustrates the solution architecture.

The workflow consists of the following steps:

- Create a Neptune Analytics graph using an import task from Neptune Database.

- Enrich your graph with additional data using the neptune.read() procedure.

- Run your graph algorithms in a Neptune notebook for community detection and centrality.

- Use the export feature of Neptune Analytics to export the data to Amazon S3.

- Alternatively, you can export your graph data to Amazon S3, and re-ingest (using bulk load) into Neptune Database for enrichment of graph transactional data with community IDs and centrality values.

In our example, we walk you through how you can seamlessly import graph data stored in Neptune Database, then use Neptune Analytics to identify suspicious patterns and relationships suggestive of fraud.

Prerequisites

Before you get started, complete the following steps:

- Create an AWS Identity and Access Management (IAM) role that Neptune Analytics can assume so it has permissions to write the exported data to Amazon S3. For a detailed walkthrough, refer to Part 1 of this series. For more details, refer to Create and configure IAM role and AWS KMS key.

- Create a Neptune Database cluster.

- Deploy a Neptune notebook. For instructions, refer to the documentation Using Amazon Neptune with graph notebooks.

Load the dataset

For this post, we use a fictitious fraud graph dataset. This dataset contains nodes representing accounts (such as credit card accounts) connected to the attributes (home addresses, phone numbers, IP addresses, email addresses, birthdays) that they are associated with. Additionally, it contains nodes representing transactions (purchases) that can be associated with merchants, and optionally attributes (a purchase made over the phone, or a purchase made from a device using a specific IP address).

For example, the following diagram illustrates a single account and its associations with the attributes it uses: two phone numbers (represented by the phone icon), three email addresses (represented by the @ icon), one birthday (represented by the cake icon), one IP address (represented by the laptop icon), and one home address (represented by the house icon).

Each account can also be associated with a transaction (represented by a dollar symbol icon), and each transaction can be associated with a merchant (represented by a shopping bag icon) and optionally a phone number or IP address.

To load the sample dataset, complete the following steps:

- Run the %seed command from your Neptune notebook, using the following settings to load the data:

  - For Source type, choose samples.

  - For Data model, choose propertygraph.

  - For Language, choose gremlin.

  - For Data set, choose fraud_graph.

- Choose Submit to initiate the load.

- After the data is loaded, create a Neptune Analytics graph from the Neptune Database cluster you just created. For instructions, see Creating a Neptune Analytics graph from Neptune cluster or snapshot. When creating the graph, make sure that you have at least one method of connectivity established (public or private). For ease of experimentation, you can configure your Neptune Analytics graph to be publicly accessible.

- After you create the Neptune Analytics graph, update the notebook configuration to interact with the graph:

After the fraud graph is recreated in Neptune Analytics, you can run graph algorithms to detect patterns of suspicious behavior.

Find insights with graph algorithms

Let’s say that we are fraud investigators looking for new patterns of potentially fraudulent behavior. We don’t necessarily know what those patterns look like exactly (for example, at least two shared phone numbers and two shared home addresses between three different accounts). But we do know that multiple distinct accounts shouldn’t be sharing multiples of the same attributes.
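To make this rule of thumb concrete, the following is a minimal Python sketch, not part of the original notebook, that counts how many attributes each pair of accounts shares in a toy edge list. The account and attribute IDs are hypothetical, and the threshold of two shared attributes is an assumption chosen only for illustration.

```python
from collections import defaultdict
from itertools import combinations


def shared_attribute_counts(edges):
    """Count how many attributes each pair of accounts has in common.

    edges: iterable of (account_id, attribute_id) pairs.
    Returns {(account_a, account_b): shared_count} with account_a < account_b.
    """
    accounts_by_attribute = defaultdict(set)
    for account, attribute in edges:
        accounts_by_attribute[attribute].add(account)

    shared = defaultdict(int)
    for accounts in accounts_by_attribute.values():
        # Every pair of accounts attached to the same attribute shares it.
        for pair in combinations(sorted(accounts), 2):
            shared[pair] += 1
    return dict(shared)


# Hypothetical toy data: (account, attribute) associations.
edges = [
    ("acct-1", "phone-555"), ("acct-2", "phone-555"),
    ("acct-1", "addr-9"), ("acct-2", "addr-9"), ("acct-3", "addr-9"),
    ("acct-3", "email-x"),
]

counts = shared_attribute_counts(edges)
# Assumed threshold: flag pairs that share two or more attributes.
suspicious = {pair: n for pair, n in counts.items() if n >= 2}
print(suspicious)
```

A pairwise count like this only catches patterns we already know how to describe, which is why the rest of this section relies on graph algorithms instead.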

Before we get started with queries, set the visualization options with custom colors and icons. In a new cell, run the following code, which assigns a color and a FontAwesome icon to each node type that will be generated in the graph visualizations:

To find insights with graph algorithms, complete the following steps:

- To find clusters of multiple distinct accounts sharing multiples of the same attributes, you can use generic clustering algorithms. You can use a query like the following, which finds clusters (components) of highly connected accounts and attributes using the weakly connected components algorithm. The values of the found components (clusters of highly connected accounts and attributes) are then written to a property called wcc:

- You can now use the newly added wcc property to find outliers in the distribution of component size. Run the following query, which finds all the component IDs and how many members are in each. We use the optional line input --store-to to save the query output to a variable called wcc_dist:

- You can then use the following code in a new notebook cell to plot a histogram depicting the distribution of components by their member count. To install the matplotlib library within the notebook, you can use !pip install matplotlib.

  From the distribution, we can see that an overwhelming majority of the components in the graph have fewer than 40 members.

- Let’s investigate what the largest component looks like with the following query:

From the diagram, we can see that there are multiple clusters of attributes that are shared between multiple clusters of accounts. Even though we didn’t know exactly what patterns to look for, using clustering algorithms helped us find outlier patterns in the graph, which helps identify the parts of the graph that an investigator should drill into further.
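For intuition about what the clustering steps compute, here is a minimal pure-Python sketch of weakly connected components using union-find, followed by the kind of size distribution that the histogram summarizes. It runs on a hypothetical toy edge list with made-up node IDs, and it is an illustration of the technique rather than how Neptune Analytics implements the algorithm.

```python
from collections import Counter


def weakly_connected_components(edges):
    """Label every node with a component ID; edge direction is ignored."""
    parent = {}

    def find(node):
        parent.setdefault(node, node)
        while parent[node] != node:
            parent[node] = parent[parent[node]]  # path halving
            node = parent[node]
        return node

    for source, target in edges:
        root_a, root_b = find(source), find(target)
        if root_a != root_b:
            parent[root_b] = root_a  # merge the two components

    # The component ID is the representative (root) node of each set.
    return {node: find(node) for node in list(parent)}


# Hypothetical toy graph: accounts connected to the attributes they use.
edges = [
    ("acct-1", "phone-a"),
    ("acct-2", "phone-a"),
    ("acct-2", "ip-b"),
    ("acct-3", "email-c"),
]

components = weakly_connected_components(edges)

# Component ID -> member count (what the per-component query returns).
sizes = Counter(components.values())
# Member count -> number of components (what the histogram plots).
distribution = Counter(sizes.values())

largest_id, largest_size = sizes.most_common(1)[0]
print(dict(distribution), largest_size)
```

In this toy graph, acct-1 and acct-2 end up in the same component because they share a phone number, while acct-3 forms its own small component.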

Enrich the graph data

Another family of algorithms that we can use to help us find outliers in our graph is the centrality algorithms. Centrality algorithms help us discover the most important nodes in a graph relative to other nodes. The exact definition of importance can vary based on which algorithm you use. PageRank is an algorithm that calculates a node’s importance based not only on the number of nodes it’s connected to, but also on the importance of those nodes. We can use PageRank in our example to help us identify the more important and influential nodes in our graph, and within given components. In the context of fraud detection, multiple nodes with high PageRank values that are connected together can indicate potential fraud rings or collusion between suspicious accounts.
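As a rough illustration of how PageRank spreads importance along edges, the following is a minimal power-iteration sketch in pure Python. It is not the Neptune Analytics implementation. The damping factor of 0.85, the fixed iteration count, the optional per-edge weight, and the node names are all assumptions made for this example.

```python
def pagerank(edges, damping=0.85, iterations=50):
    """Power-iteration PageRank.

    edges: list of (source, target, weight) with positive weights.
    Returns {node: score}; scores sum to 1.
    """
    nodes = sorted({n for s, t, _ in edges for n in (s, t)})
    count = len(nodes)

    # Total outgoing weight per node, used to split a node's rank.
    out_weight = {n: 0.0 for n in nodes}
    for source, _, weight in edges:
        out_weight[source] += weight

    rank = {n: 1.0 / count for n in nodes}
    for _ in range(iterations):
        # Rank held by nodes without outgoing edges is spread evenly.
        dangling = sum(rank[n] for n in nodes if out_weight[n] == 0)
        base = (1 - damping) / count + damping * dangling / count
        new_rank = {n: base for n in nodes}
        for source, target, weight in edges:
            share = weight / out_weight[source]
            new_rank[target] += damping * rank[source] * share
        rank = new_rank
    return rank


# Hypothetical toy graph: three nodes point at "hub", which points back
# at "a" and "b". With equal weights, "a" and "b" score the same.
unweighted = pagerank([
    ("a", "hub", 1.0), ("b", "hub", 1.0), ("c", "hub", 1.0),
    ("hub", "a", 1.0), ("hub", "b", 1.0),
])

# Tripling the weight of hub -> b shifts more of the hub's rank to "b".
weighted = pagerank([
    ("a", "hub", 1.0), ("b", "hub", 1.0), ("c", "hub", 1.0),
    ("hub", "a", 1.0), ("hub", "b", 3.0),
])
print(unweighted, weighted)
```

The second call shows the effect of edge weights: a node passes a larger share of its own score along its heavier edges.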

Now let’s say our fraud investigation team has calculated risk scores for each of the IP addresses that are in our graph. These scores are generated based on risk factors such as VPN or proxy usage and location. A higher score generally indicates riskier behavior, and we want to account for this information when running our PageRank algorithm. We can use a variant of PageRank called weighted PageRank, which adjusts node influence according to the weights of the connecting edges. The fraud investigation team has provided us with the risk scores in a CSV file formatted as shown in the following table.

| nodeID:String | score:Int |
| --- | --- |
| feature-1.194.153.59 | 8 |
| feature-1.0.3.9 | 469 |
| feature-14.192.78.72 | 400 |
| feature-190.121.7.235 | 249 |
| feature-41.73.48.50 | 639 |
| feature-88.241.191.224 | 365 |
| … | … |

You can download the sample file. After you save the file to an S3 bucket, you can use the neptune.read() command to write the data into our graph. Alternatively, you can use a Parquet-formatted file. For more details on the supported formats, see neptune.read().

For our use case, we write the risk score as a property called ipFraudScore on each FEATURE_OF_ACCOUNT edge that connects an account and the respective IP address. This helps us represent certain accounts as riskier if they are associated with a riskier IP address, and influences the final PageRank scores. See the following code:

The neptune.read() command reads the input file and returns one result for each row (such as a YIELD row). Then for each row, you can choose to read, insert, or update based on the contents of each row. In our example, we find the connecting edges between the given IP addresses (given by the nodeID column) and accounts, and add a new property called ipFraudScore to the edges and set the value to the given fraud score (given by the score column).
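The row-by-row behavior described above can be mimicked outside the graph engine. The following Python sketch is a simulation only and does not call neptune.read(). It parses a few rows from the sample table and sets ipFraudScore on matching edges in a hypothetical in-memory edge list. The edge dictionary layout and the account IDs are assumptions for illustration, and the header names are taken literally from the table.

```python
import csv
import io

# A few rows from the risk score file shown earlier.
RISK_SCORES_CSV = """nodeID:String,score:Int
feature-1.194.153.59,8
feature-1.0.3.9,469
"""

# Hypothetical in-memory stand-in for the graph's edges.
edges = [
    {"label": "FEATURE_OF_ACCOUNT", "account": "account-1",
     "feature": "feature-1.0.3.9", "properties": {}},
    {"label": "FEATURE_OF_ACCOUNT", "account": "account-2",
     "feature": "feature-1.0.3.9", "properties": {}},
    {"label": "FEATURE_OF_ACCOUNT", "account": "account-3",
     "feature": "feature-9.9.9.9", "properties": {}},
]


def apply_risk_scores(csv_text, edges):
    """For each CSV row, set ipFraudScore on every matching edge.

    Returns the number of edges that were updated.
    """
    updated = 0
    for row in csv.DictReader(io.StringIO(csv_text)):
        node_id = row["nodeID:String"]
        score = int(row["score:Int"])
        for edge in edges:
            if edge["label"] == "FEATURE_OF_ACCOUNT" and edge["feature"] == node_id:
                edge["properties"]["ipFraudScore"] = score
                updated += 1
    return updated


updated_count = apply_risk_scores(RISK_SCORES_CSV, edges)
print(updated_count)
```

Rows whose IP address has no connecting edge, and edges whose IP address is missing from the file, are left untouched in this sketch.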

Then we run the PageRank algorithm, and include the newly populated fraud score as an input to the algorithm:

Now that we’ve written the PageRank values to the graph, we can include them in our queries. For example, we previously used weakly connected components to identify the largest community of highly connected accounts and attributes. What if we wanted to identify which accounts and attributes are the most influential within that community? We can then use the following query, which identifies the top five most influential nodes that are present in our largest component:

Export the data as CSV

Now that we’ve calculated our insights, perhaps we want to use them downstream for further analyses. To extract this information from the graph, you can use the export feature of Neptune Analytics.

Let’s say that we want to enrich our online transactional processing (OLTP) queries with the newly calculated insights. In this case, you only need to export the nodes along with their new component value and their new PageRank value. To initiate an export, use the start-export-task API. You can limit the scope of the export to only the new information you need by applying the export-filter parameter. The following shows an example of how to initiate the export with the desired export scope through the AWS Command Line Interface (AWS CLI):

For more details and examples on how to use the export filter, refer to Sample filters.
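If you prefer to start the export from Python rather than the AWS CLI, a request could be assembled along the following lines. This is a sketch under assumptions: the field names (graphIdentifier, roleArn, kmsKeyIdentifier, exportFilter, vertexFilter, sourcePropertyName, outputType) reflect our reading of the StartExportTask API and should be verified against the API reference, and the identifiers, ARNs, and bucket path are placeholders. The code only builds the request and does not call AWS.

```python
def build_export_request(graph_id, role_arn, kms_key_arn, destination,
                         labels, properties):
    """Build a StartExportTask-style request limited to selected
    vertex labels and properties (field names are assumptions)."""
    vertex_filter = {
        label: {
            "properties": {
                name: {"sourcePropertyName": name, "outputType": output_type}
                for name, output_type in properties.items()
            }
        }
        for label in labels
    }
    return {
        "graphIdentifier": graph_id,
        "roleArn": role_arn,
        "kmsKeyIdentifier": kms_key_arn,
        "format": "CSV",
        "destination": destination,
        "exportFilter": {"vertexFilter": vertex_filter},
    }


# Placeholder values; replace them with your own resources.
request = build_export_request(
    graph_id="g-EXAMPLE",
    role_arn="arn:aws:iam::111122223333:role/ExampleExportRole",
    kms_key_arn="arn:aws:kms:us-east-1:111122223333:key/EXAMPLE",
    destination="s3://example-bucket/neptune-export/",
    labels=["PhoneNumber", "IpAddress", "Transaction"],
    properties={"wcc": "Double", "pagerank": "Double"},
)

# Assumed usage, not executed here:
#   import boto3
#   boto3.client("neptune-graph").start_export_task(**request)
```

Keeping the request construction separate from the API call makes it easy to inspect or log the filter before anything is exported.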

The exported CSV will look similar to the following table.

| ~id | ~label | wcc:Double | pagerank:Double |
| --- | --- | --- | --- |
| feature-+553004757840 | PhoneNumber | 2.357352929952127e+15 | 1.4649E-05 |
| b994f2b117a8404fa1ca775b26a51999 | Transaction | | |
| feature-103.22.164.112 | IpAddress | 2.357352929952639e+15 | 1.4649E-05 |
| 711c78881c9844a596e0e2d7de21723b | Transaction | | |
| … | … | … | … |

When exporting as a CSV, the export format will be in the Gremlin CSV bulk load format. This makes it straightforward to directly re-import the data (more specifically in our case, add or overwrite properties) into Neptune Database.
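If you serve the export to downstream consumers instead of reloading it, they can read it with standard CSV tooling. The following Python sketch parses the sample rows shown earlier and ranks the nodes of one component by PageRank. It assumes the file is comma-separated with exactly the headers in the table, and the helper names are hypothetical.

```python
import csv
import io

# Sample rows taken from the export table shown earlier.
EXPORT_SAMPLE = """~id,~label,wcc:Double,pagerank:Double
feature-+553004757840,PhoneNumber,2.357352929952127e+15,1.4649E-05
b994f2b117a8404fa1ca775b26a51999,Transaction,,
feature-103.22.164.112,IpAddress,2.357352929952639e+15,1.4649E-05
711c78881c9844a596e0e2d7de21723b,Transaction,,
"""


def load_scored_nodes(csv_text):
    """Return the nodes that carry both computed properties."""
    nodes = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        if not row["wcc:Double"] or not row["pagerank:Double"]:
            continue  # skip rows whose wcc or pagerank cell is empty
        nodes.append({
            "id": row["~id"],
            "label": row["~label"],
            # Keep the component ID as text so grouping is exact.
            "wcc": row["wcc:Double"],
            "pagerank": float(row["pagerank:Double"]),
        })
    return nodes


def top_nodes_in_component(nodes, component, limit=5):
    """Highest-PageRank members of one component."""
    members = [n for n in nodes if n["wcc"] == component]
    return sorted(members, key=lambda n: n["pagerank"], reverse=True)[:limit]


scored = load_scored_nodes(EXPORT_SAMPLE)
top = top_nodes_in_component(scored, scored[0]["wcc"])
print([n["id"] for n in top])
```

The component ID is kept as a string because it is only used as a grouping key, which avoids comparing large floating-point values for equality.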

Import the CSV export into Neptune Database

When the status of the export task is SUCCEEDED, you can either serve the results from Amazon S3 to consumers, or you can directly load the data back into the graph in Neptune Database. Because the export to CSV is in the bulk load format, you can immediately re-import this into your Neptune Database graph through the bulk loader. As part of the export, an export summary will be output into the same location as the exported data. Be sure to remove this summary before bulk loading into Neptune. Using the bulk load magic from the Neptune notebook, you can initiate a bulk load with the following command:

If you’re running subsequent reloads to overwrite the same-named property value, be sure to specify True in the overwriteSingleCardinality parameter in the bulk load request.

Now that component data has also been written to Neptune Database, you can use this information within your transactional workload. For example, if a new account is opened, a corresponding new account node will be added to the graph. If the new account is associated with attributes that already exist in the graph (attributes that have already been used by or associated with other accounts), new edges will be added to the graph, connecting the new account node to the respective preexisting attribute nodes. As part of the write query, you can also add a component property to the new account based on which other nodes it’s connected to—effectively giving you a way to keep component membership updated in real time.
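The logic for keeping component membership current at write time can be sketched in plain Python as follows. This is a simplified illustration rather than a Neptune Database query. The in-memory dictionary stands in for the component property on each node, and two behaviors are assumptions on our part: merging components when a new account bridges them, and naming a brand-new component after the account ID.

```python
def assign_component(new_account, attribute_ids, component_of):
    """Assign a component to a new account based on its attributes.

    component_of maps node ID -> component ID and is updated in place.
    Returns the component assigned to the new account.
    """
    neighbor_components = {
        component_of[attr] for attr in attribute_ids if attr in component_of
    }

    if neighbor_components:
        component = min(neighbor_components)
        # Assumption: an account that links several components merges them.
        for node, existing in list(component_of.items()):
            if existing in neighbor_components:
                component_of[node] = component
    else:
        # Assumption: a fully new account starts its own component.
        component = new_account

    component_of[new_account] = component
    for attr in attribute_ids:
        component_of.setdefault(attr, component)  # newly seen attributes
    return component


# Hypothetical existing state: two separate components.
component_of = {"phone-a": 1, "acct-1": 1, "ip-b": 2, "acct-2": 2}

joined = assign_component("acct-new", ["phone-a"], component_of)
bridged = assign_component("acct-bridge", ["phone-a", "ip-b"], component_of)
solo = assign_component("acct-solo", ["email-z"], component_of)
print(joined, bridged, solo)
```

A full rerun of the weakly connected components algorithm in Neptune Analytics remains the source of truth; an incremental update like this only keeps the property reasonably fresh between runs.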

Clean up

To avoid incurring future costs, make sure to delete the resources that are no longer needed. Resources to remove include:

- Neptune Database cluster

- Neptune Analytics graph

- Neptune notebook

- S3 bucket for import/export

Conclusion

In this post, we showed how you can use the new data mobility improvements in Neptune Analytics to enhance fraud detection.

Neptune Database is optimized for high-throughput graph transactional queries. In many cases, the majority of your workload is made up of graph OLTP queries. But perhaps you want to be able to calculate insights using graph algorithms, or extract all or part of your graph data to be used in downstream data and ML pipelines, such as training a graph neural network model. In these cases, combining the ease of ingesting data into Neptune Analytics, with the power of its built-in graph algorithms and export functionality, means you can provide the necessary data to these additional use cases quickly and efficiently.

Get started with Neptune Analytics today by launching a graph and deploying a Neptune notebook. Sample queries and sample data can be found within the notebook or the accompanying GitHub repo.

About the Authors

Melissa Kwok is a Senior Neptune Specialist Solutions Architect at AWS, where she helps customers of all sizes and verticals build cloud solutions according to best practices. When she’s not at her desk you can find her in the kitchen experimenting with new recipes or reading a cookbook.

Ozan Eken is a Product Manager at AWS, passionate about building cutting-edge Generative AI and Graph Analytics products. With a focus on simplifying complex data challenges, Ozan helps customers unlock deeper insights and accelerate innovation. Outside of work, he enjoys trying new foods, exploring different countries, and watching soccer.
