Video diffusion models have emerged as powerful tools for video generation and physics simulation, showing promise in developing game engines. These generative game engines function as video generation models with action controllability, allowing them to respond to user inputs like keyboard and mouse interactions. A critical challenge in this field is scene generalization – the ability to create new game scenes beyond existing ones. While collecting large-scale action-annotated video datasets would be the most straightforward approach to achieve this, such annotation is prohibitively expensive and impractical for open-domain scenarios. This limitation creates a barrier to developing versatile game engines that can generate diverse and novel game environments.

Recent approaches in video generation and game physics have explored various methodologies, with video diffusion models emerging as a significant advancement. These models have evolved from U-Net to Transformer-based architectures, enabling the generation of more realistic and longer-duration videos. Further, methods like Direct-a-Video, offer basic camera control, while MotionCtrl and CameraCtrl provide more complex camera pose manipulation. In the gaming domain, various projects like DIAMOND, GameNGen, and PlayGen have attempted game-specific implementations but suffer from overfitting to specific games and datasets, showing limited scene generalization capabilities.

Researchers from The University of Hong Kong and Kuaishou Technology have proposed GameFactory, a groundbreaking framework designed to address scene generalization in-game video generation. The framework utilizes pre-trained video diffusion models trained on open-domain video data to enable the creation of entirely new and diverse games. Researchers also developed a multi-phase training strategy that separates game-style learning from action control to overcome the domain gap between open-domain priors and limited game datasets. They have also released GF-Minecraft, a high-quality action-annotated video dataset, and expanded their framework to support autoregressive action-controllable game video generation, enabling the production of unlimited-length interactive game videos.

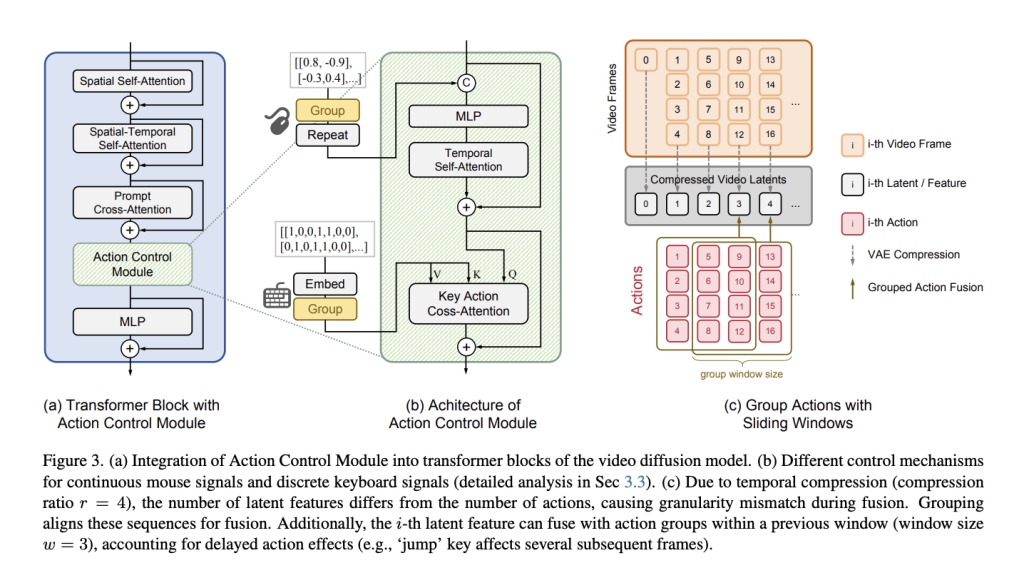

GameFactory employs a complex multi-phase training strategy, to achieve effective scene generalization and action control. The process begins with a pre-trained video diffusion model and proceeds through three phases. In Phase #1, the model uses LoRA adaptation to specialize in the target game domain while preserving most original parameters. Phase #2 focuses exclusively on training the action control module, with pre-trained parameters and LoRA frozen. This separation prevents style-control entanglement and enables the model to focus purely on learning action controls. During Phase #3, the LoRA weights are removed while retaining the action control module parameters allowing the system to generate controlled game videos across diverse open-domain scenarios without being tied to specific game styles.

Evaluation of GameFactory’s performance reveals significant insights into different control mechanisms and their effectiveness. Cross-attention shows superior performance over concatenation for discrete control signals like keyboard inputs, as measured by Flow-MSE metrics. However, concatenation proves more effective for continuous mouse movement signals, likely because cross-attention similarity computation tends to diminish the impact of the control signal’s magnitude. Different methods show comparable performance due to the decoupled style of learning in Phase #1 in terms of style consistency, measured by CLIPSim and FID metrics. The system masters basic atomic actions and complex combined movements across diverse game scenarios.

In this paper, researchers introduced GameFactory which represents a significant advancement in generative game engines, addressing the crucial challenge of scene generalization in-game video generation. The framework shows the feasibility of creating new games through generative interactive videos by effectively utilizing open-domain video data and implementing a novel multi-phase training strategy. While this achievement marks an important milestone, several challenges remain in developing generative game engines, that are fully capable. This includes diverse levels creation, implementation of gameplay mechanics, development of player feedback systems, in-game object manipulation, and real-time game generation. GameFactory establishes a promising foundation for future research in this evolving field.

Check out the Paper and GitHub Page. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter and join our Telegram Channel and LinkedIn Group. Don’t Forget to join our 65k+ ML SubReddit.

Recommend Open-Source Platform: Parlant is a framework that transforms how AI agents make decisions in customer-facing scenarios. (Promoted)

Recommend Open-Source Platform: Parlant is a framework that transforms how AI agents make decisions in customer-facing scenarios. (Promoted)

The post GameFactory: Leveraging Pre-trained Video Models for Creating New Game appeared first on MarkTechPost.

Source: Read MoreÂ