Code retrieval has become essential for developers in modern software development, enabling efficient access to relevant code snippets and documentation. Unlike traditional text retrieval, which effectively handles natural language queries, code retrieval must address unique challenges, such as programming languages’ structural variations, dependencies, and contextual relevance. With tools like GitHub Copilot gaining popularity, advanced code retrieval systems are increasingly vital for enhancing productivity and reducing errors.

Existing retrieval models often struggle to capture programming-specific nuances such as syntax, control flow, and variable dependencies. These limitations hinder tasks like code summarization, debugging, and translation between languages. Text retrieval models have advanced significantly, but they do not meet the specific requirements of code retrieval, which creates demand for specialized models that improve accuracy and efficiency across diverse programming tasks.

Models like CodeBERT, CodeGPT, and UniXcoder have addressed aspects of code retrieval using pre-trained architectures, but their smaller sizes and task-specific focus limit their scalability and versatility. Voyage-Code introduced large-scale capabilities, but its closed-source nature restricts broader adoption. This leaves a need for an open-source, scalable code retrieval system that generalizes across multiple tasks.

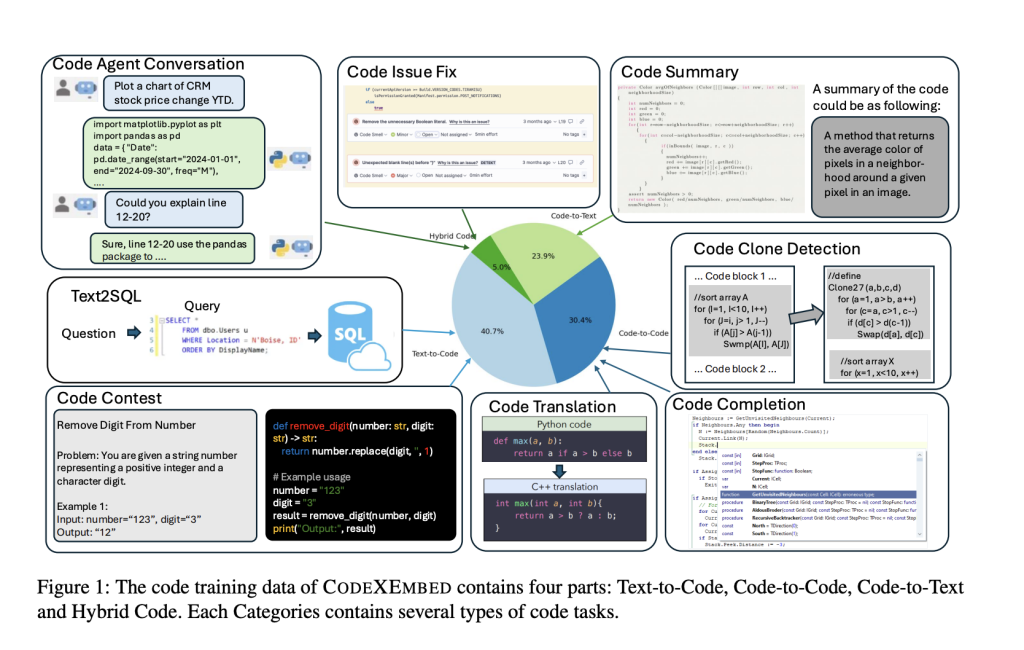

Researchers at Salesforce AI Research introduced CodeXEmbed, a family of open-source embedding models designed for code and text retrieval. The models come in three sizes: 400 million, 2 billion, and 7 billion parameters, with the two smaller models released as SFR-Embedding-Code-400M_R and SFR-Embedding-Code-2B_R. CodeXEmbed’s training pipeline covers 12 programming languages and transforms five distinct code retrieval categories into a unified framework. By supporting diverse tasks such as text-to-code, code-to-text, and hybrid retrieval, the models cover a wider range of retrieval scenarios than earlier task-specific systems.

CodeXEmbed casts code-related tasks into a unified query-and-answer framework, which lets one model serve several scenarios. Text-to-code retrieval maps natural language queries to relevant code snippets, supporting tasks like code generation and debugging. Code-to-text retrieval maps code to explanations and summaries, supporting documentation and knowledge sharing. Hybrid retrieval combines text and code data to handle complex queries that need both technical and descriptive context. Training uses a contrastive loss to align each query with its answer while reducing the influence of irrelevant data. Low-rank adaptation and token pooling improve efficiency without sacrificing performance.
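To make the contrastive objective concrete, here is a minimal sketch of one common formulation: an InfoNCE-style loss with in-batch negatives. The article only says that a contrastive loss is used, so the in-batch negatives, the cosine similarity, the `temperature` value, and all function names below are assumptions for illustration, not CodeXEmbed’s actual training code.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length so dot products equal cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def in_batch_contrastive_loss(query_vecs, answer_vecs, temperature=0.05):
    """InfoNCE-style loss (assumed formulation).

    Answer i is the positive for query i; every other answer in the
    batch is treated as a negative for that query.
    """
    queries = [l2_normalize(q) for q in query_vecs]
    answers = [l2_normalize(a) for a in answer_vecs]
    total = 0.0
    for i, q in enumerate(queries):
        # Temperature-scaled cosine similarity to every answer in the batch.
        logits = [sum(x * y for x, y in zip(q, a)) / temperature for a in answers]
        # Numerically stable log-sum-exp over the batch.
        top = max(logits)
        log_denominator = top + math.log(sum(math.exp(l - top) for l in logits))
        # Cross-entropy with the matching answer as the target class.
        total += log_denominator - logits[i]
    return total / len(queries)


# Toy 2-dimensional "embeddings" (made-up values, for illustration only).
toy_queries = [[1.0, 0.0], [0.0, 1.0]]
aligned_answers = [[0.9, 0.1], [0.1, 0.9]]
swapped_answers = [[0.1, 0.9], [0.9, 0.1]]

loss_aligned = in_batch_contrastive_loss(toy_queries, aligned_answers)
loss_swapped = in_batch_contrastive_loss(toy_queries, swapped_answers)
print(loss_aligned < loss_swapped)
```

The loss is small when each query is closest to its own answer and grows when the pairs are swapped, which is the alignment behaviour the paragraph above describes.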

CodeXEmbed was evaluated across several benchmarks. On the CoIR benchmark, a comprehensive code retrieval evaluation dataset covering 10 subsets and over 2 million entries, the 7-billion parameter model achieved a performance improvement of more than 20% over the previous state-of-the-art Voyage-Code model. Notably, the 400-million and 2-billion parameter models also outperformed Voyage-Code, showing that the approach holds up across model sizes. CodeXEmbed also performed well on text retrieval, with the 7-billion parameter model achieving an average score of 60 on the BEIR benchmark, a suite of 15 datasets covering diverse retrieval tasks such as question answering and fact-checking.
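The article does not name the metric behind these scores. Retrieval benchmarks such as BEIR conventionally report NDCG@10, so the following is a minimal sketch of that metric under the assumption that it is the one in use. The function names are illustrative, and the sketch assumes the ranked list contains every judged document, so the ideal ordering can be derived from the list itself.

```python
import math


def dcg_at_k(relevances, k=10):
    """Discounted cumulative gain: relevance discounted by log2 of the rank."""
    return sum(rel / math.log2(rank + 2) for rank, rel in enumerate(relevances[:k]))


def ndcg_at_k(ranked_relevances, k=10):
    """DCG of the system ranking divided by the DCG of the ideal ranking.

    Assumes ranked_relevances lists the relevance label of every judged
    document in the order the retriever returned them.
    """
    ideal_dcg = dcg_at_k(sorted(ranked_relevances, reverse=True), k)
    if ideal_dcg == 0:
        return 0.0
    return dcg_at_k(ranked_relevances, k) / ideal_dcg


perfect_ranking = ndcg_at_k([1, 0, 0])   # relevant document returned first
late_hit = ndcg_at_k([0, 1])             # relevant document returned second
print(perfect_ranking, late_hit)
```

A score averaged over queries and then over datasets in this way would land on a 0 to 1 scale, which benchmarks usually report multiplied by 100.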

Beyond standalone retrieval, the models can improve end-to-end retrieval-augmented generation (RAG) systems. When applied to repository-level tasks like code completion and issue resolution, the 7-billion parameter model achieved notable results on RepoEval and SWE-Bench-Lite. On RepoEval, which focuses on repository-level code completion, top-1 accuracy improved when the model retrieved contextually relevant snippets. On SWE-Bench-Lite, a curated dataset for GitHub issue resolution, CodeXEmbed outperformed traditional retrieval systems.
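The retrieval step of such a RAG pipeline can be sketched as follows: embed the query and the candidate snippets, rank by cosine similarity, and place the top results in the generator’s prompt. Everything here is an assumption for illustration. In particular, `toy_embed` is a bag-of-words stand-in so the example runs without any model download; it is not CodeXEmbed, and the prompt layout in `build_prompt` is hypothetical.

```python
import math
import re
from collections import Counter


def toy_embed(text):
    """Stand-in embedder (NOT CodeXEmbed): a sparse bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, snippets, top_k=2):
    """Return the top_k snippets most similar to the query."""
    query_vec = toy_embed(query)
    ranked = sorted(snippets, key=lambda s: cosine(query_vec, toy_embed(s)), reverse=True)
    return ranked[:top_k]


def build_prompt(query, retrieved):
    """Assemble a generator prompt from retrieved context (hypothetical layout)."""
    context = "\n\n".join(retrieved)
    return f"Context:\n{context}\n\nTask:\n{query}"


snippets = [
    "def read_json(path): return json.load(open(path))",
    "def add(a, b): return a + b",
    "def write_json(path, data): json.dump(data, open(path, 'w'))",
]
query = "read json file from path"
retrieved = retrieve(query, snippets)
prompt = build_prompt(query, retrieved)
print(prompt)
```

In a real system the stand-in embedder would be replaced by a learned embedding model, and the snippet vectors would be precomputed and stored in an index instead of being recomputed per query.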

Key takeaways from the research highlight the contributions and implications of CodeXEmbed in advancing code retrieval:

- The 7-billion parameter model achieved state-of-the-art performance, with over 20% improvement on the CoIR benchmark and competitive results on BEIR. It demonstrated versatility across code and text tasks.

- The 400-million and 2-billion parameter models offer practical alternatives for environments where computational resources are limited.

- The models address a broad spectrum of code-related applications by unifying 12 programming languages and five retrieval categories.

- Unlike closed systems such as Voyage-Code, CodeXEmbed promotes community-driven research and innovation.

- Integration with retrieval-augmented generation systems improves outcomes for tasks like code completion and issue resolution.

- Using contrastive loss and token pooling optimizes retrieval accuracy and model adaptability.

In conclusion, Salesforce’s introduction of the CodeXEmbed family advances code retrieval. The models combine versatility and scalability, achieving state-of-the-art performance on the CoIR benchmark and performing well on text retrieval tasks. The unified multilingual, multi-task framework, which supports 12 programming languages, makes CodeXEmbed a useful tool for developers and researchers. Its open-source availability encourages community-driven innovation while bridging the gap between natural language and code retrieval.

Check out the Paper, 400M Model, and 2B Model. All credit for this research goes to the researchers of this project.

The post Salesforce AI Research Introduced CodeXEmbed (SFR-Embedding-Code): A Code Retrieval Model Family Achieving #1 Rank on CoIR Benchmark and Supporting 12 Programming Languages appeared first on MarkTechPost.
