Speech processing systems often struggle to deliver clear audio in noisy environments, a challenge that affects applications such as hearing aids, automatic speech recognition (ASR), and speaker verification. Conventional single-channel speech enhancement (SE) systems rely on neural network architectures such as LSTMs, CNNs, and GANs. More recent attention-based models such as Conformers are powerful, but they require extensive computational resources and large training datasets, which can be impractical for resource-constrained applications. These constraints highlight the need for scalable and efficient alternatives.

Introducing xLSTM-SENet

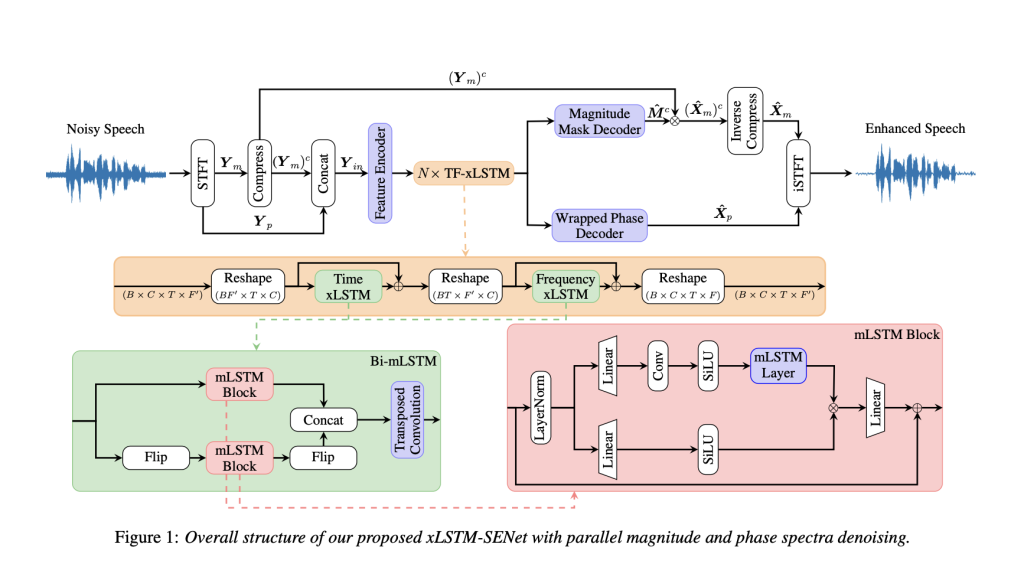

To address these challenges, researchers from Aalborg University and Oticon A/S developed xLSTM-SENet, the first xLSTM-based single-channel SE system. It builds on the Extended Long Short-Term Memory (xLSTM) architecture, which extends traditional LSTMs with exponential gating and a matrix memory. These changes address two limitations of standard LSTMs: restricted storage capacity and limited parallelizability. By integrating xLSTM into the MP-SENet framework, the new system enhances both the magnitude and the phase spectra of noisy speech.
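
To make "exponential gating and matrix memory" more concrete, here is a minimal single-head mLSTM update in plain Python, written from the update rules in the original xLSTM paper as we understand them. It is an illustrative sketch, not the xLSTM-SENet code: the learned projections that would produce the query, key, value, and gate pre-activations are omitted (they are passed in directly), the key scaling and the log-domain stabilizer used in practice are left out, and the function name `mlstm_step` is hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def mlstm_step(
    C: Matrix,                    # d x d matrix memory from the previous step
    n: Vector,                    # d-dim normalizer state from the previous step
    q: Vector, k: Vector, v: Vector,  # query, key, value for this step
    i_pre: float, f_pre: float,   # scalar input / forget gate pre-activations
    o_pre: Vector,                # output gate pre-activations (d-dim)
) -> Tuple[Matrix, Vector, Vector]:
    """One unstabilized mLSTM update (single head); a sketch, not production code."""
    d = len(q)
    i_gate = math.exp(i_pre)   # exponential input gate
    f_gate = sigmoid(f_pre)    # sigmoid forget gate (the paper also allows exp)

    # Matrix memory: C_t = f * C_{t-1} + i * v k^T (outer-product update)
    C_new = [[f_gate * C[r][c] + i_gate * v[r] * k[c] for c in range(d)]
             for r in range(d)]
    # Normalizer: n_t = f * n_{t-1} + i * k
    n_new = [f_gate * n[c] + i_gate * k[c] for c in range(d)]

    # Retrieval: h~ = C_t q / max(|n_t . q|, 1)
    denom = max(abs(sum(n_new[c] * q[c] for c in range(d))), 1.0)
    h_tilde = [sum(C_new[r][c] * q[c] for c in range(d)) / denom
               for r in range(d)]

    # Output gate scales the retrieved vector element-wise
    h = [sigmoid(o_pre[r]) * h_tilde[r] for r in range(d)]
    return C_new, n_new, h


# Usage: store two values under orthogonal keys, then query each key.
C0, n0 = [[0.0, 0.0], [0.0, 0.0]], [0.0, 0.0]
C1, n1, h1 = mlstm_step(C0, n0, [1.0, 0.0], [1.0, 0.0], [2.0, 3.0], 0.0, 0.0, [0.0, 0.0])
C2, n2, h2 = mlstm_step(C1, n1, [0.0, 1.0], [0.0, 1.0], [5.0, 7.0], 0.0, 0.0, [0.0, 0.0])
print(h1, h2)
```

Because the memory is a d x d matrix written with an outer product, several key-value pairs can coexist in one state, and querying with a stored key retrieves its value. The forget gate decays older entries rather than overwriting them outright.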

Technical Overview and Advantages

xLSTM-SENet uses a time-frequency (TF) domain encoder-decoder structure. At its core are TF-xLSTM blocks, which apply mLSTM layers along both the time and the frequency axes to capture temporal and spectral dependencies. Unlike traditional LSTMs, mLSTMs use exponential gating, which gives finer control over how stored information is revised, and a matrix-based memory with greater storage capacity. The layers are bidirectional, so the model can draw on context from both past and future frames. Separate magnitude and phase decoders then reconstruct the two spectra, which contributes to improved speech quality and intelligibility. These design choices are intended to make xLSTM-SENet efficient and suitable for devices with constrained computational resources.
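
The idea of a bidirectional sequence layer applied along time and then along frequency can be sketched in a few lines. In this sketch every name is hypothetical and several details are assumptions: `leaky_scan` is a simple causal moving average standing in for an mLSTM layer, the two directions are merged by averaging, each axis pass has a residual connection, and each TF bin holds a single scalar instead of a channel vector.

```python
from typing import Callable, List

Seq = List[float]
Grid = List[List[float]]  # grid[t][f]: one scalar feature per frame t and frequency bin f


def leaky_scan(seq: Seq, alpha: float = 0.5) -> Seq:
    """Causal stand-in for a sequence layer: h_t = alpha * h_{t-1} + (1 - alpha) * x_t."""
    out, h = [], 0.0
    for x in seq:
        h = alpha * h + (1.0 - alpha) * x
        out.append(h)
    return out


def bidirectional(layer: Callable[[Seq], Seq], seq: Seq) -> Seq:
    """Run the layer forward and on the reversed sequence, then average the two passes."""
    fwd = layer(seq)
    bwd = layer(seq[::-1])[::-1]  # re-reverse so index i lines up with position i
    return [(a + b) / 2.0 for a, b in zip(fwd, bwd)]


def tf_block(grid: Grid, layer: Callable[[Seq], Seq]) -> Grid:
    """Hypothetical TF block: bidirectional pass over time, then over frequency, each with a residual."""
    T, F = len(grid), len(grid[0])
    out = [row[:] for row in grid]
    # Time axis: each frequency bin is a sequence over frames.
    for f in range(F):
        y = bidirectional(layer, [out[t][f] for t in range(T)])
        for t in range(T):
            out[t][f] += y[t]
    # Frequency axis: each frame is a sequence over frequency bins.
    for t in range(T):
        y = bidirectional(layer, out[t])
        out[t] = [x + dy for x, dy in zip(out[t], y)]
    return out


# Usage: an impulse in the middle frame spreads to earlier AND later frames.
impulse = [[0.0], [0.0], [1.0], [0.0], [0.0]]  # T=5 frames, F=1 bin
print(leaky_scan([0.0, 0.0, 1.0, 0.0, 0.0]))  # causal: earlier frames stay 0
print(tf_block(impulse, leaky_scan))
```

A causal layer alone leaves the frames before the impulse untouched, while the bidirectional version propagates information in both directions, which is the kind of past-and-future context the bidirectional design exploits. Averaging keeps the feature width unchanged so the residual addition needs no projection; an implementation with channel features would more likely concatenate the two directions and project them back.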

Performance and Findings

Evaluations on the VoiceBank+DEMAND dataset highlight the effectiveness of xLSTM-SENet. The system matches or exceeds state-of-the-art models such as SEMamba and MP-SENet: it reaches a Perceptual Evaluation of Speech Quality (PESQ) score of 3.48 and a Short-Time Objective Intelligibility (STOI) score of 0.96, and it also shows notable improvements on the composite metrics CSIG, CBAK, and COVL. Ablation studies underscored the importance of exponential gating and bidirectionality for this performance. Although xLSTM-SENet requires longer training times than some attention-based models, its results show that xLSTM is a competitive alternative for speech enhancement.

Conclusion

xLSTM-SENet offers a well-founded response to the challenges of single-channel speech enhancement. By leveraging the xLSTM architecture, the system balances scalability and efficiency with strong performance. This work advances speech enhancement technology and opens the door to real-world applications such as hearing aids and speech recognition systems. As these techniques continue to evolve, they promise to make high-quality speech processing more accessible and practical across diverse needs.

Check out the Paper. All credit for this research goes to the researchers of this project.

Recommend Open-Source Platform: Parlant is a framework that transforms how AI agents make decisions in customer-facing scenarios. (Promoted)

The post Redefining Single-Channel Speech Enhancement: The xLSTM-SENet Approach appeared first on MarkTechPost.
