- A Brief Overview of Multimodal AI

- How do Multimodal AI Systems Process Data?

- Multimodal Changing the AI Landscape

- 5 Business Use Cases of Multimodal AI

- How can Tx Assist with AI Implementation?

- Summary

The AI ecosystem is evolving rapidly, and at the forefront is a technology that is changing how humans interact with machines: multimodal AI. On September 25, 2024, Meta launched its latest LLM series, Llama 3.2, which features multimodal capabilities to process visual and text-based information together. This marks a significant step toward AI that can handle more complex prompts. Other AI players, such as OpenAI and Google DeepMind, are also investing heavily in multimodal AI systems. The goal is to improve user interactions and content outputs across modalities.

Now the question is, how will multimodal AI change the AI landscape, and how can businesses leverage these systems for their success?

A Brief Overview of Multimodal AI

Multimodal AI combines multiple modes, or data types, to generate more accurate insights about real-world problems. Its ML models process and integrate information from various modalities, including text, video, images, and audio. The primary difference between multimodal AI and traditional single-modal AI is the type of data they handle: a single-modal system works with one data type, while multimodal AI integrates and analyzes several forms of input to produce more comprehensive and robust outputs.

For instance, Google’s multimodal AI, Gemini, can analyze a photo of cookies and generate a recipe as text output, and vice versa. Multimodal capabilities also make GenAI more valuable and robust by letting it draw on multiple inputs to generate outputs. DALL-E, for instance, was OpenAI’s early multimodal implementation of its GPT model, and GPT-4o later brought multimodal features to ChatGPT.

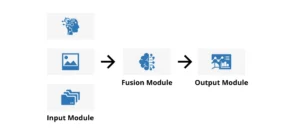

Multimodal AI mainly consists of three primary components:

| Module | Description | Examples/Techniques |
| --- | --- | --- |
| Input Module | Handles and processes multiple types of data inputs, similar to a sensory system. | Gathers incoming data such as audio, text, and images. |
| Fusion Module | Combines, categorizes, and aligns data from different modalities. | Fusion techniques: early, intermediate, and late fusion. |
| Output Module | Generates the final result from the fused data, tailored to the task and system design. | Numerical predictions, text, image, video, and audio outputs, multi-class choices, and prompts for automation. |

How do Multimodal AI Systems Process Data?

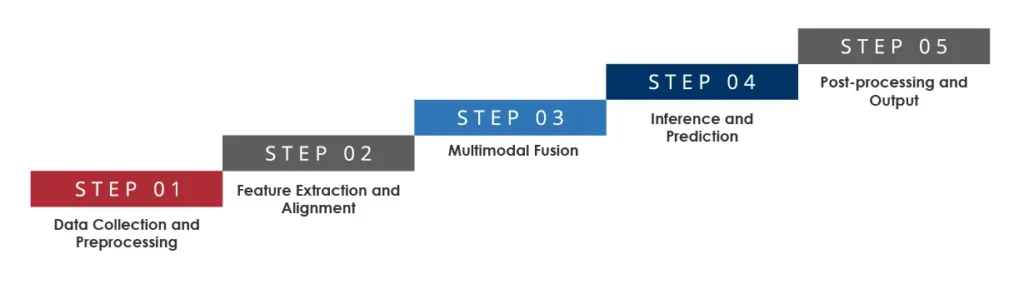

Here is how a multimodal AI system integrates and processes different data types, step by step:

Step 1: Data Collection and Preprocessing:

Collect data from multiple sources (text, images, audio, or video) and preprocess it to ensure consistency across modalities, for example by cleaning and tokenizing text or converting audio into spectrograms.
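As a rough illustration of this step, the sketch below cleans and tokenizes a text caption and rescales image pixels to a common range. The function names `preprocess_text` and `normalize_pixels` are hypothetical, and the tiny nested list stands in for a real grayscale image; production pipelines typically rely on dedicated tokenizers and image libraries instead.

```python
import re


def preprocess_text(text: str) -> list[str]:
    """Lowercase the text, replace punctuation with spaces, and split into tokens."""
    cleaned = re.sub(r"[^a-z0-9\s]", " ", text.lower())
    return cleaned.split()


def normalize_pixels(pixels: list[list[int]]) -> list[list[float]]:
    """Scale 8-bit grayscale pixel values from [0, 255] to [0.0, 1.0]."""
    return [[value / 255.0 for value in row] for row in pixels]


# Hypothetical inputs: a caption and a 2x2 grayscale image.
tokens = preprocess_text("A Plate of Chocolate-Chip Cookies!")
image = normalize_pixels([[0, 255], [51, 102]])

print(tokens)
print(image)
```

Bringing every modality into a predictable shape and value range at this stage keeps the later encoders from having to handle formatting differences themselves.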

Step 2: Feature Extraction and Alignment:

Use unimodal encoders to extract features from each modality, such as NLP techniques for text and convolutional neural networks (CNNs) for images. Then apply techniques such as cross-modal attention and embeddings so that features from different modalities correspond to the same concepts.
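To make cross-modal attention more concrete, here is a minimal sketch in plain Python, assuming the unimodal encoders have already produced feature vectors. Each text token (a query) scores every image region (a key) with a scaled dot product, and the softmax of those scores decides how much of each region's value vector the token receives. All vectors are made-up toy values, and `cross_modal_attention` is an illustrative name, not a library function.

```python
import math


def softmax(scores):
    """Convert raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross_modal_attention(text_queries, image_keys, image_values):
    """For each text token, return a weighted mix of image-region features."""
    scale = math.sqrt(len(image_keys[0]))
    attended = []
    for query in text_queries:
        weights = softmax([dot(query, key) / scale for key in image_keys])
        mixed = [
            sum(w * value[i] for w, value in zip(weights, image_values))
            for i in range(len(image_values[0]))
        ]
        attended.append(mixed)
    return attended


# Toy features: two text tokens and two image regions (all values hypothetical).
text_queries = [[1.0, 0.0], [0.0, 1.0]]
image_keys = [[4.0, 0.0], [0.0, 4.0]]
image_values = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]

attended = cross_modal_attention(text_queries, image_keys, image_values)
print(attended)
```

In this toy setup the first token draws mostly on the first image region and the second token on the second, which is the kind of correspondence the alignment step aims for.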

Step 3: Multimodal Fusion:

Integrate the aligned features from each modality using early, intermediate, or late fusion to produce a unified representation for comprehensive processing.
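The difference between two of these fusion styles can be sketched in a few lines, under simplifying assumptions. Early fusion is shown here as plain concatenation of feature vectors before any shared model sees them, and late fusion as a weighted average of class probabilities that each modality's model produced on its own. Intermediate fusion, which merges representations inside a network's hidden layers, is omitted. The function names and numbers are hypothetical.

```python
def early_fusion(text_features, image_features):
    """Early fusion: concatenate feature vectors into one joint vector."""
    return text_features + image_features


def late_fusion(text_probs, image_probs, text_weight=0.5):
    """Late fusion: weighted average of per-modality class probabilities."""
    image_weight = 1.0 - text_weight
    return [
        text_weight * t + image_weight * i
        for t, i in zip(text_probs, image_probs)
    ]


# Hypothetical feature vectors from a text encoder and an image encoder.
fused_features = early_fusion([0.2, 0.7], [0.9, 0.1, 0.4])

# Hypothetical class probabilities predicted separately by each modality.
fused_probs = late_fusion([0.8, 0.2], [0.6, 0.4], text_weight=0.5)

print(fused_features)
print(fused_probs)
```

Early fusion lets a single model learn interactions between modalities, while late fusion keeps the per-modality models independent and is easier to apply when one input is missing.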

Step 4: Inference and Prediction:

Use the trained model to infer results from multimodal inputs. The output can be text, video, an image, or another format, depending on the application.
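For a classification task, inference can be as simple as passing the fused representation through a linear layer and a softmax. The sketch below assumes exactly that; the weights are hand-picked for illustration rather than trained, and `predict`, the labels, and the input vector are all hypothetical.

```python
import math


def predict(fused, weights, biases, labels):
    """Score each class with a linear layer, then apply softmax."""
    logits = [
        sum(w * x for w, x in zip(row, fused)) + b
        for row, b in zip(weights, biases)
    ]
    peak = max(logits)
    exps = [math.exp(logit - peak) for logit in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    best = max(range(len(labels)), key=probs.__getitem__)
    return labels[best], probs


# Hand-picked (untrained) parameters, one row of weights per class.
labels = ["cookie", "not_cookie"]
weights = [[1.0, 0.5, -0.5], [-1.0, -0.5, 0.5]]
biases = [0.0, 0.0]

label, probs = predict([0.9, 0.8, 0.1], weights, biases, labels)
print(label, probs)
```

A generative system would replace this classification head with a decoder that produces text, images, or audio, but the flow from fused representation to output is the same.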

Step 5: Post-processing and Output:

Process the result into a form that is easy to interpret for the task at hand, such as generated content or a classification label. Once trained, the model can also process new data and generate accurate outputs.

Multimodal Changing the AI Landscape

Multimodal AI is rapidly reshaping the AI ecosystem by enabling systems to process and integrate varying data types in a unified manner. Here’s how it’s impacting the industry:

Improved UX

It will enhance human-computer interactions by facilitating more natural and intuitive communication. For example, users can combine images, speech, and gestures to interact with AI systems. This will make virtual assistants and chatbots more effective and engaging.

Richer Context Understanding

Multimodal AI integrates multiple data types so that AI systems can understand context better. For example, synchronizing audio with video can enhance real-time comprehension in scenarios such as autonomous driving and video conferencing.

Cross-domain Applications

Multimodal AI will support entertainment, healthcare, education, and many other industries by combining their data into a unified view. For instance, it can analyze patient records alongside medical images to support better diagnoses.

Better Accessibility

Businesses can create more inclusive solutions by leveraging text-to-speech and image recognition technologies. This will help bridge communication gaps.

Optimized Education and Training Programs

In education and training, multimodal AI can personalize learning experiences. AI systems can adapt to individual learning styles, offer text explanations in multiple languages, visualize diagrams, present interactive simulations, and generate audio guides.

5 Business Use Cases of Multimodal AI

Multimodal artificial intelligence can address a broad range of use cases, making it a valuable asset for modern organizations. Some common multimodal AI use cases include:

Improved Diagnostics in Healthcare

The healthcare industry processes a massive amount of data from multiple sources, such as patient records, lab results, and medical imaging. Multimodal AI improves medical diagnosis by combining these diverse datasets into a unified view, helping healthcare providers make more accurate diagnoses and build relevant treatment plans. Its applications include:

- Virtual Healthcare Assistants

- Advanced Medical Imaging and Diagnostics

- Accelerated Drug Development Process

- Personalized Treatment Plans

Optimized Robotics Development

Multimodal AI is central to robotics development, as it helps robots interact with real-world elements such as humans, cars, access points, and buildings. It uses data from GPS, cameras, and other sensors to analyze and understand the environment and interact with it more efficiently.

Better Retail Customer Experience

Retailers can deliver a more customer-centric, data-driven, and efficient user experience, enhancing operational efficiency and customer satisfaction. Multimodal AI can also create targeted marketing campaigns by analyzing social media images, voice searches, and user interaction data. In addition, it can automatically track stock levels and forecast demand using sales history and recent trends.

Advanced AR and VR Technology

Multimodal AI optimizes AR and VR by delivering immersive, intuitive, and interactive experiences. In AR technology, it integrates sensor, visual, and spatial data for contextual awareness to enable interactions via touch, voice, and gesture. This also improves AR’s object recognition capability. In VR technology, it integrates user feedback with voice and visual data to develop a dynamic environment, personalize the experience, and improve user avatar quality.

Upscale Autonomous Vehicle Technology

Autonomous vehicles, or self-driving cars, use multimodal AI to analyze data from different sources, such as LiDAR, cameras, other sensors, and GPS, and build a model of their surroundings. This environmental perception helps ensure safe navigation on the road.
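One simple way to combine readings from several sensors is inverse-variance weighting, where noisier sensors count for less. The sketch below applies it to two distance estimates for the same obstacle. It illustrates the general idea of sensor fusion rather than how any particular vehicle stack works, and the sensor values, variances, and the `fuse_estimates` name are all hypothetical.

```python
def fuse_estimates(readings):
    """Combine (value, variance) pairs by inverse-variance weighting.

    Returns the fused value and the variance of the fused estimate.
    """
    weights = [1.0 / variance for _, variance in readings]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, readings)) / total
    return value, 1.0 / total


# Hypothetical distance to an obstacle in meters, as (estimate, variance).
lidar = (20.0, 0.25)   # assumed to be the more precise sensor here
camera = (22.0, 1.0)   # assumed to be the noisier sensor here

distance, variance = fuse_estimates([lidar, camera])
print(distance, variance)
```

The fused estimate sits closer to the more precise reading, and its variance is lower than that of either sensor alone, which is the practical benefit of combining modalities.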

How can TestingXperts (Tx) Assist with AI Implementation?

Although multimodal AI’s potential is promising, implementing these systems is a challenge. Tx can support your journey with expert AI consulting and implementation services. Our expertise focuses on strategic integration and operational efficiency across multiple industries, and we offer tailored solutions that help you deliver measurable outcomes. Our AI consulting services cover the following:

- Develop an AI implementation strategy by evaluating your AI readiness and creating a successful adoption roadmap.

- Assist in AI model selection and customize models to meet your unique requirements and operational contexts.

- Leverage AI tools to provide continuous testing services that automatically adapt to changing app environments and reduce manual effort.

- Incorporate advanced analytics into app development for real-time data processing and decision-making.

- Ensure data compliance with regulatory standards and guide teams in preparing high-quality datasets.

Summary

Multimodal AI is changing how humans interact with machines by integrating diverse data types to improve AI functionality. Combining data inputs enhances context understanding, UX, and cross-domain applications. From healthcare diagnostics to autonomous vehicles, multimodal AI is transforming industries by enabling personalized experiences, accurate insights, and efficient operations. Tx supports AI implementation through tailored consulting services, ensuring strategic integration, compliance, and operational excellence. With expertise in model selection and continuous testing, we can help you unlock the full potential of multimodal AI systems. To learn how we can help, contact our experts now.

The post The Future of AI: How Multimodal AI is Driving Innovation first appeared on TestingXperts.
