This post was co-written with Joe Ellis, a Backend Engineer at Monzo with more than 5 years of experience in the field. Joe works within the Platform collective at Monzo, with a specific focus on building stateful systems that facilitate development of secure, scalable, reliable, and performant production software.

Founded in 2015, Monzo is the leading digital bank in the UK and now serves more than 11 million personal customers and more than 500,000 businesses. With a mission to make money work for everyone, Monzo is known for pioneering industry-first features like the Gambling Block and Call Status and co-creating with its customers to deliver products and tools that put them in control of their finances.

At Monzo, we use Amazon Keyspaces (for Apache Cassandra) as our main operational database. Today, we store over 350 TB of data across more than 2,000 tables in Amazon Keyspaces, handling over 2,000,000 reads and 100,000 writes per second at peak. Amazon Keyspaces has allowed us to focus our engineering efforts on our customers by offering high performance, elasticity, and scalability without ongoing engineering time or maintenance costs.

In this post, we share how we used a different mechanism for row expiry than the Time to Live setting in Amazon Keyspaces to reduce our operating costs for an index while preserving its semantics.

Our use case

One of our Amazon Keyspaces tables indexes events on our platform over time. This allows us to perform event lookups for a time range in our operational domain. There’s a very large volume of events flowing through our platform, and we don’t need to index them all indefinitely. Ideally, we want to keep events in our index table for a period of time (6 weeks, specifically) before removing them to lower our storage costs.

One way of doing this is to use the TTL feature in Amazon Keyspaces. With this, you can set an expiry date at insert time for individual rows in a table. After this time has elapsed, the row will be filtered from query results, and within 10 days, be automatically removed from the backing store.

We used this feature for many years, and it worked well. But as the bank grew, onboarding new users and introducing new products, the number of events flowing through the platform increased substantially. Compared to earlier on, we now store many more events in our index table—so many, in fact, that using TTLs became expensive for us. Amazon Keyspaces uses a per-row billing model for TTLs, so the cost effectively scales with write throughput to our index table.

In the interest of reducing our running costs, we recently embarked on a project to eliminate TTLs from the event index table. With the help of AWS, we identified an alternative mechanism for row expiry—bulk deletes—that allowed us to maintain the semantics of our index table without incurring the per-row TTL costs.

Since we launched our solution, Amazon Keyspaces has announced a 75% price reduction for TTL. Although this lowers TTL costs, you can still use the design pattern described here to save even more.

Solution overview

The key insight is that dropping tables in Amazon Keyspaces is completely free. If your data is shaped in such a way that it can be partitioned across multiple tables, you can use this to expire rows in table-sized batches. Our index table stores time series data, which works particularly well for this approach. Instead of storing all rows in a single time series table, partitioned by an index ID and timestamp, we can distribute these rows across multiple tables, with each table representing a bucket of time. By dynamically dropping old tables (containing only old rows) and creating new tables as needed, we can expire old data without using TTL deletes.

Naturally, this introduces some code complexity; we had to write some table management code for creating, dropping, and scaling tables, as well as sharding code for routing queries. But for our purposes, the cost savings are worthwhile.

How our original index table worked

The following schema closely resembles what we used for our original index (before removing TTLs):

The crucial column here is timestamp, which represents the time an event was published. Aside from that, the structure of the primary key is what really matters. The partition key comprises the following:

- indexid – An ID that we want to index across

- bucket – Derived by truncating the value of our timestamp column with some fixed unit of time (for example, 1 day) and grouping rows with similar timestamps into the same partition

The clustering key (which defines a sorting order within a partition) comprises the following:

- timestamp – As previously mentioned, this represents the time an event was published on the platform

- eventid – The unique ID of an event

With this schema, it’s intuitive to think of there being bucket boundaries in time. When writing a row, we truncate the value of its time column down to the nearest bucket boundary, and use that to determine which partition we write to. So, we derive bucket—the nearest (rounding down) bucket boundary—from timestamp. That means, for a fixed index ID, the corresponding events are distributed across multiple partitions depending on the event timestamp. Events with similar timestamps are grouped into the same partition.

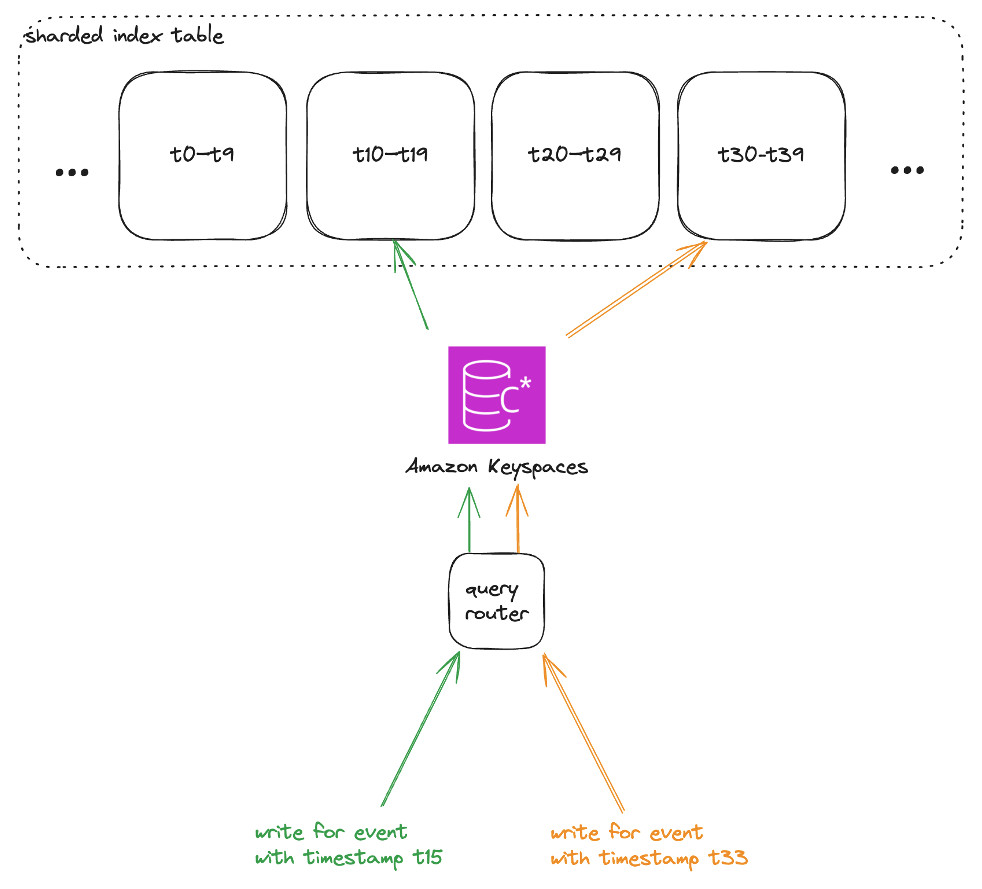
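As a minimal sketch of the bucketing described above, the following Python derives the partition key for an event. The 1-day bucket size is the example value mentioned earlier, and the function and constant names are hypothetical, not taken from Monzo's code.

```python
from datetime import datetime, timezone

# Assumed partition bucket size: 1 day (the example unit given in the text).
BUCKET_SECONDS = 24 * 60 * 60


def partition_bucket(event_time: datetime) -> int:
    """Round an event time down to its bucket boundary (UNIX seconds)."""
    ts = int(event_time.timestamp())
    return ts - (ts % BUCKET_SECONDS)


def partition_key(index_id: str, event_time: datetime) -> tuple[str, int]:
    """Return the (indexid, bucket) pair that selects a partition."""
    return (index_id, partition_bucket(event_time))
```

Two events for the same index ID published on the same UTC day map to the same partition, while an event published the next day lands in a new one.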

Query routing with multiple tables

Similar to how a time series table distributes rows across partitions using a bucketing mechanism, we use a comparable technique to distribute rows across tables. These table shards have the same schema as before—they are also time series tables—but they will only ever house rows whose timestamp falls within a fixed time period.

We have two bucketing criteria: one that decides the table shard a row belongs to based on its time column, and a second that decides which partition a row belongs to within its designated table.

To illustrate, let’s suppose that we set the table bucket size to 1 week. The table create statement looks like the following, where we add a suffix representing the start of the week and remove the default TTL by setting it to 0.
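The original listing is not reproduced here. As a hedged sketch, the following Python builds a statement of the shape described: a table name suffixed with the start of the week, the primary key structure from the earlier section, and `default_time_to_live` set to 0. The column types, the keyspace name, and the helper name are assumptions made for illustration.

```python
def create_shard_statement(keyspace: str, shard_start: int) -> str:
    """Build a CREATE TABLE statement for the shard starting at shard_start.

    Column types are assumed; only the key structure comes from the text.
    """
    return (
        f"CREATE TABLE {keyspace}.events_{shard_start} (\n"
        "    indexid text,\n"
        "    bucket timestamp,\n"
        "    timestamp timestamp,\n"
        "    eventid text,\n"
        "    PRIMARY KEY ((indexid, bucket), timestamp, eventid)\n"
        ") WITH default_time_to_live = 0"
    )


if __name__ == "__main__":
    # "platform" is a placeholder keyspace name.
    print(create_shard_statement("platform", 1719446400))
```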

Write path

The write path consists of the following:

- When a new event is inbound, we take its timestamp and round it down to its bucket boundary for the 1-week bucket size we’ve set.

- The timestamp we get from doing that (for example, 1719446400 as a UNIX timestamp) is used as a suffix for the table that we should write to (for example, events_1719446400). Of course, we would need to make sure this table is created in advance, but an empty table with no storage or throughput has zero ongoing cost.
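The write-path routing above can be sketched in a few lines of Python. The `events` prefix and the 1-week bucket size come from the example in the text; the function names are hypothetical.

```python
WEEK_SECONDS = 7 * 24 * 60 * 60  # table bucket size: 1 week


def shard_start(ts: int) -> int:
    """Round a UNIX timestamp down to the start of its 1-week table bucket."""
    return ts - (ts % WEEK_SECONDS)


def shard_table_name(ts: int, prefix: str = "events") -> str:
    """Name of the table shard that an event with this timestamp is written to."""
    return f"{prefix}_{shard_start(ts)}"
```

Because the UNIX epoch began on a Thursday, buckets computed this way run from Thursday 00:00 UTC to the following Thursday. The example value 1719446400 (27 June 2024, a Thursday) is consistent with that alignment.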

Read path

For a read (which includes a start and end timestamp representing a range over which we want to query), we do the following:

- We round the start and end timestamps down to their respective bucket boundaries.

- We query every table shard within these bucket boundaries (inclusive).

- We collate the results (in order) from each of these table shards.

- We proactively prune out any results whose timestamp field is sufficiently far in the past so as to be considered expired. (This is what we call read-resolved TTLs: the data will still exist in the table shard, and is still physically accessible, but to maintain functional parity with Amazon Keyspaces TTLs, we can resolve expiry times on our end.)
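The read steps above can be sketched as follows. This is an illustration under stated assumptions, not Monzo's implementation: `query_shard` is a hypothetical callable standing in for the per-table range query, and it is assumed to return rows sorted by timestamp and to return nothing for a shard that no longer exists.

```python
from typing import Callable, Iterable, NamedTuple

WEEK_SECONDS = 7 * 24 * 60 * 60
TTL_SECONDS = 6 * WEEK_SECONDS  # 6-week retention, as stated in the text


class Event(NamedTuple):
    timestamp: int  # UNIX seconds
    event_id: str


# Hypothetical signature: (table_name, start_ts, end_ts) -> rows sorted by timestamp.
QueryShard = Callable[[str, int, int], Iterable[Event]]


def shard_starts(start_ts: int, end_ts: int) -> list[int]:
    """Bucket boundaries of every table shard touched by the range (inclusive)."""
    first = start_ts - (start_ts % WEEK_SECONDS)
    last = end_ts - (end_ts % WEEK_SECONDS)
    return list(range(first, last + 1, WEEK_SECONDS))


def read_events(start_ts: int, end_ts: int, now: int,
                query_shard: QueryShard) -> list[Event]:
    """Query each shard in time order and drop rows older than the TTL."""
    results: list[Event] = []
    for start in shard_starts(start_ts, end_ts):
        for event in query_shard(f"events_{start}", start_ts, end_ts):
            if now - event.timestamp < TTL_SECONDS:  # read-resolved TTL
                results.append(event)
    return results
```

Shards cover disjoint, increasing time ranges, so concatenating their sorted results in shard order keeps the combined result sorted without a separate merge step.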

To summarize, this is the same bucketing technique we use within a single time series table, applied one level higher: rows are sharded across tables in addition to being distributed across partitions.

Integrating this into our platform was straightforward—because our database accessors were already packaged behind a DAO layer in the code, we were able to effectively swap out the old implementation with the new without requiring changes to any business logic.

Table lifecycle management

We had to build a solution for creating, dropping, and scaling table shards. We built a table manager for this, which runs periodically on a cron job and performs the following actions:

- Examines which table shards currently exist in Amazon Keyspaces by reading from the API

- Establishes what table shards should exist based on the wall clock time

- Computes the difference between the current and desired state (what needs to change to bring reality in line with our expectations)

- Reconciles the actual state with the desired state with the Amazon Keyspaces API—which can mean creating, deleting, or scaling table shards
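The reconciliation loop above can be sketched as a pure function over table names. The 4-week runway of pre-created shards is an assumed value (the text only says shards are created well in advance), the names are hypothetical, and the calls that list, create, and drop tables are deliberately left out.

```python
WEEK_SECONDS = 7 * 24 * 60 * 60
TTL_SECONDS = 6 * WEEK_SECONDS
RUNWAY_WEEKS = 4  # assumption: how many future shards to keep pre-created
PREFIX = "events_"


def desired_shards(now: int) -> set[str]:
    """Shards that should exist: unexpired past, present, and future runway."""
    cutoff = now - TTL_SECONDS
    oldest = cutoff - (cutoff % WEEK_SECONDS)
    current = now - (now % WEEK_SECONDS)
    newest = current + RUNWAY_WEEKS * WEEK_SECONDS
    return {f"{PREFIX}{s}" for s in range(oldest, newest + 1, WEEK_SECONDS)}


def plan(existing: set[str], desired: set[str]) -> tuple[list[str], list[str]]:
    """Return (tables to create, tables to drop) to reach the desired state."""
    # Only consider tables this manager owns, so unrelated tables are never dropped.
    managed = {name for name in existing if name.startswith(PREFIX)}
    return sorted(desired - managed), sorted(managed - desired)
```

Keeping the diff separate from the code that applies it makes the plan easy to log, review, or dry-run before any table is dropped.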

Creating table shards

In Amazon Keyspaces, new tables are not created automatically as they are used—we must create them ourselves. We do this well in advance, to allow time to address potential issues with our table manager. This means our solution continues to function even if our table manager is completely broken, giving us ample time to investigate and resolve any problems.

Dropping table shards

Table shards in our index that contain only rows whose expiry time has been reached can be dropped to keep our storage costs low. We can infer when this is the case by looking at the time boundaries for each table shard; the upper bound, relative to the current wall clock time, gives the minimum age of any row contained within. If this minimum age exceeds our predefined TTL (6 weeks), every row in the table shard has expired and the shard is ready to be dropped.
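As a small worked sketch of this check: the youngest row a shard can hold sits just below the shard's upper time bound, so comparing that bound with the wall clock is enough to know whether everything inside has expired. The function name is hypothetical, and the 1-week shard size is the example value used earlier.

```python
WEEK_SECONDS = 7 * 24 * 60 * 60
TTL_SECONDS = 6 * WEEK_SECONDS


def is_droppable(shard_start: int, now: int) -> bool:
    """True when even the youngest possible row in the shard has expired."""
    shard_end = shard_start + WEEK_SECONDS  # exclusive upper bound of the shard
    # Every row has timestamp < shard_end, so every row is older than this.
    youngest_possible_age = now - shard_end
    return youngest_possible_age >= TTL_SECONDS
```

With a 1-week shard and a 6-week TTL, a shard becomes droppable 7 weeks after its start boundary.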

Table shard capacity

It was important to get scaling right from a cost-efficiency perspective and to avoid throttling errors on new table shards.

In terms of cost-efficiency, our index table is write-heavy, predictably reaching throughput of up to 25,000 writes per second. Previously, we would just set our singular index table into provisioned capacity mode. With table shards, however, we now have the additional complexity of individually managing scaling modes for multiple table shards.

We can use properties of our data access pattern here. We write events to the index table shortly after they are emitted on the platform, so the event timestamps are close to wall clock time. Consequently, the table shard that corresponds to the present is usually very hot, and should be put into provisioned capacity mode to save money. The other table shards—ones that correspond to times in the past, or ones that are part of our future runway—are much cooler, and can be put into on-demand mode. Our table manager automatically switches the scaling mode of the index’s constituent table shards accordingly, making sure we don’t overspend.
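The mode selection described above can be sketched as a single decision function. The strings `PROVISIONED` and `PAY_PER_REQUEST` are the throughput mode names Amazon Keyspaces uses for provisioned and on-demand capacity; the function name is hypothetical, and applying the chosen mode to the table is out of scope for this sketch.

```python
WEEK_SECONDS = 7 * 24 * 60 * 60


def capacity_mode(shard_start: int, now: int) -> str:
    """Pick a throughput mode for a shard based on the wall clock time."""
    current_start = now - (now % WEEK_SECONDS)
    if shard_start == current_start:
        return "PROVISIONED"      # hot shard: predictable, write-heavy traffic
    return "PAY_PER_REQUEST"      # past shards and future runway: cool
```

A table manager could evaluate this for every shard on each run and change the mode only for shards whose current mode differs from the result.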

To avoid throttling, we pre-warm new table shards by creating them in provisioned capacity mode with our peak load parameters, well ahead of when they start receiving high traffic volume. This signals Amazon Keyspaces to provide higher throughput by creating more storage partitions to meet peak load parameters. When this process is complete, we can put the pre-warmed table shard back into on-demand mode to save money. In Amazon Keyspaces, a table’s partitions remain after this pre-warming process, so the table is ready to go when it’s time for it to become the hot table shard.

Rolling out

Our new sharded table index represents a logically separate system where events are stored. As a result, we needed to decide how to migrate from our old event index table to the new system. At Monzo, we pride ourselves on zero-downtime migrations, and this was no exception.

Our rollout process was as follows:

- We slowly ramped up dual-writing to the new index table in shadow mode to test the suitability and production readiness of the write path. (Because our index table is based on asynchronous event consumption, partial failure when dual-writing was of minimal concern; the event would just be retried.)

- When we were satisfied that writes were working as expected, we started to slowly ramp up reads from the new index table, building up confidence in the read path.

- Of course, some events would only exist in the old index table (because they were written before we had enabled dual-writing). There was therefore a period of time where requests spanning a large time range would entail reading from both the old and new index tables, combining the results together to make things look seamless. This was only temporary, however; eventually, all of the rows in the old index table expired because of their TTLs, which meant we could transition over to the new index table fully.
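The temporary dual-read step can be sketched as a merge that removes duplicates, because any event written during the dual-write period exists in both indexes. Rows are modeled here as `(timestamp, event_id)` tuples, which is an assumption made for illustration, as is the function name.

```python
def merge_reads(old_rows: list[tuple[int, str]],
                new_rows: list[tuple[int, str]]) -> list[tuple[int, str]]:
    """Combine results from the old and new index, deduplicated and in time order."""
    # A dual-written event has the same (timestamp, event_id) in both indexes,
    # so a set union collapses it to a single row.
    return sorted(set(old_rows) | set(new_rows))
```

Sorting tuples orders rows by timestamp first and event ID second, which matches the clustering order of the index.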

Conclusion

By using a different mechanism for row expiry than the TTL feature in Amazon Keyspaces, we were able to drastically reduce our operating costs for our event index while preserving its semantics. This involved splitting up a singular time series table into multiple shards, each for a particular period of time. With this configuration, we are able to bulk-expire rows by dropping entire tables, which is free in Amazon Keyspaces.

We built two principal components: a query router abstraction, responsible for reading and writing across multiple tables while preserving the same semantics as a regular time series table, and a table manager, responsible for the creation, dropping, and scaling of the constituent table shards.

Our sharded index table has been running in our production environment without issue for several months now. As with most technical changes, this project introduced some code complexity. But for us, this was easily justified; we were able to reduce our TTL-related operating costs.

To get started with Amazon Keyspaces and try the approach we shared in this post, head to the CQL editor in the Amazon Keyspaces console, where you can create a new serverless keyspace and table in seconds.

About the authors

Joe Ellis is a Backend Engineer at Monzo with more than 5 years of experience in the field. Joe works within the Platform collective at Monzo, with a specific focus on building stateful systems that facilitate development of secure, scalable, reliable, and performant production software.

Michael Raney is a Principal Specialist Solutions Architect based in New York. He works with customers like Monzo Bank to migrate Cassandra workloads to Amazon Keyspaces. Michael has spent over a decade building distributed systems for high-scale and low-latency stateful applications.
