Multimodal Large Language Models (MLLMs) have shown impressive capabilities in visual understanding. However, they face significant challenges in fine-grained perception tasks such as object detection, which is critical for applications like autonomous driving and robotic navigation. Current models fail to achieve precise detection, as reflected in the low recall of even state-of-the-art systems: Qwen2-VL reaches a recall of only 43.9% on the COCO dataset. This gap stems from an inherent conflict between perception and understanding tasks, and from a lack of datasets that balance the two.

Traditional efforts to incorporate perception into MLLMs usually tokenize bounding box coordinates so that they fit the output format of auto-regressive models. Although these techniques keep detection compatible with understanding tasks, they suffer from cascading errors, ambiguous object prediction order, and quantization inaccuracies in complex images. Other perception frameworks, including retrieval-based designs, have been explored in models such as Groma and Shikra; they change how objects are detected but are not robust enough across diverse real-world tasks. These limitations are compounded by insufficient training datasets, which fail to address the twin requirements of perception and understanding.
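To make the quantization problem concrete, here is a minimal sketch of coordinate tokenization. It assumes a uniform scheme with 1000 bins per axis; the bin count, the function names, and the example box are illustrative assumptions, not details taken from any specific model.

```python
def quantize(coord: float, size: float, bins: int = 1000) -> int:
    """Map a pixel coordinate to one of `bins` discrete coordinate tokens."""
    idx = int(coord / size * bins)
    return min(idx, bins - 1)  # clamp so coord == size stays in range


def dequantize(token: int, size: float, bins: int = 1000) -> float:
    """Map a coordinate token back to the centre of its bin, in pixels."""
    return (token + 0.5) / bins * size


def roundtrip_box(box, width, height, bins=1000):
    """Tokenize a box (x0, y0, x1, y1) and reconstruct it from the tokens."""
    sizes = (width, height, width, height)
    tokens = [quantize(c, s, bins) for c, s in zip(box, sizes)]
    restored = [dequantize(t, s, bins) for t, s in zip(tokens, sizes)]
    return tokens, restored


# Hypothetical box in a 4000 x 3000 image (assumed values for illustration).
box = (1234.4, 567.8, 1301.2, 640.1)
tokens, restored = roundtrip_box(box, width=4000, height=3000, bins=1000)
errors = [abs(a - b) for a, b in zip(box, restored)]
print(tokens)
print(errors)
```

With this scheme the worst-case error per coordinate is half a bin, which is 2 pixels horizontally and 1.5 pixels vertically for a 4000 x 3000 image. The error grows with image resolution, and it matters most for small objects.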

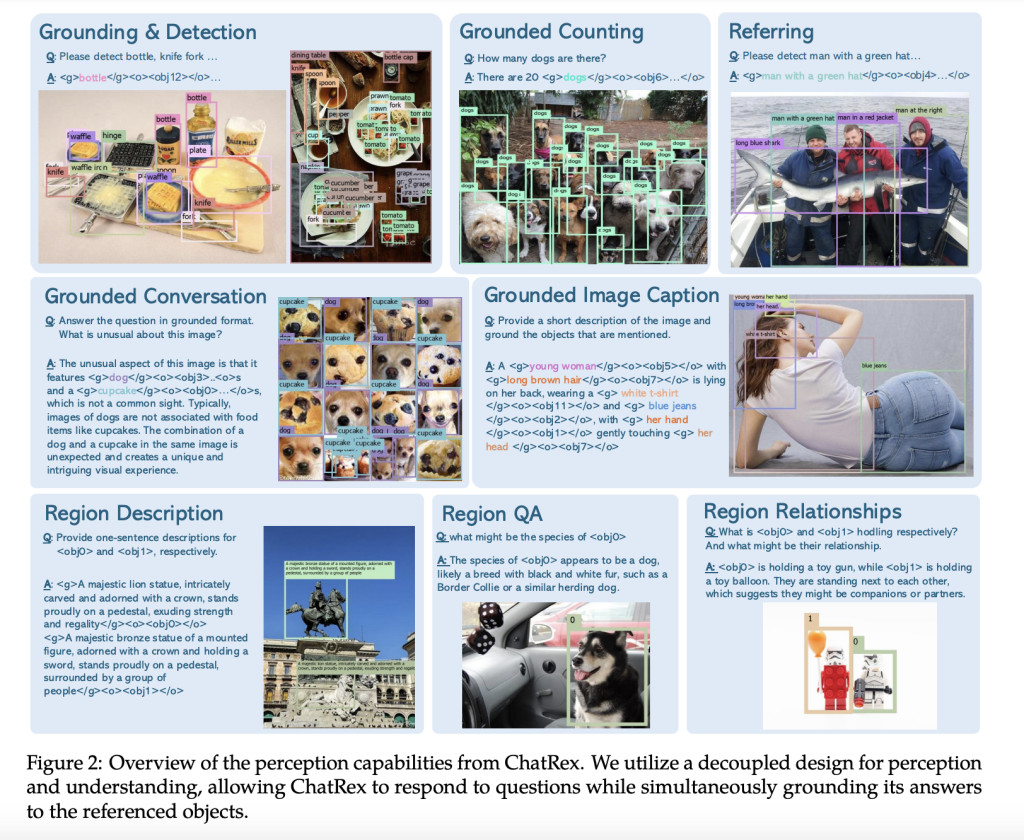

To overcome this challenge, researchers from the International Digital Economy Academy (IDEA) developed ChatRex, an MLLM with a decoupled architecture that strictly separates perception from understanding. ChatRex is built on a retrieval-based framework in which object detection is treated as retrieving bounding box indices rather than predicting coordinates directly. This formulation removes quantization errors and improves detection accuracy. A Universal Proposal Network (UPN) generates both fine-grained and coarse-grained bounding box proposals, which addresses ambiguities in object representation. The architecture also includes a dual-vision encoder that combines high-resolution and low-resolution visual features to make object tokenization more precise. Training relies on the newly developed Rexverse-2M dataset, a large collection of images with multi-granular annotations, which supports balanced training across perception and understanding tasks.
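The retrieval idea can be sketched in a few lines: the language model emits indices into a list of candidate boxes, and the final coordinates come from a lookup rather than from generated numbers. The `<objN>` token format, the `decode_detections` helper, and the example proposals below are hypothetical and used only for illustration; they are not confirmed details of ChatRex.

```python
import re

# Hypothetical index-token format; the real model's vocabulary may differ.
OBJ_TOKEN = re.compile(r"<obj(\d+)>")


def decode_detections(llm_output: str, proposals: list) -> list:
    """Turn index tokens in the model output into boxes by lookup."""
    boxes = []
    for match in OBJ_TOKEN.finditer(llm_output):
        idx = int(match.group(1))
        if 0 <= idx < len(proposals):  # ignore indices with no proposal
            boxes.append(proposals[idx])
    return boxes


# Candidate boxes as a proposal network might supply them (assumed values).
proposals = [
    (12.0, 30.5, 110.2, 240.0),
    (300.1, 42.7, 415.9, 198.3),
    (50.0, 60.0, 70.0, 90.0),
]
llm_output = "dog: <obj1>, <obj2>"
detections = decode_detections(llm_output, proposals)
print(detections)
```

Because the boxes are copied from the proposal list, the language model never has to spell out a coordinate, so its output cannot introduce quantization error. The trade-off is that detection quality is bounded by the quality of the proposals.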

The Universal Proposal Network is based on DETR. It generates robust bounding box proposals at multiple levels of granularity, which mitigates inconsistencies in object labeling across datasets. Because it is trained with both fine-grained and coarse-grained prompts, the UPN can detect objects accurately in different scenarios. The dual-vision encoder encodes visual input compactly and efficiently by combining high-resolution image features with low-resolution representations. The training dataset, Rexverse-2M, contains more than two million annotated images with region descriptions, bounding boxes, and captions, which balances ChatRex's perception against its understanding and contextual analysis.
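One common way to turn a proposal box into an object token is to pool the high-resolution feature map over the region the box covers. The sketch below uses plain average pooling over grid cells; this is a stand-in technique chosen for illustration, and the function name, grid layout, and example values are assumptions rather than the encoder described in the paper.

```python
import math


def pool_box_feature(feature_map, box, image_size):
    """Average the feature vectors of the grid cells overlapped by `box`.

    feature_map: H x W grid of equal-length feature vectors (nested lists)
    box: (x0, y0, x1, y1) in pixels
    image_size: (width, height) in pixels
    """
    grid_h, grid_w = len(feature_map), len(feature_map[0])
    width, height = image_size
    x0, y0, x1, y1 = box
    # Convert pixel coordinates to grid-cell ranges, keeping at least one cell.
    c0 = min(int(x0 / width * grid_w), grid_w - 1)
    r0 = min(int(y0 / height * grid_h), grid_h - 1)
    c1 = max(c0 + 1, min(grid_w, math.ceil(x1 / width * grid_w)))
    r1 = max(r0 + 1, min(grid_h, math.ceil(y1 / height * grid_h)))
    cells = [feature_map[r][c] for r in range(r0, r1) for c in range(c0, c1)]
    dim = len(cells[0])
    return [sum(cell[d] for cell in cells) / len(cells) for d in range(dim)]


# Toy 4 x 4 feature map whose feature at (row, col) is [row, col].
feature_map = [[[float(r), float(c)] for c in range(4)] for r in range(4)]
object_token = pool_box_feature(feature_map, (0, 0, 200, 200), (400, 400))
print(object_token)
```

A finer feature grid gives small boxes more distinct cells to pool over, which is one plausible reason to draw object tokens from high-resolution features while leaving global context to a cheaper low-resolution pass.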

ChatRex performs strongly on both perception and understanding benchmarks and is reported to outperform existing models. In object detection, it achieves higher precision, recall, and mean Average Precision (mAP) than competitors on datasets including COCO and LVIS. In referring object detection, it accurately associates descriptive expressions with the corresponding objects, which demonstrates its ability to handle complex interactions between textual and visual inputs. The system also excels at generating grounded image captions, answering region-specific queries, and holding object-aware conversations. This success stems from its decoupled architecture, its retrieval-based detection strategy, and the broad training enabled by the Rexverse-2M dataset.
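For readers unfamiliar with the detection metrics mentioned here, the following is a simplified sketch of precision and recall at a single IoU threshold. It assumes one object class, no confidence scores, and greedy one-to-one matching; the official COCO and LVIS protocols are more involved (mAP averages over classes and IoU thresholds), so this is an illustration, not the benchmark's evaluation code.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x0, y0, x1, y1)."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(predictions, ground_truth, iou_threshold=0.5):
    """Greedily match each prediction to its best unmatched ground-truth box."""
    matched = set()
    true_positives = 0
    for pred in predictions:
        best_iou, best_idx = 0.0, None
        for i, gt in enumerate(ground_truth):
            if i in matched:
                continue
            score = iou(pred, gt)
            if score > best_iou:
                best_iou, best_idx = score, i
        if best_idx is not None and best_iou >= iou_threshold:
            matched.add(best_idx)
            true_positives += 1
    precision = true_positives / len(predictions) if predictions else 0.0
    recall = true_positives / len(ground_truth) if ground_truth else 0.0
    return precision, recall


# Toy example: one prediction overlaps a ground-truth box, one does not.
ground_truth = [(0, 0, 10, 10), (20, 20, 30, 30)]
predictions = [(1, 1, 10, 10), (50, 50, 60, 60)]
precision, recall = precision_recall(predictions, ground_truth)
print(precision, recall)
```

Recall counts how many ground-truth objects were found, so a model that misses many objects scores low on recall even if every box it does output is correct.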

ChatRex is the first multimodal AI model that resolves the long-standing conflict between perception and understanding tasks. Its innovative design, combined with a robust training dataset, sets a new standard for MLLMs, allowing for precise object detection and context-rich understanding. These dual capabilities open up novel applications in dynamic and complex environments, illustrating how the integration of perception and understanding can unlock the full potential of multimodal systems.

Check out the Paper and GitHub Page. All credit for this research goes to the researchers of this project.

The post ChatRex: A Multimodal Large Language Model (MLLM) with a Decoupled Perception Design appeared first on MarkTechPost.
