A key challenge in AI is improving the efficiency of systems that process unstructured datasets to extract useful insights. Much of this work centers on retrieval-augmented generation (RAG) tools, which combine traditional search with AI-driven analysis to answer both localized and overarching queries. The questions involved range from highly specific details to general insights that span an entire dataset. RAG systems are central to document summarization, knowledge extraction, and exploratory data analysis.

One of the main problems with existing systems is the trade-off between operational cost and output quality. Traditional methods such as vector-based RAG work well for localized tasks, like retrieving direct answers from specific text fragments. However, they struggle with global queries that require an understanding of the dataset as a whole. Graph-enabled RAG systems address these broader questions by exploiting relationships within the data, but their high indexing costs put them out of reach for cost-sensitive use cases. Balancing scalability, affordability, and quality therefore remains a critical bottleneck for existing technologies.

Vector RAG and GraphRAG are the two most common points of comparison. Vector RAG is a form of best-first search: it uses similarity to the query to select the best-matching chunks of source text. This method excels in precision but lacks the breadth needed to handle complex global queries. GraphRAG's global search, on the other hand, adopts a breadth-first approach, using hierarchical community structures within the dataset to answer broad and intricate questions. However, GraphRAG’s reliance on summarizing data beforehand increases its computational and financial burden, limiting its use to large-scale projects with significant resources. Alternative methods such as RAPTOR and DRIFT have attempted to address some of these limitations, but challenges persist.
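
To make the vector RAG side of this comparison concrete, here is a minimal sketch of similarity-based retrieval. It is illustrative only: the bag-of-words `embed` function is a toy stand-in for a real embedding model, and the names `embed`, `cosine`, and `top_k_chunks` are hypothetical, not part of any library discussed here.

```python
import math
from collections import Counter

def embed(text):
    """Toy bag-of-words 'embedding' (assumption: stands in for a real embedding model)."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def top_k_chunks(query, chunks, k=2):
    """Return the k chunks most similar to the query, best match first."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]

chunks = [
    "the indexing cost of graph summaries is high",
    "vector search ranks chunks by similarity to the query",
    "community structure captures themes across the corpus",
]
best = top_k_chunks("how does vector search rank chunks", chunks, k=1)
print(best)
```

Because only the top-ranked chunks are returned, a question about themes across the whole corpus sees just a small slice of it, which is the breadth limitation described above.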

Microsoft researchers have introduced LazyGraphRAG, a system designed to overcome the limitations of existing tools while combining their strengths. LazyGraphRAG removes the need for expensive upfront data summarization, reducing indexing costs to nearly the same level as vector RAG. The researchers designed the system to operate on the fly, using lightweight data structures to answer both local and global queries without prior summarization. LazyGraphRAG is being integrated into the open-source GraphRAG library, making it a cost-effective and scalable option for varied applications.

LazyGraphRAG uses an iterative deepening approach that combines best-first and breadth-first search strategies. It relies on NLP techniques, rather than an LLM, to extract concepts and their co-occurrences, and it refines the resulting graph structures as queries are processed. By deferring LLM use until it is actually needed, LazyGraphRAG gains efficiency while maintaining quality. The system’s relevance test budget, a tunable parameter, lets users trade computational cost against query accuracy, so the same index can serve a wide range of operational demands.
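
The two ideas in this paragraph, LLM-free concept co-occurrence indexing and a capped number of relevance tests at query time, can be sketched as follows. This is a simplified illustration under stated assumptions, not the LazyGraphRAG implementation: it omits the community hierarchy and iterative deepening entirely, the token-based `extract_concepts` is a toy stand-in for real NLP concept extraction, and the `is_relevant` callback is a hypothetical placeholder for an LLM-based relevance test.

```python
from collections import Counter
from itertools import combinations

STOPWORDS = {"the", "and", "of", "to", "a", "in", "is", "for", "with", "by", "on"}

def extract_concepts(text):
    """Toy concept extractor: distinct non-stopword tokens (assumption, not real NLP)."""
    tokens = {w.strip(".,?").lower() for w in text.split()}
    return tokens - STOPWORDS - {""}

def build_cooccurrence(chunks):
    """Count how often two concepts share a chunk. No LLM calls are involved."""
    edges = Counter()
    for chunk in chunks:
        for pair in combinations(sorted(extract_concepts(chunk)), 2):
            edges[pair] += 1
    return edges

def budgeted_search(query, chunks, budget, is_relevant):
    """Rank chunks by cheap concept overlap, then run at most `budget` relevance tests."""
    q = extract_concepts(query)
    ranked = sorted(chunks, key=lambda c: len(q & extract_concepts(c)), reverse=True)
    relevant, tests_used = [], 0
    for chunk in ranked:
        if tests_used >= budget:
            break
        tests_used += 1  # each call to is_relevant consumes one unit of budget
        if is_relevant(query, chunk):
            relevant.append(chunk)
    return relevant, tests_used

chunks = [
    "Graph indexing cost depends on summarization.",
    "Summarization cost grows with corpus size.",
    "Vector search retrieves similar chunks.",
]
edges = build_cooccurrence(chunks)

# Hypothetical stand-in for an LLM relevance judgment.
def fake_relevance_test(query, chunk):
    return "cost" in chunk.lower()

relevant, tests_used = budgeted_search(
    "What drives summarization cost?", chunks, budget=2, is_relevant=fake_relevance_test
)
print(edges[("cost", "summarization")], relevant, tests_used)
```

Raising `budget` lets the search examine more candidates at higher cost, while lowering it caps spending per query. That is the trade-off the relevance test budget is meant to expose.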

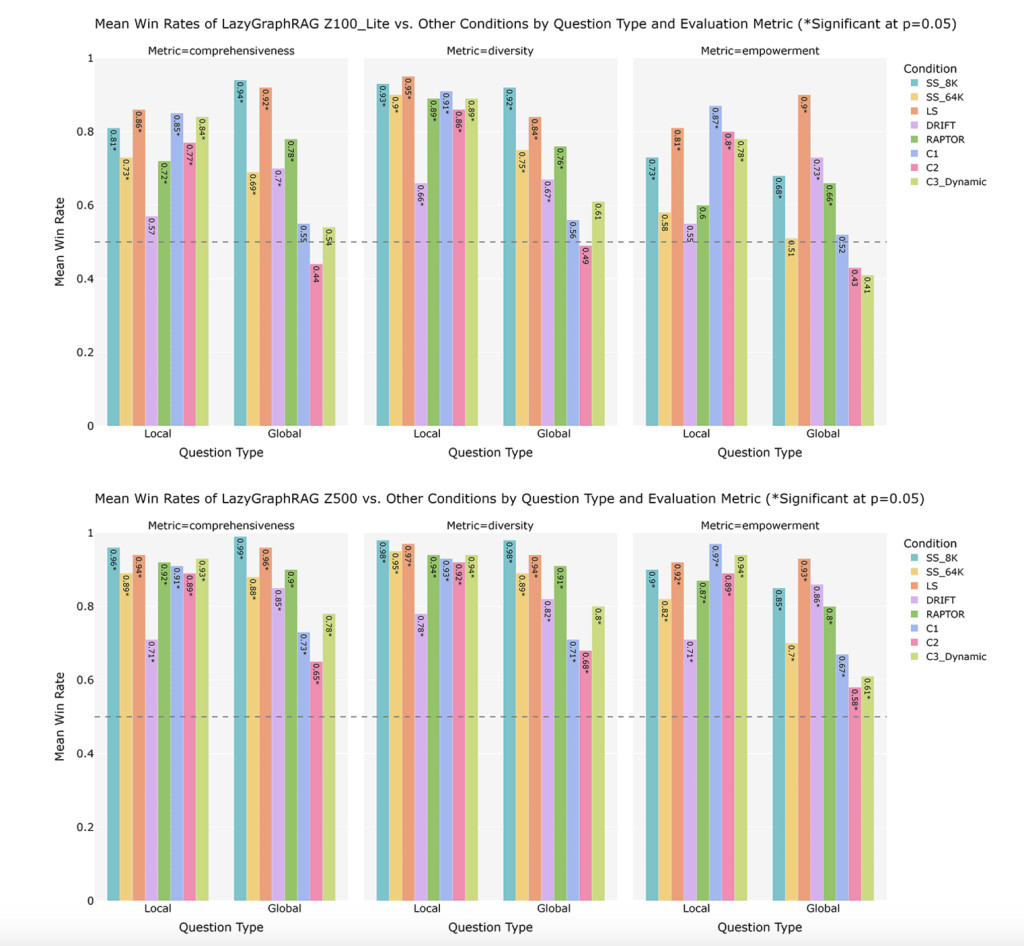

LazyGraphRAG achieves answer quality comparable to GraphRAG’s global search at 0.1% of its indexing cost. It outperformed vector RAG and other competing systems, including GraphRAG DRIFT search and RAPTOR, on both local and global queries. Even with a minimal relevance test budget of 100, LazyGraphRAG performed strongly on metrics such as comprehensiveness, diversity, and empowerment. At a budget of 500, it surpassed all alternatives while incurring only 4% of GraphRAG’s global search query cost. Users can therefore obtain high-quality answers at a fraction of the expense, which suits exploratory analysis and real-time decision-making applications.
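
As a rough worked example of what these ratios imply, the sketch below applies the two percentages reported above (0.1% for indexing, 4% for query cost at a budget of 500) to baseline costs. The baseline figures and the `lazy_costs` helper are hypothetical, chosen only to make the arithmetic visible; they are not measurements from the research.

```python
INDEX_COST_RATIO = 0.001  # 0.1% of full GraphRAG indexing cost, as reported above
QUERY_COST_RATIO = 0.04   # 4% of GraphRAG global search query cost at budget 500

def lazy_costs(graphrag_index_cost, graphrag_query_cost, n_queries):
    """Estimate indexing, query, and total cost from GraphRAG baseline costs."""
    index = graphrag_index_cost * INDEX_COST_RATIO
    queries = graphrag_query_cost * QUERY_COST_RATIO * n_queries
    return index, queries, index + queries

# Hypothetical baseline: 1000 cost units to index, 1 unit per global query, 50 queries.
index, queries, total = lazy_costs(1000.0, 1.0, 50)
baseline_total = 1000.0 + 1.0 * 50
print(index, queries, total, baseline_total)
```

Under these assumed numbers the indexing term nearly vanishes, so total cost is dominated by how many queries are run and at what relevance test budget.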

The research provides several important takeaways that underline its impact:

- Cost Efficiency: LazyGraphRAG reduces indexing costs by about 99.9% compared to full GraphRAG (to 0.1% of its cost), making advanced retrieval accessible to resource-limited users.

- Scalability: It balances quality and cost dynamically using the relevance test budget, ensuring suitability for diverse use cases.

- Performance Superiority: The system outperformed eight competing methods across all evaluation metrics, demonstrating state-of-the-art local and global query-handling capabilities.

- Adaptability: Its lightweight indexing and deferred computation make it ideal for streaming data and one-off queries.

- Open Source Contribution: Its integration into the GraphRAG library promotes accessibility and community-driven enhancements.

In conclusion, LazyGraphRAG is a significant advance in retrieval-augmented generation. By pairing low cost with strong performance, it addresses longstanding limitations in both vector and graph-based RAG systems. Its architecture lets users extract insights from large datasets without the expense of upfront summarization and without compromising quality. The result is a flexible, scalable approach that raises the bar for data exploration and query answering.

The post Microsoft AI Introduces LazyGraphRAG: A New AI Approach to Graph-Enabled RAG that Needs No Prior Summarization of Source Data appeared first on MarkTechPost.
