In recent years, large language models (LLMs) have become a cornerstone of AI, powering chatbots, virtual assistants, and a variety of complex applications. Despite their success, a significant problem has emerged: the plateauing of the scaling laws that have historically driven model advancements. Simply put, building larger models is no longer providing the significant leaps in performance it once did. Moreover, these enormous models are expensive to train and maintain, creating accessibility and usability challenges. This plateau has driven a new focus on targeted post-training methods to enhance and specialize model capabilities instead of relying solely on sheer size.

Introducing Athene-V2: A New Approach to LLM Development

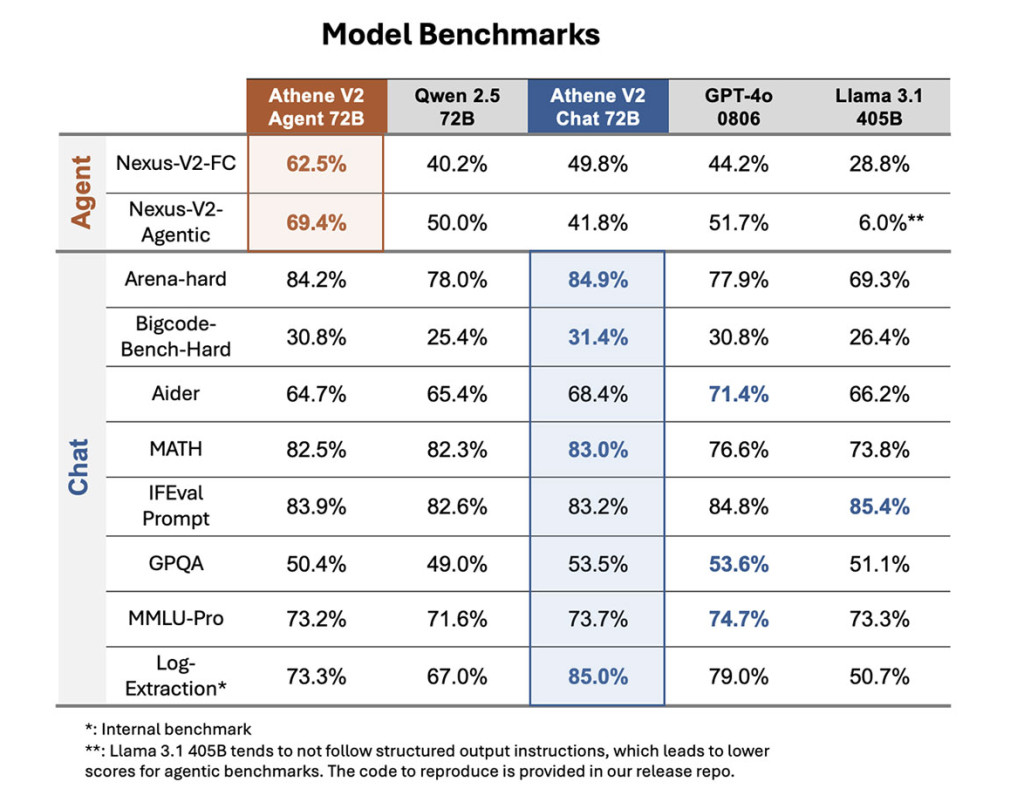

Nexusflow introduces Athene-V2: an open 72-billion-parameter model suite that aims to address this shift in AI development. Athene-V2 is comparable to OpenAI’s GPT-4o across various benchmarks, offering a specialized, cutting-edge approach to solving real-world problems. This suite includes two distinctive models: Athene-V2-Chat and Athene-V2-Agent, each optimized for specific capabilities. The introduction of Athene-V2 aims to break through the current limitations by offering tailored functionality through focused post-training, making LLMs more efficient and usable in practical settings.

Technical Details and Benefits

Athene-V2-Chat is designed for general-purpose conversational use, including chat-based applications, coding assistance, and mathematical problem-solving. It competes directly with GPT-4o on benchmarks in these areas, which points to its versatility in everyday use cases. Athene-V2-Agent, meanwhile, focuses on agent-specific functionality and excels at function calling and agent-oriented applications. Both models are built on Qwen 2.5 and have undergone targeted post-training to amplify their respective strengths. This approach lets Athene-V2 bridge the gap between general-purpose and highly specialized LLMs, delivering more relevant and efficient outputs depending on the task at hand. The result is a suite that is both powerful and adaptable, addressing a broad spectrum of user needs.

The technical details of Athene-V2 reveal its robustness and specialized enhancements. With 72 billion parameters, it remains within a manageable range compared to some of the larger, more computationally intensive models while still delivering comparable performance to GPT-4o. Athene-V2-Chat is particularly adept at managing conversational intricacies, coding queries, and solving math problems. The training process included extensive datasets for natural language understanding, programming languages, and mathematical logic, allowing it to excel across multiple tasks. Athene-V2-Agent, on the other hand, was optimized for scenarios involving API function calls and decision-making workflows, surpassing GPT-4o in specific agent-based operations. These focused improvements make the models not only competitive in general benchmarks but also highly capable in specialized domains, providing a well-rounded suite that can effectively replace multiple standalone tools.
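To make the idea of function calling concrete, here is a minimal sketch of how an application might dispatch a structured function call produced by an agent-style model. It is an illustration only: the JSON shape, the `get_weather` tool, and the `dispatch` helper are hypothetical and are not taken from Athene-V2-Agent or any Nexusflow API.

```python
import json


# Hypothetical tool for illustration; not part of Athene-V2 or any Nexusflow API.
def get_weather(city: str, unit: str = "celsius") -> str:
    return f"Weather in {city}: 21 degrees {unit}"


# Registry mapping function names the model may emit to local callables.
TOOLS = {"get_weather": get_weather}


def dispatch(model_output: str) -> str:
    """Parse a JSON function call and run the matching local function."""
    call = json.loads(model_output)
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"unknown function: {name}")
    return TOOLS[name](**call.get("arguments", {}))


# Assumed shape of a model's function-call output, for illustration only.
example_output = '{"name": "get_weather", "arguments": {"city": "Berlin"}}'
print(dispatch(example_output))
```

In a real agent loop, the string passed to `dispatch` would come from the model's response, and the function's return value would typically be fed back to the model as the next turn.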

This release is important for several reasons. First, with scaling laws reaching a plateau, innovation in LLMs requires a different approach, one that focuses on enhancing specialized capabilities rather than increasing size alone. Nexusflow’s decision to apply targeted post-training to Qwen 2.5 makes the models more adaptable and cost-effective without sacrificing performance. Benchmark results are promising, with Athene-V2-Chat and Athene-V2-Agent showing significant improvements over existing open models. For instance, Athene-V2-Chat matches GPT-4o in natural language understanding, code generation, and mathematical reasoning, while Athene-V2-Agent demonstrates superior ability in complex function-calling tasks. Such targeted gains underscore the efficiency of Nexusflow’s methodology and show what smaller-scale but highly optimized models can achieve.
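To put the 72-billion-parameter figure in perspective, the following back-of-the-envelope sketch estimates the memory needed just to hold the weights at a few common numeric precisions. The precisions listed are assumptions chosen for illustration, not formats the article says Athene-V2 ships in, and the estimate ignores activations, KV cache, and framework overhead.

```python
PARAMS = 72e9  # parameter count stated in the article

# Bytes per parameter for common precisions (illustrative assumptions).
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


for precision, nbytes in BYTES_PER_PARAM.items():
    print(f"{precision}: {weight_memory_gb(PARAMS, nbytes):.0f} GB")
```

At 16-bit precision this works out to roughly 144 GB for the weights alone, which is why a model of this size is usually served across several accelerators or in quantized form.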

Conclusion

Nexusflow’s Athene-V2 represents a meaningful step forward in the evolving landscape of large language models. By emphasizing targeted post-training and specialized capabilities, Athene-V2 offers a powerful, adaptable alternative to larger, more unwieldy models. The ability of Athene-V2-Chat and Athene-V2-Agent to compete across various benchmarks at 72 billion parameters is a testament to the power of specialization in AI development. As the field moves into a post-scaling-law era, approaches like Nexusflow’s are likely to shape the next wave of advancements, making AI more efficient, accessible, and tailored to specific use cases.

Check out the Athene-V2-Chat Model on Hugging Face and Athene-V2-Agent Model on Hugging Face. All credit for this research goes to the researchers of this project.


The post Nexusflow Releases Athene-V2: An Open 72B Model Suite Comparable to GPT-4o Across Benchmarks appeared first on MarkTechPost.
