The rapid advancement of generative AI promises transformative innovation, yet it also presents significant challenges. Concerns about legal implications, accuracy of AI-generated outputs, data privacy, and broader societal impacts have underscored the importance of responsible AI development. Responsible AI is a practice of designing, developing, and operating AI systems guided by a set of dimensions with the goal to maximize benefits while minimizing potential risks and unintended harm. Our customers want to know that the technology they are using was developed in a responsible way. They also want resources and guidance to implement that technology responsibly in their own organization. Most importantly, they want to make sure the technology they roll out is for everyone’s benefit, including end-users. At AWS, we are committed to developing AI responsibly, taking a people-centric approach that prioritizes education, science, and our customers, integrating responsible AI across the end-to-end AI lifecycle.

What constitutes responsible AI is continually evolving. For now, we consider eight key dimensions of responsible AI: fairness, explainability, privacy and security, safety, controllability, veracity and robustness, governance, and transparency. These dimensions make up the foundation for developing and deploying AI applications in a responsible and safe manner.

At AWS, we help our customers transform responsible AI from theory into practice—by giving them the tools, guidance, and resources to get started with purpose-built services and features, such as Amazon Bedrock Guardrails. In this post, we introduce the core dimensions of responsible AI and explore considerations and strategies on how to address these dimensions for Amazon Bedrock applications. Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies like AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon through a single API, along with a broad set of capabilities to build generative AI applications with security, privacy, and responsible AI.

Safety

The safety dimension in responsible AI focuses on preventing harmful system output and misuse. It focuses on steering AI systems to prioritize user and societal well-being.

Amazon Bedrock is designed to facilitate the development of secure and reliable AI applications by incorporating various safety measures. In the following sections, we explore different aspects of implementing these safety measures and provide guidance for each.

Addressing model toxicity with Amazon Bedrock Guardrails

Amazon Bedrock Guardrails supports AI safety by working towards preventing the application from generating or engaging with content that is considered unsafe or undesirable. These safeguards can be created for multiple use cases and implemented across multiple FMs, depending on your application and responsible AI requirements. For example, you can use Amazon Bedrock Guardrails to filter out harmful user inputs and toxic model outputs, redact by either blocking or masking sensitive information from user inputs and model outputs, or help prevent your application from responding to unsafe or undesired topics.

Content filters can be used to detect and filter harmful or toxic user inputs and model-generated outputs. By implementing content filters, you can help prevent your AI application from responding to inappropriate user behavior, and make sure your application provides only safe outputs. This can also mean providing no output at all, in situations where certain user behavior is unwanted. Content filters support six categories: hate, insults, sexual content, violence, misconduct, and prompt injections. Filtering is based on a confidence classification of user inputs and FM responses for each category. You can adjust the filter strength for each category to set how sensitive the filtering is. Increasing a filter’s strength raises the probability that unwanted content is filtered.

Denied topics are a set of topics that are undesirable in the context of your application. These topics will be blocked if detected in user queries or model responses. You define a denied topic by providing a natural language definition of the topic along with a few optional example phrases of the topic. For example, if a medical institution wants to make sure their AI application avoids giving any medication or medical treatment-related advice, they can define the denied topic as “Information, guidance, advice, or diagnoses provided to customers relating to medical conditions, treatments, or medication” and optional input examples like “Can I use medication A instead of medication B,” “Can I use Medication A for treating disease Y,” or “Does this mole look like skin cancer?” Developers will need to specify a message that will be displayed to the user whenever denied topics are detected, for example “I am an AI bot and cannot assist you with this problem, please contact our customer service/your doctor’s office.” Avoiding specific topics that aren’t toxic by nature but can potentially be harmful to the end-user is crucial when creating safe AI applications.

Word filters are used to configure filters to block undesirable words, phrases, and profanity. Such words can include offensive terms or undesirable outputs, like product or competitor information. You can add up to 10,000 items to the custom word filter to filter out topics you don’t want your AI application to produce or engage with.

Sensitive information filters are used to block or redact sensitive information such as personally identifiable information (PII) or your specified context-dependent sensitive information in user inputs and model outputs. This can be useful when you have requirements for sensitive data handling and user privacy. If the AI application doesn’t process PII, your users and your organization are safer from accidental or intentional misuse or mishandling of that data. You can configure the filter to block requests that contain sensitive information; when such information is detected, the guardrail blocks the content and displays a preconfigured message. You can also choose to redact or mask sensitive information, which will either replace the data with an identifier or delete it completely.
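The four policy types described in this section correspond to parameters of the Amazon Bedrock `CreateGuardrail` API. The following is a minimal sketch rather than a recommended configuration: it only assembles the request as a plain Python dictionary, and the guardrail name, filter strengths, blocked word, and selected PII entity types are illustrative assumptions. The boto3 call is shown as a comment so the snippet runs without AWS credentials.

```python
def build_guardrail_request(name: str, blocked_message: str) -> dict:
    """Assemble keyword arguments for the Bedrock CreateGuardrail API."""
    harmful_categories = ["HATE", "INSULTS", "SEXUAL", "VIOLENCE", "MISCONDUCT"]
    content_filters = [
        {"type": category, "inputStrength": "HIGH", "outputStrength": "HIGH"}
        for category in harmful_categories
    ]
    # Prompt attack detection applies to user input only,
    # so its output strength is set to NONE.
    content_filters.append(
        {"type": "PROMPT_ATTACK", "inputStrength": "HIGH", "outputStrength": "NONE"}
    )
    return {
        "name": name,
        "contentPolicyConfig": {"filtersConfig": content_filters},
        "topicPolicyConfig": {
            "topicsConfig": [
                {
                    "name": "medical-advice",  # hypothetical topic name
                    "definition": (
                        "Information, guidance, advice, or diagnoses provided to "
                        "customers relating to medical conditions, treatments, "
                        "or medication"
                    ),
                    "examples": [
                        "Can I use medication A instead of medication B",
                        "Does this mole look like skin cancer?",
                    ],
                    "type": "DENY",
                }
            ]
        },
        "wordPolicyConfig": {
            "wordsConfig": [{"text": "ExampleCompetitor"}],  # hypothetical word
            "managedWordListsConfig": [{"type": "PROFANITY"}],
        },
        "sensitiveInformationPolicyConfig": {
            "piiEntitiesConfig": [
                {"type": "EMAIL", "action": "ANONYMIZE"},  # mask the value
                {"type": "US_SOCIAL_SECURITY_NUMBER", "action": "BLOCK"},
            ]
        },
        "blockedInputMessaging": blocked_message,
        "blockedOutputsMessaging": blocked_message,
    }


request = build_guardrail_request(
    "clinic-assistant-guardrail",
    "I am an AI bot and cannot assist you with this problem.",
)
# With credentials configured, the request could be submitted with:
#   boto3.client("bedrock").create_guardrail(**request)
```

Keeping the configuration in a function like this makes it straightforward to review and version the guardrail policy alongside the rest of your application code.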

Measuring model toxicity with Amazon Bedrock model evaluation

Amazon Bedrock provides a built-in capability for model evaluation. Model evaluation is used to compare different models’ outputs and select the most appropriate model for your use case. Model evaluation jobs support common use cases for large language models (LLMs) such as text generation, text classification, question answering, and text summarization. You can choose to create either an automatic model evaluation job or a model evaluation job that uses a human workforce. For automatic model evaluation jobs, you can either use built-in datasets across three predefined metrics (accuracy, robustness, toxicity) or bring your own datasets. For human-in-the-loop evaluation, which can be done by either AWS managed or customer managed teams, you must bring your own dataset.

If you are planning on using automated model evaluation for toxicity, start by defining what constitutes toxic content for your specific application. This may include offensive language, hate speech, and other forms of harmful communication. Automated evaluations come with curated datasets to choose from. For toxicity, you can use either RealToxicityPrompts or BOLD datasets, or both. If you bring your custom model to Amazon Bedrock, you can implement scheduled evaluations by integrating regular toxicity assessments into your development pipeline at key stages of model development, such as after major updates or retraining sessions. For early detection, implement custom testing scripts that run toxicity evaluations on new data and model outputs continuously.
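A custom testing script of the kind mentioned above can be as simple as a gate that fails a pipeline stage when too many outputs are scored as toxic. The sketch below assumes you supply your own scoring function that returns a value between 0 and 1; the `keyword_scorer` shown here is a deliberately naive placeholder, and both thresholds are illustrative assumptions rather than recommended values.

```python
from typing import Callable, Iterable


def toxicity_gate(
    outputs: Iterable[str],
    score_toxicity: Callable[[str], float],
    per_output_threshold: float = 0.5,
    max_toxic_rate: float = 0.01,
) -> dict:
    """Score each model output and decide whether the batch passes the gate."""
    scores = [score_toxicity(text) for text in outputs]
    if not scores:
        raise ValueError("no model outputs to evaluate")
    toxic = sum(1 for score in scores if score >= per_output_threshold)
    rate = toxic / len(scores)
    return {
        "evaluated": len(scores),
        "toxic": toxic,
        "toxic_rate": rate,
        "passed": rate <= max_toxic_rate,
    }


def keyword_scorer(text: str) -> float:
    """Placeholder scorer; replace with a real toxicity classifier."""
    return 1.0 if "idiot" in text.lower() else 0.0


report = toxicity_gate(["Thanks for asking.", "You are an idiot."], keyword_scorer)
print(report["passed"])  # False, because half of the outputs were flagged
```

In a pipeline, you could run this after each retraining session and stop the deployment when `passed` is false.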

Amazon Bedrock and its safety capabilities help developers create AI applications that prioritize safety and reliability, thereby fostering trust and enforcing ethical use of AI technology. You should experiment and iterate on your chosen safety approaches to achieve the desired performance. Diverse feedback is also important, so think about implementing human-in-the-loop testing to assess model responses for safety and fairness.

Controllability

Controllability focuses on having mechanisms to monitor and steer AI system behavior. It refers to the ability to manage, guide, and constrain AI systems to make sure they operate within desired parameters.

Guiding AI behavior with Amazon Bedrock Guardrails

To provide direct control over what content the AI application can produce or engage with, you can use Amazon Bedrock Guardrails, which we discussed under the safety dimension. This allows you to steer and manage the system’s outputs effectively.

You can use content filters to manage AI outputs by setting sensitivity levels for detecting harmful or toxic content. By controlling how strictly content is filtered, you can steer the AI’s behavior to help avoid undesirable responses. This allows you to guide the system’s interactions and outputs to align with your requirements. Defining and managing denied topics helps control the AI’s engagement with specific subjects. By blocking responses related to defined topics, you help the AI system remain within the boundaries set for its operation.

Amazon Bedrock Guardrails can also guide the system’s behavior for compliance with content policies and privacy standards. Custom word filters allow you to block specific words, phrases, and profanity, giving you direct control over the language the AI uses. And managing how sensitive information is handled, whether by blocking or redacting it, allows you to control the AI’s approach to data privacy and security.
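To apply a guardrail at inference time, you can reference it in the `guardrailConfig` parameter of the Converse API. The following sketch only builds the request and checks a response for intervention; the model ID is an example, and the guardrail identifier and version are hypothetical values that you would replace with your own.

```python
def build_guarded_converse_request(
    model_id: str, user_text: str, guardrail_id: str, guardrail_version: str
) -> dict:
    """Assemble keyword arguments for the Bedrock Runtime Converse API."""
    return {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": user_text}]}],
        "guardrailConfig": {
            "guardrailIdentifier": guardrail_id,
            "guardrailVersion": guardrail_version,
            "trace": "enabled",  # include guardrail assessment details in the response
        },
    }


def guardrail_intervened(response: dict) -> bool:
    """Return True when the Converse response was stopped by a guardrail."""
    return response.get("stopReason") == "guardrail_intervened"


request = build_guarded_converse_request(
    "amazon.titan-text-express-v1",  # example model ID
    "Can I use medication A instead of medication B?",
    "example-guardrail-id",          # hypothetical guardrail identifier
    "1",
)
# With credentials configured:
#   response = boto3.client("bedrock-runtime").converse(**request)
#   if guardrail_intervened(response): ...
```

Checking the stop reason lets your application log interventions or route the user to another channel instead of silently returning the blocked message.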

Monitoring and adjusting performance with Amazon Bedrock model evaluation

To assess and adjust AI performance, you can use Amazon Bedrock model evaluation. This helps systems operate within desired parameters and meet safety and ethical standards. You can explore both automatic and human-in-the-loop evaluation. These evaluation methods help you monitor and guide model performance by assessing how well models meet safety and ethical standards. Regular evaluations allow you to adjust and steer the AI’s behavior based on feedback and performance metrics.

Integrating scheduled toxicity assessments and custom testing scripts into your development pipeline helps you continuously monitor and adjust model behavior. This ongoing control helps AI systems to remain aligned with desired parameters and adapt to new data and scenarios effectively.

Fairness

The fairness dimension in responsible AI considers the impacts of AI on different groups of stakeholders. Achieving fairness requires ongoing monitoring, bias detection, and adjustment of AI systems to maintain impartiality and justice.

To help with fairness in AI applications that are built on top of Amazon Bedrock, application developers should explore model evaluation and human-in-the-loop validation for model outputs at different stages of the machine learning (ML) lifecycle. Measuring bias presence before and after model training as well as at model inference is the first step in mitigating bias. When developing an AI application, you should set fairness goals, metrics, and potential minimum acceptable thresholds to measure performance across different qualities and demographics applicable to the use case. On top of these, you should create remediation plans for potential inaccuracies and bias, which may include modifying datasets, finding and deleting the root cause for bias, introducing new data, and potentially retraining the model.
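As one way to make fairness goals and thresholds concrete, the following sketch compares a quality metric across groups and flags the run when any group falls below a minimum or when the gap between groups is too large. The choice of accuracy as the metric, the group labels, and both threshold values are assumptions for illustration; your use case may call for different metrics and limits.

```python
from collections import defaultdict
from typing import Iterable, Tuple


def accuracy_by_group(records: Iterable[Tuple[str, bool]]) -> dict:
    """Compute accuracy per group from (group, is_correct) pairs."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for group, is_correct in records:
        totals[group] += 1
        correct[group] += int(is_correct)
    return {group: correct[group] / totals[group] for group in totals}


def fairness_report(
    records: Iterable[Tuple[str, bool]],
    min_accuracy: float = 0.8,
    max_gap: float = 0.1,
) -> dict:
    """Check per-group accuracy against a minimum and a maximum allowed gap."""
    per_group = accuracy_by_group(records)
    gap = max(per_group.values()) - min(per_group.values())
    below = sorted(g for g, acc in per_group.items() if acc < min_accuracy)
    return {
        "per_group": per_group,
        "gap": gap,
        "groups_below_minimum": below,
        "passed": not below and gap <= max_gap,
    }


evaluation = [("group_a", True)] * 4 + [("group_b", True)] * 3 + [("group_b", False)]
report = fairness_report(evaluation)
print(report["groups_below_minimum"])  # ['group_b']
```

A failed report is the trigger for the remediation plan, for example reviewing the dataset for the affected group before retraining.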

Amazon Bedrock provides a built-in capability for model evaluation, as we explored under the safety dimension. For general text generation evaluation for measuring model robustness and toxicity, you can use the built-in fairness dataset Bias in Open-ended Language Generation Dataset (BOLD), which focuses on five domains: profession, gender, race, religious ideologies, and political ideologies. To assess fairness for other domains or tasks, you must bring your own custom prompt datasets.

Transparency

The transparency dimension in generative AI focuses on understanding how AI systems make decisions, why they produce specific results, and what data they’re using. Maintaining transparency is critical for building trust in AI systems and fostering responsible AI practices.

To help meet the growing demand for transparency, AWS introduced AWS AI Service Cards, a dedicated resource aimed at enhancing customer understanding of our AI services. AI Service Cards serve as a cornerstone of responsible AI documentation, consolidating essential information in one place. They provide comprehensive insights into the intended use cases, limitations, responsible AI design principles, and best practices for deployment and performance optimization of our AI services. They are part of a comprehensive development process we undertake to build our services in a responsible way.

At the time of writing, we offer the following AI Service Cards for Amazon Bedrock models:

Service cards for other Amazon Bedrock models can be found directly on the provider’s website. Each card details the service’s specific use cases, the ML techniques employed, and crucial considerations for responsible deployment and use. These cards evolve iteratively based on customer feedback and ongoing service enhancements, so they remain relevant and informative.

An additional effort in providing transparency is the Amazon Titan Image Generator invisible watermark. Images generated by Amazon Titan come with this invisible watermark by default. This watermark detection mechanism enables you to identify images produced by Amazon Titan Image Generator, an FM designed to create realistic, studio-quality images in large volumes and at low cost using natural language prompts. By using watermark detection, you can enhance transparency around AI-generated content, mitigate the risks of harmful content generation, and reduce the spread of misinformation.

Content creators, news organizations, risk analysts, fraud detection teams, and more can use this feature to identify and authenticate images created by Amazon Titan Image Generator. The detection system also provides a confidence score, allowing you to assess the reliability of the detection even if the original image has been modified. Simply upload an image to the Amazon Bedrock console, and the API will detect watermarks embedded in images generated by the Amazon Titan model, including both the base model and customized versions. This tool not only supports responsible AI practices, but also fosters trust and reliability in the use of AI-generated content.

Veracity and robustness

The veracity and robustness dimension in responsible AI focuses on achieving correct system outputs, even with unexpected or adversarial inputs. The main focus of this dimension is to address possible model hallucinations. Model hallucinations occur when an AI system generates false or misleading information that appears to be plausible. Robustness in AI systems makes sure model outputs are consistent and reliable under various conditions, including unexpected or adverse situations. A robust AI model maintains its functionality and delivers consistent and accurate outputs even when faced with incomplete or incorrect input data.

Measuring accuracy and robustness with Amazon Bedrock model evaluation

As introduced in the AI safety and controllability dimensions, Amazon Bedrock provides tools for evaluating AI models in terms of toxicity, robustness, and accuracy. This makes sure the models don’t produce harmful, offensive, or inappropriate content and can withstand various inputs, including unexpected or adversarial scenarios.

Accuracy evaluation helps AI models produce reliable and correct outputs across various tasks and datasets. In the built-in evaluation, accuracy is measured against a TREX dataset and the algorithm calculates the degree to which the model’s predictions match the actual results. The actual metric for accuracy depends on the chosen use case; for example, in text generation, the built-in evaluation calculates a real-world knowledge score, which examines the model’s ability to encode factual knowledge about the real world. This evaluation is essential for maintaining the integrity, credibility, and effectiveness of AI applications.

Robustness evaluation makes sure the model maintains consistent performance across diverse and potentially challenging conditions. This includes handling unexpected inputs, adversarial manipulations, and varying data quality without significant degradation in performance.

Methods for achieving veracity and robustness in Amazon Bedrock applications

There are several techniques that you can consider when using LLMs in your applications to maximize veracity and robustness:

- Prompt engineering – You can instruct the model to only engage in discussion about things that the model knows and not generate any new information.

- Chain-of-thought (CoT) – This technique involves the model generating intermediate reasoning steps that lead to the final answer, improving the model’s ability to solve complex problems by making its thought process transparent and logical. For example, you can ask the model to explain why it used certain information and created a certain output. This is a powerful method to reduce hallucinations. When you ask the model to explain the process it used to generate the output, the model has to identify the different steps taken and the information used, which in itself reduces hallucination. To learn more about CoT and other prompt engineering techniques for Amazon Bedrock LLMs, see General guidelines for Amazon Bedrock LLM users.

- Retrieval Augmented Generation (RAG) – This helps reduce hallucination by supplying the model with relevant context retrieved from your internal data. With RAG, you can provide the context to the model and tell the model to only reply based on the provided context, which leads to fewer hallucinations. With Amazon Bedrock Knowledge Bases, you can implement the RAG workflow from ingestion to retrieval and prompt augmentation. The information retrieved from the knowledge bases is provided with citations to improve AI application transparency and minimize hallucinations.

- Fine-tuning and pre-training – There are different techniques for improving model accuracy for specific context, like fine-tuning and continued pre-training. Instead of providing internal data through RAG, with these techniques, you add data straight to the model as part of its dataset. This way, you can customize several Amazon Bedrock FMs by pointing them to datasets that are saved in Amazon Simple Storage Service (Amazon S3) buckets. For fine-tuning, you can take anything between a few dozen and hundreds of labeled examples and train the model with them to improve performance on specific tasks. The model learns to associate certain types of outputs with certain types of inputs. You can also use continued pre-training, in which you provide the model with unlabeled data, familiarizing the model with certain inputs for it to associate and learn patterns. This includes, for example, data from a specific topic that the model doesn’t have enough domain knowledge of, thereby increasing the accuracy of the domain. Both of these customization options make it possible to create an accurate customized model without collecting large volumes of annotated data, resulting in reduced hallucination.

- Inference parameters – You can also look into the inference parameters, which are values that you can adjust to modify the model response. There are multiple inference parameters that you can set, and they affect different capabilities of the model. For example, if you want the model to get creative with the responses or generate completely new information, such as in the context of storytelling, you can increase the temperature parameter. A higher temperature flattens the probability distribution over candidate next tokens, so the model selects less likely words more often. A lower temperature concentrates probability on the most likely words, which generally produces more predictable responses.

- Contextual grounding – Lastly, you can use the contextual grounding check in Amazon Bedrock Guardrails. Amazon Bedrock Guardrails provides mechanisms within the Amazon Bedrock service that allow developers to set content filters and specify denied topics to control allowed text-based user inputs and model outputs. You can detect and filter hallucinations in model responses that are not grounded in the source information (that is, responses that are factually inaccurate or add new information) or that are irrelevant to the user’s query. For example, you can block or flag responses in RAG applications if the model response deviates from the information in the retrieved passages or doesn’t answer the user’s question.
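To illustrate the inference parameters item in the preceding list, the following sketch shows the standard temperature-scaled softmax on a toy set of three token scores. The scores are made up for illustration, and real models apply this over a full vocabulary, often combined with other sampling parameters.

```python
import math
from typing import List, Sequence


def softmax_with_temperature(logits: Sequence[float], temperature: float) -> List[float]:
    """Convert raw token scores into probabilities at a given temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(score - peak) for score in scaled]
    total = sum(exps)
    return [value / total for value in exps]


toy_logits = [2.0, 1.0, 0.0]  # made-up scores for three candidate tokens
for temperature in (0.5, 1.0, 2.0):
    probabilities = softmax_with_temperature(toy_logits, temperature)
    print(temperature, [round(p, 2) for p in probabilities])
```

With these toy scores, the most likely token receives roughly 0.87 of the probability at temperature 0.5, 0.67 at 1.0, and 0.51 at 2.0. This is why lower temperatures tend to suit factual tasks and higher temperatures suit creative ones.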

Model providers and tuners might not mitigate these hallucinations, but can inform the user that they might occur. This could be done by adding some disclaimers about using AI applications at the user’s own risk. We currently also see advances in research in methods that estimate uncertainty based on the amount of variation (measured as entropy) between multiple outputs. These new methods have proved much better at spotting when a question was likely to be answered incorrectly than previous methods.
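The idea of estimating uncertainty from the variation between multiple outputs can be sketched as follows. This is a simplification under stated assumptions: it treats answers as the same only when they match after lowercasing and whitespace normalization, whereas the research methods referred to here group answers by meaning. The sample answers are invented for illustration.

```python
import math
from collections import Counter
from typing import Sequence


def answer_entropy(answers: Sequence[str]) -> float:
    """Shannon entropy (in bits) of the distribution of sampled answers."""
    if not answers:
        raise ValueError("at least one answer is required")
    normalized = [" ".join(answer.lower().split()) for answer in answers]
    counts = Counter(normalized)
    total = len(normalized)
    return -sum(
        (count / total) * math.log2(count / total) for count in counts.values()
    )


consistent = ["Paris", "paris", " Paris "]
scattered = ["Paris", "Lyon", "Nice", "Lille"]
print(answer_entropy(consistent) < answer_entropy(scattered))  # True
```

An application could sample the same question several times and flag the response for review, or attach a disclaimer, when the entropy exceeds a threshold you choose.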

Explainability

The explainability dimension in responsible AI focuses on understanding and evaluating system outputs. By using an explainable AI framework, humans can examine the models to better understand how they produce their outputs. For the explainability of the output of a generative AI model, you can use techniques like training data attribution and CoT prompting, which we discussed under the veracity and robustness dimension.

For customers wanting to see attribution of information in completion, we recommend using RAG with an Amazon Bedrock knowledge base. Attribution works with RAG because the possible attribution sources are included in the prompt itself. Information retrieved from the knowledge base comes with source attribution to improve transparency and minimize hallucinations. Amazon Bedrock Knowledge Bases manages the end-to-end RAG workflow for you. When using the RetrieveAndGenerate API, the output includes the generated response, the source attribution, and the retrieved text chunks.
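The following sketch shows how the source attribution in a `RetrieveAndGenerate` response could be flattened into a list that is easy to display next to the generated answer. The knowledge base ID and model ARN are placeholders, and the helper assumes the sources are stored in Amazon S3; other source types report their location under different keys.

```python
def build_rag_request(question: str, knowledge_base_id: str, model_arn: str) -> dict:
    """Assemble keyword arguments for the RetrieveAndGenerate API."""
    return {
        "input": {"text": question},
        "retrieveAndGenerateConfiguration": {
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": knowledge_base_id,
                "modelArn": model_arn,
            },
        },
    }


def extract_attributions(response: dict) -> list:
    """Pair each cited part of the answer with its retrieved chunks and sources."""
    attributions = []
    for citation in response.get("citations", []):
        part = citation.get("generatedResponsePart", {})
        claim = part.get("textResponsePart", {}).get("text", "")
        for reference in citation.get("retrievedReferences", []):
            location = reference.get("location", {})
            attributions.append(
                {
                    "claim": claim,
                    "chunk": reference.get("content", {}).get("text", ""),
                    # Assumes an S3 data source; None for other source types.
                    "source": location.get("s3Location", {}).get("uri"),
                }
            )
    return attributions


# With credentials configured:
#   client = boto3.client("bedrock-agent-runtime")
#   response = client.retrieve_and_generate(**build_rag_request(...))
#   for item in extract_attributions(response): print(item["source"])
```

Showing the source next to each claim lets end-users verify an answer against the underlying document.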

Security and privacy

If there is one thing that is absolutely critical to every organization using generative AI technologies, it is making sure everything you do is and remains private, and that your data is protected at all times. The security and privacy dimension in responsible AI focuses on making sure data and models are obtained, used, and protected appropriately.

Built-in security and privacy of Amazon Bedrock

With Amazon Bedrock, if we look from a data privacy and localization perspective, AWS does not store your data—if we don’t store it, it can’t leak, it can’t be seen by model vendors, and it can’t be used by AWS for any other purpose. The only data we store is operational metrics—for example, for accurate billing, AWS collects metrics on how many tokens you send to a specific Amazon Bedrock model and how many tokens you receive in a model output. And, of course, if you create a fine-tuned model, we need to store that in order for AWS to host it for you. Data used in your API requests remains in the AWS Region of your choosing—API requests to the Amazon Bedrock API to a specific Region will remain completely within that Region.

If we look at data security, a common adage is that if it moves, encrypt it. Communications to, from, and within Amazon Bedrock are encrypted in transit—Amazon Bedrock doesn’t have a non-TLS endpoint. Another adage is that if it doesn’t move, encrypt it. Your fine-tuning data and model will by default be encrypted using AWS managed AWS Key Management Service (AWS KMS) keys, but you have the option to use your own KMS keys.

When it comes to identity and access management, AWS Identity and Access Management (IAM) controls who is authorized to use Amazon Bedrock resources. For each model, you can explicitly allow or deny access to actions. For example, one team or account could be allowed to provision capacity for Amazon Titan Text, but not Anthropic models. You can be as broad or as granular as you need to be.
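As an illustration of per-model access control, the following sketch generates an IAM policy document that allows invocation of selected models and explicitly denies all Amazon Bedrock actions on models from another provider. It uses model invocation rather than capacity provisioning for simplicity, and the Region, model ID, and wildcard pattern are example values; adapt the actions and resources to your own requirements.

```python
import json
from typing import List


def build_model_access_policy(
    region: str, allowed_model_ids: List[str], denied_model_patterns: List[str]
) -> dict:
    """Build an IAM policy that allows some foundation models and denies others."""

    def model_arn(model: str) -> str:
        # Foundation model ARNs have an empty account ID field.
        return f"arn:aws:bedrock:{region}::foundation-model/{model}"

    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowSelectedModels",
                "Effect": "Allow",
                "Action": [
                    "bedrock:InvokeModel",
                    "bedrock:InvokeModelWithResponseStream",
                ],
                "Resource": [model_arn(m) for m in allowed_model_ids],
            },
            {
                "Sid": "DenyOtherModels",
                "Effect": "Deny",
                "Action": "bedrock:*",
                "Resource": [model_arn(p) for p in denied_model_patterns],
            },
        ],
    }


policy = build_model_access_policy(
    "us-east-1", ["amazon.titan-text-express-v1"], ["anthropic.*"]
)
print(json.dumps(policy, indent=2))
```

Because an explicit deny always overrides an allow in IAM, the second statement holds even if another policy attached to the same principal grants broader access.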

Looking at network data flows for Amazon Bedrock API access, it’s important to remember that traffic is encrypted at all times. If you’re using Amazon Virtual Private Cloud (Amazon VPC), you can use AWS PrivateLink to provide your VPC with private connectivity through the regional network direct to the frontend fleet of Amazon Bedrock, mitigating exposure of your VPC to internet traffic with an internet gateway. Similarly, from a corporate data center perspective, you can set up a VPN or AWS Direct Connect connection to privately connect to a VPC, and from there you can have that traffic sent to Amazon Bedrock over PrivateLink. This should negate the need for your on-premises systems to send Amazon Bedrock related traffic over the internet. Following AWS best practices, you secure PrivateLink endpoints using security groups and endpoint policies to control access to these endpoints following Zero Trust principles.

Let’s also look at network and data security for Amazon Bedrock model customization. The customization process will first load your requested baseline model, then securely read your customization training and validation data from an S3 bucket in your account. Connection to data can happen through a VPC using a gateway endpoint for Amazon S3. That means bucket policies that you have can still be applied, and you don’t have to open up wider access to that S3 bucket. A new model is built, which is then encrypted and delivered to the customized model bucket—at no time does a model vendor have access to or visibility of your training data or your customized model. At the end of the training job, we also deliver output metrics relating to the training job to an S3 bucket that you had specified in the original API request. As mentioned previously, both your training data and customized model can be encrypted using a customer managed KMS key.
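The customization flow described above could be requested along the following lines. This sketch only assembles the parameters for the `CreateModelCustomizationJob` API; every ARN, bucket URI, subnet, and security group in the usage example is a placeholder, and the hyperparameter names depend on the base model you choose, so treat them as assumptions.

```python
from typing import Dict, List, Optional


def build_fine_tuning_request(
    job_name: str,
    custom_model_name: str,
    role_arn: str,
    base_model_id: str,
    training_s3_uri: str,
    output_s3_uri: str,
    kms_key_arn: str,
    subnet_ids: List[str],
    security_group_ids: List[str],
    hyperparameters: Dict[str, object],
    validation_s3_uri: Optional[str] = None,
) -> dict:
    """Assemble keyword arguments for a fine-tuning job that runs inside a VPC."""
    request = {
        "jobName": job_name,
        "customModelName": custom_model_name,
        "roleArn": role_arn,
        "baseModelIdentifier": base_model_id,
        "customizationType": "FINE_TUNING",
        # Customer managed key used to encrypt the resulting custom model.
        "customModelKmsKeyId": kms_key_arn,
        "trainingDataConfig": {"s3Uri": training_s3_uri},
        "outputDataConfig": {"s3Uri": output_s3_uri},
        # The API expects hyperparameter values as strings.
        "hyperParameters": {k: str(v) for k, v in hyperparameters.items()},
        "vpcConfig": {
            "subnetIds": list(subnet_ids),
            "securityGroupIds": list(security_group_ids),
        },
    }
    if validation_s3_uri:
        request["validationDataConfig"] = {"validators": [{"s3Uri": validation_s3_uri}]}
    return request


# With credentials configured:
#   boto3.client("bedrock").create_model_customization_job(**request)
```

Supplying `vpcConfig` is what routes the job’s access to your S3 data through your VPC, so your existing bucket policies and gateway endpoint continue to apply.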

Best practices for privacy protection

The first thing to keep in mind when implementing a generative AI application is data encryption. As mentioned earlier, Amazon Bedrock uses encryption in transit and at rest. For encryption at rest, you have the option to choose your own customer managed KMS keys over the default AWS managed KMS keys. Depending on your company’s requirements, you might want to use a customer managed KMS key. For encryption in transit, we recommend using TLS 1.3 to connect to the Amazon Bedrock API.

For terms and conditions and data privacy, it’s important to read the terms and conditions of the models (EULA). Model providers are responsible for setting up these terms and conditions, and you as a customer are responsible for evaluating these and deciding if they’re appropriate for your application. Always make sure you read and understand the terms and conditions before accepting, including when you request model access in Amazon Bedrock. You should make sure you’re comfortable with the terms. Make sure your test data has been approved by your legal team.

For privacy and copyright, it is the responsibility of the provider and the model tuner to make sure the data used for training and fine-tuning is legally available and can actually be used to fine-tune and train those models. It is also the responsibility of the model provider to make sure the data they’re using is appropriate for the models. Public data doesn’t automatically mean public for commercial usage. That means you can’t use this data to fine-tune something and show it to your customers.

To protect user privacy, you can use the sensitive information filters in Amazon Bedrock Guardrails, which we discussed under the safety and controllability dimensions.

Lastly, when automating with generative AI (for example, with Amazon Bedrock Agents), make sure you’re comfortable with the model making automated decisions and consider the consequences of the application providing wrong information or actions. Therefore, consider risk management here.

Governance

The governance dimension makes sure AI systems are developed, deployed, and managed in a way that aligns with ethical standards, legal requirements, and societal values. Governance encompasses the frameworks, policies, and rules that direct AI development and use in a way that is safe, fair, and accountable. Setting and maintaining governance for AI allows stakeholders to make informed decisions around the use of AI applications. This includes transparency about how data is used, the decision-making processes of AI, and the potential impacts on users.

Robust governance is the foundation upon which responsible AI applications are built. AWS offers a range of services and tools that can empower you to establish and operationalize AI governance practices. AWS has also developed an AI governance framework that offers comprehensive guidance on best practices across vital areas such as data and model governance, AI application monitoring, auditing, and risk management.

When looking at auditability, Amazon Bedrock integrates with the AWS generative AI best practices framework v2 from AWS Audit Manager. With this framework, you can start auditing your generative AI usage within Amazon Bedrock by automating evidence collection. This provides a consistent approach for tracking AI model usage and permissions, flagging sensitive data, and alerting on issues. You can use collected evidence to assess your AI application across eight principles: responsibility, safety, fairness, sustainability, resilience, privacy, security, and accuracy.

For monitoring and auditing purposes, you can use Amazon Bedrock built-in integrations with Amazon CloudWatch and AWS CloudTrail. You can monitor Amazon Bedrock using CloudWatch, which collects raw data and processes it into readable, near real-time metrics. CloudWatch helps you track usage metrics such as model invocations and token count, and helps you build customized dashboards for audit purposes either across one or multiple FMs in one or multiple AWS accounts. CloudTrail is a centralized logging service that provides a record of user and API activities in Amazon Bedrock. CloudTrail collects API data into a trail, which needs to be created inside the service. A trail enables CloudTrail to deliver log files to an S3 bucket.

Amazon Bedrock also provides model invocation logging, which is used to collect model input data, prompts, model responses, and request IDs for all invocations in your AWS account used in Amazon Bedrock. This feature provides insights on how your models are being used and how they are performing, enabling you and your stakeholders to make data-driven and responsible decisions around the use of AI applications. Model invocation logs need to be enabled, and you can decide whether you want to store this log data in an S3 bucket or CloudWatch logs.
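Model invocation logging is enabled through the `PutModelInvocationLoggingConfiguration` API. The following sketch builds a configuration that always delivers logs to an S3 bucket and optionally also to CloudWatch Logs; the bucket name, key prefix, log group, and role ARN in the usage example are placeholders, and logging only text data is an assumption made to keep the example small.

```python
from typing import Optional


def build_invocation_logging_config(
    bucket_name: str,
    key_prefix: str,
    log_group_name: Optional[str] = None,
    role_arn: Optional[str] = None,
) -> dict:
    """Assemble keyword arguments for PutModelInvocationLoggingConfiguration."""
    logging_config = {
        "s3Config": {"bucketName": bucket_name, "keyPrefix": key_prefix},
        "textDataDeliveryEnabled": True,
        "imageDataDeliveryEnabled": False,
        "embeddingDataDeliveryEnabled": False,
    }
    # CloudWatch Logs delivery needs both a log group and a role Bedrock can assume.
    if log_group_name and role_arn:
        logging_config["cloudWatchConfig"] = {
            "logGroupName": log_group_name,
            "roleArn": role_arn,
        }
    return {"loggingConfig": logging_config}


config = build_invocation_logging_config("example-log-bucket", "bedrock/invocations")
# With credentials configured:
#   boto3.client("bedrock").put_model_invocation_logging_configuration(**config)
```

Because these logs contain prompts and model responses, apply the same access controls and encryption to the destination as you would to any other sensitive data store.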

From a compliance perspective, Amazon Bedrock is in scope for common compliance standards, including ISO, SOC, FedRAMP moderate, PCI, ISMAP, and CSA STAR Level 2, and is Health Insurance Portability and Accountability Act (HIPAA) eligible. You can also use Amazon Bedrock in compliance with the General Data Protection Regulation (GDPR). Amazon Bedrock is included in the Cloud Infrastructure Service Providers in Europe Data Protection Code of Conduct (CISPE CODE) Public Register. This register provides independent verification that Amazon Bedrock can be used in compliance with the GDPR. For the most up-to-date information about whether Amazon Bedrock is within the scope of specific compliance programs, see AWS services in Scope by Compliance Program and choose the compliance program you’re interested in.

Implementing responsible AI in Amazon Bedrock applications

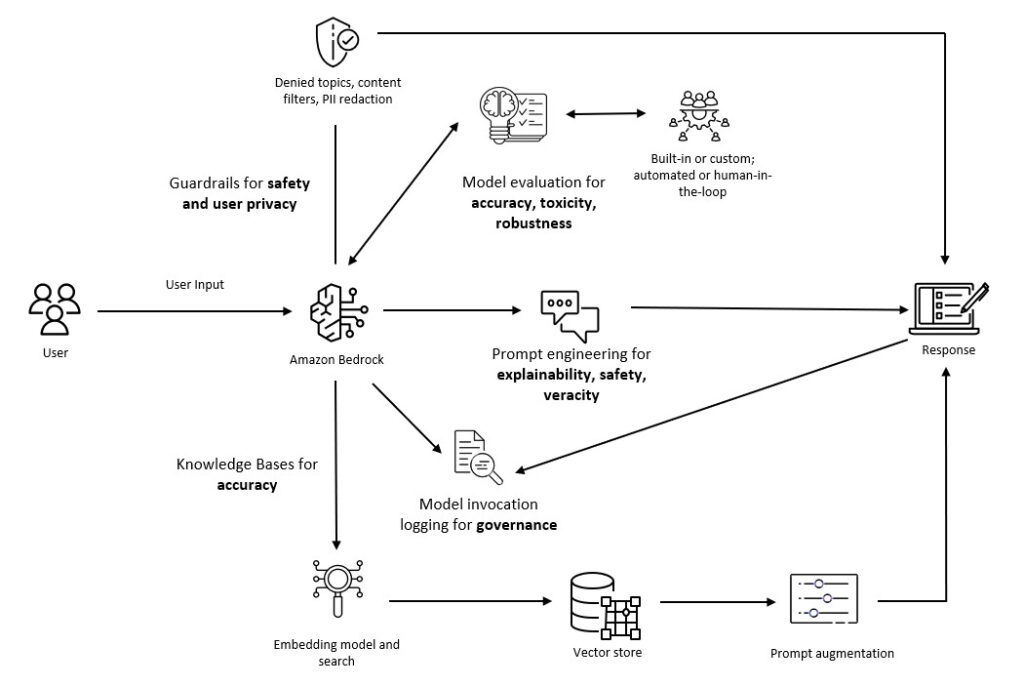

When building applications in Amazon Bedrock, consider your application context and the needs and behaviors of your end users. Also consider your organization’s needs, legal and regulatory requirements, and the metrics you want or need to collect when implementing responsible AI. Take advantage of the managed and built-in features available. The accompanying diagram outlines various measures you can implement to address the core dimensions of responsible AI. This is not an exhaustive list, but rather a proposal for how the measures mentioned in this post could be combined. These measures include:

- Model evaluation – Use model evaluation to assess fairness, accuracy, toxicity, robustness, and other metrics to evaluate your chosen FM and its performance.

- Amazon Bedrock Guardrails – Use Amazon Bedrock Guardrails to establish content filters, denied topics, word filters, sensitive information filters, and contextual grounding. With guardrails, you can guide model behavior by denying any unsafe or harmful topics or words and protect the safety of your end-users.

- Prompt engineering – Utilize prompt engineering techniques, such as CoT, to improve explainability, veracity and robustness, and safety and controllability of your AI application. With prompt engineering, you can set a desired structure for the model response, including tone, scope, and length of responses. You can emphasize safety and controllability by adding denied topics to the prompt template.

- Amazon Bedrock Knowledge Bases – Use Amazon Bedrock Knowledge Bases for end-to-end RAG implementation to decrease hallucinations and improve accuracy of the model for internal data use cases. Using RAG will improve veracity and robustness, safety and controllability, and explainability of your AI application.

- Logging and monitoring – Maintain comprehensive logging and monitoring to enforce effective governance.

Diagram outlining the various measures you can implement to address the core dimensions of responsible AI.
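To make the guardrails measure from the list above more concrete, here is a minimal sketch that assembles a guardrail definition (denied topics, content filters, a sensitive information filter, and contextual grounding) and then attaches a guardrail to a Converse API request. The helper names, the blocked-message wording, the filter strengths, and the grounding threshold are illustrative assumptions. The dictionary keys follow the request shapes of the Amazon Bedrock `create_guardrail` and `converse` operations.

```python
def build_guardrail_request(name, denied_topics, grounding_threshold=0.75):
    """Build keyword arguments for the Bedrock create_guardrail call.

    denied_topics maps a topic name to a short definition (hypothetical input).
    """
    harmful_categories = ("HATE", "INSULTS", "SEXUAL", "VIOLENCE", "MISCONDUCT")
    return {
        "name": name,
        "topicPolicyConfig": {
            "topicsConfig": [
                {"name": topic, "definition": definition, "type": "DENY"}
                for topic, definition in denied_topics.items()
            ]
        },
        "contentPolicyConfig": {
            "filtersConfig": [
                {"type": category, "inputStrength": "HIGH", "outputStrength": "HIGH"}
                for category in harmful_categories
            ]
        },
        "sensitiveInformationPolicyConfig": {
            "piiEntitiesConfig": [{"type": "EMAIL", "action": "ANONYMIZE"}]
        },
        "contextualGroundingPolicyConfig": {
            "filtersConfig": [{"type": "GROUNDING", "threshold": grounding_threshold}]
        },
        "blockedInputMessaging": "Sorry, I can't help with that request.",
        "blockedOutputsMessaging": "Sorry, I can't share that response.",
    }


def build_converse_request(model_id, user_text, guardrail_id, guardrail_version="DRAFT"):
    """Build keyword arguments for bedrock-runtime converse with a guardrail."""
    return {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": user_text}]}],
        "guardrailConfig": {
            "guardrailIdentifier": guardrail_id,
            "guardrailVersion": guardrail_version,
            "trace": "enabled",  # include guardrail assessment details in the response
        },
    }


# Example usage (assumes boto3, credentials, and model access):
# import boto3
# request = build_guardrail_request(
#     "support-assistant-guardrail",
#     {"Investment advice": "Recommendations about buying or selling financial assets."},
# )
# created = boto3.client("bedrock").create_guardrail(**request)
# runtime = boto3.client("bedrock-runtime")
# reply = runtime.converse(**build_converse_request(
#     "anthropic.claude-3-haiku-20240307-v1:0",  # placeholder model ID
#     "Which stocks should I buy?",
#     created["guardrailId"],
# ))
```

Because the guardrail is referenced per request, the same definition can be reused across different FMs and applications.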

Conclusion

Building responsible AI applications requires a deliberate and structured approach, iterative development, and continuous effort. Amazon Bedrock offers a robust suite of built-in capabilities that support the development and deployment of responsible AI applications. By providing customizable features and the ability to integrate your own datasets, Amazon Bedrock enables developers to tailor AI solutions to their specific application contexts and align them with organizational requirements for responsible AI. This flexibility helps make sure AI applications are not only effective, but also ethical and aligned with best practices for fairness, safety, transparency, and accountability.

Implementing AI by following the responsible AI dimensions is key to developing and using AI solutions transparently and without bias. Responsible development of AI will also help with AI adoption across your organization and build trust with end customers. The broader the use and impact of your application, the more important it becomes to follow the responsible AI framework. Therefore, consider and address the responsible use of AI early in your AI journey and throughout its lifecycle.

To learn more about the responsible use of ML framework, refer to the following resources:

- Responsible Use of ML

- AWS generative AI best practices framework v2

- Building Generative AI prompt chaining workflows with human in the loop

- Foundation Model Evaluations Library

About the Authors

Laura Verghote is a senior solutions architect for public sector customers in EMEA. She works with customers to design and build solutions in the AWS Cloud, bridging the gap between complex business requirements and technical solutions. She joined AWS as a technical trainer and has wide experience delivering training content to developers, administrators, architects, and partners across EMEA.

Maria Lehtinen is a solutions architect for public sector customers in the Nordics. She works as a trusted cloud advisor to her customers, guiding them through cloud system development and implementation with a strong emphasis on AI/ML workloads. She joined AWS through an early-career professional program and previously worked as a cloud consultant at one of the AWS Advanced Consulting Partners.
