In recent years, AI-powered communication has rapidly evolved, yet challenges persist in optimizing real-time reasoning and efficiency. Many natural language models today, while impressive in generating human-like responses, struggle with inference speed, adaptability, and scalable reasoning capabilities. These shortcomings often leave developers facing high costs and latency issues, limiting the practical use of AI models in dynamic environments. Users expect seamless, intelligent interaction, but traditional AI tools fall short of providing quick, adaptable, and resource-efficient responses, particularly at scale. Addressing these issues requires not only innovative architectural changes but also new methods for optimizing inference, all while maintaining model quality.

Forge Reasoning API Beta and Nous Chat

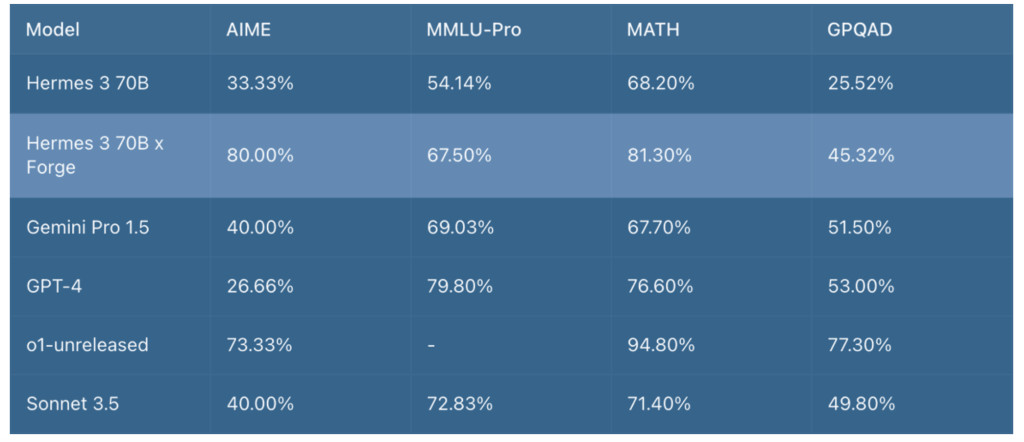

Nous Research introduces two new projects: the Forge Reasoning API Beta and Nous Chat, a simple chat platform featuring the Hermes language model. The Forge Reasoning API contains some of Nous’ advancements in inference-time AI research, building on their journey from the original Hermes model. The Hermes language model has been known for its capabilities in understanding context and generating coherent responses, but the Forge Reasoning API takes these capabilities further, making the deployment of advanced reasoning processes more feasible in real-time applications. Nous Chat, on the other hand, provides a streamlined chat experience, leveraging the Hermes model to allow users to witness the improved capabilities in conversational settings. Both of these projects signify a leap towards bridging the gap between user expectations of responsiveness and the technical demands of complex AI models.

Technical Details

The Forge Reasoning API Beta is designed with inference optimization in mind, focusing on delivering highly contextual responses with minimal latency. It does this through heuristics and architectural improvements over traditional models. One significant enhancement is the dynamic adaptation of inference paths within the model, which lets it allocate resources more intelligently during response generation. This reduces computational overhead, which translates into faster response times without sacrificing the depth or coherence of reasoning. Additionally, embedding the Hermes model in Nous Chat makes the model more accessible for general use and showcases its robustness in typical conversational scenarios, while benefiting from the enhanced inference capabilities provided by Forge. These advancements improve user experience through quicker responses and allow for more scalable deployment, making the models suitable for enterprise-level applications that require real-time reasoning.

Impact

These technical advancements matter because they address the efficiency and scalability issues affecting many modern language models. By refining inference-time techniques, Nous Research is pushing the envelope on what can be achieved with large language models in practical applications. Preliminary testing indicates that the Forge Reasoning API reduces response latency by nearly 30% compared to earlier Hermes iterations. This improvement supports better end-user interaction and also reduces the cloud computing resources needed to deploy such AI systems effectively. Moreover, the simplicity of Nous Chat lets developers and general users alike experience a streamlined version of an advanced AI interaction, bridging the divide between highly technical capabilities and everyday usability.
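To make the latency figure concrete, here is a minimal Python sketch of how a percentage reduction is computed from two measurements. The function name and the millisecond values are hypothetical and chosen only to illustrate the arithmetic; they are not measurements from Nous Research.

```python
def latency_reduction_pct(baseline_ms: float, optimized_ms: float) -> float:
    """Return the percentage by which optimized latency is lower than baseline."""
    if baseline_ms <= 0:
        raise ValueError("baseline_ms must be positive")
    # Multiply before dividing to keep the result exact for round inputs.
    return (baseline_ms - optimized_ms) * 100.0 / baseline_ms


if __name__ == "__main__":
    # Hypothetical example values (assumptions, not reported results).
    baseline_ms = 1000.0
    optimized_ms = 700.0
    reduction = latency_reduction_pct(baseline_ms, optimized_ms)
    print(f"Latency reduced by {reduction:.1f}%")
```

Under these assumed numbers, a response that previously took one second would take about 0.7 seconds after a 30% reduction.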

Conclusion

Nous Research’s introduction of the Forge Reasoning API Beta and Nous Chat marks an important milestone in addressing some of the fundamental limitations of AI-driven communication. By enhancing inference-time efficiency and providing accessible, conversational AI experiences, these projects aim to set a new standard for real-time reasoning in AI. The innovations brought forward by the Forge Reasoning API and the integration of the Hermes model aim to make AI more adaptable, faster, and ultimately more practical for a wide range of applications. As Nous Research continues to refine these tools, further advances in conversational AI performance can be expected.

The post Nous Research Introduces Two New Projects: The Forge Reasoning API Beta and Nous Chat appeared first on MarkTechPost.
