The financial and banking industry can significantly enhance investment research by integrating generative AI into daily tasks like financial statement analysis. By taking advantage of advanced natural language processing (NLP) capabilities and data analysis techniques, you can streamline common tasks like these in the financial industry:

- Automating data extraction – The manual data extraction process to analyze financial statements can be time-consuming and prone to human error. Generative AI models can automate finding and extracting financial data from documents like 10-Ks, balance sheets, and income statements. Foundation models (FMs) are trained to identify and extract relevant information like expenses, revenue, and liabilities.

- Trend analysis and forecasting – Identifying trends and forecasting requires domain expertise and advanced mathematics. This limits the ability for individuals to run one-time reporting, while creating dependencies within an organization on a small subset of employees. Generative AI applications can analyze financial data and identify trends and patterns while forecasting future financial performance, all without manual intervention from an analyst. Removing the manual analysis step and allowing the generative AI model to build a report analyzing trends in the financial statement can increase the organization’s agility to make quick market decisions.

- Financial reporting statements – Writing detailed financial analysis reports manually can be time-consuming and resource intensive. Dedicating resources to generating financial statements can create bottlenecks within the organization, requiring specialized roles to handle the translation of financial data into a consumable narrative. FMs can summarize financial statements, highlighting key metrics found through trend analysis and providing insights. An automated report writing process not only provides consistency and speed, but also minimizes resource constraints in the financial reporting process.

Amazon Bedrock is a fully managed service that makes FMs from leading AI startups and Amazon available through an API, so you can choose from a wide range of FMs to find the model that is best suited for your use case. Amazon Bedrock offers a serverless experience, so you can get started quickly, privately customize FMs with your own data, and quickly integrate and deploy them into your applications using AWS tools without having to manage infrastructure.

In this post, we demonstrate how to deploy a generative AI application that can accelerate your financial statement analysis on AWS.

Solution overview

Building a generative AI application with Amazon Bedrock to analyze financial statements involves a series of steps, from setting up the environment to deploying the model and integrating it into your application.

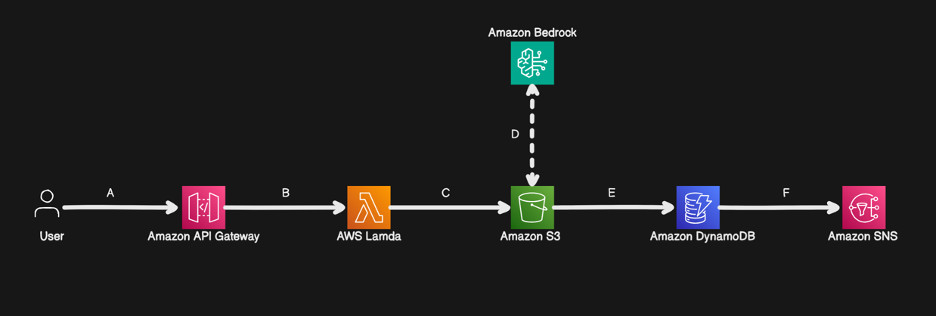

The following diagram illustrates an example solution architecture using AWS services.


The workflow consists of the following steps:

- The user interfaces with a web or mobile application, where they upload financial documents.

- Amazon API Gateway manages and routes the incoming request from the UI.

- An AWS Lambda function is invoked when new documents are added to the Amazon Simple Storage Service (Amazon S3) bucket.

- Amazon Bedrock analyzes the documents stored in Amazon S3. The analysis results are returned to the S3 bucket through a Lambda function and stored there.

- Amazon DynamoDB provides a fast, scalable way to store and retrieve metadata and analysis results to display to users.

- Amazon Simple Notification Service (Amazon SNS) sends notifications about the status of document processing to the application user.
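As a rough illustration of the Lambda step in the workflow above, the following minimal sketch shows how a handler might read the bucket name and object key from an S3 event notification. The function name `extract_s3_objects` and the return shape are assumptions made for this example, and the calls to Amazon Bedrock, DynamoDB, and Amazon SNS are only indicated in a comment.

```python
import urllib.parse


def extract_s3_objects(event):
    """Return (bucket, key) pairs for every S3 record in an event notification."""
    objects = []
    for record in event.get("Records", []):
        s3_info = record.get("s3")
        if not s3_info:
            continue  # skip records that are not S3 notifications
        bucket = s3_info["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 events (spaces become '+').
        key = urllib.parse.unquote_plus(s3_info["object"]["key"])
        objects.append((bucket, key))
    return objects


def lambda_handler(event, context):
    """Entry point that Lambda calls when a document lands in the bucket."""
    documents = extract_s3_objects(event)
    # Hypothetical next steps (not shown): read each document, send it to
    # Amazon Bedrock, store the results in DynamoDB, and publish a status
    # message through Amazon SNS.
    return {"documents": [{"bucket": b, "key": k} for b, k in documents]}
```

Keeping the event parsing in its own function makes it possible to test the handler locally with a sample event before wiring it to a real bucket.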

In the following sections, we discuss the key considerations in each step to build and deploy a generative AI application.

Prepare the data

Gather the financial statements you want to analyze. These can be balance sheets, income statements, cash flow statements, and so on. Make sure the data is clean and in a consistent format. You might need to preprocess the data to remove noise and standardize the format. Preprocessing the data will transform the raw data into a state that can be efficiently used for model training. This is often necessary due to messiness and inconsistencies in real-world data. The outcome is to have consistent data for the model to ingest. The two most common types of data preprocessing are normalization and standardization.

Normalization rescales the numerical columns within a dataset to a common scale, typically the range 0–1 (min-max scaling). Each value is shifted by the column minimum and divided by the column range, so features measured in different units become directly comparable. Because the minimum and maximum define the scale, this method is sensitive to outliers, which you might need to handle before scaling. When dealing with a significant amount of data, normalizing the dataset can improve the performance of a machine learning model in environments where feature distribution is unclear.
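As a minimal sketch of min-max normalization, assuming a single numeric column held in a Python list, the following example rescales values to the range 0–1. The revenue figures are made up for illustration.

```python
def min_max_normalize(values):
    """Rescale a list of numbers linearly so the smallest is 0 and the largest is 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant column has no range to scale; map every value to 0.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Made-up quarterly revenue figures, in millions.
quarterly_revenue = [120.0, 150.0, 180.0, 240.0]
print(min_max_normalize(quarterly_revenue))  # [0.0, 0.25, 0.5, 1.0]
```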

Standardization is a method designed to rescale the values of a dataset so that each feature has a mean of 0 and a standard deviation of 1, matching the location and scale of a standard normal distribution. Putting features on a common scale in this way makes the data simpler to process, analyze, and compare. Standardization is beneficial when feature distribution is consistent and values on a scale aren’t constrained within a particular range.
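A comparable sketch for standardization, again assuming one numeric column in a Python list, subtracts the mean and divides by the population standard deviation. The expense figures are invented for the example.

```python
import statistics


def standardize(values):
    """Rescale a list of numbers to have mean 0 and standard deviation 1."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)  # population standard deviation
    if std == 0:
        # A constant column has no spread; map every value to 0.
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]


# Made-up monthly expense figures, in thousands.
monthly_expenses = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
scaled = standardize(monthly_expenses)
print(scaled)
```

Unlike min-max normalization, the result is not bounded to a fixed range, so unusually large or small values remain visible as large positive or negative scores.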

Choose your model

Amazon Bedrock gives you the power of choice by providing a flexible and scalable environment that allows you to access and use multiple FMs from leading AI model providers. This flexibility enables you to select the most appropriate models for your specific use cases, whether you’re working on tasks like NLP, text generation, image generation, or other AI-driven applications.
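To compare the available options programmatically, one approach is to list the FMs that produce text output. The following is a minimal sketch that assumes you pass in a Boto3 `bedrock` control-plane client; the helper name `list_text_models` is hypothetical.

```python
def list_text_models(bedrock_client):
    """Return sorted (provider, model ID) pairs for FMs that produce text output."""
    response = bedrock_client.list_foundation_models(byOutputModality="TEXT")
    return sorted(
        (summary["providerName"], summary["modelId"])
        for summary in response["modelSummaries"]
    )
```

With AWS credentials configured, you could call it as `list_text_models(boto3.client("bedrock"))`. The models returned depend on your AWS Region and account.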

Deploy the model

If you don’t already have access to Amazon Bedrock FMs, you’ll need to request access through the Amazon Bedrock console. Then you can use the Amazon Bedrock console to deploy the chosen model. Configure the deployment settings according to your application’s requirements.

Develop the backend application

Create a backend service to interact with the deployed model. This service will handle requests from the frontend, send data to the model, and process the model’s responses. You can use Lambda, API Gateway, or other preferred REST API endpoints.

Use the Amazon Bedrock API to send financial statements to the model and receive the analysis results.

The following is an example of the backend code.
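The original code listing is not available here, so the following is only a minimal sketch of what such a backend call might look like, not the author's implementation. It assumes a Boto3 `bedrock-runtime` client and the Converse API; the prompt wording, the function names `build_messages` and `analyze_statement`, and the inference settings are illustrative assumptions.

```python
def build_messages(statement_text):
    """Wrap the financial statement text in a single user message."""
    prompt = (
        "You are a financial analyst. Summarize the key metrics and trends "
        "in the following financial statement:\n\n" + statement_text
    )
    return [{"role": "user", "content": [{"text": prompt}]}]


def analyze_statement(bedrock_runtime, model_id, statement_text, max_tokens=1024):
    """Send a statement to the chosen model and return the text of its reply."""
    response = bedrock_runtime.converse(
        modelId=model_id,
        messages=build_messages(statement_text),
        # Illustrative settings; tune them for your use case.
        inferenceConfig={"maxTokens": max_tokens, "temperature": 0.2},
    )
    # The Converse API returns the reply as a list of content blocks.
    blocks = response["output"]["message"]["content"]
    return "".join(block.get("text", "") for block in blocks)
```

In a Lambda function, you might create the client once outside the handler with `boto3.client("bedrock-runtime")` and pass it in together with the ID of a model you have access to. Passing the client as a parameter also lets you test the function with a stub.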

Develop the frontend UI

Create a frontend interface for users to upload financial statements and view analysis results. This can be a web or mobile application. Make sure the frontend can send financial statement data to the backend service and display the analysis results.

Conclusion

In this post, we discussed the benefits of building a generative AI application powered by Amazon Bedrock to accelerate the analysis of financial documents. Stakeholders can use AWS services to deploy and manage LLMs that help improve the efficiency of pulling insights from common documents like 10-Ks, balance sheets, and income statements.

For more information on working with generative AI on AWS, visit the AWS Skill Builder generative AI training modules.

For instructions on building frontend applications and full-stack applications powered by Amazon Bedrock, refer to Front-End Web & Mobile on AWS and Create a Fullstack, Sample Web App powered by Amazon Bedrock.

About the Author

Jason D’Alba is an AWS Solutions Architect leader focused on enterprise applications, helping customers architect highly available and scalable data and AI solutions.
