Artificial intelligence (AI) is poised to affect every aspect of the world economy and play a significant role in the global financial system, leading financial regulators around the world to take various steps to address the impact of AI on their areas of responsibility. The economic risks of AI to the financial systems include everything from the potential for consumer and institutional fraud to algorithmic discrimination and AI-enabled cybersecurity risks. The impacts of AI on consumers, banks, nonbank financial institutions, and the financial system’s stability are all concerns to be investigated and potentially addressed by regulators.

Perficient’s Financial Services consultants aim to give financial services executives, whether they lead banks, bank branches, bank holding companies, broker-dealers, financial advisors, insurance companies or investment management firms, the knowledge they need to understand the current status of AI regulation and the risk and regulatory trends in AI regulation, not only in the US but around the world, wherever their firms are likely to have investment and trading operations.

EU Regulations

European Union lawmakers signed the Artificial Intelligence (“AI”) Act in June 2024. The AI Act, the first binding worldwide horizontal regulation on AI, sets a common framework for the use and supply of AI systems by financial institutions in the European Union.

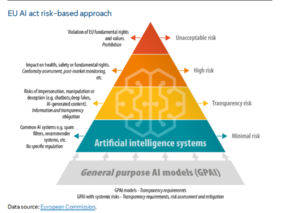

The new act offers a classification for AI systems with different requirements and obligations tailored to a ‘risk-based approach’. In our opinion, the proposed risk-based system will be very familiar to bankers who remember the original rollout and asset-based classification system required by regulators in the original Basel risk-based capital requirements of the early 1990s. Some AI systems presenting ‘unacceptable’ risks are outright prohibited regardless of controls. A wide range of ‘high-risk’ AI systems that can have a detrimental impact on people’s health, safety or fundamental rights are permitted, but subject to a set of requirements and obligations to gain access to the EU market. AI systems posing limited risks because of their lack of transparency will be subject to information and transparency requirements, while AI systems presenting what are classified as “minimal risks” are not subjected to further obligations.

The regulation also lays down specific rules for General Purpose AI (GPAI) models, with more stringent requirements for GPAI models with “high-impact capabilities” that could pose a systemic risk and have a significant impact on the EU marketplace. The AI Act was published in the EU’s Official Journal on July 12, 2024, and entered into force on August 1, 2024.

The EU AI act adopts a risk-based approach and classifies AI systems into several risk categories, with different degrees of regulation applying.

Prohibited AI practices

The final text prohibits a wider range of AI practices than originally proposed by the Commission because of their harmful impact:

- AI systems using subliminal or manipulative or deceptive techniques to distort people’s or a group of people’s behavior and impair informed decision making, leading to significant harm;

- AI systems exploiting vulnerabilities due to age, disability, or social or economic situations, causing significant harm;

- Biometric categorization systems inferring race, political opinions, trade union membership, religious or philosophical beliefs, sex life, or sexual orientation;

- AI systems evaluating or classifying individuals or groups based on social behavior or personal characteristics, leading to detrimental treatment in unrelated contexts or to treatment that is unjustified or disproportionate to their behavior;

- AI systems assessing the risk of individuals committing criminal offences based solely on profiling or personality traits;

- AI systems creating or expanding facial recognition databases through untargeted scraping from the Internet or CCTV footage; and

- AI systems inferring emotions in workplaces or educational institutions.

High-risk AI systems

The AI act identifies a number of use cases in which AI systems are to be considered high-risk because they can potentially create an adverse impact on people’s health, safety or their fundamental rights.

- The risk classification is based on the intended purpose of the AI system. The function performed by the AI system and the specific purpose and modalities for which the system is used are key to determining whether an AI system is high-risk or not. High-risk AI systems can be safety components of products covered by sectoral EU law (e.g. medical devices) or AI systems that, as a matter of principle, are classified as high risk when they are used in specific areas listed in an annex of the regulation. The Commission is tasked with maintaining an EU database for the high-risk AI systems listed in this annex.

- A new test has been enshrined at the Parliament’s request (‘filter provision’), according to which AI systems will not be considered high risk if they do not pose a significant risk of harm to the health, safety or fundamental rights of natural persons. However, an AI system will always be considered high risk if the AI system performs profiling of natural persons.

- Providers of such high-risk AI systems will have to run a conformity assessment procedure before their products can be sold and used in the EU. They will need to comply with a range of requirements including testing, data training and cybersecurity and, in some cases, will have to conduct a fundamental rights impact assessment to ensure their systems comply with EU law. The conformity assessment should be conducted either based on internal control (self-assessment) or with the involvement of a notified body (e.g. biometrics). Compliance with European harmonized standards to be developed will grant high-risk AI systems providers a presumption of conformity. After such AI systems are placed in the market, providers must implement post-market monitoring and take corrective actions if necessary.
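The classification logic in the bullets above can be read as a small decision rule. The Python sketch below is one simplified interpretation, for illustration only. The `AISystem` class, its field names, the `is_high_risk` function and the two example systems are all hypothetical and do not come from the regulation, and applying the profiling override only to annex-listed uses is an assumption about how the two tests combine.

```python
from dataclasses import dataclass


@dataclass
class AISystem:
    """Hypothetical record of the facts the high-risk test depends on."""
    name: str
    is_safety_component: bool     # safety component of a product under sectoral EU law
    in_annex_listed_area: bool    # used in one of the areas listed in the annex
    poses_significant_risk: bool  # significant risk to health, safety or fundamental rights
    performs_profiling: bool      # profiles natural persons


def is_high_risk(system: AISystem) -> bool:
    """Simplified reading of the high-risk test (an assumption, not a legal determination)."""
    if system.is_safety_component:
        return True
    if not system.in_annex_listed_area:
        return False
    if system.performs_profiling:
        # Profiling of natural persons overrides the filter provision.
        return True
    # Filter provision: an annex-listed use without significant risk is not high risk.
    return system.poses_significant_risk


# Made-up inputs, chosen only to exercise both branches of the filter provision.
example_profiling_tool = AISystem("example profiling tool", False, True, False, True)
example_filing_tool = AISystem("example filing tool", False, True, False, False)

print(is_high_risk(example_profiling_tool))  # True
print(is_high_risk(example_filing_tool))     # False
```

A firm's actual classification would turn on the text of the regulation and the annex itself; the sketch only shows the order in which the tests described above might be applied.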

Transparency risk

Certain AI systems intended to interact with natural persons or to generate content may pose specific risks of impersonation or deception, irrespective of whether they qualify as high-risk AI systems or not. Such systems are subject to information and transparency requirements. Users must be made aware that they interact with chatbots. Deployers of AI systems that generate or manipulate image, audio or video content (i.e. deep fakes), must disclose that the content has been artificially generated or manipulated except in very limited cases (e.g. when it is used to prevent criminal offences). Providers of AI systems that generate large quantities of synthetic content must implement sufficiently reliable, interoperable, effective and robust techniques and methods (such as watermarks) to enable marking and detection that the output has been generated or manipulated by an AI system and not a human. Employers who deploy AI systems in the workplace must inform the workers and their representatives.

Minimal risks

Systems presenting minimal risk for people (e.g. spam filters) are not subject to further obligations beyond currently applicable legislation (e.g. GDPR).

General-purpose AI (GPAI)

The regulation provides specific rules for general purpose AI models and for general-purpose AI models that pose systemic risks.

GPAI system transparency requirements

All providers of GPAI models will have to draw up and maintain up-to-date technical documentation and make information and documentation available to downstream providers of AI systems. All providers of GPAI models have to implement a policy to respect EU copyright law, including through state-of-the-art technologies (e.g. watermarking), to carry out lawful text- and data-mining exceptions as envisaged under the Copyright Directive. In addition, GPAI providers must draw up and make publicly available a sufficiently detailed summary of the content used in training the GPAI models according to a template provided by the AI Office. Financial Institutions headquartered outside the EU will have to appoint a representative in the EU. However, AI models made accessible under a free and open-source license will be exempt from some of the obligations (i.e., disclosure of technical documentation) given they have, in principle, positive effects on research, innovation and competition.

Systemic-risk GPAI obligations

GPAI models with ‘high-impact capabilities’ could pose a systemic risk and have a significant impact due to their reach and their actual or reasonably foreseeable negative effects (on public health, safety, public security, fundamental rights, or society as a whole). GPAI providers must therefore notify the European Commission if their model is trained using a total computing power exceeding 10^25 FLOPs (i.e. the cumulative number of floating-point operations used in training, not operations per second). When this threshold is met, the presumption will be that the model is a GPAI model posing systemic risks. In addition to the requirements on transparency and copyright protection falling on all GPAI models, providers of systemic-risk GPAI models are required to constantly assess and mitigate the risks they pose and to ensure cybersecurity protection. That requires keeping track of, documenting, and reporting to regulators serious incidents and implementing corrective measures.
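To make the 10^25 threshold concrete, the following Python sketch treats it as a count of cumulative training operations and estimates that count as devices times sustained throughput times elapsed time. The function names, the accelerator count and the per-device throughput are hypothetical figures chosen for illustration; they are not taken from the regulation or from any real training run.

```python
# Threshold from the text above, treated as cumulative training operations.
SYSTEMIC_RISK_THRESHOLD = 10**25

SECONDS_PER_DAY = 86_400


def total_training_operations(num_accelerators: int,
                              sustained_ops_per_second: int,
                              training_days: int) -> int:
    """Cumulative operations = devices x sustained throughput x elapsed seconds."""
    return num_accelerators * sustained_ops_per_second * training_days * SECONDS_PER_DAY


def presumed_systemic_risk(training_operations: int) -> bool:
    """True when training compute exceeds the threshold (strictly greater)."""
    return training_operations > SYSTEMIC_RISK_THRESHOLD


# Hypothetical run: 10,000 accelerators, each sustaining 4e14 operations per second.
run_30_days = total_training_operations(10_000, 4 * 10**14, 30)
run_20_days = total_training_operations(10_000, 4 * 10**14, 20)

print(presumed_systemic_risk(run_30_days))  # True  (about 1.04e25 operations)
print(presumed_systemic_risk(run_20_days))  # False (about 6.9e24 operations)
```

Under these assumed figures, the same cluster crosses the threshold somewhere between 20 and 30 days of training, which shows why the measure is a running total rather than a hardware speed.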

Codes of practice and presumption of conformity

GPAI model providers will be able to rely on codes of practice to demonstrate compliance with the obligations set under the act. By means of implementing acts, the Commission may decide to approve a code of practice and give it a general validity within the EU, or alternatively, provide common rules for implementing the relevant obligations. Compliance with a European standard grants GPAI providers the presumption of conformity. Providers of GPAI models with systemic risks who do not adhere to an approved code of practice will be required to demonstrate adequate alternative means of compliance.
