Flow-based generative modeling stands out in computational science as a sophisticated approach that facilitates rapid and accurate inferences for complex, high-dimensional datasets. It is particularly relevant in domains requiring efficient inverse problem-solving, such as astrophysics, particle physics, and dynamical system predictions. In these fields, researchers work to understand and interpret complex data by developing models that can estimate posterior distributions of the probable underlying causes of observed phenomena. Traditional inference methods are often computationally intensive and time-consuming, motivating a search for advanced techniques that optimize both speed and accuracy in modeling efforts.

One major challenge in this field is the computational cost and complexity of posterior inference, particularly for high-dimensional datasets. Classical inference methods like Markov Chain Monte Carlo (MCMC) are reliable and precise but suffer from prohibitively long processing times, making them impractical for applications requiring near-real-time inference. Faster learned models, in turn, typically lack a feedback mechanism that checks their samples against the simulator, which limits their accuracy and their adaptability to new data. This challenge emphasizes the need for a solution that can retain the accuracy of traditional methods while significantly reducing the computational load.

Standard methods employed in flow-based generative modeling include normalizing flows and diffusion models. These approaches offer a pathway for transforming a simple noise distribution into a more complex posterior distribution, which models the underlying processes that generated the observed data. Normalizing flows support both sampling and likelihood evaluation, and diffusion models improve performance by iteratively transforming samples towards a target distribution, but neither is designed to use real-time feedback during sampling. Without a mechanism for simulator-based feedback, these models struggle to provide dynamically accurate results, leaving room for improvement in adaptability to complex, evolving datasets. Researchers have sought to bridge this gap through simulation-based inference (SBI) techniques, though even SBI techniques are constrained by data size and model complexity.
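To make the noise-to-posterior transformation concrete, here is a minimal sketch of sampling from a flow by integrating a velocity field with Euler steps. It is an illustration only: the 1-D Gaussian target and the names `MU`, `S`, `velocity`, and `sample` are assumptions, and the closed-form velocity (valid for a straight-line path between standard normal noise and a Gaussian target) stands in for what would normally be a trained network.

```python
# Hypothetical 1-D target "posterior": a Gaussian N(MU, S^2).
MU, S = 2.0, 0.5


def velocity(x, t):
    """Closed-form velocity for the path x_t = (1 - t) * noise + t * target.

    This analytic field is a stand-in for a learned flow network.
    """
    var_t = (1.0 - t) ** 2 + (t * S) ** 2          # variance of x_t
    slope = (t * S ** 2 - (1.0 - t)) / var_t       # Cov(target - noise, x_t) / Var(x_t)
    return MU + slope * (x - t * MU)


def sample(x0, n_steps=1000):
    """Transport a noise sample x0 from t = 0 to t = 1 with explicit Euler steps."""
    x = x0
    h = 1.0 / n_steps
    for i in range(n_steps):
        x += h * velocity(x, i * h)
    return x


# A noise draw of 1.0 should land close to MU + S * 1.0 under the target.
x1 = sample(1.0)
```

For this toy field the exact transport map is `MU + S * x0`, so the Euler result can be checked against it; with a learned network no such closed form is available and the integration is the only way to obtain samples.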

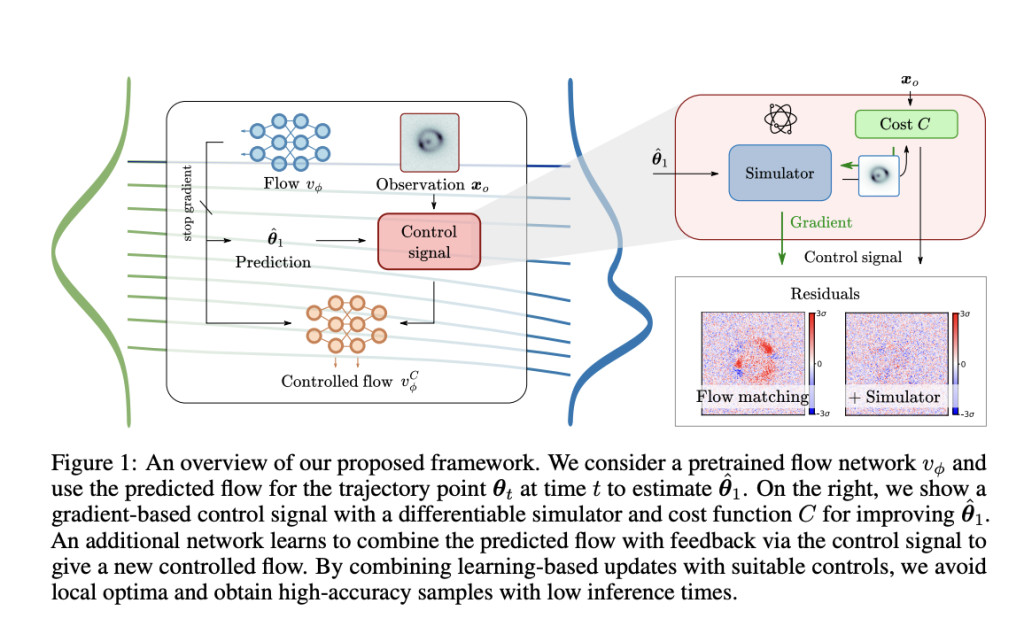

In a breakthrough approach, a research team from the Technical University of Munich introduced a refined method that integrates simulator control signals into the flow-based generative modeling process. This method combines a pretrained flow network with a smaller control network to incorporate real-time feedback from a simulator. The innovation lies in using gradient-based signals and learned cost functions to adjust model trajectories dynamically. This design allows for more accurate predictions without the need to extensively retrain or adjust the entire model, offering an efficient way to improve the precision of flow models in real-world applications.

The proposed method begins with a pretrained flow model, which receives feedback through a control network connected to a differentiable simulator. This setup allows the control network to adjust sample trajectories in real time using gradient-based information or learned cost functions. The control signals refine the flow network’s sampling process without requiring significant computational resources, thereby minimizing the model’s need for additional parameters and extensive retraining. By incorporating gradient-based and learned controls, the researchers achieved a method capable of higher sample accuracy with reduced inference time. The control network comprises only around 10% of the weights of the primary flow network, keeping the model efficient and scalable for larger datasets.
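The feedback loop described above can be sketched in a few lines. This is a toy under stated assumptions, not the authors' implementation: the linear `simulator`, the observation `Y_OBS`, the slightly biased analytic `flow_velocity`, and especially the fixed scalar `GAIN` (which replaces the learned control network) are all hypothetical. The sketch only shows the mechanism: estimate the trajectory endpoint, evaluate the simulator cost gradient there, and use it to correct the velocity.

```python
# "Pretrained" flow: targets N(MU, S^2), deliberately a little off from the data.
MU, S = 2.0, 0.5
Y_OBS = 6.3   # hypothetical observation; the toy simulator maps x = 2.1 to it
GAIN = 0.5    # fixed feedback gain, a stand-in for the learned control network


def flow_velocity(x, t):
    """Analytic stand-in for a pretrained flow network (straight-line path)."""
    var_t = (1.0 - t) ** 2 + (t * S) ** 2
    slope = (t * S ** 2 - (1.0 - t)) / var_t
    return MU + slope * (x - t * MU)


def simulator(x):
    """Toy differentiable forward model."""
    return 3.0 * x


def cost_gradient(x):
    """Gradient with respect to x of 0.5 * (simulator(x) - Y_OBS) ** 2."""
    return 3.0 * (simulator(x) - Y_OBS)


def sample(x0, controlled, n_steps=200):
    """Euler-integrate the flow, optionally correcting it with simulator feedback."""
    x = x0
    h = 1.0 / n_steps
    for i in range(n_steps):
        t = i * h
        v = flow_velocity(x, t)
        if controlled:
            x1_hat = x + (1.0 - t) * v            # one-step estimate of the endpoint
            v -= GAIN * cost_gradient(x1_hat)     # steer toward data consistency
        x += h * v
    return x


def mean_residual(controlled, noise=(-1.0, 0.0, 1.0)):
    """Average mismatch between simulated samples and the observation."""
    total = sum(abs(simulator(sample(z, controlled)) - Y_OBS) for z in noise)
    return total / len(noise)


baseline = mean_residual(controlled=False)
with_feedback = mean_residual(controlled=True)
```

In this toy, `with_feedback` comes out smaller than `baseline`. A fixed gain like this also shrinks the spread of the samples, which is one reason a learned control signal is preferable to a hand-tuned one when the goal is a faithful posterior.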

Performance evaluation of the proposed model revealed significant improvements over traditional inference methods. Tests on astrophysics applications, particularly strong gravitational lens systems, demonstrated the model’s ability to produce high-accuracy samples that were competitive with results from established MCMC methods. The researchers reported a 53% improvement in sample accuracy and inference up to 67 times faster than classical approaches. The model performed exceptionally well in tasks requiring precise modeling of posterior distributions, such as galaxy-scale gravitational lensing, where the correct interpretation of lensing effects is sensitive to dark matter distribution models. In comparison, methods like MCMC required extensive processing times, often exceeding several minutes per lens model, while the flow-matching approach with simulator feedback generated similarly accurate results in seconds. Quantitatively, the enhanced flow model with feedback achieved an average χ² statistic of 1.48, outperforming the affine-invariant ensemble sampling (AIES) baseline’s χ² score of 1.74.
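For readers unfamiliar with the χ² figures, the sketch below shows one common way such a statistic is computed: the reduced χ², which divides the noise-normalized squared residuals by the degrees of freedom. Whether the paper uses exactly this definition is an assumption; the function name `reduced_chi_squared` and the numbers in the example are made up for illustration.

```python
def reduced_chi_squared(observed, modeled, sigma, n_params):
    """Sum of squared, noise-normalized residuals divided by degrees of freedom."""
    if len(observed) != len(modeled):
        raise ValueError("observed and modeled must have the same length")
    dof = len(observed) - n_params
    if dof <= 0:
        raise ValueError("need more data points than fitted parameters")
    chi2 = sum(((o - m) / sigma) ** 2 for o, m in zip(observed, modeled))
    return chi2 / dof


# Illustrative values only: five data points, noise level 0.1, one fitted parameter.
observed = [1.0, 2.0, 3.0, 4.0, 5.0]
modeled = [1.1, 1.9, 3.2, 3.9, 5.0]
score = reduced_chi_squared(observed, modeled, sigma=0.1, n_params=1)
```

Under this definition, a value near 1 means the residuals are about as large as the assumed noise, while larger values point to a mismatch between the model and the observation.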

This research illustrates the potential of integrating control-based simulator feedback into flow-based generative models, enabling significant advancements in model accuracy without the need for large datasets or lengthy training. Refining flow networks with minimal computational costs, the proposed method addresses a long-standing challenge in simulation-based inference, especially in fields like astrophysics that require both accuracy and computational efficiency. These findings indicate that flow matching with simulator feedback can efficiently bridge the gap between traditional inference methods and advanced machine learning techniques, offering a robust solution for high-dimensional scientific inference tasks. This innovation promises broader applicability across other complex inverse problems in scientific fields that demand reliable and rapid inference, opening new opportunities for research and development in computational modeling.


The post This AI Paper from the Technical University of Munich Introduces a Novel Machine Learning Approach to Improving Flow-Based Generative Models with Simulator Feedback appeared first on MarkTechPost.