Understanding and analyzing long videos has been a significant challenge in AI, primarily because of the volume of data and the computational resources required. Traditional Multimodal Large Language Models (MLLMs) have difficulty processing extensive video content because their context length is limited. The problem is especially evident with hour-long videos, which need hundreds of thousands of tokens to represent their visual information, often exceeding the memory capacity of even advanced hardware. As a result, these models cannot provide consistent and comprehensive video understanding, which limits their real-world applications.

Meta AI Releases LongVU

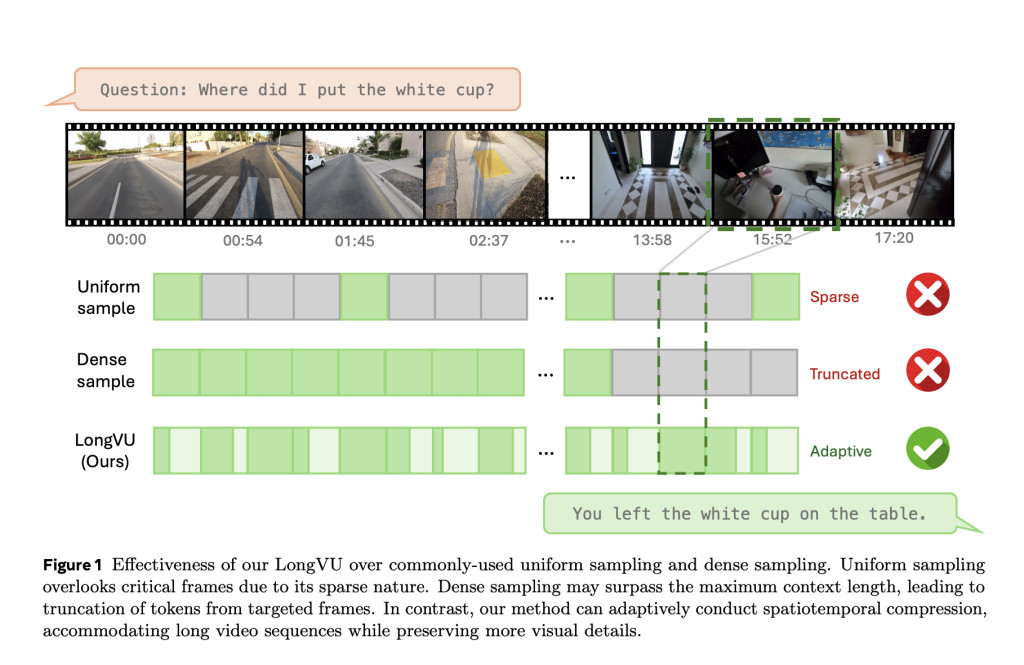

Meta AI has released LongVU, an MLLM designed to handle long video understanding within the context lengths that MLLMs commonly support. LongVU employs a spatiotemporal adaptive compression mechanism that reduces the number of video tokens while preserving essential visual details. By combining DINOv2 features with cross-modal queries, LongVU reduces spatial and temporal redundancy in video data, so long-form video sequences can be processed without losing critical information.

LongVU uses a selective frame feature reduction approach guided by text queries and leverages DINOv2’s self-supervised features to discard redundant frames. This method has a significant advantage over traditional uniform sampling techniques, which either lead to the loss of important information by discarding keyframes or become computationally infeasible by retaining too many tokens. The resulting MLLM has a lightweight design, allowing it to operate efficiently and achieve state-of-the-art results on video understanding benchmarks.
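The contrast with uniform sampling can be illustrated with a minimal sketch. It is not LongVU's actual procedure: the toy two-dimensional vectors stand in for DINOv2 frame embeddings, and the `drop_redundant_frames` helper and its `threshold` value are hypothetical names and settings chosen for illustration.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def drop_redundant_frames(features, threshold=0.95):
    """Return indices of frames to keep.

    A frame is dropped when it is nearly identical (cosine >= threshold)
    to the most recently kept frame. The threshold is an assumed value.
    """
    kept = []
    for i, feat in enumerate(features):
        if not kept or cosine(features[kept[-1]], feat) < threshold:
            kept.append(i)
    return kept

def uniform_sample(num_frames, stride):
    """Baseline: keep every `stride`-th frame regardless of content."""
    return list(range(0, num_frames, stride))

# Toy per-frame features (hypothetical stand-ins for DINOv2 embeddings):
# a static scene, a one-frame event, then a second static scene.
features = [
    [1.0, 0.0], [1.0, 0.01], [0.99, 0.0],  # near-identical frames
    [0.0, 1.0],                            # brief event at index 3
    [1.0, 1.0], [1.0, 0.99],               # second static scene
]

adaptive = drop_redundant_frames(features)
uniform = uniform_sample(len(features), stride=2)
print("similarity-based:", adaptive)  # [0, 3, 4]
print("uniform:", uniform)            # [0, 2, 4]
```

Both strategies keep three frames here, but the uniform baseline skips the brief event at index 3, while the similarity-based pass keeps it and discards the near-duplicates instead.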

Technical Details and Benefits of LongVU

LongVU’s architecture combines three steps: redundant-frame removal based on DINOv2 features, selective frame feature reduction through text-guided cross-modal queries, and spatial token reduction based on temporal dependencies. First, similarity between DINOv2 features is used to eliminate redundant frames, which reduces the token count. LongVU then applies a cross-modal query to prioritize frames relevant to the input text query. For the remaining frames, a spatial pooling mechanism further reduces the token representation while preserving the most important visual details.
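The text-guided step followed by pooling might look roughly like the sketch below. This is an illustration under stated assumptions, not the model's implementation: relevance is approximated by cosine similarity between a frame's mean token and a query embedding, and `select_and_reduce`, `keep_full`, and `pool_factor` are hypothetical names.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def mean_vector(tokens):
    """Element-wise mean of a list of token vectors."""
    dim = len(tokens[0])
    return [sum(t[d] for t in tokens) / len(tokens) for d in range(dim)]

def avg_pool(tokens, factor):
    """Average each consecutive group of `factor` tokens into one token."""
    return [mean_vector(tokens[start:start + factor])
            for start in range(0, len(tokens), factor)]

def select_and_reduce(frames, query, keep_full, pool_factor):
    """Keep all tokens for the `keep_full` frames most similar to the
    query embedding; average-pool the tokens of every other frame."""
    scores = [cosine(mean_vector(tokens), query) for tokens in frames]
    ranked = sorted(range(len(frames)), key=lambda i: scores[i], reverse=True)
    full = set(ranked[:keep_full])
    return [tokens if i in full else avg_pool(tokens, pool_factor)
            for i, tokens in enumerate(frames)]

# Three toy frames with four 2-d tokens each (hypothetical values).
frames = [
    [[1.0, 0.0]] * 4,  # unrelated to the query
    [[0.0, 1.0]] * 4,  # closely matches the query
    [[1.0, 1.0]] * 4,  # partially related
]
query = [0.0, 1.0]     # hypothetical text-query embedding

reduced = select_and_reduce(frames, query, keep_full=1, pool_factor=4)
print([len(tokens) for tokens in reduced])  # [1, 4, 1]
```

The query-relevant frame keeps all four tokens while the other two collapse to one token each, so the total drops from 12 tokens to 6 in this toy case.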

This approach maintains high performance even when processing hour-long videos. The spatial token reduction mechanism retains essential spatial information while eliminating redundant data. LongVU processes video sampled at one frame per second (1 fps) and reduces the token count to an average of two tokens per frame, which lets an hour-long sequence fit within an 8k context length, a common limit for MLLMs. The architecture balances token reduction with the preservation of crucial visual content, making it efficient for long video processing.
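The token budget behind these figures can be checked with simple arithmetic. In the sketch below, "8k" is assumed to mean 8,192 tokens, and the uncompressed figure of 144 tokens per frame is a hypothetical value used only for comparison; neither number is stated in the article.

```python
def tokens_needed(duration_s, fps, tokens_per_frame):
    """Total visual tokens for a video of the given length."""
    return duration_s * fps * tokens_per_frame

CONTEXT_LENGTH = 8192   # assumption: "8k" means 8,192 tokens
HOUR_SECONDS = 3600

# About two tokens per frame at 1 fps, as described above.
compressed = tokens_needed(HOUR_SECONDS, fps=1, tokens_per_frame=2)

# Hypothetical uncompressed budget of 144 tokens per frame.
uncompressed = tokens_needed(HOUR_SECONDS, fps=1, tokens_per_frame=144)

print(compressed, compressed <= CONTEXT_LENGTH)      # 7200 True
print(uncompressed, uncompressed <= CONTEXT_LENGTH)  # 518400 False
print(CONTEXT_LENGTH - compressed)                   # 992 tokens left for text
```

Under these assumptions an hour of video takes 7,200 visual tokens, leaving roughly a thousand tokens for the prompt and the answer, whereas the uncompressed case lands in the hundreds of thousands of tokens.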

Importance and Performance of LongVU

LongVU represents a significant step forward in long video understanding because it addresses the limited context length that constrains most MLLMs. Through spatiotemporal compression and cross-modal querying, LongVU achieves strong results on key video understanding benchmarks. For example, on the VideoMME benchmark, LongVU outperforms a strong baseline model, LLaVA-OneVision, by approximately 5% in overall accuracy. Even when scaled down to a lightweight version using the Llama3.2-3B language backbone, LongVU shows substantial gains, achieving a 3.4% improvement over previous state-of-the-art models in long video tasks.

LongVU’s robustness is further highlighted by its competitive results against proprietary models like GPT-4V. On the MVBench evaluation set, LongVU not only reduced the performance gap with GPT-4V but also surpassed it in some cases, demonstrating its effectiveness in understanding densely sampled video inputs. This makes LongVU particularly valuable for applications that require real-time video analysis, such as security surveillance, sports analysis, and video-based educational tools.

Conclusion

Meta AI’s LongVU is a major advancement in video understanding, especially for lengthy content. By using spatiotemporal adaptive compression, LongVU effectively addresses the challenges of processing videos with temporal and spatial redundancies, providing an efficient solution for long video analysis. Its superior performance across benchmarks highlights its edge over traditional MLLMs, paving the way for more advanced applications.

With its lightweight architecture and efficient compression, LongVU extends high-level video understanding to diverse use cases, including mobile and low-resource environments. By reducing computational costs without compromising accuracy, LongVU sets a new standard for future MLLMs.

Check out the Paper and Model on Hugging Face. All credit for this research goes to the researchers of this project.

The post Meta AI Releases LongVU: A Multimodal Large Language Model that can Address the Significant Challenge of Long Video Understanding appeared first on MarkTechPost.
