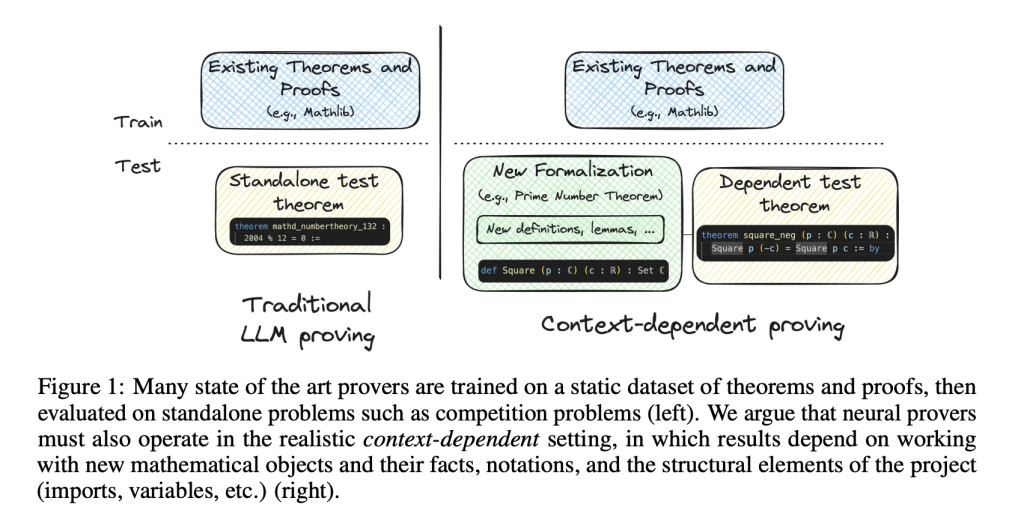

Formal theorem proving has emerged as a critical benchmark for assessing the reasoning capabilities of large language models (LLMs), with significant implications for mathematical automation. While these models show promise in assisting mathematicians through proof completion and formalization tools, a substantial challenge persists in bridging the gap between current evaluation methods and real-world theorem proving complexity. The disconnect between laboratory performance and practical applications raises concerns about the true effectiveness of LLM-based provers. Current methodologies often fail to capture the intricate nature of mathematical reasoning required in authentic theorem-proving scenarios, limiting their practical utility. This disparity highlights the need for more sophisticated evaluation frameworks that can accurately assess an LLM’s ability to handle the multifaceted challenges encountered in real mathematical proofs.

Various approaches have been developed to enhance language models’ theorem-proving capabilities. The earliest breakthrough came with next tactic prediction, where models generate the next proof step based on the current proof state. This was followed by more sophisticated methods like premise retrieval conditioning, which incorporates relevant mathematical premises into the generation process, and informal proof conditioning, which uses natural language proofs as guidance. Another notable approach involves fine-tuning models with file context, enabling them to generate complete proofs without intermediate proof states. While these methods demonstrated incremental improvements, they primarily focused on isolated aspects of theorem proving rather than addressing the full complexity of mathematical reasoning. Each approach brought specific innovations but remained limited in handling the comprehensive requirements of formal theorem proving.

Carnegie Mellon University researchers present MiniCTX, a robust benchmark system designed to revolutionize the evaluation of theorem-proving capabilities in large language models. The system introduces a comprehensive approach to context handling in theorem proving by incorporating multiple contextual elements that previous methods overlooked. This innovative framework specifically addresses the challenge of real-world theorem proving by integrating premises, prior proofs, comments, notation, and structural components like imports and declarations. The system is supported by NTP-TOOLKIT, an automated tool that extracts relevant theorems and contexts from Lean projects, ensuring continuous updates and preventing data contamination. This robust architecture represents a significant step forward in creating more realistic and practical theorem-proving evaluations.

MiniCTX’s architecture is built on a comprehensive dataset comprising 376 theorems drawn from six diverse mathematical projects, including the Prime Number Theorem, Polynomial Freiman-Ruzsa Conjecture, and scientific computing formalizations. The system’s structure revolves around three key components for each theorem: the theorem statement itself, the complete preceding file contents, and detailed metadata. The metadata component is particularly sophisticated, incorporating file information, version control data, positional context, premise relationships, module imports, and proof characteristics. This layered architecture enables precise context reconstruction, allowing users to access both in-file and cross-file contextual information. The system maintains all data in JSON format, ensuring accessibility and standardization. The implementation includes both self-contained theorems and those with complex dependencies across multiple files, creating a realistic representation of mathematical proof environments.

Experimental results demonstrate significant performance improvements when utilizing context-dependent methods in theorem proving. The file-tuned model, trained on comprehensive file contexts, achieved a 35.94% success rate compared to 19.53% for the state-tactic model that relied solely on proof states. Similarly, providing preceding file context to GPT-4o yielded a substantial improvement, reaching 27.08% compared to 11.72% with proof state alone. Premise selection showed varying effectiveness across different scenarios, notably improving performance on high cross-file dependency cases for GPT-4o, particularly in projects like PFR and SciLean. However, the file-tuned model showed inconsistent results with premise selection, suggesting challenges in effectively integrating cross-file context. Notably, when tested on the miniF2F benchmark, which focuses on standalone problems without contextual dependencies, the file-tuned model showed minimal improvement over the state-tactic model, highlighting the unique ability of miniCTX to evaluate context-dependent proving capabilities.

The research reveals several critical areas for future advancement in context-dependent theorem proving. Current limitations in handling long contexts, where truncation to meet token budgets potentially discards valuable information, present a significant challenge. The integration of repository-level context and cross-file dependencies remains particularly challenging, as current premise selection methods show inconsistent improvements. Also, the relatively low performance on complex proofs, especially those requiring more than five lines, indicates that handling sophisticated mathematical reasoning remains an open challenge. These findings underscore the need for more sophisticated approaches to context handling in automated theorem proving.

Check out the Paper and Project. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter and join our Telegram Channel and LinkedIn Group. If you like our work, you will love our newsletter.. Don’t Forget to join our 55k+ ML SubReddit.

[Upcoming Live Webinar- Oct 29, 2024] The Best Platform for Serving Fine-Tuned Models: Predibase Inference Engine (Promoted)

The post MiniCTX: Advancing Context-Dependent Theorem Proving in Large Language Models appeared first on MarkTechPost.

Source: Read MoreÂ