A major challenge in AI research is building models that efficiently balance fast, intuitive reasoning with slower, more deliberate reasoning. Human cognition is commonly described as using two systems: System 1, which is fast and intuitive, and System 2, which is slower but more analytical. In AI models, this dichotomy mostly appears as a trade-off between computational efficiency and accuracy. Fast models return results quickly but often sacrifice accuracy, while slow models achieve high accuracy at the price of heavy computation and long running times. Integrating the two modes seamlessly into a single model, so that it can make efficient decisions without losing performance, remains difficult. Overcoming this challenge would greatly broaden the applicability of AI to complex real-world tasks such as navigation, planning, and reasoning.

Current approaches to reasoning tasks generally rely on either rapid, intuitive decision-making or slow, deliberate processing. Fast models, such as Solution-Only models, output a solution without any intermediate reasoning steps; they are less accurate and often produce suboptimal solutions on complex tasks. Models that rely on slow, complete reasoning traces, such as Searchformer, achieve better accuracy but are inefficient, because their long reasoning traces carry a high computational cost. Most methods that combine the two modes, for example by distilling slow reasoning outputs into fast models, require additional fine-tuning or external controllers, which increases complexity and limits flexibility. The key limitation in the field remains the absence of a unified framework that can dynamically switch between fast and slow modes of reasoning.
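
To make the contrast between the two kinds of models concrete, here is a minimal sketch of the sort of A* search trace a Searchformer-style model learns to imitate, next to the plan-only target a Solution-Only model is trained on. The tuple format `(op, row, col, g, h)` and the names `astar_with_trace`, `solution_only_target`, and `searchformer_target` are our own simplifications for illustration, not the exact token vocabulary used in the papers.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]
Step = Tuple[str, int, int, int, int]  # (op, row, col, g, h); simplified, hypothetical format


def manhattan(a: Cell, b: Cell) -> int:
    """Admissible, consistent heuristic for 4-connected grids."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def astar_with_trace(grid: List[str], start: Cell, goal: Cell) -> Tuple[List[Step], List[Cell]]:
    """Run A* on a grid ('#' = wall) and log every search step.

    A 'create' step is logged when a cell is pushed onto the frontier and a
    'close' step when it is expanded; g is the cost from the start and h the
    heuristic estimate to the goal. Returns (trace, plan).
    """
    rows, cols = len(grid), len(grid[0])
    h0 = manhattan(start, goal)
    trace: List[Step] = [("create", start[0], start[1], 0, h0)]
    g_cost: Dict[Cell, int] = {start: 0}
    parent: Dict[Cell, Optional[Cell]] = {start: None}
    frontier = [(h0, 0, start)]  # heap of (f = g + h, g, cell)
    closed = set()

    while frontier:
        f, g, cell = heapq.heappop(frontier)
        if cell in closed:
            continue  # stale heap entry superseded by a cheaper path
        closed.add(cell)
        trace.append(("close", cell[0], cell[1], g, f - g))
        if cell == goal:
            break
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if not (0 <= nr < rows and 0 <= nc < cols) or grid[nr][nc] == "#":
                continue
            ng = g + 1
            if ng < g_cost.get(nxt, float("inf")):
                g_cost[nxt] = ng
                parent[nxt] = cell
                h = manhattan(nxt, goal)
                heapq.heappush(frontier, (ng + h, ng, nxt))
                trace.append(("create", nr, nc, ng, h))

    if goal not in closed:
        return trace, []  # goal unreachable
    plan: List[Cell] = []
    node: Optional[Cell] = goal
    while node is not None:  # walk parent pointers back to the start
        plan.append(node)
        node = parent[node]
    plan.reverse()
    return trace, plan


maze = ["...",
        ".#.",
        "..."]
trace, plan = astar_with_trace(maze, (0, 0), (2, 2))
solution_only_target = [("plan", r, c) for r, c in plan]  # Solution-Only: plan only
searchformer_target = trace + solution_only_target         # Searchformer: trace, then plan
```

The slow model has to emit every entry of `trace` before it reaches the plan, which is where its extra computational cost comes from; the fast model skips straight to the plan.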

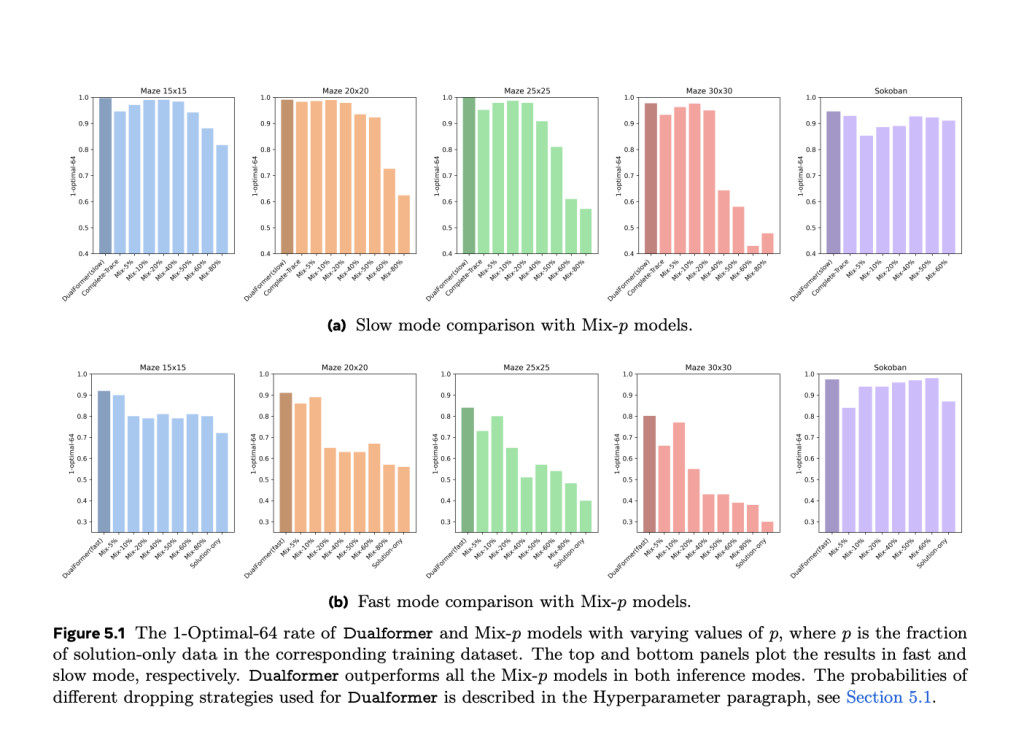

Researchers from Meta introduce Dualformer, a single transformer-based model that integrates both fast and slow reasoning. During training it uses randomized reasoning traces, so the model learns to operate both in a fast, solution-only mode and in a slower, trace-driven reasoning mode. Unlike earlier approaches, Dualformer can also adjust its reasoning procedure on its own according to task difficulty, flexibly switching between the modes. This design directly addresses the limitations of past models, improving computational efficiency while increasing reasoning accuracy. The model further reduces computational overhead through structured trace-dropping strategies that mimic the shortcuts humans take when making decisions.
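
How a single model can be steered into one mode or the other is easiest to see at decoding time. The sketch below assumes, as we understand the setup, that the mode is selected by forcing the first decoded token: a plan token for fast mode, a trace token for slow mode, and nothing for auto mode. The token strings, `MODE_PREFIX`, `generate`, and the `next_token` callable (a stand-in for a trained model's step function) are all hypothetical names for illustration.

```python
from typing import Callable, List

BOS, EOS = "<bos>", "<eos>"

# Hypothetical control prefixes: forcing the first token after BOS picks the mode.
MODE_PREFIX = {
    "fast": [BOS, "plan"],    # skip the reasoning trace, go straight to the solution
    "slow": [BOS, "create"],  # commit to emitting a search trace first
    "auto": [BOS],            # let the model decide for itself
}


def generate(next_token: Callable[[List[str], List[str]], str],
             prompt: List[str], mode: str, max_len: int = 256) -> List[str]:
    """Decode token by token after a forced, mode-dependent prefix."""
    if mode not in MODE_PREFIX:
        raise ValueError(f"unknown mode: {mode!r}")
    out = list(MODE_PREFIX[mode])  # copy so the shared prefix is never mutated
    while len(out) < max_len:
        tok = next_token(prompt, out)  # model predicts the next token
        out.append(tok)
        if tok == EOS:
            break
    return out
```

The appeal of this design is that no external controller is needed: the same weights serve every mode, and only the forced prefix differs.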

Dualformer is built on a systematic trace-dropping method in which reasoning traces are pruned, to varying and progressively more aggressive degrees, during training to instill efficiency. The strategy is trained on complex tasks such as maze navigation and Sokoban, using reasoning traces generated by the A* search algorithm. During training, parts of the trace (close nodes, cost tokens, and search steps) are selectively dropped to simulate much quicker decision processes. This randomization encourages the model to generalize well across tasks while remaining efficient in both the fast and slow reasoning modes. Dualformer uses an encoder-decoder architecture that handles these complex reasoning tasks while keeping computational costs as low as possible.
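
The trace-dropping idea can be sketched as a function applied to each training example. The cumulative levels below follow the order in which the paragraph lists the dropped parts (close nodes, then cost tokens, then search steps), plus a final level that drops the whole trace. The 30% create-drop rate, the uniform level weights, the `(op, row, col, g, h)` step format, and the names `drop_trace` and `sample_training_trace` are illustrative assumptions, not values confirmed by the article.

```python
import random
from typing import List, Sequence, Tuple

Step = Tuple  # ("create" | "close", row, col[, g, h]); hypothetical format


def drop_trace(trace: Sequence[Step], level: int, rng: random.Random,
               create_drop_rate: float = 0.3) -> List[Step]:
    """Apply a cumulative trace-dropping level to an A*-style trace.

    level 0: keep the full trace
    level 1: drop every 'close' step
    level 2: also strip the cost tokens (g, h) from 'create' steps
    level 3: also drop each remaining 'create' step with prob. create_drop_rate
    level 4: drop the whole trace (a solution-only example)
    """
    if level <= 0:
        return list(trace)
    if level >= 4:
        return []
    steps = [s for s in trace if s[0] != "close"]  # level >= 1
    if level >= 2:
        steps = [s[:3] for s in steps]  # keep (op, row, col), drop costs
    if level >= 3:
        steps = [s for s in steps if rng.random() >= create_drop_rate]
    return steps


def sample_training_trace(trace: Sequence[Step], rng: random.Random,
                          level_weights: Sequence[float] = (1, 1, 1, 1, 1)):
    """Draw a dropping level at random for one training example."""
    level = rng.choices(range(len(level_weights)), weights=level_weights, k=1)[0]
    return level, drop_trace(trace, level, rng)
```

Because a level is drawn independently for every example, the same model sees full traces, partial traces, and bare solutions within one training run; this is the randomization the article credits with making both modes efficient.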

Dualformer delivers strong results across a variety of reasoning tasks, outperforming state-of-the-art baselines in both accuracy and computational efficiency. In slow mode, it solves maze tasks optimally 97.6% of the time while using 45.5% fewer reasoning steps than the baseline Searchformer model. In fast mode, it reaches an 80% optimal solution rate, far ahead of the Solution-Only model, which reaches only 30%. In auto mode, where the model selects its own strategy, it maintains a high optimal rate of 96.6% while using nearly 60% fewer reasoning steps than Searchformer. These results show how Dualformer balances computational speed and accuracy, highlighting its robustness and flexibility on complex reasoning tasks.
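
The percentages above rest on two simple quantities. One plausible way to compute them is sketched below: an episode counts as optimal when its plan is valid and matches the A* cost, and the step reduction is one minus the ratio of mean trace lengths. These definitions, the `Episode` fields, and the toy numbers are assumptions for illustration; they are not the paper's data or its exact evaluation code.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Episode:
    plan_valid: bool   # plan reaches the goal without crossing walls
    plan_cost: int     # number of moves in the generated plan
    optimal_cost: int  # cost of the reference (A*) plan
    trace_len: int     # reasoning steps emitted before the plan (0 in fast mode)


def optimal_rate(episodes: Sequence[Episode]) -> float:
    """Fraction of episodes whose plan is valid and as short as the optimum."""
    hits = sum(1 for e in episodes if e.plan_valid and e.plan_cost == e.optimal_cost)
    return hits / len(episodes)


def step_reduction(model: Sequence[Episode], baseline: Sequence[Episode]) -> float:
    """Relative drop in mean trace length vs. a baseline (0.455 = 45.5% fewer)."""
    mean_model = sum(e.trace_len for e in model) / len(model)
    mean_base = sum(e.trace_len for e in baseline) / len(baseline)
    return 1.0 - mean_model / mean_base


# Toy numbers only, chosen to make the arithmetic easy to follow.
model = [Episode(True, 10, 10, 540), Episode(True, 12, 10, 550)]
baseline = [Episode(True, 10, 10, 1000), Episode(True, 10, 10, 1000)]
print(optimal_rate(model))                        # 0.5
print(round(step_reduction(model, baseline), 3))  # 0.455
```

With a mean of 545 trace steps against a baseline mean of 1,000, the reduction is 1 - 545/1000 = 0.455, which is how a figure such as "45.5% fewer reasoning steps" is read.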

In conclusion, Dualformer successfully integrates fast and slow reasoning in a single AI model. Because it is trained with randomized reasoning traces and structured trace-dropping strategies, it is efficient across reasoning modes and adapts dynamically to task complexity. This substantially reduces computational demands while retaining high accuracy, a notable advance for reasoning tasks that require both speed and precision. With this architecture, Dualformer opens new possibilities for applying AI to complex real-world scenarios across diverse fields.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post This AI Paper from Meta AI Unveils Dualformer: Controllable Fast and Slow Thinking with Randomized Reasoning Traces, Revolutionizing AI Decision-Making appeared first on MarkTechPost.