Using Large Language Models (LLMs) through different prompting strategies has become popular in recent years. However, many current methods offer very general frameworks that fail to address the particular difficulties of crafting effective prompts. Designing prompts for multi-turn interactions, which involve several exchanges between the user and the model, is a crucial problem that remains largely unresolved.

A recent study from the Center of Juris-Informatics, ROIS-DS, Tokyo, Japan, tries to close that gap by examining how hierarchical relationships between prompts can improve these interactions. It introduces the idea of “thought hierarchies,” which help refine and filter candidate answers. This makes the retrieval procedure more accurate, more structured, and easier to understand. The hierarchical organization of these thoughts is what allows the resulting algorithms to be both effective and simple to comprehend.

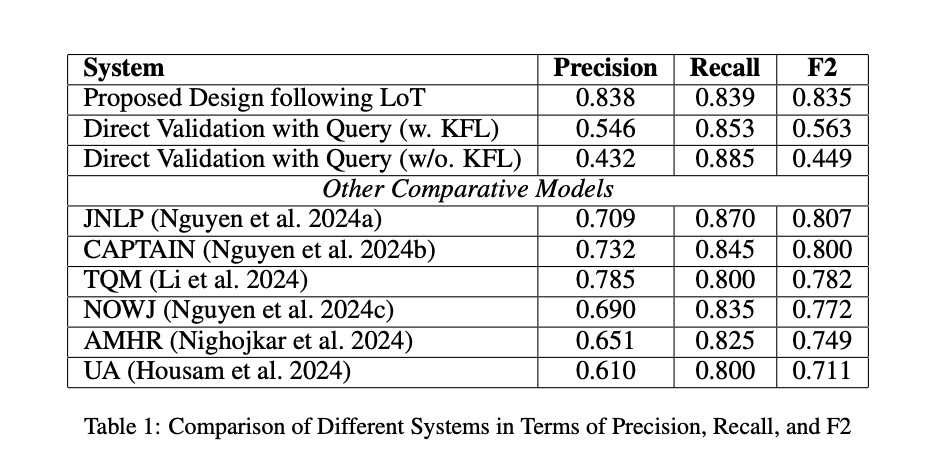

To filter and refine query responses, the study presents a technique called Layer-of-Thoughts Prompting (LoT), which is based on hierarchical constraints. By automating the steps needed to make retrieval more efficient, this method delivers a better organized and more explainable information retrieval process. What distinguishes LoT from other approaches is its use of constraint hierarchies, which methodically reduce the number of candidate answers according to the specific criteria of a query.
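
The narrowing effect of a constraint hierarchy can be illustrated with a minimal Python sketch. It assumes each layer can be reduced to a yes/no check on a candidate answer; in LoT those judgments would come from an LLM, whereas here they are plain predicate functions. The names `ConstraintLayer` and `filter_with_hierarchy`, and the toy documents, are hypothetical and not taken from the paper.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical stand-in for an LLM judgment: a yes/no predicate over a candidate.
Check = Callable[[str], bool]


@dataclass
class ConstraintLayer:
    """One tier of the hierarchy: a name plus the constraint it enforces."""
    name: str
    check: Check


def filter_with_hierarchy(candidates: list[str], layers: list[ConstraintLayer]) -> list[str]:
    """Apply layers in order; each layer only sees the survivors of the previous one."""
    remaining = list(candidates)
    for layer in layers:
        remaining = [c for c in remaining if layer.check(c)]
        if not remaining:  # stop early once no candidate satisfies the hierarchy
            break
    return remaining


# Toy candidates invented for illustration.
documents = [
    "Contract law: breach of sale agreement, 2019 ruling",
    "Criminal law: theft case, 2021 ruling",
    "Contract law: lease termination, 2022 ruling",
]

layers = [
    ConstraintLayer("domain", lambda d: "Contract law" in d),          # coarse constraint first
    ConstraintLayer("recency", lambda d: "2021" in d or "2022" in d),  # finer constraint next
]

print(filter_with_hierarchy(documents, layers))
# → ['Contract law: lease termination, 2022 ruling']
```

Ordering the layers from coarse to fine means each later, more specific constraint only examines the candidates that survived the earlier ones.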

LLMs can be prompted in various ways. Still, most approaches rely on generalized frameworks that do not adequately handle the complexity of multi-turn interactions, in which users and models exchange information multiple times before reaching a conclusion. These earlier methods lack the depth needed to keep prompts consistent and context-aware across several exchanges. LoT, by contrast, emphasizes the hierarchical structure of prompts and the relationships between them.

One important component of LoT’s effectiveness is its conceptual framework. The system arranges prompts and their answers into a layered, hierarchical structure, which yields retrieval algorithms that are both effective and easy to understand. Because the system can explain why particular information is retrieved and how it relates to the original question, the results are more accurate and easier to interpret.
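
To show how a layered structure can explain its retrievals, the following sketch records, for every retained candidate, the criterion it satisfied at each layer. This is an assumption about how such explanations might be represented, not the paper's implementation: `Layer`, `Retrieved`, and `retrieve_with_trace` are hypothetical names, and simple string predicates stand in for LLM judgments.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Layer:
    """Hypothetical tier: a human-readable criterion and a predicate standing in for an LLM check."""
    criterion: str
    check: Callable[[str], bool]


@dataclass
class Retrieved:
    """A surviving candidate plus the criteria it satisfied, in hierarchy order."""
    text: str
    reasons: list[str] = field(default_factory=list)


def retrieve_with_trace(candidates: list[str], layers: list[Layer]) -> list[Retrieved]:
    results = []
    for text in candidates:
        item = Retrieved(text)
        for layer in layers:
            if not layer.check(text):
                break  # rejected at this tier; deeper tiers are never consulted
            item.reasons.append(layer.criterion)
        else:
            results.append(item)  # passed every tier, so keep it with its full trace
    return results


layers = [
    Layer("mentions a lease", lambda t: "lease" in t),
    Layer("decided after 2020", lambda t: any(y in t for y in ("2021", "2022", "2023"))),
]

hits = retrieve_with_trace(["lease dispute, 2022", "lease dispute, 2018", "theft, 2022"], layers)
for hit in hits:
    print(hit.text, "<-", " > ".join(hit.reasons))
```

Because the trace is collected as each candidate moves down the hierarchy, the explanation is simply the path the candidate took through the layers.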

Building on the strengths of LLMs, LoT applies their capabilities to information retrieval tasks. By guiding the model through a more structured process of filtering responses and imposing constraints at different tiers, the approach retrieves relevant data with greater precision. Thought hierarchies also make the retrieval process more transparent, helping users understand how the model arrived at its final result.
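
The article does not say how constraints at different tiers are combined. One plausible reading, sketched below purely as an assumption, is that a tier can hold several criteria plus a rule for how many must hold: requiring all of them makes the tier strict, while requiring only one makes it lenient. `Tier`, `min_satisfied`, and `apply_tiers` are hypothetical names, and the predicates again stand in for LLM calls.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical stand-in for a single yes/no LLM judgment about a candidate.
Criterion = Callable[[str], bool]


@dataclass
class Tier:
    """One tier of constraints plus how many of them a candidate must satisfy."""
    criteria: list[Criterion]
    min_satisfied: int

    def passes(self, candidate: str) -> bool:
        satisfied = sum(1 for criterion in self.criteria if criterion(candidate))
        return satisfied >= self.min_satisfied


def apply_tiers(candidates: list[str], tiers: list[Tier]) -> list[str]:
    """Filter candidates tier by tier, in hierarchy order."""
    for tier in tiers:
        candidates = [c for c in candidates if tier.passes(c)]
    return candidates


# Strict tier: both criteria must hold.
strict = Tier([lambda c: "contract" in c, lambda c: "Tokyo" in c], min_satisfied=2)
# Lenient tier: any one of the three criteria is enough.
lenient = Tier(
    [lambda c: "2022" in c, lambda c: "appeal" in c, lambda c: "damages" in c],
    min_satisfied=1,
)

cases = [
    "contract dispute, Tokyo, 2022",
    "contract dispute, Osaka, 2022",
    "contract appeal, Tokyo, 2019",
    "contract dispute, Tokyo, 2018",
]
print(apply_tiers(cases, [strict, lenient]))
# → ['contract dispute, Tokyo, 2022', 'contract appeal, Tokyo, 2019']
```

Setting `min_satisfied` per tier lets precision be tuned layer by layer: raising it tightens a tier and discards more candidates, while lowering it keeps more candidates alive for the next tier.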

In conclusion, by providing a more sophisticated and effective way to handle multi-turn interactions, Layer-of-Thoughts Prompting is a significant advance in the field of LLMs. LoT overcomes the drawbacks of more generic methods by emphasizing the hierarchical structure of prompts and applying constraint-based filtering, which improves both the accuracy and the interpretability of information retrieval.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Layer-of-Thoughts Prompting (LoT): A Unique Approach that Uses Large Language Model (LLM) based Retrieval with Constraint Hierarchies appeared first on MarkTechPost.