Neural audio compression is an active problem in digital signal processing: the goal is to represent audio compactly while preserving quality. Traditional audio codecs, despite their widespread use, struggle to reach lower bitrates without compromising fidelity. Recent neural compression methods achieve lower bitrates, but they have difficulty capturing long-term audio structure. The main limitation is the high token granularity of existing audio tokenizers: every second of audio becomes many tokens, so extended audio turns into very long sequences that are computationally expensive for transformer architectures to process. The problem is most evident for complex signals such as speech and music, which contain multiple levels of abstraction, from local acoustic features to higher-level semantic structure. Representing these hierarchical structures while keeping computation manageable remains a fundamental challenge in audio processing systems.

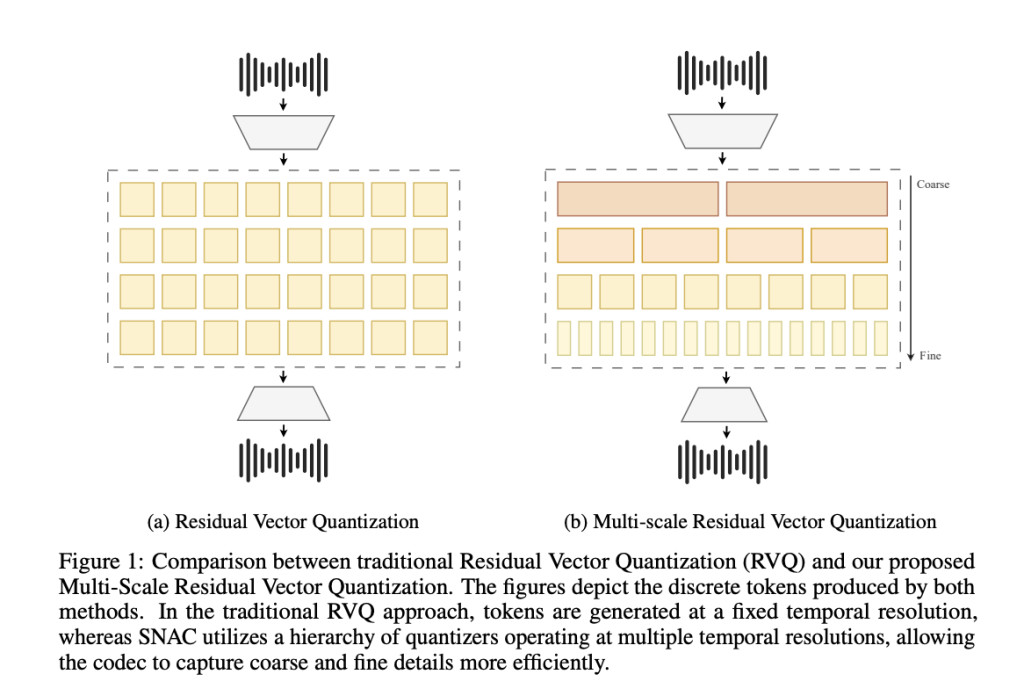

Prior attempts to address audio compression challenges have centered on two main approaches: neural audio codecs and multi-scale modeling techniques. Vector quantization (VQ) emerged as a fundamental tool, mapping high-dimensional audio data to discrete code vectors through VQ-VAE models. However, VQ becomes inefficient at higher bitrates because of codebook size constraints: a single codebook needs 2^b entries to spend b bits per frame, so its size grows exponentially with the bitrate. This led to the development of Residual Vector Quantization (RVQ), a multi-stage process in which each stage quantizes the residual error left by the previous one. In parallel, researchers explored multi-scale models with hierarchical decoders and separate VQ-VAE models at different temporal resolutions to capture long-term musical structures, though these approaches still struggled to balance compression efficiency with structural representation.
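To make the multi-stage idea concrete, here is a minimal NumPy sketch of plain RVQ encoding and decoding. It is an illustration only, not code from any of the codecs mentioned: the function names, the random (untrained) codebooks, and the sizes are all assumptions chosen for the example.

```python
import numpy as np

def nearest_code(x, codebook):
    """For each row of x, return the index of the closest codebook row."""
    # Squared Euclidean distance between every frame and every code vector.
    dists = ((x[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=-1)
    return dists.argmin(axis=1)

def rvq_encode(x, codebooks):
    """Quantize x (frames, dim) with a cascade of codebooks.

    Each stage quantizes whatever error the previous stages left behind.
    """
    residual = x.copy()
    indices = []
    for cb in codebooks:
        idx = nearest_code(residual, cb)
        indices.append(idx)
        residual = residual - cb[idx]  # pass the remaining error to the next stage
    return indices

def rvq_decode(indices, codebooks):
    """Reconstruct frames by summing the selected code vector from every stage."""
    return sum(cb[idx] for idx, cb in zip(indices, codebooks))

# Hypothetical setup: 50 frames of 8-dim latents, 4 stages of 16 codes each.
rng = np.random.default_rng(0)
frames = rng.normal(size=(50, 8))
codebooks = [rng.normal(size=(16, 8)) * 0.5 ** i for i in range(4)]

codes = rvq_encode(frames, codebooks)
recon = rvq_decode(codes, codebooks)
```

With four stages of 16 codes, each frame costs 4 × 4 = 16 bits, whereas a single codebook spending the same 16 bits would need 65,536 entries. In a real codec the codebooks are learned rather than random.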

Researchers from Papla Media and ETH Zurich present SNAC (Multi-Scale Neural Audio Codec), which extends the residual quantization approach with quantizers that operate at multiple temporal resolutions. The method builds on the RVQGAN framework and adds noise blocks, depthwise convolutions, and local windowed attention. Together, these changes aim at more efficient compression while maintaining high audio quality across different temporal scales.

SNAC’s architecture extends RVQGAN with a multi-scale quantization scheme. The core structure is an encoder-decoder network with cascaded Residual Vector Quantization layers in the bottleneck. At each quantization stage, the system downsamples the residual using average pooling, performs a codebook lookup, and upsamples the result via nearest-neighbor interpolation. The architecture adds three further elements: noise blocks that inject input-dependent Gaussian noise for enhanced expressiveness, depthwise convolutions for efficient computation and training stability, and local windowed attention layers at the lowest temporal resolution to capture contextual relationships.
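The downsample, lookup, upsample loop described above can be sketched in a few lines of NumPy. This is a simplified illustration under stated assumptions, not the authors' implementation: the strides, codebook sizes, and function names are hypothetical, the codebooks are random rather than learned, and the number of frames is assumed to be divisible by every stride.

```python
import numpy as np

def avg_pool(x, stride):
    """Average-pool x (frames, dim) over non-overlapping windows of `stride` frames."""
    frames, dim = x.shape
    return x.reshape(frames // stride, stride, dim).mean(axis=1)

def upsample_nearest(x, stride):
    """Nearest-neighbor upsampling: repeat every frame `stride` times."""
    return np.repeat(x, stride, axis=0)

def nearest_code(x, codebook):
    """For each row of x, return the index of the closest codebook row."""
    dists = ((x[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=-1)
    return dists.argmin(axis=1)

def multiscale_rvq(x, codebooks, strides):
    """Residual quantization where each stage runs at its own temporal resolution."""
    residual = x.copy()
    quantized = np.zeros_like(x)
    tokens = []
    for cb, stride in zip(codebooks, strides):
        coarse = avg_pool(residual, stride)             # downsample the residual
        idx = nearest_code(coarse, cb)                  # codebook lookup
        approx = upsample_nearest(cb[idx], stride)      # back to full resolution
        quantized += approx
        residual -= approx                              # error left for the next stage
        tokens.append(idx)
    return tokens, quantized

# Hypothetical setup: 64 latent frames, coarsest stage first.
rng = np.random.default_rng(0)
latents = rng.normal(size=(64, 8))
strides = [8, 4, 2, 1]
codebooks = [rng.normal(size=(32, 8)) for _ in strides]

tokens, quantized = multiscale_rvq(latents, codebooks, strides)
token_counts = [len(t) for t in tokens]  # [8, 16, 32, 64]
```

The coarse stages emit far fewer tokens than the fine ones (8 versus 64 here), which is where the savings in sequence length come from compared with running every quantizer at the full frame rate.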

Evaluations of SNAC show improvements on both speech and music compression tasks. In music compression, SNAC outperformed competing codecs such as Encodec and DAC at comparable bitrates, and even matched the quality of systems operating at twice its bitrate. The 32 kHz SNAC model performed similarly to its 44 kHz counterpart, suggesting that the lower sampling rate gives up little perceived quality. In speech compression, SNAC maintained near-reference audio quality even at bitrates below 1 kbit/s. These results were validated through both objective metrics and MUSHRA listening tests conducted with audio experts, supporting SNAC’s suitability for bandwidth-constrained applications.
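To see how sub-1 kbit/s bitrates can arise from multi-scale quantization, the following sketch computes the bitrate of a token stream as the sum over quantizer levels of token rate times bits per token. The token rates and codebook size below are hypothetical values picked for easy arithmetic, not SNAC's published configuration.

```python
import math

def bitrate_bps(token_rates_hz, codebook_size):
    """Bits per second for a set of quantizer levels sharing one codebook size.

    Each token carries log2(codebook_size) bits; each level emits
    `rate` tokens per second.
    """
    bits_per_token = math.log2(codebook_size)
    return sum(rate * bits_per_token for rate in token_rates_hz)

# Hypothetical multi-scale setup: three levels at 10, 20 and 40 tokens/s,
# each with a 4096-entry codebook (12 bits per token).
multi_scale = bitrate_bps([10, 20, 40], 4096)   # 840.0 bits/s

# Same three codebooks, but all running at the finest rate (single-scale RVQ).
single_scale = bitrate_bps([40, 40, 40], 4096)  # 1440.0 bits/s
```

Under these assumed numbers, moving two of the three quantizers to coarser rates cuts the bitrate from 1.44 kbit/s to 0.84 kbit/s while keeping the same number of codebooks.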

SNAC represents a significant advancement in neural audio compression through its innovative multi-scale approach to Residual Vector Quantization. By operating at multiple temporal resolutions, the system effectively adapts to audio signals’ inherent structures, achieving superior compression efficiency. Comprehensive evaluations through both objective metrics and subjective testing confirm SNAC’s ability to deliver higher audio quality at lower bitrates compared to existing state-of-the-art codecs.

The post Multi-Scale Neural Audio Codec (SNAC): An Extension of Residual Vector Quantization that Uses Quantizers Operating at Multiple Temporal Resolutions appeared first on MarkTechPost.
