In Part 1 of this series, we explored best practices for creating accurate and reliable agents using Amazon Bedrock Agents. Amazon Bedrock Agents help you accelerate generative AI application development by orchestrating multistep tasks. Agents use the reasoning capability of foundation models (FMs) to create a plan that decomposes the problem into multiple steps. The model is augmented with the developer-provided instruction to create an orchestration plan and then carry out the plan. The agent can use company APIs and external knowledge through Retrieval Augmented Generation (RAG).

In this second part, we dive into the architectural considerations and development lifecycle practices that can help you build robust, scalable, and secure intelligent agents. Whether you are just starting to explore conversational AI or looking to optimize your existing agent deployments, this guide offers practical tips and longer-term guidance to help you achieve your goals.

Enable comprehensive logging and observability

From the outset of your agent development journey, you should implement thorough logging and observability practices. This is crucial for debugging, auditing, and troubleshooting your agents. The first step to achieve comprehensive logging is to enable Amazon Bedrock model invocation logging to capture prompts and responses securely in your account.
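As a minimal sketch of that first step, the following builds the logging configuration payload that the `put_model_invocation_logging_configuration` call of the boto3 `bedrock` client accepts. The log group name and role ARN are hypothetical placeholders, and the choice to deliver only text data is an assumption for illustration.

```python
def build_logging_config(log_group_name: str, role_arn: str) -> dict:
    """Build a loggingConfig payload that sends text prompts and responses to CloudWatch Logs."""
    return {
        "cloudWatchConfig": {
            "logGroupName": log_group_name,  # hypothetical log group
            "roleArn": role_arn,             # hypothetical role allowed to write logs
        },
        "textDataDeliveryEnabled": True,
        "imageDataDeliveryEnabled": False,
        "embeddingDataDeliveryEnabled": False,
    }


logging_config = build_logging_config(
    "/hypothetical/bedrock/model-invocations",
    "arn:aws:iam::111122223333:role/HypotheticalBedrockLoggingRole",
)

# To apply the configuration (requires boto3 and AWS credentials):
#   import boto3
#   boto3.client("bedrock").put_model_invocation_logging_configuration(
#       loggingConfig=logging_config
#   )
```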

Amazon Bedrock Agents also provides you with traces, a detailed overview of the steps being orchestrated by the agents, the underlying prompts invoking the FM, the references being returned from the knowledge bases, and code being generated by the agent. Trace events are streamed in real time, which allows you to customize UX cues to keep the end-user informed about the progress of their request. You can log your agent’s traces and use them to track and troubleshoot your agents.
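The following sketch shows one way to separate answer text from trace events while reading the stream returned by `invoke_agent` with `enableTrace=True`. It assumes each streamed event is a dictionary carrying either a `chunk` (with a `bytes` payload) or a `trace` entry; the helper name `consume_agent_stream` is hypothetical, and the sample events below are hand-made stand-ins rather than real agent output.

```python
from typing import Iterable, List, Tuple


def consume_agent_stream(events: Iterable[dict]) -> Tuple[str, List[str]]:
    """Collect the answer text and the names of the trace steps seen in the stream."""
    answer_bytes = []
    trace_steps = []
    for event in events:
        if "chunk" in event:
            answer_bytes.append(event["chunk"]["bytes"])
        elif "trace" in event:
            # The inner "trace" dict is keyed by the step type, e.g. "orchestrationTrace".
            trace_steps.extend(event["trace"].get("trace", {}).keys())
    # Decode once at the end so multi-byte characters split across chunks stay intact.
    return b"".join(answer_bytes).decode("utf-8"), trace_steps


# Hand-made events that mimic the assumed stream shape.
sample_events = [
    {"trace": {"trace": {"orchestrationTrace": {}}}},
    {"chunk": {"bytes": b"Hello, "}},
    {"chunk": {"bytes": b"world"}},
]
answer, steps = consume_agent_stream(sample_events)

# With a real agent (requires boto3 and AWS credentials):
#   response = boto3.client("bedrock-agent-runtime").invoke_agent(
#       agentId=..., agentAliasId=..., sessionId=..., inputText=..., enableTrace=True)
#   answer, steps = consume_agent_stream(response["completion"])
```

In a user-facing application, the `trace` branch is where you could emit a progress cue instead of only recording the step name.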

When moving agent applications to production, it’s a best practice to set up a monitoring workflow to continuously analyze your logs. You can do so by either creating a custom solution or using an open source solution such as Bedrock-ICYM.

Use infrastructure as code

Just as you would with any other software development project, you should use infrastructure as code (IaC) frameworks to facilitate iterative and reliable deployment. This lets you create repeatable and production-ready agents that can be readily reproduced, tested, and monitored. Amazon Bedrock Agents allows you to write IaC code with AWS CloudFormation, the AWS Cloud Development Kit (AWS CDK), or Terraform. We also recommend that you get started using our Agent Blueprints construct. We provide blueprint templates of the most common capabilities of Amazon Bedrock Agents, which can be deployed and updated with a single AWS CDK command.
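As an illustration of the CloudFormation route, the sketch below generates a minimal template containing a single `AWS::Bedrock::Agent` resource. The logical ID, agent name, instruction, and role ARN are hypothetical, and a production template would typically also declare action groups, aliases, and the IAM role itself.

```python
import json


def agent_template(agent_name: str, foundation_model: str,
                   instruction: str, role_arn: str) -> dict:
    """Return a minimal CloudFormation template that declares one Bedrock agent."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Resources": {
            "HrAgent": {  # hypothetical logical ID
                "Type": "AWS::Bedrock::Agent",
                "Properties": {
                    "AgentName": agent_name,
                    "FoundationModel": foundation_model,
                    "Instruction": instruction,
                    "AgentResourceRoleArn": role_arn,
                    "AutoPrepare": True,
                },
            }
        },
    }


template = agent_template(
    agent_name="hypothetical-hr-assistant",
    foundation_model="anthropic.claude-3-haiku-20240307-v1:0",
    instruction="You are an HR assistant that helps employees manage vacation requests.",
    role_arn="arn:aws:iam::111122223333:role/HypotheticalAgentRole",
)
template_json = json.dumps(template, indent=2)  # write this to a .json template file
```

Keeping the template in version control lets you review, test, and redeploy the same agent definition across environments.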

When creating agents that use action groups, you can specify your function definitions as a JSON object to the agent or provide an API schema in the OpenAPI schema format. If you already have an OpenAPI schema for your application, the best practice is to start with it. Make sure the functions have proper natural language descriptions, because your agent will use them to understand when to use each function. If you’re starting with no existing schema, the simplest way to provide tool metadata for your agent is to use simple JSON function definitions. Either way, you can use the Amazon Bedrock console to quickly create a default AWS Lambda function to get started implementing your actions or tools.
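To make the function-definition route concrete, here is a minimal sketch of a function schema together with a Lambda handler that serves it. The function name `get_vacation_balance`, its parameter, and the hard-coded balance are hypothetical; the event and response shapes follow the format Amazon Bedrock Agents uses for action groups defined with function details, as far as we understand it.

```python
# Function definitions passed as the action group's functionSchema.
FUNCTION_SCHEMA = {
    "functions": [
        {
            "name": "get_vacation_balance",  # hypothetical function
            "description": "Return the number of vacation days an employee has left.",
            "parameters": {
                "employee_id": {
                    "type": "string",
                    "description": "Unique identifier of the employee.",
                    "required": True,
                }
            },
        }
    ]
}


def lambda_handler(event, context):
    """Handle an action group invocation and return a text response to the agent."""
    # Parameters arrive as a list of {"name", "type", "value"} entries.
    params = {p["name"]: p["value"] for p in event.get("parameters", [])}
    if event["function"] == "get_vacation_balance":
        # Placeholder logic; a real handler would query an HR system here.
        body = f"Employee {params['employee_id']} has 12 vacation days left."
    else:
        body = f"Unknown function: {event['function']}"
    return {
        "messageVersion": "1.0",
        "response": {
            "actionGroup": event["actionGroup"],
            "function": event["function"],
            "functionResponse": {"responseBody": {"TEXT": {"body": body}}},
        },
    }
```

Note how the natural language descriptions sit next to each function and parameter: these are the hints the agent relies on when deciding which function to call.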

After you start to scale the development of agents, you should consider the reusability of the agent’s components. Using IaC allows you to predefine guardrails with Amazon Bedrock Guardrails, knowledge bases with Amazon Bedrock Knowledge Bases, and action groups, and then reuse them across multiple agents.

Building agents that run tasks requires function definitions and Lambda functions. Another best practice is to use generative AI to accelerate the development and maintenance of this code. You can do so directly with the invoke model functionality in Amazon Bedrock, with Amazon Q Developer, or even by creating an AWS PartyRock application that scaffolds your Lambda function based on your action group metadata. You can also use generative AI to directly generate the IaC required for creating your agents with function definitions and Lambda connections. Regardless of the approach you select, creating a test pipeline that validates and runs the IaC will help you optimize your agent solutions.

Use SessionState for additional agent context

You can use the sessionState parameter to provide additional context to your agent. Pass information that should be available only to the Lambda function in your action groups as sessionAttributes, and information that should be available to your prompt as promptSessionAttributes. For example, if you want to pass a user authentication token for your action to use, it’s best placed in sessionAttributes. If you want to pass information that the large language model (LLM) needs to reason about, such as the current date and timestamp to define relative dates, it’s best placed in promptSessionAttributes. This lets your agent use the reasoning capabilities of the underlying LLM to infer things like the number of days before your next payment due date or how many hours it has been since you placed your order.
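A minimal sketch of such a session state follows. In the `invoke_agent` request, these two kinds of context map to the `sessionAttributes` and `promptSessionAttributes` keys of `sessionState`; the attribute names `authToken` and `currentDateTime` and the helper itself are hypothetical.

```python
from datetime import datetime, timezone


def build_session_state(auth_token: str, now: datetime) -> dict:
    """Split context between Lambda-only attributes and prompt-visible attributes."""
    return {
        # Passed to the action group Lambda function for its own use.
        "sessionAttributes": {"authToken": auth_token},
        # Made available to the prompt so the model can resolve relative dates.
        "promptSessionAttributes": {"currentDateTime": now.isoformat()},
    }


session_state = build_session_state(
    auth_token="hypothetical-token",
    now=datetime(2024, 1, 15, 9, 30, tzinfo=timezone.utc),
)

# Usage (requires boto3 and AWS credentials):
#   boto3.client("bedrock-agent-runtime").invoke_agent(
#       agentId=..., agentAliasId=..., sessionId=..., inputText=...,
#       sessionState=session_state)
```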

Optimize model selection for cost and performance

A key part of the agent building process is to select the underlying FM for your agent (or for each sub-agent). Experiment with available FMs to select the best one for your application based on cost, latency, and accuracy requirements. Implement automated testing pipelines to collect evaluation metrics, enabling data-driven decisions on model selection. This approach allows you to use faster, cheaper models like Anthropic’s Claude 3 Haiku on Amazon Bedrock for simple agents, and more complex applications can use more advanced models like Anthropic’s Claude 3.5 Sonnet or Anthropic’s Claude 3 Opus.
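One simple way to turn collected evaluation metrics into a model choice is to pick the cheapest candidate that meets your accuracy and latency thresholds. The sketch below assumes that policy; the model names and all metric values are made-up placeholders, not measurements of any real model.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class EvalResult:
    model_id: str
    accuracy: float              # fraction of test cases passed
    p50_latency_s: float         # median response latency in seconds
    cost_per_1k_requests: float  # estimated cost in USD


def pick_model(results: List[EvalResult], min_accuracy: float,
               max_latency_s: float) -> Optional[EvalResult]:
    """Return the cheapest model that satisfies both thresholds, or None."""
    eligible = [
        r for r in results
        if r.accuracy >= min_accuracy and r.p50_latency_s <= max_latency_s
    ]
    return min(eligible, key=lambda r: r.cost_per_1k_requests, default=None)


# Placeholder results, as an automated test pipeline might produce them.
results = [
    EvalResult("model-small", accuracy=0.86, p50_latency_s=1.2, cost_per_1k_requests=0.5),
    EvalResult("model-medium", accuracy=0.93, p50_latency_s=2.5, cost_per_1k_requests=3.0),
    EvalResult("model-large", accuracy=0.96, p50_latency_s=6.0, cost_per_1k_requests=15.0),
]
choice = pick_model(results, min_accuracy=0.9, max_latency_s=4.0)
```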

Implement robust testing frameworks

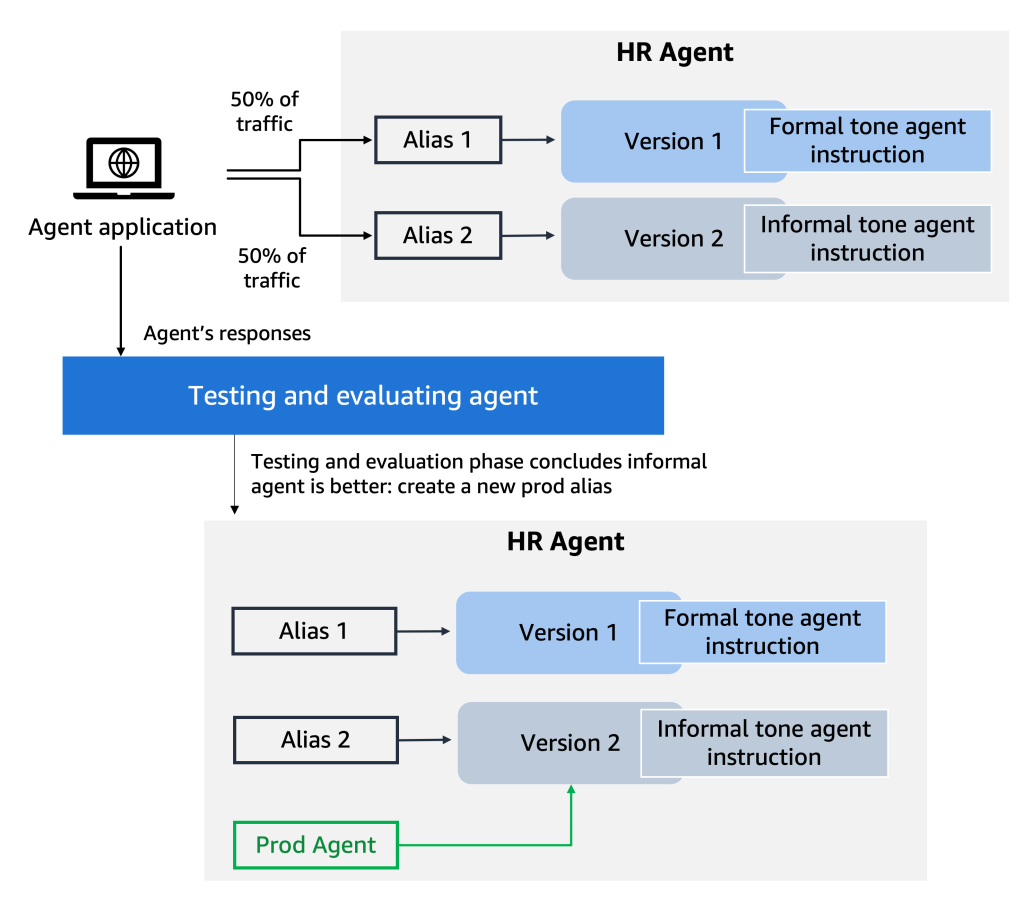

Automating the evaluation of your agent, or any generative AI-powered system, can accelerate the development process and make sure you provide your customers with the best possible solution. You should evaluate on multiple dimensions, including cost, latency, and accuracy of your agents. Use frameworks like Agent Evaluation to assess agent behavior against predefined criteria. By using the Amazon Bedrock agent versioning and alias features, you can unlock A/B testing as part of your deployment stages. You should define different aspects of agent behavior, such as formal or informal HR assistant tone, that can be tested with a subset of your user group. You can then make different agent versions available for each group during initial deployments and evaluate the agent behavior for each group. Amazon Bedrock Agents has built-in versioning capabilities to help you with this key part of testing. The following figure shows how the HR agent can be updated after a testing and evaluation phase to create a new alias pointing to the selected version of the agent for the model invocation.
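For the A/B testing step, each user needs to be routed consistently to the same agent alias. The sketch below assumes a hash-based split, which is one common approach rather than a built-in Amazon Bedrock feature; the alias IDs and the 10 percent treatment share are hypothetical.

```python
import hashlib


def assign_alias(user_id: str, aliases: dict, treatment_share: float = 0.1) -> str:
    """Deterministically map a user to the control or treatment agent alias."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    # Map the hash to one of 10,000 buckets so the same user always lands in the same group.
    bucket = int.from_bytes(digest[:8], "big") % 10_000
    group = "treatment" if bucket < treatment_share * 10_000 else "control"
    return aliases[group]


# Hypothetical alias IDs, e.g. one per HR assistant tone.
ALIASES = {"control": "ALIASFORMAL", "treatment": "ALIASINFORMAL"}
alias_id = assign_alias("employee-123", ALIASES)

# The chosen value would then be passed as agentAliasId when invoking the agent.
```

Because the assignment depends only on the user ID, you can later join evaluation results to groups without storing the assignment separately.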

Use LLMs for test case generation

You can use LLMs to generate test cases based on expected use cases for your agent. As a best practice, you should select a different LLM to generate data than the one that is powering your agent. This approach can significantly accelerate the building of comprehensive test suites, providing thorough coverage of potential scenarios. For example, you could use the following prompt to create test cases for an HR assistant agent that helps employees book holidays:
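As an illustration only, a prompt of this kind could be assembled as in the sketch below. The wording is our assumption and not the authors' exact prompt, and the helper name is hypothetical.

```python
def build_test_generation_prompt(agent_description: str, num_cases: int = 5) -> str:
    """Assemble a prompt asking a model to propose test conversations for an agent."""
    return (
        "You are helping to test a conversational agent.\n"
        f"Agent description: {agent_description}\n"
        f"Generate {num_cases} test cases. For each test case, provide:\n"
        "1. The user request, phrased the way a real employee would write it.\n"
        "2. The expected agent behavior, including any action it should take.\n"
        "Cover common requests as well as edge cases, such as invalid dates "
        "or requests that exceed the available balance."
    )


prompt = build_test_generation_prompt(
    "An HR assistant that helps employees book holidays.", num_cases=5
)

# Send the prompt to a model other than the one powering the agent, for example
# with the Converse API (requires boto3 and AWS credentials):
#   boto3.client("bedrock-runtime").converse(
#       modelId=..., messages=[{"role": "user", "content": [{"text": prompt}]}])
```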

Design robust confirmation and security mechanisms

Implement robust confirmation mechanisms for critical actions in your agent’s workflow. Clearly state in your instructions that the agent should ask for user confirmation before running certain functions, especially those that modify data or perform sensitive operations. This step helps move beyond proof of concept or prototype stages, verifying that your agent operates reliably in production environments. For instance, the following instruction tells your agent to confirm that a vacation request action should be run before updating the database for the user:

You can also use the requireConfirmation field for function schema definition or the x-requireConfirmation field for API schema definition during the creation of a new action to enable the Amazon Bedrock Agents built-in functionality for user confirmation request before invoking an action in an action group.
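Both mechanisms can be sketched as follows: an instruction fragment that asks the agent to confirm before acting, and a helper that sets `requireConfirmation` to `ENABLED` on a function definition. The instruction wording, the function `submit_vacation_request`, and the helper name are hypothetical examples.

```python
# Hypothetical instruction fragment to include in the agent's instructions.
CONFIRMATION_INSTRUCTION = (
    "Before submitting or changing a vacation request, summarize the dates and "
    "the number of days, and ask the user to confirm. Only update the database "
    "after the user explicitly confirms."
)


def with_confirmation(function_def: dict) -> dict:
    """Return a copy of a function definition with built-in user confirmation enabled."""
    return {**function_def, "requireConfirmation": "ENABLED"}


submit_request = with_confirmation({
    "name": "submit_vacation_request",  # hypothetical function
    "description": "Create a vacation request for the employee in the HR database.",
    "parameters": {
        "start_date": {"type": "string", "description": "First day off (YYYY-MM-DD).", "required": True},
        "end_date": {"type": "string", "description": "Last day off (YYYY-MM-DD).", "required": True},
    },
})
```

The instruction-based approach depends on the model following the prompt, whereas the schema field enables the service's built-in confirmation step, so the two can complement each other for sensitive operations.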

Implement flexible authorization and encryption

You should provide customer managed keys to encrypt your agent’s resources, and confirm that your AWS Identity and Access Management (IAM) permissions follow the least privilege approach, limiting your agent to only have access to required resources and actions. When implementing action groups, take advantage of the sessionAttributes parameter of your sessionState to provide information about your user roles and permissions so that your action can implement fine-grained permissions (see the following sample code). Another best practice is to use the knowledgeBaseConfigurations parameter of the sessionState to provide extra configurations to your knowledge base, such as the user group defining the documents that a user should have access to through knowledge base metadata filtering.
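The sketch below shows one possible shape for such a session state, plus a role check that an action group Lambda function could apply. The attribute name `userRole`, the metadata key `user_group`, the knowledge base ID, and both helper names are hypothetical; the filter assumes your knowledge base documents carry a matching metadata attribute.

```python
def build_authorized_session_state(user_role: str, user_group: str,
                                   knowledge_base_id: str) -> dict:
    """Pass the user's role to actions and restrict retrieval to the user's group."""
    return {
        "sessionAttributes": {"userRole": user_role},
        "knowledgeBaseConfigurations": [
            {
                "knowledgeBaseId": knowledge_base_id,
                "retrievalConfiguration": {
                    "vectorSearchConfiguration": {
                        # Only retrieve documents tagged with the user's group.
                        "filter": {"equals": {"key": "user_group", "value": user_group}}
                    }
                },
            }
        ],
    }


def is_allowed(event: dict, required_role: str) -> bool:
    """Check inside the Lambda function whether the caller holds the required role."""
    return event.get("sessionAttributes", {}).get("userRole") == required_role


state = build_authorized_session_state("manager", "hr-managers", "KBHYPOTHETICAL")
```

Because the role travels in `sessionAttributes`, it must be set by your application after authenticating the user, never taken from the user's free-text input.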

Integrate responsible AI practices

When developing generative AI applications, you should apply responsible AI practices to create systems in an ethical, transparent, and accountable manner. Amazon Bedrock features help you develop your responsible AI practices in a scalable manner. When creating agents, you should implement Amazon Bedrock Guardrails to avoid sensitive topics, filter user input and agent output from harmful content, and redact sensitive information to protect user privacy. You can create organization-level guardrails that can be reused across multiple generative AI applications, thereby preserving consistent responsible AI practices. After you create a guardrail, you can associate it with your agent using the Amazon Bedrock Agents built-in guardrails connection (see the following sample code).
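A minimal sketch of that association follows. It builds the arguments for the `create_agent` call of the boto3 `bedrock-agent` client, including its `guardrailConfiguration` parameter; the agent name, role ARN, guardrail identifier, and version are hypothetical placeholders.

```python
def build_agent_request(agent_name: str, foundation_model: str, instruction: str,
                        role_arn: str, guardrail_id: str, guardrail_version: str) -> dict:
    """Build create_agent arguments that attach an existing guardrail to the agent."""
    return {
        "agentName": agent_name,
        "foundationModel": foundation_model,
        "instruction": instruction,
        "agentResourceRoleArn": role_arn,
        "guardrailConfiguration": {
            "guardrailIdentifier": guardrail_id,
            "guardrailVersion": guardrail_version,
        },
    }


agent_request = build_agent_request(
    agent_name="hypothetical-hr-assistant",
    foundation_model="anthropic.claude-3-haiku-20240307-v1:0",
    instruction="You are an HR assistant that helps employees manage vacation requests.",
    role_arn="arn:aws:iam::111122223333:role/HypotheticalAgentRole",
    guardrail_id="hypothetical-guardrail-id",
    guardrail_version="1",
)

# To create the agent (requires boto3 and AWS credentials):
#   boto3.client("bedrock-agent").create_agent(**agent_request)
```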

Build a reusable actions catalog and scale gradually

After the successful deployment of your first agent, you can plan to reuse common functionalities, such as action groups, knowledge bases, and guardrails, for other applications. Amazon Bedrock Agents supports the creation of agents manually using the AWS Management Console, using code with the SDKs available for the agent API, or using IaC with CloudFormation templates, the AWS CDK, or Terraform templates. To reuse functionality, the best practice is to create and deploy these components using IaC and reuse them across applications. The following figure shows an example of the reusability of a utilities action group across two agents: an HR assistant and a banking assistant.
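The idea of a shared utilities action group can be sketched as a single definition that is attached to several agents. The example below builds arguments for the `create_agent_action_group` call of the boto3 `bedrock-agent` client; the function `get_current_date`, the agent IDs, and the Lambda ARN are hypothetical.

```python
# One shared definition, kept in a common module or IaC construct.
UTILITIES_ACTION_GROUP = {
    "actionGroupName": "utilities",
    "description": "General-purpose helpers shared by several agents.",
    "functionSchema": {
        "functions": [
            {
                "name": "get_current_date",  # hypothetical function
                "description": "Return today's date in ISO 8601 format.",
                "parameters": {},
            }
        ]
    },
}


def action_group_request(agent_id: str, shared: dict, lambda_arn: str) -> dict:
    """Build create_agent_action_group arguments that attach a shared action group."""
    return {
        "agentId": agent_id,
        "agentVersion": "DRAFT",  # action groups are added to the working draft
        "actionGroupExecutor": {"lambda": lambda_arn},
        **shared,
    }


LAMBDA_ARN = "arn:aws:lambda:us-east-1:111122223333:function:hypothetical-utilities"
requests_by_agent = {
    agent_id: action_group_request(agent_id, UTILITIES_ACTION_GROUP, LAMBDA_ARN)
    for agent_id in ("HRAGENTID", "BANKAGENTID")  # hypothetical agent IDs
}

# For each request (requires boto3 and AWS credentials):
#   boto3.client("bedrock-agent").create_agent_action_group(**request)
```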

Follow a crawl-walk-run methodology when scaling agent usage

The final best practice that we would like to highlight is to follow the crawl-walk-run methodology. Start with an internal application (crawl), follow with applications made available to a smaller, controlled set of external users (walk), and finally scale your applications to all customers (run), eventually using multi-agent collaboration. This approach helps you build reliable agents that support mission-critical business operations, while minimizing risks associated with the rollout of new technology. The following figure illustrates this process.

Conclusion

By following these architectural and development lifecycle best practices, you’ll be well-equipped to create robust, scalable, and secure agents that can effectively serve your users and integrate seamlessly with your existing systems.

For examples to get started, check out the Amazon Bedrock samples repository. To learn more about Amazon Bedrock Agents, get started with the Amazon Bedrock Workshop and the standalone Amazon Bedrock Agents Workshop, which provides a deeper dive. Additionally, check out the service introduction video from AWS re:Invent 2023.

About the Authors

Maira Ladeira Tanke is a Senior Generative AI Data Scientist at AWS. With a background in machine learning, she has over 10 years of experience architecting and building AI applications with customers across industries. As a technical lead, she helps customers accelerate their achievement of business value through generative AI solutions on Amazon Bedrock. In her free time, Maira enjoys traveling, playing with her cat, and spending time with her family someplace warm.

Mark Roy is a Principal Machine Learning Architect for AWS, helping customers design and build generative AI solutions. His focus since early 2023 has been leading solution architecture efforts for the launch of Amazon Bedrock, the flagship generative AI offering from AWS for builders. Mark’s work covers a wide range of use cases, with a primary interest in generative AI, agents, and scaling ML across the enterprise. He has helped companies in insurance, financial services, media and entertainment, healthcare, utilities, and manufacturing. Prior to joining AWS, Mark was an architect, developer, and technology leader for over 25 years, including 19 years in financial services. Mark holds six AWS certifications, including the ML Specialty Certification.

Navneet Sabbineni is a Software Development Manager at AWS Bedrock. With over 9 years of industry experience as a software developer and manager, he has worked on building and maintaining scalable distributed services for AWS, including generative AI services like Amazon Bedrock Agents and conversational AI services like Amazon Lex. Outside of work, he enjoys traveling and exploring the Pacific Northwest with his family and friends.

Monica Sunkara is a Senior Applied Scientist at AWS, where she works on Amazon Bedrock Agents. With over 10 years of industry experience, including 6 years at AWS, Monica has contributed to various AI and ML initiatives such as Alexa Speech Recognition, Amazon Transcribe, and Amazon Lex ASR. Her work spans speech recognition, natural language processing, and large language models. Recently, she worked on adding function calling capabilities to Amazon Titan text models. Monica holds a degree from Cornell University, where she conducted research on object localization under the supervision of Prof. Andrew Gordon Wilson before joining Amazon in 2018.
