Large language models (LLMs) have demonstrated strong reasoning capabilities, yet they still hallucinate and often fail to reason faithfully. These problems stem largely from gaps in the models' knowledge, which lead to factual errors on complex tasks. Knowledge graphs (KGs) are increasingly used to strengthen LLM reasoning, but current KG-enhanced approaches fall short: retrieval-based methods struggle to retrieve accurate knowledge, while agent-based methods struggle to reason efficiently at scale.

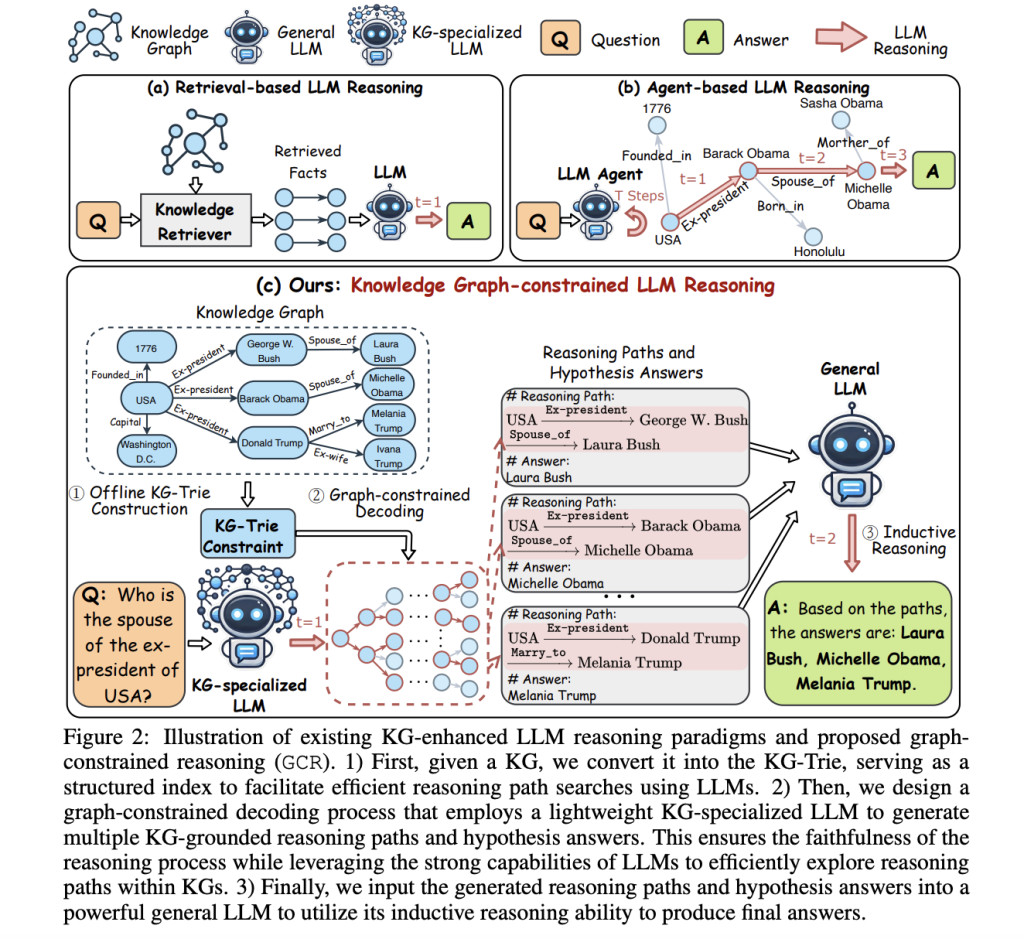

Researchers from Monash University, Nanjing University of Science and Technology, and Griffith University propose a novel framework called Graph-Constrained Reasoning (GCR). This framework aims to bridge the gap between structured knowledge in KGs and the unstructured reasoning of LLMs, ensuring faithful, KG-grounded reasoning. GCR introduces a trie-based index named KG-Trie to integrate KG structures directly into the LLM decoding process. This integration enables LLMs to generate reasoning paths grounded in KGs, minimizing hallucinations. GCR also employs two models: a lightweight KG-specialized LLM for efficient reasoning on graphs and a powerful general LLM for inductive reasoning over multiple generated paths.
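To make the idea of a KG-Trie concrete, here is a minimal sketch under stated assumptions: paths are enumerated by breadth-first search up to a fixed number of hops from the question entity, each path is serialized between hypothetical `<PATH>`/`</PATH>` delimiters, and whole entity/relation names stand in for the LLM tokenizer's tokens. The toy KG and the function names (`enumerate_paths`, `build_kg_trie`, `allowed_next`) are illustrative only, not the authors' code.

```python
from collections import deque

# Toy KG of (head, relation, tail) triples; hypothetical data for illustration only.
TRIPLES = [
    ("Barack_Obama", "born_in", "Honolulu"),
    ("Honolulu", "located_in", "Hawaii"),
    ("Barack_Obama", "spouse", "Michelle_Obama"),
    ("Michelle_Obama", "born_in", "Chicago"),
]
PATH_OPEN, PATH_CLOSE = "<PATH>", "</PATH>"  # hypothetical path delimiters


def enumerate_paths(triples, source, max_hops):
    """BFS from the question entity; returns [r1, e1, r2, e2, ...] for every path of 1..max_hops hops."""
    adjacency = {}
    for head, rel, tail in triples:
        adjacency.setdefault(head, []).append((rel, tail))
    paths, queue = [], deque([(source, [])])
    while queue:
        node, path = queue.popleft()
        if path:
            paths.append(path)
        if len(path) // 2 >= max_hops:  # each hop adds one relation and one entity
            continue
        for rel, tail in adjacency.get(node, []):
            queue.append((tail, path + [rel, tail]))
    return paths


def build_kg_trie(paths, source):
    """Insert each serialized path into a nested-dict trie (names stand in for LLM tokens)."""
    trie = {}
    for path in paths:
        node = trie
        for token in [PATH_OPEN, source, *path, PATH_CLOSE]:
            node = node.setdefault(token, {})
    return trie


def allowed_next(trie, prefix):
    """Tokens the trie permits after `prefix`; an empty list means the prefix is not a KG path."""
    node = trie
    for token in prefix:
        if token not in node:
            return []
        node = node[token]
    return sorted(node)


trie = build_kg_trie(enumerate_paths(TRIPLES, "Barack_Obama", max_hops=2), "Barack_Obama")
print(allowed_next(trie, [PATH_OPEN, "Barack_Obama"]))                        # ['born_in', 'spouse']
print(allowed_next(trie, [PATH_OPEN, "Barack_Obama", "born_in", "Honolulu"]))  # ['</PATH>', 'located_in']
print(allowed_next(trie, [PATH_OPEN, "Barack_Obama", "born_in", "Chicago"]))   # []
```

The last call shows why such an index curbs hallucination: "Obama was born in Chicago" is not a path in the KG, so the trie offers no continuation for it. A production version would store the LLM's actual subword token IDs rather than whole names.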

The GCR framework comprises three main components. First, it constructs a Knowledge Graph Trie (KG-Trie), a structured index that encodes the paths within the KG and guides LLM reasoning. Second, in a step called graph-constrained decoding, a KG-specialized LLM generates KG-grounded reasoning paths together with hypothesized answers; the KG-Trie constrains the decoding process so that every generated path is valid and rooted in the KG. Finally, GCR leverages the inductive reasoning capabilities of a general LLM, which reasons over the multiple generated paths to derive the final answers.
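The second and third components can be sketched end to end with toy stand-ins. This is an illustration under loud assumptions, not GCR's implementation: `toy_logit` is a hypothetical fixed logit table replacing the fine-tuned KG-specialized LLM, beam search is an assumed decoding strategy, and `aggregate_answers` swaps the general LLM's inductive reasoning for a simple probability-weighted vote. What it does show faithfully is the masking idea: at each step, probability mass is renormalized over only the tokens the trie allows, so no off-graph path can be emitted.

```python
import math

# Hypothetical serialized KG paths for the toy question "Which state was Barack Obama born in?"
PATHS = [
    ["<PATH>", "Barack_Obama", "born_in", "Honolulu", "</PATH>"],
    ["<PATH>", "Barack_Obama", "born_in", "Honolulu", "located_in", "Hawaii", "</PATH>"],
    ["<PATH>", "Barack_Obama", "spouse", "Michelle_Obama", "</PATH>"],
]
PATH_CLOSE = "</PATH>"


def build_trie(paths):
    trie = {}
    for path in paths:
        node = trie
        for token in path:
            node = node.setdefault(token, {})
    return trie


def toy_logit(prefix, token):
    """Hypothetical stand-in for the KG-specialized LLM's next-token logits."""
    return {"born_in": 2.0, "located_in": 1.0}.get(token, 0.0)


def constrained_beam_search(trie, logit_fn, beam_size=2, max_len=10):
    """Beam search in which only tokens extending a KG-Trie prefix can be generated."""
    beams, finished = [([], 0.0, trie)], []  # (tokens, cumulative log-prob, trie node)
    for _ in range(max_len):
        candidates = []
        for tokens, logp, node in beams:
            allowed = list(node)  # trie children are the only legal next tokens
            if not allowed:
                continue
            # Masking: normalize over allowed tokens only; every other token gets probability 0.
            logits = [logit_fn(tokens, t) for t in allowed]
            log_z = math.log(sum(math.exp(x) for x in logits))
            for token, logit in zip(allowed, logits):
                beam = (tokens + [token], logp + logit - log_z, node[token])
                if token == PATH_CLOSE:
                    finished.append(beam)
                else:
                    candidates.append(beam)
        if not candidates:
            break
        beams = sorted(candidates, key=lambda b: b[1], reverse=True)[:beam_size]
    return sorted(((t, lp) for t, lp, _ in finished), key=lambda x: x[1], reverse=True)


def aggregate_answers(scored_paths):
    """Stand-in for the general LLM: sum path probabilities per hypothesized answer."""
    votes = {}
    for tokens, logp in scored_paths:
        answer = tokens[-2]  # last entity before </PATH> is the hypothesized answer
        votes[answer] = votes.get(answer, 0.0) + math.exp(logp)
    return max(votes, key=votes.get)


results = constrained_beam_search(build_trie(PATHS), toy_logit)
for tokens, logp in results:
    print(" ".join(tokens), round(math.exp(logp), 3))
print(aggregate_answers(results))  # → Hawaii
```

In GCR itself the final step is an LLM prompted with the retrieved paths, which can weigh evidence in ways a vote cannot; the vote is used here only to keep the sketch self-contained.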

Extensive experiments show that GCR achieves state-of-the-art performance on several KGQA benchmarks, including WebQSP and CWQ, surpassing previous methods by 2.1% and 9.1% in accuracy (Hit), respectively. Because every generated reasoning path is constrained to the KG, GCR eliminated hallucinated reasoning paths, achieving a 100% faithful reasoning ratio. It also generalized zero-shot to unseen KGs without additional training: on a new commonsense knowledge graph, for example, GCR improved accuracy by 7.6% over baseline LLMs.
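The two metrics quoted above can be made concrete with a short sketch. It assumes the usual KGQA definition of Hit (a question counts as answered if any predicted answer matches a gold answer) and treats a reasoning path as faithful when every hop in it is an existing KG triple; the paper's exact evaluation protocol may differ, and the data and function names here are hypothetical.

```python
# Hypothetical toy KG stored as a set of (head, relation, tail) triples.
KG = {
    ("Barack_Obama", "born_in", "Honolulu"),
    ("Honolulu", "located_in", "Hawaii"),
}


def hit(predictions, gold):
    """1.0 if at least one predicted answer is in the gold answer set, else 0.0."""
    return 1.0 if set(predictions) & set(gold) else 0.0


def is_faithful(path, kg):
    """path = [e0, r1, e1, r2, e2, ...]; faithful iff every (entity, relation, entity) hop is a KG triple."""
    return all((path[i], path[i + 1], path[i + 2]) in kg for i in range(0, len(path) - 2, 2))


def faithful_ratio(paths, kg):
    """Fraction of generated reasoning paths that are fully grounded in the KG."""
    return sum(is_faithful(p, kg) for p in paths) / len(paths) if paths else 0.0


generated = [
    ["Barack_Obama", "born_in", "Honolulu", "located_in", "Hawaii"],  # every hop exists in the KG
    ["Barack_Obama", "born_in", "Kenya"],                              # hallucinated hop
]
questions = [(["Hawaii"], ["Hawaii"]), (["Chicago"], ["Honolulu"])]  # (predictions, gold)

print(faithful_ratio(generated, KG))                          # 0.5
print(sum(hit(p, g) for p, g in questions) / len(questions))  # 0.5
```

Under graph-constrained decoding, a path like the second one cannot be produced at all, since a KG-Trie built from this KG has no branch for it; that is the mechanism behind a 100% faithful reasoning ratio.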

In conclusion, GCR addresses the challenge of faithful reasoning in LLMs by integrating structured KGs directly into the reasoning process. Using a KG-Trie to constrain decoding, together with a dual-model design that pairs a KG-specialized LLM with a general LLM, yields reasoning that is faithful, efficient, and accurate. Because its reasoning paths are grounded in the KG, they are free of hallucinated facts, showcasing GCR's potential as a dependable method for large-scale reasoning tasks that combine structured and unstructured knowledge.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.


The post Graph-Constrained Reasoning (GCR): A Novel AI Framework that Bridges Structured Knowledge in Knowledge Graphs with Unstructured Reasoning in LLMs appeared first on MarkTechPost.
