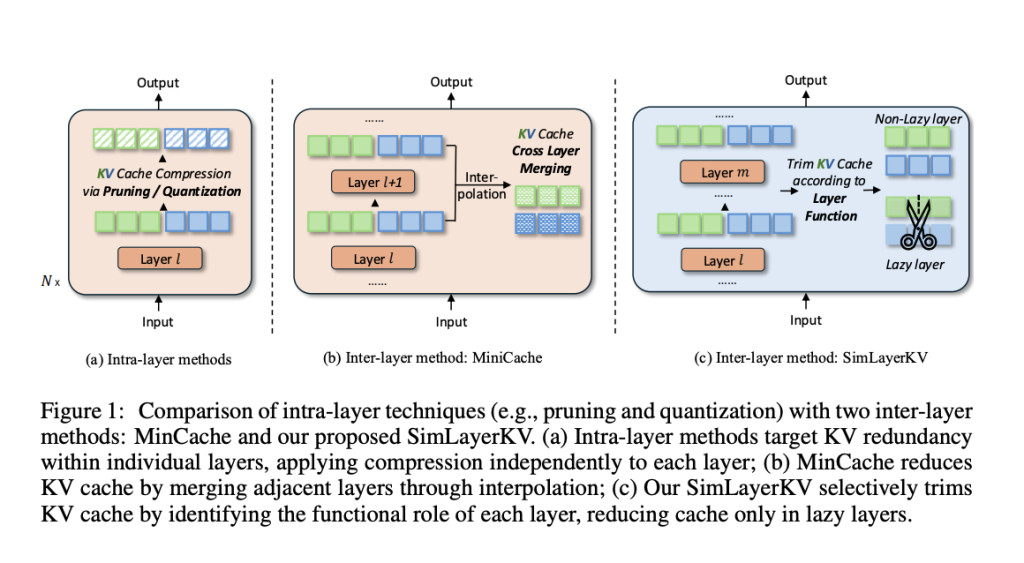

Recent advances in large language models (LLMs) have significantly improved their ability to handle long contexts, making them effective in tasks ranging from question answering to complex reasoning. However, a critical bottleneck has emerged: the memory needed to store the key-value (KV) cache grows with both the number of model layers and the length of the input sequence. This KV cache, which stores precomputed key and value tensors for each token to avoid recomputation during inference, requires substantial GPU memory, creating efficiency challenges for large-scale deployment. For instance, LLaMA2-7B demands approximately 62.5 GB of GPU memory for the KV cache with an input sequence length of 128K tokens. Existing methods for optimizing the KV cache, such as quantization and token eviction, focus primarily on intra-layer redundancies, leaving the potential savings from inter-layer redundancies largely unexploited.
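The 62.5 GB figure can be reproduced with a back-of-the-envelope calculation. The sketch below is not from the paper. It assumes 16-bit (2-byte) cache entries, a batch size of 1, "128K" read as 128,000 tokens, and the publicly documented LLaMA2-7B shape (32 layers, 32 key-value heads, head dimension 128). The function name `kv_cache_bytes` is a hypothetical helper introduced for illustration.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len,
                   bytes_per_value=2, batch_size=1):
    """Approximate KV cache size in bytes.

    The leading factor of 2 accounts for storing one key tensor and one
    value tensor per layer. bytes_per_value=2 assumes fp16/bf16 storage.
    """
    return (2 * num_layers * num_kv_heads * head_dim
            * seq_len * bytes_per_value * batch_size)


# Assumed LLaMA2-7B shape: 32 layers, 32 KV heads, head dimension 128.
size_bytes = kv_cache_bytes(num_layers=32, num_kv_heads=32,
                            head_dim=128, seq_len=128_000)
size_gib = size_bytes / 2**30

print(f"per-token cache: {size_bytes // 128_000} bytes")
print(f"total cache:     {size_gib:.1f} GiB")
```

Under these assumptions each token costs 512 KiB of cache, so the total scales linearly with both sequence length and layer count. That linear dependence on layer count is what makes dropping the cache in entire layers attractive.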

Researchers from Sea AI Lab and Singapore Management University propose SimLayerKV, a novel method aimed at reducing inter-layer KV cache redundancies by selectively dropping the KV cache in identified “lazy” layers. The technique is founded on an observation that certain layers in long-context LLMs exhibit “lazy” behavior, meaning they contribute minimally to modeling long-range dependencies compared to other layers. These lazy layers tend to focus on less important tokens or just the most recent tokens during generation. By analyzing attention weight patterns, the researchers found that the behavior of these lazy layers remains consistent across tokens for a given input, making them ideal candidates for KV cache reduction. SimLayerKV does not require retraining of models, is simple to implement (requiring only seven lines of code), and is compatible with 4-bit quantization for additional memory efficiency gains.

The proposed SimLayerKV framework selectively reduces the KV cache by trimming lazy layers without affecting non-lazy layers. The researchers designed a simple mechanism to identify lazy layers by analyzing the attention allocation pattern in each layer. Layers where attention is focused primarily on initial or recent tokens are tagged as lazy. During inference, these layers have their KV cache reduced, while non-lazy layers retain their full cache. Unlike intra-layer methods, which apply compression independently to each layer, SimLayerKV operates across layers, leveraging inter-layer redundancies to achieve greater compression. It has been evaluated on three representative LLMs: LLaMA2-7B, LLaMA3-8B, and Mistral-7B, using 16 tasks from the LongBench benchmark.
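The identification and trimming steps described above can be illustrated with a small NumPy sketch. This is not the authors' implementation. The function names, the threshold value, and the counts of initial and recent tokens are all hypothetical choices made for the example. It assumes a layer counts as lazy when the attention mass on initial plus recent tokens, averaged over heads and queries, exceeds a threshold.

```python
import numpy as np


def is_lazy_layer(attn, num_initial, num_recent, threshold):
    """Return True if a layer mostly attends to initial and recent tokens.

    attn: attention weights of shape (num_heads, num_queries, seq_len),
          where each row sums to 1.
    """
    seq_len = attn.shape[-1]
    if seq_len <= num_initial + num_recent:
        return False  # the cache is already small; nothing to trim
    initial_mass = attn[..., :num_initial].sum(axis=-1)
    recent_mass = attn[..., seq_len - num_recent:].sum(axis=-1)
    return bool((initial_mass + recent_mass).mean() > threshold)


def trim_kv_cache(keys, values, num_initial, num_recent):
    """Keep only the initial and recent positions of one layer's cache.

    keys, values: arrays of shape (num_heads, seq_len, head_dim).
    """
    seq_len = keys.shape[1]
    keep = np.concatenate([np.arange(num_initial),
                           np.arange(seq_len - num_recent, seq_len)])
    return keys[:, keep], values[:, keep]


# Synthetic example (all sizes and the threshold are illustrative).
num_heads, seq_len, head_dim = 2, 16, 8
num_initial, num_recent, threshold = 2, 3, 0.9

# "Lazy" pattern: 95% of attention on the first 2 and last 3 tokens.
lazy_attn = np.full((num_heads, 1, seq_len), 0.05 / 11)
lazy_attn[..., :num_initial] = 0.19
lazy_attn[..., -num_recent:] = 0.19

# "Non-lazy" pattern: attention spread uniformly over all tokens.
busy_attn = np.full((num_heads, 1, seq_len), 1.0 / seq_len)

rng = np.random.default_rng(0)
keys = rng.normal(size=(num_heads, seq_len, head_dim))
values = rng.normal(size=(num_heads, seq_len, head_dim))

for name, attn in [("lazy", lazy_attn), ("busy", busy_attn)]:
    if is_lazy_layer(attn, num_initial, num_recent, threshold):
        k, v = trim_kv_cache(keys, values, num_initial, num_recent)
    else:
        k, v = keys, values  # non-lazy layers keep the full cache
    print(name, k.shape, v.shape)
```

In this toy setup the lazy layer's cache shrinks from 16 positions to 5, while the other layer is left untouched. This mirrors the article's description that only lazy layers are trimmed.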

The experimental results show that SimLayerKV achieves a KV cache compression ratio of 5× with only a 1.2% drop in performance when combined with 4-bit quantization, and that this compression holds across various tasks with minimal degradation. For instance, with Mistral-7B, SimLayerKV achieved an average performance score comparable to that of the full KV cache while significantly reducing memory usage. On the Needle-in-a-Haystack (NIAH) task from the RULER benchmark, it maintained high retrieval performance even at a context length of 32K tokens, showing only a 4.4% drop compared to full KV caching. This indicates that the proposed method successfully balances efficiency and performance.

SimLayerKV provides an effective and straightforward approach to tackling the KV cache bottleneck in LLMs. By reducing inter-layer redundancies through selective KV cache trimming, it allows for significant memory savings with minimal performance impact. Its plug-and-play nature makes it a promising solution for enhancing inference efficiency in models handling long-context tasks. Moving forward, integrating SimLayerKV with other KV cache optimization techniques could further improve memory efficiency and model performance, presenting new opportunities in the efficient deployment of LLMs.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.

The post SimLayerKV: An Efficient Solution to KV Cache Challenges in Large Language Models appeared first on MarkTechPost.
