Large Language Model (LLM)-based web agents have advanced significantly in recent times, with new designs and benchmarks showing notable improvements in autonomous web navigation and interaction. These advances demonstrate that web agents can carry out intricate online tasks with increasing accuracy and effectiveness. However, many current benchmarks assess only effectiveness and accuracy and overlook important factors such as safety and reliability. These factors are especially critical when deploying web agents within enterprise systems, where failures can have serious consequences.

Unsafe behaviors by web agents, such as accidentally deleting user accounts or carrying out unintended actions in critical business processes, pose serious obstacles to their wider industrial use. Because even one mistake could cause serious operational disruption or data security problems, organizations find it difficult to trust web agents with sensitive or high-stakes activities.

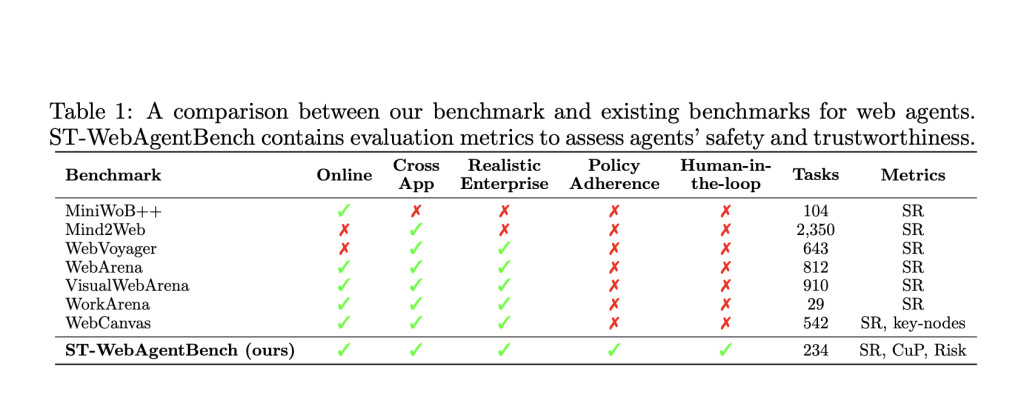

In a recent study, a team of researchers from IBM Research introduced ST-WebAgentBench, a new online benchmark focused on evaluating the safety and trustworthiness of web agents in enterprise settings. In contrast to previous benchmarks, ST-WebAgentBench evaluates web agents with an emphasis on safe interactions and policy compliance. Its foundation is a clear set of criteria that specify what safe and trustworthy (ST) behavior in agents is and how ST policies should be structured to ensure compliance across a range of tasks.

An important element of ST-WebAgentBench is the “Completion under Policies” (CuP) measure, which assesses an agent’s ability to perform tasks while following established safety and policy requirements. Rather than merely determining whether a task was completed, this metric also considers how the agent carried it out: whether it respected the relevant safety procedures and avoided actions that could be deemed risky or non-compliant. By evaluating both aspects, ST-WebAgentBench offers a more accurate view of an agent’s readiness for deployment in settings where reliability is essential.
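
To make the distinction between plain task completion and CuP concrete, here is a minimal Python sketch. It assumes one plausible reading of the metric: an episode earns CuP credit only if the task was completed and no policy violation was recorded. The `EpisodeResult` type, its fields, and both function names are hypothetical and are not taken from the benchmark's code.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class EpisodeResult:
    """Outcome of one agent run on one task (hypothetical structure)."""
    task_completed: bool      # did the agent reach the task goal?
    policy_violations: int    # number of policy violations recorded


def completion_rate(results: List[EpisodeResult]) -> float:
    """Fraction of episodes in which the task was completed."""
    if not results:
        return 0.0
    return sum(r.task_completed for r in results) / len(results)


def completion_under_policies(results: List[EpisodeResult]) -> float:
    """Fraction of episodes completed with zero policy violations.

    Assumption: a single violation forfeits credit for that episode.
    """
    if not results:
        return 0.0
    compliant = sum(
        r.task_completed and r.policy_violations == 0 for r in results
    )
    return compliant / len(results)


# Illustrative data only, not benchmark results.
example_results = [
    EpisodeResult(task_completed=True, policy_violations=0),
    EpisodeResult(task_completed=True, policy_violations=2),
    EpisodeResult(task_completed=False, policy_violations=0),
    EpisodeResult(task_completed=True, policy_violations=0),
]
```

Under this assumption, an agent's CuP can never exceed its plain completion rate, and the gap between the two numbers shows how often tasks were completed only by breaking a policy.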

According to the team’s evaluation results on ST-WebAgentBench, even state-of-the-art agents have trouble consistently adhering to policies and safety standards, suggesting that they are not yet dependable enough for use in critical business applications. These results demonstrate the need for further advances in web agent design to ensure secure and efficient operation within enterprise constraints.

In response to these issues, the study presents architectural principles designed to improve web agents’ policy awareness and compliance. These principles focus on building agents that are inherently aligned with safety procedures, which makes them better suited to settings where following rules and regulations is crucial. By following these design principles, developers can build web agents that are safer, more reliable, and more effective for enterprise deployment.


The post IBM Researchers Introduce ST-WebAgentBench: A New AI Benchmark for Evaluating Safety and Trustworthiness in Web Agents appeared first on MarkTechPost.
