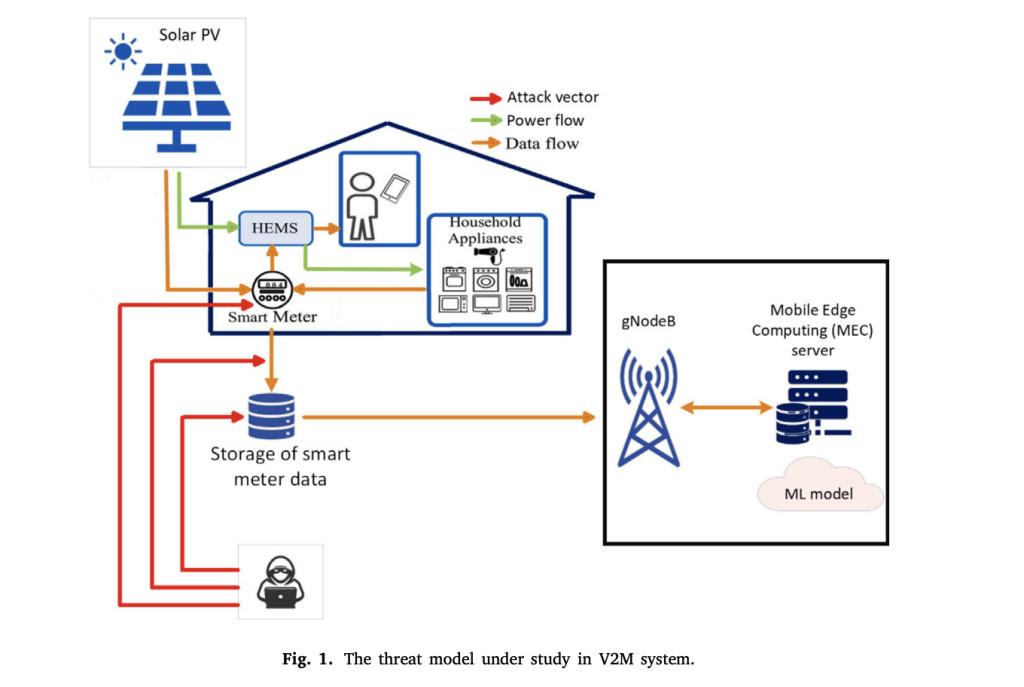

Mobile Vehicle-to-Microgrid (V2M) services enable electric vehicles to supply or store energy for localized power grids, enhancing grid stability and flexibility. AI is crucial in optimizing energy distribution, forecasting demand, and managing real-time interactions between vehicles and the microgrid. However, adversarial attacks on AI algorithms can manipulate energy flows, disrupting the balance between vehicles and the grid and potentially compromising user privacy by exposing sensitive data like vehicle usage patterns.

Although there is growing research on related topics, V2M systems still need to be thoroughly examined in the context of adversarial machine learning attacks. Existing studies focus on adversarial threats in smart grids and wireless communication, such as inference and evasion attacks on machine learning models. These studies typically assume full adversary knowledge or focus on specific attack types. Thus, there is an urgent need for comprehensive defense mechanisms tailored to the unique challenges of V2M services, especially those considering both partial and full adversary knowledge.

To address this need, a paper was recently published in Simulation Modelling Practice and Theory. For the first time, this work proposes an AI-based countermeasure to defend against adversarial attacks in V2M services. It presents multiple attack scenarios and a GAN-based detector that mitigates adversarial threats, particularly those enhanced by conditional GAN (CGAN) models.

Concretely, the proposed approach revolves around augmenting the original training dataset with high-quality synthetic data generated by a generative adversarial network (GAN). The GAN operates at the mobile edge, where it first learns to produce realistic samples that closely mimic legitimate data. This process involves two networks: the generator, which creates synthetic data, and the discriminator, which distinguishes between real and synthetic samples. By training the GAN on clean, legitimate data, the generator improves its ability to create samples that are indistinguishable from real data.
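For reference, the interplay between generator and discriminator described above is usually formalized as a minimax objective. The article does not state which loss the authors use, so the expression below is the standard GAN formulation and not necessarily the paper's exact objective. Here $x$ is a legitimate sample, $z$ is a noise vector fed to the generator $G$, and $D(\cdot)$ is the discriminator's estimated probability that its input is real:

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator pushes $V$ up by scoring real samples high and generated ones low, while the generator pushes $V$ down by producing samples the discriminator accepts as real.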

Once trained, the GAN creates synthetic samples to enrich the original dataset, increasing the variety and amount of training inputs, which is critical for strengthening the classification model’s resilience. The research team then trains a binary classifier, Classifier-1, on the enhanced dataset to accept valid samples and filter out malicious ones. Classifier-1 forwards only authentic requests to Classifier-2, which categorizes them as low, medium, or high priority. This tiered defense isolates adversarial requests, preventing them from interfering with crucial decision-making processes in the V2M system.
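The two-stage flow described above can be sketched in a few lines of Python. This is only an illustration of the gating idea: the class name `TwoStagePipeline` and the functions `toy_detector` and `toy_priority` are hypothetical stand-ins with hand-written rules, not the trained classifiers from the paper.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence

Features = Sequence[float]


@dataclass
class TwoStagePipeline:
    # Stage 1 (stand-in for Classifier-1): True when a request looks legitimate.
    is_legitimate: Callable[[Features], bool]
    # Stage 2 (stand-in for Classifier-2): maps a request to a priority label.
    priority_of: Callable[[Features], str]

    def handle(self, request: Features) -> Optional[str]:
        """Return a priority label, or None if stage 1 rejects the request."""
        if not self.is_legitimate(request):
            return None  # rejected requests never reach the priority classifier
        return self.priority_of(request)


# Toy rules standing in for trained models (assumptions for illustration only).
def toy_detector(request: Features) -> bool:
    # Treat any feature outside [0, 1] as a sign of a manipulated request.
    return all(0.0 <= value <= 1.0 for value in request)


def toy_priority(request: Features) -> str:
    mean = sum(request) / len(request)
    if mean < 1 / 3:
        return "low"
    if mean < 2 / 3:
        return "medium"
    return "high"


pipeline = TwoStagePipeline(toy_detector, toy_priority)
requests = [[0.1, 0.2], [0.5, 0.6], [0.9, 0.8], [0.4, 7.5]]
labels = [pipeline.handle(r) for r in requests]
print(labels)
```

The point of the design is that the priority classifier only ever sees inputs that passed the detector, so a rejected request cannot influence prioritization.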

By leveraging the GAN-generated samples, the authors enhance the classifier’s generalization capabilities, enabling it to better recognize and resist adversarial attacks during operation. This approach fortifies the system against potential vulnerabilities and helps preserve the integrity and reliability of data within the V2M framework. The research team concludes that their adversarial training strategy, centered on GANs, offers a promising direction for safeguarding V2M services against malicious interference, thus maintaining operational efficiency and stability in smart grid environments.

To evaluate the proposed method, the authors analyze adversarial machine learning attacks against V2M services across three scenarios and five access cases. The results indicate that as adversaries have less access to training data, the adversarial detection rate (ADR) improves, with the DBSCAN algorithm enhancing detection performance. However, using a conditional GAN (CGAN) for data augmentation significantly reduces DBSCAN’s effectiveness. In contrast, the GAN-based detection model excels at identifying attacks, particularly in gray-box cases, and remains robust across attack conditions despite a general decline in detection rates as adversarial access increases.
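The article does not define ADR precisely. A minimal sketch, assuming it means the fraction of adversarial samples that the detector flags, could look like this; the function name and the toy labels are hypothetical and are not results from the paper.

```python
from typing import Sequence


def adversarial_detection_rate(
    is_adversarial: Sequence[bool], flagged: Sequence[bool]
) -> float:
    """Fraction of truly adversarial samples that the detector flagged.

    Assumed definition: detected adversarial samples / all adversarial samples.
    """
    if len(is_adversarial) != len(flagged):
        raise ValueError("inputs must have the same length")
    total = sum(1 for adv in is_adversarial if adv)
    if total == 0:
        raise ValueError("no adversarial samples to evaluate")
    detected = sum(1 for adv, flag in zip(is_adversarial, flagged) if adv and flag)
    return detected / total


# Toy data: four adversarial and two legitimate requests.
truth = [True, True, True, True, False, False]
flags = [True, True, True, False, False, True]
adr = adversarial_detection_rate(truth, flags)
print(adr)
```

Under this definition, a false alarm on a legitimate request (the last sample above) does not change ADR, so the metric would normally be read alongside a false-positive rate.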

In conclusion, the proposed AI-based countermeasure utilizing GANs offers a promising approach to enhance the security of Mobile V2M services against adversarial attacks. The solution improves the classification model’s robustness and generalization capabilities by generating high-quality synthetic data to enrich the training dataset. The results demonstrate that as adversarial access decreases, detection rates improve, highlighting the effectiveness of the layered defense mechanism. This research paves the way for future advancements in safeguarding V2M systems, ensuring their operational efficiency and resilience in smart grid environments.


The post This AI Paper Proposes an AI Framework to Prevent Adversarial Attacks on Mobile Vehicle-to-Microgrid Services appeared first on MarkTechPost.
