With recent advances in large language models (LLMs), a wide array of businesses are building new chatbot applications, either to help their external customers or to support internal teams. For many of these use cases, businesses are building Retrieval Augmented Generation (RAG) style chat-based assistants, where a powerful LLM can reference company-specific documents to answer questions relevant to a particular business or use case.

In the last few months, there has been substantial growth in the availability and capabilities of multimodal foundation models (FMs). These models are designed to understand images and generate text about them, bridging the gap between visual information and natural language. Although such multimodal models are broadly useful for answering questions and interpreting imagery, they can only answer questions using information from their own training data.

In this post, we show how to create a multimodal chat assistant on Amazon Web Services (AWS) using Amazon Bedrock models, where users can submit images and questions, and text responses are sourced from a closed set of proprietary documents. Such a multimodal assistant can be useful across industries. For example, retailers can use this system to sell their products more effectively (for example, HDMI_adaptor.jpeg, “How can I connect this adapter to my smart TV?”). Equipment manufacturers can build applications that help their teams work more effectively (for example, broken_machinery.png, “What type of piping do I need to fix this?”). This approach is broadly effective in scenarios where image inputs are needed to query a proprietary text dataset. In this post, we demonstrate this concept on a synthetic dataset from a car marketplace, where a user can upload a picture of a car, ask a question, and receive responses based on the car marketplace dataset.

Solution overview

For our custom multimodal chat assistant, we start by creating a vector database of relevant text documents that will be used to answer user queries. Amazon OpenSearch Service is a powerful, highly flexible search engine that allows users to retrieve data based on a variety of lexical and semantic retrieval approaches. This post focuses on text-only documents, but for embedding more complex document types, such as those with images, see Talk to your slide deck using multimodal foundation models hosted on Amazon Bedrock and Amazon SageMaker.

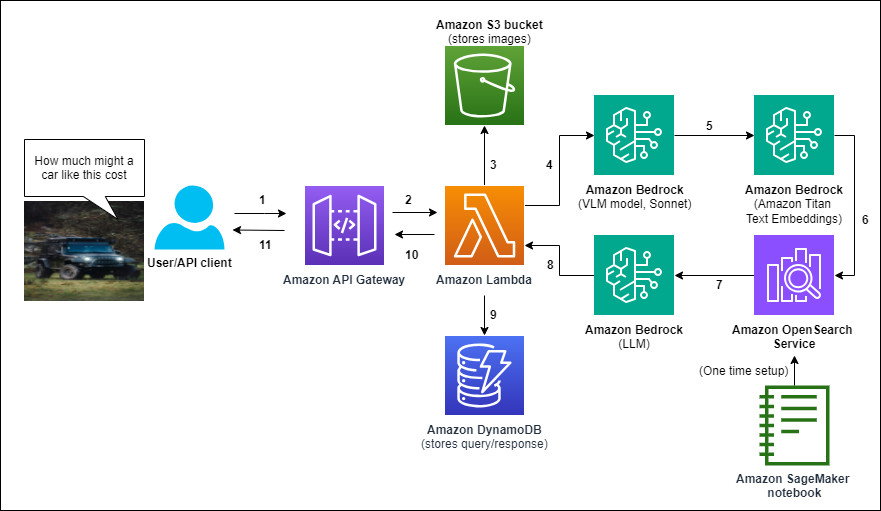

After the documents are ingested in OpenSearch Service (a one-time setup step), we deploy the full end-to-end multimodal chat assistant using an AWS CloudFormation template. The following system architecture represents the logic flow when a user uploads an image, asks a question, and receives a text response grounded in the text dataset stored in OpenSearch Service.

The logic flow for generating an answer to a text-image query pair is as follows:

Steps 1 and 2 – To start, a user query and corresponding image are routed through an Amazon API Gateway connection to an AWS Lambda function, which serves as the compute that processes the request and orchestrates the overall workflow.

Step 3 – The Lambda function stores the query image in Amazon S3 with a specified ID. This may be useful for later chat assistant analytics.

Steps 4–8 – The Lambda function orchestrates a series of Amazon Bedrock calls to a multimodal model, an LLM, and a text-embedding model:

Query the Claude 3 Sonnet model with the query and image to produce a text description.

Embed a concatenation of the original question and the text description with the Amazon Titan Text Embeddings model.

Retrieve relevant text data from OpenSearch Service.

Generate a grounded response to the original question based on the retrieved documents.
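The Amazon Bedrock calls above can be sketched as request payloads. This is a minimal, standard-library-only sketch that assumes the Anthropic Messages API format used for Claude 3 Sonnet on Bedrock and the Titan Text Embeddings V2 request format. The prompt wording, the `vector_field` index field, and the use of Claude 3 Sonnet for the final generation are hypothetical choices, not necessarily what the deployed Lambda function does.

```python
import base64
import json

# Bedrock model IDs for the models named in this post; confirm they are enabled
# in your Region. Reusing Claude 3 Sonnet for the final generation is an assumption.
VISION_MODEL_ID = "anthropic.claude-3-sonnet-20240229-v1:0"
EMBEDDING_MODEL_ID = "amazon.titan-embed-text-v2:0"


def build_claude_request(prompt, image_bytes=None, media_type="image/jpeg", max_tokens=512):
    """Anthropic Messages API body; include image_bytes for the vision call."""
    content = []
    if image_bytes is not None:
        # Images are passed inline as a base64-encoded content block.
        content.append({
            "type": "image",
            "source": {
                "type": "base64",
                "media_type": media_type,
                "data": base64.b64encode(image_bytes).decode("utf-8"),
            },
        })
    content.append({"type": "text", "text": prompt})
    return json.dumps({
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": content}],
    })


def build_embedding_request(question, description, dimensions=1024):
    """Titan Text Embeddings V2 body for the question concatenated with the description."""
    return json.dumps({
        "inputText": f"{question}\n{description}",
        "dimensions": dimensions,
        "normalize": True,
    })


def build_knn_query(vector, field="vector_field", k=3):
    """OpenSearch k-NN search body; the field name is hypothetical."""
    return {"size": k, "query": {"knn": {field: {"vector": vector, "k": k}}}}


def build_grounded_prompt(question, documents):
    """Text-only prompt asking the LLM to answer only from the retrieved listings."""
    context = "\n\n".join(documents)
    return (
        "Answer the question using only the car listings below.\n\n"
        f"<listings>\n{context}\n</listings>\n\nQuestion: {question}"
    )
```

With boto3, each JSON body would be passed to `invoke_model` on a `bedrock-runtime` client, for example `client.invoke_model(modelId=VISION_MODEL_ID, body=build_claude_request(prompt, image_bytes))`. After parsing the response body with `json.loads(response["body"].read())`, Claude's reply is under `content[0]["text"]` and the Titan vector is under `embedding`.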

Step 9 – The Lambda function stores the user query and answer in Amazon DynamoDB, linked to the Amazon S3 image ID.
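Steps 3 and 9 link each stored image to its chat record through a shared ID. A minimal sketch, assuming a UUID as the ID; the `query-images/` key prefix and the attribute names (`image_id`, `timestamp`, `question`, `answer`) are hypothetical, because the post doesn't specify the deployed schema.

```python
import uuid
from datetime import datetime, timezone


def new_image_key(prefix="query-images", extension="jpeg"):
    """Return (image_id, s3_key); the key layout is a hypothetical convention."""
    image_id = str(uuid.uuid4())
    return image_id, f"{prefix}/{image_id}.{extension}"


def build_chat_record(image_id, question, answer):
    """DynamoDB item in low-level attribute-value format, linked by image_id."""
    return {
        "image_id": {"S": image_id},
        "timestamp": {"S": datetime.now(timezone.utc).isoformat()},
        "question": {"S": question},
        "answer": {"S": answer},
    }
```

With boto3 clients, the key would go to `s3.put_object(Bucket=..., Key=key, Body=image_bytes)` and the record to `dynamodb.put_item(TableName=..., Item=record)`. Keying the table on the same ID is what makes it easy to join answers back to their images during later analysis.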

Steps 10 and 11 – The grounded text response is sent back to the client.

The OpenSearch index also requires a one-time initial setup, which is done using an Amazon SageMaker notebook.

Prerequisites

To use the multimodal chat assistant solution, you need access to a handful of Amazon Bedrock FMs.

On the Amazon Bedrock console, choose Model access in the navigation pane.

Choose Manage model access.

Activate all the Anthropic models, including Claude 3 Sonnet, as well as the Amazon Titan Text Embeddings V2 model, as shown in the following screenshot.

For this post, we recommend activating these models in the us-east-1 or us-west-2 AWS Region. These should become immediately active and available.

Simple deployment with AWS CloudFormation

To deploy the solution, we provide a simple shell script called deploy.sh, which you can use to deploy the end-to-end solution in different Regions. You can download this script directly from Amazon S3 with the following command:

aws s3 cp s3://aws-blogs-artifacts-public/artifacts/ML-16363/deploy.sh .

Using the AWS Command Line Interface (AWS CLI), you can deploy this stack in various Regions using one of the following commands:

or

The stack may take up to 10 minutes to deploy. When the stack is complete, note the assigned physical ID of the Amazon OpenSearch Serverless collection, which you will use in further steps. It should look something like zr1b364emavn65x5lki8. Also, note the physical ID of the API Gateway connection, which should look something like zxpdjtklw2, as shown in the following screenshot.
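As an alternative to copying the IDs from the console, you could look them up programmatically. A minimal sketch, assuming the response shape of boto3's CloudFormation `describe_stack_resources`; the stack name is whatever you deployed, and whether the stack creates a REST API (`AWS::ApiGateway::RestApi`) or an HTTP API (`AWS::ApiGatewayV2::Api`) is an assumption, so both types are checked.

```python
# Resource types whose physical IDs you need for the later steps. Which API
# Gateway resource type the stack uses is an assumption, so both are listed.
RESOURCE_TYPES = (
    "AWS::OpenSearchServerless::Collection",
    "AWS::ApiGateway::RestApi",
    "AWS::ApiGatewayV2::Api",
)


def find_physical_ids(stack_resources, resource_types=RESOURCE_TYPES):
    """Map resource type -> physical ID from a CloudFormation StackResources list."""
    return {
        resource["ResourceType"]: resource["PhysicalResourceId"]
        for resource in stack_resources
        if resource["ResourceType"] in resource_types
    }


def lookup_stack_ids(stack_name, region):
    """Query CloudFormation for the stack's resources (needs boto3 and credentials)."""
    import boto3  # imported lazily so find_physical_ids works without boto3

    client = boto3.client("cloudformation", region_name=region)
    response = client.describe_stack_resources(StackName=stack_name)
    return find_physical_ids(response["StackResources"])
```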

Populate the OpenSearch Service index

Although the OpenSearch Serverless collection has been instantiated, you still need to create and populate a vector index with the document dataset of car listings. To do this, you use an Amazon SageMaker notebook.

On the SageMaker console, navigate to the newly created SageMaker notebook named MultimodalChatbotNotebook (as shown in the following image), which will come prepopulated with car-listings.zip and Titan-OS-Index.ipynb.

After you open the Titan-OS-Index.ipynb notebook, change the host_id variable to the collection physical ID you noted earlier.

Run the notebook from top to bottom to create and populate a vector index with a dataset of 10 car listings.
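For reference, creating the vector index involves roughly the following. This is a sketch, not the contents of Titan-OS-Index.ipynb: it assumes the notebook combines host_id with the Region into the standard OpenSearch Serverless endpoint, and the field names, the HNSW/faiss method settings, and the 1,024 dimensions (the Titan Text Embeddings V2 default) are assumptions.

```python
def collection_endpoint(collection_id, region):
    """Standard OpenSearch Serverless endpoint for a collection ID."""
    return f"https://{collection_id}.{region}.aoss.amazonaws.com"


def vector_index_body(dimension=1024):
    """k-NN index definition with a vector field and a text field (names are hypothetical)."""
    return {
        "settings": {"index.knn": True},
        "mappings": {
            "properties": {
                "vector_field": {
                    "type": "knn_vector",
                    "dimension": dimension,
                    # Inner product equals cosine similarity for normalized embeddings.
                    "method": {"name": "hnsw", "engine": "faiss", "space_type": "innerproduct"},
                },
                "text": {"type": "text"},
            }
        },
    }
```

With the opensearch-py client, the body could be passed to `client.indices.create(index=..., body=vector_index_body())`, after which each car listing is indexed with its text and embedding. Requests to OpenSearch Serverless must be signed with SigV4 for the `aoss` service.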

After you run the code to populate the index, it may still take a few minutes before the index shows up as populated on the OpenSearch Service console, as shown in the following screenshot.

Test the Lambda function

Next, test the Lambda function created by the CloudFormation stack by submitting a test event JSON. In the following JSON, replace your bucket with the name of the bucket created to deploy the solution, for example, multimodal-chatbot-deployment-ACCOUNT_NO-REGION.

You can set up this test by navigating to the Test panel of the created Lambda function and defining a new test event with the preceding JSON. Then, choose Test at the top right of the event definition.

If your test event references a bucket other than those allowlisted in the CloudFormation template, make sure to add the relevant permissions to the Lambda execution role.

The Lambda function may take 10–20 seconds to run (mostly depending on the size of your image). If the function performs properly, you should receive an output JSON similar to the following code block. The following screenshot shows the successful output on the console.

Note that if you just enabled model access, it may take a few minutes for access to propagate to the Lambda function.

Test the API

For integration into an application, we’ve connected the Lambda function to an API Gateway connection that can be called from various devices. The SageMaker notebook instance includes a notebook that lets you query the system with a question and an image and returns a response. To use this notebook, replace the API_GW variable with the physical ID of the API Gateway connection created by the CloudFormation stack, and the REGION variable with the Region your infrastructure was deployed in. Then, after making sure your image location and query are set correctly, run the notebook cell. Within 10–20 seconds, you should receive the output of your multimodal query sourced from your own text dataset. This is shown in the following screenshot.
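For orientation, a request to the API can be assembled with the standard library. A minimal sketch: the execute-api URL format is the standard one for API Gateway, but the `prod` stage, the `chat` resource path, and the `question`/`image` payload fields are hypothetical placeholders, so check the provided notebook for the actual request shape.

```python
import base64
import json
import urllib.request


def build_api_request(api_id, region, image_bytes, question, stage="prod", resource="chat"):
    """POST request carrying a question and a base64-encoded image (fields are hypothetical)."""
    url = f"https://{api_id}.execute-api.{region}.amazonaws.com/{stage}/{resource}"
    payload = {
        "question": question,
        "image": base64.b64encode(image_bytes).decode("ascii"),
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

The request would be sent with `urllib.request.urlopen(req, timeout=30)`. Because the API is only accessible from the provided notebook, calling it from anywhere else would also require the appropriate access configuration.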

Note that the API Gateway connection is only accessible from this specific notebook, and more comprehensive security and permission elements are required to productionize the system.

Qualitative results

A grounded multimodal chat assistant, where users can submit images with queries, can be useful in many settings. We demonstrate this application with a dataset of cars for sale. For example, a user may have a question about a car they’re looking at, so they snap a picture and submit a question, such as “How much might a car like this cost?” Rather than answering the question with generic information that the LLM was trained on (which may be out of date), the assistant grounds its responses in your local, specific car sales dataset. In this use case, we took images from Unsplash and used a synthetically created dataset of 10 car listings to answer questions. The model and year of the 10 car listings are shown in the following screenshot.

In the examples in the following table, you can observe that the vision language model (VLM) system has not only identified the cars in the listings that are most similar to the input image, but has also answered the questions with specific numbers, costs, and locations that are only available from our closed car dataset, car-listings.zip.

| Question | Image | Answer |
| --- | --- | --- |
| How much would a car like this cost? | | The 2013 Jeep Grand Cherokee SRT8 listing is most relevant, with an asking price of $17,000 despite significant body damage from an accident. However, it retains the powerful 470 hp V8 engine and has been well-maintained with service records. |
| What is the engine size of this car? | | The car listing for the 2013 Volkswagen Beetle mentions it has a fuel-efficient 1.8L turbocharged engine. No other engine details are provided in the listings. |
| Where in the world could I purchase a used car like this? | | Based on the car listings provided, the 2021 Tesla Model 3 for sale seems most similar to the car you are interested in. It’s described as a low mileage, well-maintained Model 3 in pristine condition located in the Seattle area for $48,000. |

Latency and quantitative results

Because speed and latency are important for chat assistants, and because this solution consists of multiple API calls to FMs and data stores, it’s worth measuring the speed of each step in the process. We did an internal analysis of the relative speeds of the various API calls, and the following graph visualizes the results.

From slowest to fastest, the Claude 3 Sonnet vision call takes 8.2 seconds on average. The final output generation step (LLM Gen on the graph in the screenshot) takes 4.9 seconds on average. The Amazon Titan Text Embeddings model and OpenSearch Service retrieval are much faster, taking 0.28 and 0.27 seconds on average, respectively.

In these experiments, the average time for the full multistage multimodal chatbot is 15.8 seconds. However, the time can be as low as 11.5 seconds overall if you submit a 2.2 MB image, and it could be much lower if you use even lower-resolution images.
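The reported averages can be checked with simple arithmetic. This sketch uses only the numbers above; treating the gap between the sum of the four measured calls and the 15.8-second total as overhead from the remaining steps (S3 and DynamoDB writes, API Gateway, and Lambda) is an assumption, because the component averages were not necessarily measured on identical runs.

```python
# Average per-step latencies in seconds, as reported in this section.
STEP_LATENCIES = {
    "vision description (Claude 3 Sonnet)": 8.2,
    "final generation (LLM Gen)": 4.9,
    "embedding (Titan Text Embeddings)": 0.28,
    "retrieval (OpenSearch Service)": 0.27,
}
AVERAGE_TOTAL = 15.8


def latency_breakdown(steps, total):
    """Return (sum of measured steps, unexplained remainder, share of total per step)."""
    measured = sum(steps.values())
    remainder = total - measured
    shares = {name: seconds / total for name, seconds in steps.items()}
    return measured, remainder, shares


if __name__ == "__main__":
    measured, remainder, shares = latency_breakdown(STEP_LATENCIES, AVERAGE_TOTAL)
    print(f"measured steps: {measured:.2f} s, other overhead: {remainder:.2f} s")
    for name, share in shares.items():
        print(f"{name}: {share:.1%} of the average total")
```

The four measured calls sum to 13.65 seconds, leaving about 2.15 seconds for everything else, and the two FM calls (vision and final generation) account for roughly 83% of the average total. This is consistent with the observation that smaller images reduce end-to-end latency.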

Clean up

To clean up the resources and avoid charges, follow these steps:

Make sure all the important data from Amazon DynamoDB and Amazon S3 is saved.

Manually empty and delete the two provisioned S3 buckets.

Delete the deployed resource stack from the CloudFormation console.

Conclusion

In applications ranging from online chat assistants to tools that help sales reps close a deal, AI assistants are a rapidly maturing technology for increasing efficiency across sectors. Often these assistants use RAG to produce answers grounded in custom documentation and datasets that the LLM was not trained on. A natural next step is a multimodal chat assistant that does the same, answering multimodal questions based on a closed text dataset.

In this post, we demonstrated how to create a multimodal chat assistant that takes images and text as input and produces text answers grounded in your own dataset. This solution can be applied in settings ranging from marketplaces to customer service, wherever domain-specific answers must be sourced from custom datasets in response to multimodal queries.

We encourage you to deploy the solution for yourself, try different image and text datasets, and explore how you can orchestrate various Amazon Bedrock FMs to produce streamlined, custom, multimodal systems.

About the Authors

Emmett Goodman is an Applied Scientist at the Amazon Generative AI Innovation Center. He specializes in computer vision and language modeling, with applications in healthcare, energy, and education. Emmett holds a PhD in Chemical Engineering from Stanford University, where he also completed a postdoctoral fellowship focused on computer vision and healthcare.

Negin Sokhandan is a Principal Applied Scientist at the AWS Generative AI Innovation Center, where she works on building generative AI solutions for AWS strategic customers. Her research background is statistical inference, computer vision, and multimodal systems.

Yanxiang Yu is an Applied Scientist at the Amazon Generative AI Innovation Center. With over 9 years of experience building AI and machine learning solutions for industrial applications, he specializes in generative AI, computer vision, and time series modeling.
