This post explains how large language models (LLMs) can connect with SQL databases to build chat apps that are easy and enjoyable to use. Picture asking your data a question the way you would ask a coworker. By the end, you will know how to set up the tools, turn natural-language questions into SQL queries, and return the results in a clear and simple way.

Key Highlights

Explore how Large Language Models (LLMs) and Structured Query Language (SQL) work together. This helps you talk to databases using natural language. It makes working with data feel easier.

Learn how to set up your environment for LLM-SQL. This means choosing the right tools and libraries. You will also set up your database for safe access.

We will show you how to create a simple chat interface. This will turn user requests into SQL queries and get the results.

Discover how to use LLMs like GPT to improve chat applications. They can help understand what users want and make SQL queries more flexible.

Learn about the common problems when working with LLMs and SQL. You will also find ways to solve these issues and make performance better.

Understanding the Basics of LLM and SQL for Database Chatting

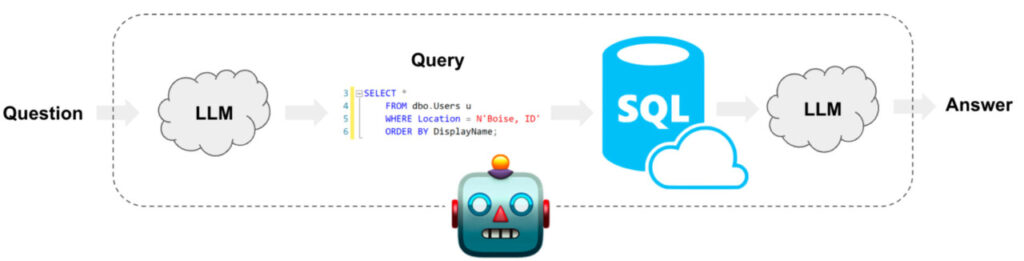

The strength of this integration comes from the teamwork of LLMs and SQL databases. LLMs, such as GPT, are skilled at understanding and writing text that seems human. This skill helps them read user requests in simple words. They can understand what a person needs, even if the question is not asked with technical database terms.

SQL databases are key for storing and managing data. They have a clear structure, which helps to keep, organize, and find information with simple queries. When we mix these two ideas, we connect how people talk with how databases work.

Introduction to Large Language Models (LLM)

Large Language Models (LLMs) are a core tool in Natural Language Processing (NLP). They can read text and write sentences that feel natural, which makes them a good fit for chat apps. When you combine an LLM with SQL queries, the model can translate a user's question into a query, and the database returns the matching information quickly. Bringing language models and databases together in this way helps you build smart chatbots and improves the user experience.

The Role of SQL in Database Management

SQL means Structured Query Language. It is the main language used for working with relational databases. A SQL database stores data clearly. It uses tables that have rows and columns. Rows are the records, and columns are the fields. SQL gives a strong and standard way to access and manage data.

Users can make SQL queries to get, change, add, or remove data in the database. These queries are like instructions. They inform the database about what to do and which data to handle. To create these queries, you must follow specific rules. You also need to understand the structure of the database. This means knowing the table names, column names, and data types.
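The four kinds of query described above, plus a look at the table structure, can be sketched with Python's built-in sqlite3 module. This is a minimal illustration rather than the setup used in this guide: it runs against an in-memory SQLite database so it needs no server, and the columns of worker_table are assumptions made up for the example.

```python
import sqlite3

# In-memory SQLite database, used only so the example runs without a server.
conn = sqlite3.connect(":memory:")

# Hypothetical columns; the real worker_table may look different.
conn.execute(
    "CREATE TABLE worker_table (id INTEGER PRIMARY KEY, name TEXT, salary REAL)"
)

# Add rows (INSERT), using placeholders instead of string formatting.
conn.executemany(
    "INSERT INTO worker_table (name, salary) VALUES (?, ?)",
    [("Asha", 52000.0), ("Ben", 48000.0), ("Chen", 61000.0)],
)

# Change a row (UPDATE) and remove a row (DELETE).
conn.execute("UPDATE worker_table SET salary = ? WHERE name = ?", (50000.0, "Ben"))
conn.execute("DELETE FROM worker_table WHERE name = ?", ("Chen",))

# Get rows (SELECT).
rows = conn.execute(
    "SELECT name, salary FROM worker_table ORDER BY name"
).fetchall()

# Inspect the structure: column names and declared data types.
columns = [
    (col[1], col[2]) for col in conn.execute("PRAGMA table_info(worker_table)")
]

print(rows)
print(columns)
```

The last step matters for LLM work: the table names, column names, and data types are exactly the context a model needs before it can write a valid query.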

Setting Up Your Environment for LLM-SQL Interactions

Before you begin building, you need to set up a good environment. This means creating a workspace where your LLM and SQL database can work together smoothly. When you do this, everything, like your code and database links, will be ready to connect.

First, pick the right tools. LangChain is a popular framework for building apps that use LLMs. It helps you connect to various data sources, including SQL databases. You must install the right libraries and configure the connection to your database.

Tools and Libraries Needed for LLM-SQL Integration

To begin using LLM with SQL, the first thing you need to do is set up the right tools and libraries. A good idea is to create a virtual environment as your default setup. This practice will help avoid problems with dependencies and keep your project organized. In this separate environment, all the packages you need for your project will stay safe.

You will use LangChain, a framework that helps you build apps that work with Large Language Models (LLMs). LangChain links your chosen LLM to an external SQL database.

To create your chat application, you can pick from many good open-source LLMs. You can also use advanced models like GPT from OpenAI. The OpenAI libraries give you the tools you need to add these models to your Python setup easily.

Configuring Your Database for LLM Access

Once you have your tools ready, it is time to set up your SQL database so that the LLM can access it safely and in a controlled way. This guide refers to PostgreSQL, a strong and popular open-source relational database known for its reliability and rich feature set. The sample script below happens to connect to a MySQL instance instead. The same ideas apply to other SQL databases, and usually only the connection URI changes.

It’s really important to protect sensitive information. This includes items like database details. A good method to do this is by using environment variables. They keep this information away from your code. This makes your setup more secure.

To handle your environment variables, you need to make a .env file. This file usually stays in the main folder of your project. It gives you a simple place to set and manage important configuration details.
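To make the idea concrete, here is a small sketch of how values from a .env file might be turned into a connection URI. In a real project the python-dotenv package's load_dotenv() does the file reading for you. The tiny parser here only stands in for it so the example is self-contained. All variable names (DB_USER, DB_PASSWORD, and so on) and the sample values are assumptions for illustration.

```python
from urllib.parse import quote_plus


def parse_env_text(text):
    """Parse KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def build_db_uri(env):
    """Assemble a connection URI, percent-encoding the password."""
    password = quote_plus(env["DB_PASSWORD"])
    return (
        f"{env['DB_DRIVER']}://{env['DB_USER']}:{password}"
        f"@{env['DB_HOST']}:{env['DB_PORT']}/{env['DB_NAME']}"
    )


# Hypothetical .env contents; the real file should never be committed.
sample_env = """
# Database settings
DB_DRIVER=mysql+mysqlconnector
DB_USER=chat_reader
DB_PASSWORD=s3cret@pass
DB_HOST=localhost
DB_PORT=3306
DB_NAME=demo
"""

settings = parse_env_text(sample_env)
uri = build_db_uri(settings)
print(uri)
```

Encoding the password is the step that is easy to miss: a raw "@" inside a password would otherwise be read as the separator before the hostname, which is why it appears as "%40" in a connection URI.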

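```python
from dotenv import load_dotenv
from langchain.chains import create_sql_query_chain
from langchain_community.utilities import SQLDatabase
from langchain_openai import ChatOpenAI

# Load OPENAI_API_KEY (and any other settings) from the .env file.
load_dotenv()

# Connect to the database. "%40" is the URL-encoded "@" in the password.
# In a real project, build this URI from values in .env instead of hard-coding it.
db = SQLDatabase.from_uri("mysql+mysqlconnector://root:Codoid%40123@localhost:3306/demo")

# Sanity checks: confirm the dialect, the visible tables, and a sample query.
print(db.dialect)
print(db.get_usable_table_names())
result = db.run("SELECT * FROM worker_table LIMIT 10;")
print(result)

# temperature=0 keeps the generated SQL as deterministic as possible.
llm = ChatOpenAI(model="gpt-3.5-turbo", temperature=0)
chain = create_sql_query_chain(llm, db)

# Ask for a question, let the chain write the SQL, then run it.
question = input("Enter your question here:\n")
response = chain.invoke({"question": question})

print("SQL is:", response)
print("Result is:", db.run(response))
```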

Developing Your First Chat Interface with LLM and SQL

Now, you can start making your chat interface! You can create a basic command-line interface or a chat application on the web. The main goal is to allow users to enter their requests using natural language.

In the end, this interface connects human language to accurate database queries.

Designing a Simple Chat UI

Making a complete chat application can be tough. However, in this demo, we will keep things simple. We will mainly focus on sending a request to the LLM and showing the answer. The user interface (UI) for this version will be easy to understand.

A simple command-line interface is a great place to start. Picture an easy setup where people can type their questions in plain English.

This setup allows users to practice asking questions to the database in natural language.
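A command-line loop of this kind can be sketched in a few lines. The function names (chat_loop, echo_answer) are hypothetical, and the answer function is a stub that only echoes the question. In a real app it would call the LLM and the database. The read and write functions are passed in so the loop can be exercised with scripted input instead of a live keyboard.

```python
def chat_loop(answer, read=input, write=print):
    """Read questions until 'exit' or 'quit'; return how many were answered."""
    answered = 0
    while True:
        try:
            question = read("Ask a question (or 'exit'): ").strip()
        except EOFError:
            break  # input stream closed, for example Ctrl-D
        if question.lower() in {"exit", "quit"}:
            break
        if not question:
            continue  # ignore empty lines
        write(answer(question))
        answered += 1
    return answered


def echo_answer(question):
    """Stub standing in for the LLM + database call."""
    return f"(stub) You asked: {question}"


# Scripted session instead of real keyboard input.
scripted = iter(["How many workers are there?", "", "exit"])
outputs = []
count = chat_loop(
    echo_answer,
    read=lambda prompt: next(scripted),
    write=outputs.append,
)
print(count, outputs)
```

For interactive use, calling chat_loop(echo_answer) with the defaults reads from the terminal and prints each reply.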

Connecting the Chat Interface to the Database

Connecting your chat interface to the SQL database helps it run easily. This link lets the app send questions from the LLM to the database. Then, it gets the answers back.

An API, which stands for application programming interface, allows the chat interface to work well with the SQL server. It takes requests from the chat and turns them into commands that the SQL database can read.

After the database runs the query, the API sorts the database results. Then, it sends them back to the chat interface. This way, the user can see the results.
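The request flow described above (question in, SQL generated, query run, results shaped for the chat) might look like the following sketch. The function answer_question and the stub fake_generate_sql are hypothetical names. The stub returns a fixed query in place of a real LLM call, and SQLite stands in for the SQL server so the example runs anywhere.

```python
import sqlite3


def answer_question(conn, question, generate_sql):
    """Turn a question into SQL, run it, and return a chat-friendly payload."""
    sql = generate_sql(question)
    cursor = conn.execute(sql)
    columns = [description[0] for description in cursor.description]
    rows = [dict(zip(columns, row)) for row in cursor.fetchall()]
    return {"question": question, "sql": sql, "rows": rows}


def fake_generate_sql(question):
    """Stand-in for the LLM: always returns the same query."""
    return "SELECT COUNT(*) AS worker_count FROM worker_table"


# Small in-memory table with made-up rows.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE worker_table (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany(
    "INSERT INTO worker_table (name) VALUES (?)", [("Asha",), ("Ben",)]
)

reply = answer_question(conn, "How many workers are there?", fake_generate_sql)
print(reply)
```

Returning the generated SQL alongside the rows is a deliberate choice in this sketch: showing the query to the user makes wrong answers much easier to spot.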

Enhancing Your Chat Application with Advanced SQL Queries

As your chat app grows, make sure it can deal with harder questions. By learning how tables relate to each other and using more advanced SQL features, you help the LLM give better and more useful answers.

Vector databases provide a fresh way to handle similarity searches. Regular SQL databases may struggle with this task. For example, if a user asks a question that does not exactly match what is in the database, a vector database can still locate information that is similar in meaning. This gives better results and helps create a more enjoyable experience for users.
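The core idea behind similarity search can be shown without a vector database at all. In the sketch below, each piece of text is represented by a short list of numbers, and the closest match is the one whose vector points in the most similar direction (cosine similarity). The three-number vectors are invented for illustration. Real embeddings come from an embedding model and have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy "embeddings" (assumed values, not produced by any model).
documents = {
    "employee salary report": [0.9, 0.1, 0.0],
    "office holiday calendar": [0.1, 0.9, 0.1],
    "server maintenance log": [0.0, 0.2, 0.9],
}

# Pretend embedding of the question "how much do workers earn?"
query_vector = [0.8, 0.2, 0.1]

ranked = sorted(
    documents,
    key=lambda name: cosine_similarity(documents[name], query_vector),
    reverse=True,
)
best_match = ranked[0]
print(best_match)
```

The question shares no words with "employee salary report", yet that document ranks first because the vectors are close. That is the behavior an exact-match SQL WHERE clause cannot provide.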

Crafting Complex SQL Queries for More Dynamic Conversations

Improving your chat app to have better conversations means enhancing its ability to handle complex SQL queries. These queries do more than just retrieve basic data. They let the LLM perform tasks like merging data, grouping entries, and running subqueries. This offers you new ways to analyze data and have engaging discussions.

LLMs can generate complex SQL when they are given enough context, such as the table names, column names, and relationships. This lets them create queries that fit what users want, even when the questions are hard. With detailed queries, your chat application can collect data from several tables, do calculations, and provide better results to users.
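As an illustration of what "complex" means here, the following sketch answers the question "Which departments pay more than the company-wide average?" with a single query that joins two tables, groups the rows, and uses a subquery. The department table, the extra columns, and the sample rows are all assumptions for the example, and SQLite is used so it runs without a server.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE department (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE worker_table (
        id INTEGER PRIMARY KEY,
        name TEXT,
        salary REAL,
        department_id INTEGER REFERENCES department(id)
    );
    INSERT INTO department (id, name) VALUES (1, 'Engineering'), (2, 'Support');
    INSERT INTO worker_table (name, salary, department_id) VALUES
        ('Asha', 60000, 1), ('Ben', 80000, 1),
        ('Chen', 40000, 2), ('Dee', 44000, 2);
    """
)

# JOIN merges the tables, GROUP BY groups workers per department,
# and the subquery computes the company-wide average for comparison.
query = """
SELECT d.name AS department,
       COUNT(*) AS workers,
       AVG(w.salary) AS avg_salary
FROM worker_table AS w
JOIN department AS d ON d.id = w.department_id
GROUP BY d.name
HAVING AVG(w.salary) > (SELECT AVG(salary) FROM worker_table)
ORDER BY avg_salary DESC
"""

result = conn.execute(query).fetchall()
print(result)
```

A query like this is what the LLM is expected to write on the user's behalf. The user only types the question.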

Utilizing LLM to Interpret and Generate SQL Queries

At its heart, our chat application works well because the LLM connects common human language with the specific needs of the database. This is where it becomes interesting: the LLM serves as a smart interpreter.

When you ask a question, the language model looks at your words closely. It figures out what you want to know and then builds a SQL query. This SQL query presents your question in a way that the database can read.

The LLM can read and understand natural language. It can answer different types of questions. This means it can handle both simple queries and complex requests. Users can interact easily. They do not need to learn SQL.
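Two small helpers sit on either side of the model call in this kind of app: one builds the prompt from the schema and the question, and one cleans up the model's reply before it is sent to the database. The sketch below shows a possible shape for both. The function names, the prompt wording, and the "SQLQuery:" label are assumptions for illustration, not a fixed format of any library.

```python
import re

# Markdown code fence, built from single backticks to keep this example readable.
FENCE = "`" * 3


def build_prompt(schema, question, dialect="MySQL"):
    """Describe the tables to the model, then ask for one query."""
    tables = "\n".join(
        f"- {table}({', '.join(columns)})" for table, columns in schema.items()
    )
    return (
        f"You write {dialect} queries.\n"
        f"Tables:\n{tables}\n"
        f"Question: {question}\n"
        "Reply with a single SQL query and nothing else."
    )


def extract_sql(model_reply):
    """Strip an optional code fence, an optional label, and a trailing ';'."""
    pattern = re.escape(FENCE) + r"(?:sql)?\s*(.*?)" + re.escape(FENCE)
    fenced = re.search(pattern, model_reply, re.DOTALL | re.IGNORECASE)
    text = fenced.group(1) if fenced else model_reply
    text = re.sub(r"^\s*SQLQuery:\s*", "", text, flags=re.IGNORECASE)
    return text.strip().rstrip(";")


schema = {"worker_table": ["id", "name", "salary"]}
prompt = build_prompt(schema, "Who earns the most?")
print(prompt)

fenced_reply = FENCE + "sql\nSELECT name FROM worker_table;\n" + FENCE
print(extract_sql(fenced_reply))
```

Models do not always follow the "nothing else" instruction, so a cleanup step like extract_sql is a cheap safeguard before the query reaches the database.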

Troubleshooting Common Issues in LLM-SQL Chat Applications

Even with good planning, you may still face problems, especially at the start. This is normal. What’s important is being ready with solutions. This will help make the experience easy and fun for users.

Watch out for common problems, like incorrect SQL syntax in your queries.

Also, check for issues when connecting the LLM to the SQL database.

You can often fix these problems by using good error-handling techniques in your application’s code.
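One way to apply this advice is to wrap every generated query in a function that never lets a database exception reach the user. The sketch below uses SQLite and a hypothetical helper named run_generated_sql. With another database driver the exception classes will have different names, so treat the except clauses as an assumption tied to sqlite3.

```python
import sqlite3


def run_generated_sql(conn, sql):
    """Run a query; return (True, rows) or (False, friendly_message)."""
    try:
        rows = conn.execute(sql).fetchall()
    except sqlite3.OperationalError as exc:
        # Covers syntax errors, unknown tables, and unknown columns in SQLite.
        return False, f"The generated query could not be run: {exc}"
    except sqlite3.Error as exc:
        # Any other database-level failure.
        return False, f"Database error: {exc}"
    return True, rows


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE worker_table (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("INSERT INTO worker_table (name) VALUES ('Asha')")

good = run_generated_sql(conn, "SELECT name FROM worker_table")
typo = run_generated_sql(conn, "SELEC name FROM worker_table")
missing = run_generated_sql(conn, "SELECT name FROM no_such_table")

print(good)
print(typo)
print(missing)
```

The error message is also useful input for a retry: sending it back to the LLM together with the failed query often produces a corrected one.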

Debugging Connection Problems Between LLM and SQL Databases

Connection issues happen often with any app that connects to a database. LLM-SQL chat apps also face these problems. You might notice slow responses, receive error messages, or struggle to connect to the database at all.

To fix connection problems, you should start by checking the connection string your app uses for the SQL server. Make sure the hostname or IP address, port number, database name, username, and password are all correct.

Wrong permissions can cause access problems. Make sure the user account linking to the database has the right privileges. This is necessary to run the SQL queries made by the LLM.
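The connection-string checklist above can be partly automated. The following sketch splits a URI of the form driver://user:password@host:port/database with Python's standard urllib.parse and reports anything that looks missing. The function name and the sample URIs are hypothetical, and the check only inspects the text of the URI. It does not contact the server or verify permissions.

```python
from urllib.parse import urlsplit


def check_connection_uri(uri):
    """Return a list of problems found in a database connection URI."""
    parts = urlsplit(uri)
    problems = []
    if not parts.scheme:
        problems.append("missing driver")
    if not parts.hostname:
        problems.append("missing hostname or IP address")
    try:
        if parts.port is None:
            problems.append("no port number given")
    except ValueError:
        problems.append("port is not a valid number")
    if not parts.username:
        problems.append("missing username")
    if parts.password is None:
        problems.append("missing password")
    elif "@" in parts.password:
        problems.append("password contains a raw '@'; encode it as %40")
    if not parts.path.strip("/"):
        problems.append("missing database name")
    return problems


ok = check_connection_uri("mysql+mysqlconnector://root:p%40ss@localhost:3306/demo")
raw_at = check_connection_uri("mysql+mysqlconnector://root:p@ss@localhost:3306/demo")
sparse = check_connection_uri("mysql+mysqlconnector://root@localhost/")

print(ok)
print(raw_at)
print(sparse)
```

A missing port is not always an error, since most drivers fall back to a default, but listing it makes it easy to confirm that the default is the port you intended.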

Optimizing Performance for Real-time Interactions

In real-time chats, users want quick answers. That is why it is important to improve performance. The goal is to keep your chat application fast and responsive. It should be able to handle many user requests to the Postgres database without lagging.

Using the right methods can help your app show results to the user much quicker.

| Optimization Technique | Description |
| --- | --- |
| Database Indexing | Creating indexes on frequently queried columns in your Postgres database can dramatically expedite data retrieval, making your queries faster. |
| Query Optimization | Efficient queries are crucial. Carefully analyze your queries and make use of database tools to identify areas for improvement. |
| Caching | Implementing a caching mechanism can significantly boost performance. |
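The sketch below tries out all three techniques on a small scale. It uses SQLite rather than Postgres so it can run without a server, so the query-plan text is SQLite's own, and EXPLAIN in Postgres produces different output. The table contents, the index name, and the cached_count helper are assumptions for illustration.

```python
import sqlite3
from functools import lru_cache

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE worker_table (id INTEGER PRIMARY KEY, name TEXT, department TEXT)"
)
conn.executemany(
    "INSERT INTO worker_table (name, department) VALUES (?, ?)",
    [(f"worker{i}", "Engineering" if i % 2 else "Support") for i in range(1000)],
)


def query_plan(sql):
    """Query optimization: ask the database how it intends to run a query."""
    return " ".join(row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql))


lookup = "SELECT COUNT(*) FROM worker_table WHERE department = 'Support'"

# Indexing: compare the plan before and after creating an index.
plan_before = query_plan(lookup)
conn.execute("CREATE INDEX idx_worker_department ON worker_table (department)")
plan_after = query_plan(lookup)

# Caching: remember answers so repeated questions skip the database.
calls = {"database": 0}


@lru_cache(maxsize=128)
def cached_count(department):
    calls["database"] += 1
    row = conn.execute(
        "SELECT COUNT(*) FROM worker_table WHERE department = ?", (department,)
    ).fetchone()
    return row[0]


first = cached_count("Support")
second = cached_count("Support")  # served from the cache

print(plan_before)
print(plan_after)
print(first, second, calls["database"])
```

A cache like this returns stale answers once the underlying data changes, so in practice it needs an expiry time or a way to clear it (here, cached_count.cache_clear()) after writes.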

Conclusion

In conclusion, learning how to combine LLM and SQL for your chat database projects can create fun and engaging apps. First, it is important to grasp the basics. Next, set up your workspace. Make your designs easy for users. Then, enhance features by using advanced SQL queries. Fixing common problems and improving performance will lead to smoother interactions. Use LLM and SQL’s power to make your chat apps even better. If you want to know more about this great topic, visit our FAQ section for tips and help.

Frequently Asked Questions

How do I secure my LLM-SQL chat application?

To keep your LLM-SQL chat application safe, you need a strong plan. First, store important things, like your OpenAI API key and database passwords, in a safe place. Do not show these details in your code. You also need to protect your tokens. It is important to have good steps for authentication and authorization. This helps control access and stop unauthorized use of your application.
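Two of these ideas can be sketched in code: reading secrets from the environment and failing early when they are missing, and refusing to run generated SQL that is not a plain read query. The helper names are hypothetical. The keyword filter is deliberately coarse (it will also reject a harmless query that merely mentions a word like "update" in a string), so it should be treated as a second line of defense behind a database account that has read-only privileges.

```python
import os
import re

# Statements a read-only chat app should never run.
FORBIDDEN = re.compile(
    r"\b(insert|update|delete|drop|alter|create|truncate|grant|revoke)\b",
    re.IGNORECASE,
)


def is_safe_select(sql):
    """Accept a single SELECT (or WITH ... SELECT) statement only."""
    statement = sql.strip().rstrip(";").strip()
    if ";" in statement:
        return False  # more than one statement
    if not re.match(r"(select|with)\b", statement, re.IGNORECASE):
        return False
    return FORBIDDEN.search(statement) is None


def require_secret(name, environ=os.environ):
    """Read a secret from the environment; fail loudly if it is absent."""
    value = environ.get(name)
    if not value:
        raise RuntimeError(f"Missing required setting: {name}")
    return value


print(is_safe_select("SELECT * FROM worker_table LIMIT 10;"))
print(is_safe_select("DROP TABLE worker_table"))
print(is_safe_select("SELECT 1; DELETE FROM worker_table"))

# A fake environment is passed in here so the example does not need a real key.
fake_environ = {"OPENAI_API_KEY": "test-key"}
print(require_secret("OPENAI_API_KEY", fake_environ))
```

Calling require_secret at startup means a missing key is reported immediately, rather than on the first user question.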

Can the LLM-SQL setup handle multiple users concurrently?

Yes, if you set up your LLM-SQL application the right way, it can serve many users at the same time. You can do this by handling requests asynchronously and by using database connection pooling. These methods help create a strong and scalable solution, so you can serve a lot of users at once without slowing down.

The post How to Chat with SQL Database Using LLM appeared first on Codoid.
