LLMs are gaining traction as workforces across domains explore artificial intelligence and automation to plan operations and make crucial decisions. Generative and foundation models are therefore relied on for multi-step reasoning tasks, with the aim of planning and executing on par with humans. Since this aspiration has yet to be achieved, we need extensive, dedicated benchmarks to test models' reasoning and decision-making. Given how recent generative AI is and how quickly LLMs have evolved, it is challenging to develop validation approaches that keep pace with LLM innovation. For subjective capabilities such as planning in particular, the completeness of a validation metric may remain questionable. For one, even if a model ticks every checkbox for a goal, can we ascertain its ability to plan? Second, in practical scenarios there is rarely a single plan; there are multiple plans and alternatives, which makes evaluation harder. Fortunately, researchers across the globe are working to improve LLMs for industrial planning. Thus, we need a good benchmark that tests whether LLMs have achieved sufficient reasoning and planning capabilities or whether that remains a distant dream.

ACPBench is an LLM reasoning benchmark developed by IBM Research, consisting of 7 reasoning tasks over 13 planning domains. It covers the reasoning tasks necessary for reliable planning and is compiled from a formal language, so more problems can be generated at scale without human intervention. The name ACPBench derives from the core subject of its reasoning tasks: Action, Change, and Planning. The tasks vary in complexity: a few require single-step reasoning, while others need multi-step reasoning. Questions are posed in Boolean and multiple-choice (MCQ) formats across all 13 domains (12 are well-established benchmarks in planning and reinforcement learning, and the last was designed from scratch). Previous LLM planning benchmarks were limited to only a few domains and were difficult to scale.

Besides covering multiple domains, ACPBench differs from its contemporaries in that it generates its datasets from formal Planning Domain Definition Language (PDDL) descriptions; this is what makes it possible to create correct problems and scale them without human intervention.
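To make the idea of generating questions from a formal description concrete, here is a minimal Python sketch. It is not ACPBench's actual generator and does not parse PDDL; the toy action model, the fact strings, and the function name `make_boolean_questions` are all assumptions made for illustration. The point it shows is that, once actions have formal preconditions, the answer to each question can be computed rather than hand-annotated.

```python
from typing import Dict, FrozenSet, List, Tuple

# Hypothetical toy action model (an assumption, not taken from ACPBench):
# each action name maps to the set of facts that must hold before it can run.
PRECONDITIONS: Dict[str, FrozenSet[str]] = {
    "move from room A to room B": frozenset({"the robot is in room A"}),
    "move from room B to room A": frozenset({"the robot is in room B"}),
    "pick up the key": frozenset(
        {"the robot is in room B", "the key is in room B"}
    ),
}


def make_boolean_questions(
    state: FrozenSet[str], preconditions: Dict[str, FrozenSet[str]]
) -> List[Tuple[str, bool]]:
    """Build (question, answer) pairs from a state and a formal action model.

    The answer is derived from the model: an action is applicable exactly
    when all of its preconditions are contained in the current state.
    """
    context = "; ".join(sorted(state))
    questions = []
    for action, pre in sorted(preconditions.items()):
        text = f"Current state: {context}. Is the action '{action}' applicable?"
        questions.append((text, pre <= state))
    return questions


# Example state: the robot starts in room A and the key lies in room B.
state = frozenset({"the robot is in room A", "the key is in room B"})
questions = make_boolean_questions(state, PRECONDITIONS)

for text, answer in questions:
    print(text, "->", "yes" if answer else "no")
```

Because both the question text and the ground-truth answer come from the same formal model, new states or new domains yield new, automatically labeled questions.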

The seven tasks presented in ACPBench are:

Applicability – Determine which of the available actions are valid in a given situation.

Progression – Understand the outcome of an action or change.

Reachability – Check whether the end goal can be achieved from the current state by taking multiple actions.

Action Reachability – Identify whether the prerequisites for executing a specific action can be satisfied.

Validation – Assess whether a specified sequence of actions is valid, applicable, and successfully achieves the intended goal.

Justification – Identify whether an action in a plan is necessary.

Landmarks – Identify subgoals that are necessary to achieve the goal.
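Several of the tasks above have a simple operational reading in a STRIPS-style model, where a state is a set of facts and each action has preconditions, add effects, and delete effects. The following Python sketch illustrates applicability, progression, validation, and reachability under that assumption. The toy domain (a robot, two rooms, a key) and every name in the code are hypothetical and are not taken from ACPBench.

```python
from collections import deque
from typing import FrozenSet, Iterable, List, NamedTuple

State = FrozenSet[str]


class Action(NamedTuple):
    name: str
    pre: FrozenSet[str]     # facts required before the action
    add: FrozenSet[str]     # facts made true by the action
    delete: FrozenSet[str]  # facts made false by the action


def applicable(state: State, action: Action) -> bool:
    """Applicability: all preconditions hold in the state."""
    return action.pre <= state


def progress(state: State, action: Action) -> State:
    """Progression: the state that results from applying the action."""
    if not applicable(state, action):
        raise ValueError(f"{action.name} is not applicable")
    return (state - action.delete) | action.add


def validate(state: State, plan: List[Action], goal: State) -> bool:
    """Validation: every step is applicable and the final state meets the goal."""
    for action in plan:
        if not applicable(state, action):
            return False
        state = progress(state, action)
    return goal <= state


def reachable(state: State, actions: Iterable[Action], goal: State) -> bool:
    """Reachability: breadth-first search over states for one satisfying the goal."""
    actions = list(actions)
    seen = {state}
    queue = deque([state])
    while queue:
        current = queue.popleft()
        if goal <= current:
            return True
        for action in actions:
            if applicable(current, action):
                nxt = progress(current, action)
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append(nxt)
    return False


# Hypothetical toy domain: a robot moves between rooms A and B to fetch a key.
move_a_b = Action("move_a_b", frozenset({"at_a"}), frozenset({"at_b"}), frozenset({"at_a"}))
move_b_a = Action("move_b_a", frozenset({"at_b"}), frozenset({"at_a"}), frozenset({"at_b"}))
pick_key = Action("pick_key", frozenset({"at_b", "key_at_b"}), frozenset({"has_key"}), frozenset({"key_at_b"}))
actions = [move_a_b, move_b_a, pick_key]

init: State = frozenset({"at_a", "key_at_b"})
goal: State = frozenset({"has_key", "at_a"})

print(applicable(init, pick_key))                             # False: robot is not in room B
print(sorted(progress(init, move_a_b)))                       # ['at_b', 'key_at_b']
print(validate(init, [move_a_b, pick_key, move_b_a], goal))   # True
print(reachable(init, actions, goal))                         # True
```

Exhaustive search like this only works on tiny state spaces; it is meant to pin down what each question asks, not to suggest how the benchmark computes its answers.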

Twelve of the thirteen domains that these tasks span are well-known classical planning domains such as BlocksWorld, Logistics, and Rovers; the last is a new domain that the authors name Swap. Each of these domains has a formal representation in PDDL.

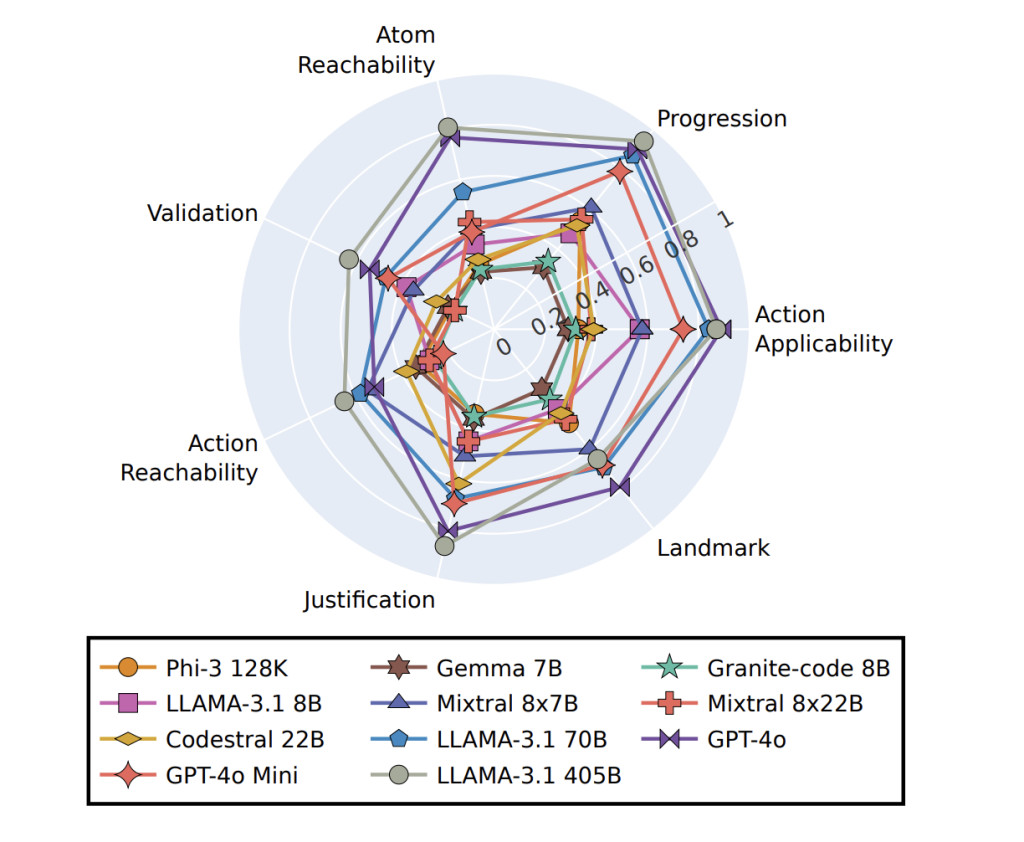

ACPBench was tested on 22 open-source and frontier LLMs, including GPT-4o, LLAMA models, and Mixtral. The results showed that even the best-performing models (GPT-4o and LLAMA-3.1 405B) struggled with specific tasks, particularly action reachability and validation. Some smaller models, like Codestral 22B, performed well on Boolean questions but lagged on multiple-choice questions. GPT-4o's average accuracy went as low as 52 percent on these tasks. After the evaluation, the authors also fine-tuned Granite-code 8B, a small model, which led to significant improvements. The fine-tuned model performed on par with massive LLMs and generalized well to unseen domains.

ACPBench’s findings showed that LLMs underperform on planning tasks irrespective of size and complexity. However, with skillfully crafted prompts and fine-tuning techniques, they can perform better at planning.

Check out the Paper, GitHub and Project. All credit for this research goes to the researchers of this project.

The post IBM Researchers ACPBench: An AI Benchmark for Evaluating the Reasoning Tasks in the Field of Planning appeared first on MarkTechPost.