This is a guest post by Anatoly Mikhaylov, Eftychia Kitsou, and Heath Skarlupka, Software Engineers at Zendesk; along with Ben Peterson, Randeep Singh, and Vivek Singh, AWS Technical Account Managers.

Zendesk is a customer experience software company that makes it effortless for organizations and customers to create connections. Its products power billions of conversations by focusing on simplifying the complexity of doing business and helping them move fast and adapt to changing customer needs and expectations. The company was founded in Copenhagen, Denmark, in 2007, built and grown in San Francisco, California, and today employs more than 6,000 people across the world.

This post is a follow-up to How Zendesk tripled performance by moving a legacy system onto Amazon Aurora and Amazon Redshift. Zendesk Explore is a reporting and analytics tool designed to help businesses analyze, understand, and share their information. In the 4 years since Zendesk Explore data stores were built on Amazon Aurora and Amazon Redshift, the dataset has quadrupled in size. In conjunction with rapid product feature growth, the growth of the dataset presented new challenges and created new opportunities for the engineering teams to address. Zendesk Explore achieved its performance and cost reduction goals through upgrading to the latest version of Amazon Aurora PostgreSQL-Compatible Edition.

In this post, we go over the techniques we used to plan and upgrade major versions of Aurora PostgreSQL databases for Zendesk Explore with minimal customer downtime. We also discuss the performance optimizations we performed, the cost savings we achieved, and how we accomplished all of this within a period of 6 months. AWS Technical Account Managers played a significant role in helping us achieve these goals in a short period of time. The upgrade was performed successfully and without customer downtime.

The key achievements of this project included:

Upgrading the Aurora PostgreSQL clusters

Right-sizing the instance type from r6g.16xlarge to r7g.4xlarge, coupled with I/O optimized storage, transitioning to the AWS Graviton3 processor instance types

Reducing technical debt and enhancing application query performance

Stabilizing the use of CPU and memory underlying hardware resources

Increasing customer satisfaction and substantially improving the cost-performance ratio

Problem

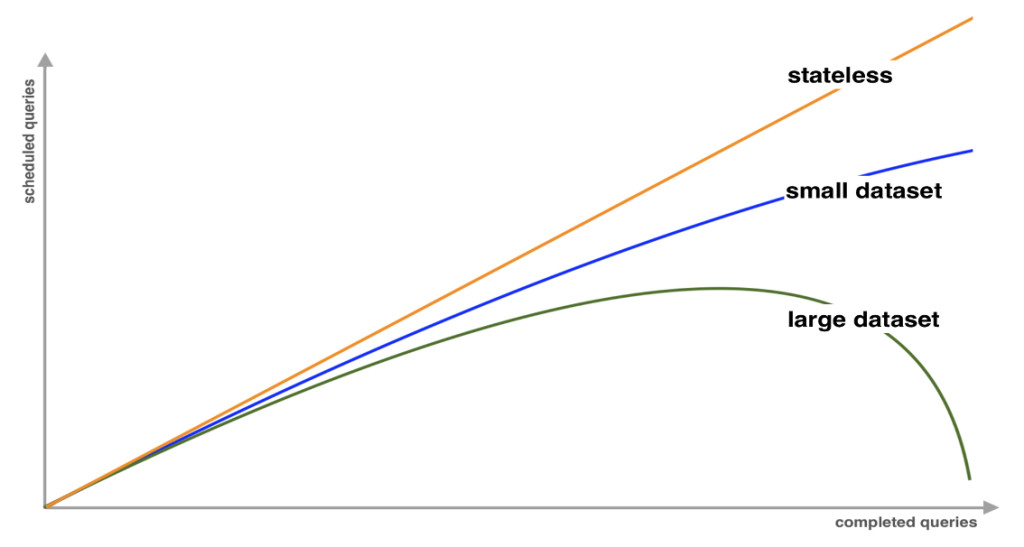

Relational data stores’ primary performance limitation is how query performance depends on the amount of data queries have to scan through. Rapid dataset growth leads to query performance challenges. Techniques and tools that worked on smaller datasets might no longer be effective on larger ones, and relational database performance problems can quickly become application performance issues. Vertical scaling isn’t always the answer because, in some situations, scaling hides the problems instead of solving them, as shown in the following figure. Problems that occur infrequently are harder to detect and remediate.

Amdahl’s Law states that “The overall performance improvement gained by optimizing a single part of a system is limited by the fraction of time that the improved part is actually used.â€

According to this law, stateless applications and applications with small datasets tend to come with predictable query runtime. However, when the dataset becomes too large, events that should happen in series (not in parallel) begin to dominate and the workload becomes very I/O intensive, meaning that most of the time is spent waiting for I/O rather than doing computation work. When a data store engine spends most of the time waiting, giving more resources won’t improve response time, it will only help to increase throughput. The question is: How valuable are long-running queries that scan large datasets?

Little’s Law states that “The average number of customers in a system (over some interval) is equal to their average arrival rate, multiplied by their average time in the system… The average time in the system is equal to the average time in queue plus the average time it takes to receive service.â€

This law applies to I/O-intensive workloads when the database doesn’t spend too much time on computationally heavy tasks, but rather, significant time is spent waiting for I/O, and this becomes the dominant factor. Without a thorough analysis and assessment of the workload and dataset, it might seem that database upscaling is the solution to the problem.

However, for Zendesk Explore’s workload and dataset, we found that the opposite approach—downscaling—provides a solution. This is because database performance often follows the hockey stick curve when a large dataset meets a complex query pattern. A formerly fast query begins to slow down together with dataset growth, and vice versa, when the query is rewritten and optimized, it can run faster on smaller instance types, as shown in the following figure.

When the dataset is too large and the query pattern is too complex, the response time on different instances can be illustrated as shown in the following figure.

Although volume of data isn’t a variable we can easily manipulate, the long-running suboptimal queries that scan through a large dataset are something we can definitely identify and remediate.

There won’t be enough resources to fulfill certain types of query patterns, even with large instance types. It’s only a matter of time until the dataset grows too large. Suboptimal queries are hard to detect in two different scenarios:

When the dataset is too small

When database instance is too large

However, after we detect this type of queries, we often find a solution to obtain the same data, either by using an alternative query or a different approach. The technique we have found useful for when the dataset is large and query patterns are unpredictable can be broken down into three steps: right-sizing, detection, and reaction. To better explain this method, we discuss some common database performance myths.

Myth #1: Spiky workloads can scale

Zendesk Explore provides powerful, prebuilt reports for assessing key aspects of customer interactions and support resources. Additionally, it allows users to construct their own tailored reports when specialized data insights are required. Typically, Zendesk Explore handles online analytical processing (OLAP) workloads, with significant import and bulk jobs run to insert reporting data.

However, the integration of Explore into business systems presents challenges when managing workloads, specifically spiky ones that often mask underlying issues. During peak times, it’s not unusual for systems to be scaled up to accommodate these loads, but this approach can overlook sporadic and infrequent long-running queries that conceal systemic application problems. For instance, combining OLAP and OLTP (online transaction processing) workloads can have adverse effects, such as a single long-running query potentially freezing the entire database engine upon completion, or striving to maintain ACID compliance and preserving data snapshots during the runtime of inefficient queries. Long count queries on vast datasets are common culprits; they can run for hours, and when complete, they might leave the database in an anomalous state. This raises the question of whether such queries should be run at all, or if they can be offloaded.

To mitigate these issues, different isolation levels can be employed, and segregating OLAP workloads from OLTP proves beneficial—unlike mere upscaling. When databases grow in size, performance improvements can often be achieved only by saturating relational databases to their limits, a technique that typically uses a cloned database and replaying traffic. This process of saturation is considered necessary to unveil and solve the fundamental performance problems of databases.

One of these types of queries even resulted in overloading a 16xlarge database cluster, which is a large infrastructure footprint hardware with 64-cpu cores, shown in the following figure.

Myth #2: Upscaling can help to run queries faster

The second myth highlights that a relational database doesn’t inherently manage resources or apply backpressure. Rather, it attempts to accomplish all the tasks that it’s instructed to perform. By default, queries are single-threaded; however, parallel queries can be employed but might lead to issues of their own, such as excessive memory usage. Generally, having more CPU cores won’t make individual queries run faster; instead, they enable more users to run more queries concurrently.

Additionally, increased concurrency, even on a system with massive parallel processing capabilities, can lead to contention and contention points for shared resources such as memory pages and mutexes. Adding more CPU cores can, in some circumstances, exacerbate these issues.

Myth #3: Right-sizing can lead to degraded performance

This leads to a question: Do all queries need to be responded to? What if a customer’s action initiates a query so complex that it will most likely be unable to complete, or a query that times out before the customer even sees the response?

The Pareto principle, also known as the 80/20 rule, the law of the vital few, and the principle of factor sparsity, states that for many outcomes, roughly 80% of consequences come from 20% of causes (the “vital fewâ€).

When we apply the Pareto principle to the problem, we can see it from different perspective:

20% of queries fulfill 80% of customer needs

20% of queries consume 80% of database resources

Improving 20% of queries can cut 80% of infrastructure costs

Talking about database performance from a cost optimization perspective prompts a different kind of conversation: What would break if the database was only half its current size? What if it were a quarter of its size? These are precisely the questions that helped us move forward on this project.

The answers to these questions don’t necessarily need to be provided immediately. However, posing these questions encourages more creative thinking and brings previously unimportant topics into the spotlight. For instance, what would be the impact of not running a certain query? In most cases, we found that this question hadn’t been asked before. We continue to identify and examine a series of similar queries, fostering a right-sizing mindset until we achieve a flat utilization of database resources.

Flat resource utilization is essential for enabling effective database right-sizing. Typically, customers don’t even notice when changes have been implemented on the backend.

Implementation

Our initial goal was to upgrade the Aurora PostgreSQL clusters, aiming for minimal downtime and without disrupting our customers. During the upgrade process, we recognized opportunities for improving the database configuration and changing several configuration options to new defaults that the upgraded version comes with. We observed instances experiencing stability issues and identified areas where query performance could be enhanced. Additionally, we noted the substantial costs associated with maintaining several r6g.16xlarge instances. We realized that our capacity demands had surpassed the capabilities of the 16xlarge configuration, indicating a need for optimization and right-sizing. Consequently, we embarked on extensive query optimization work. Our primary challenge and commitment was to make the system operational on smaller instances—right-sizing was a strategic decision taken at the outset of the project.

As a first step, we thoroughly reviewed the new features and differences between the current and target version. Upon identifying that there were no breaking changes between versions, we proceeded with performance benchmarking. We used Amazon Aurora Fast Database Cloning to create a clone, where we replayed the production queries on it with the help of Amazon Simple Queue Service (Amazon SQS). We performed thorough benchmarking with various parameter groups and instance types. This was instrumental in allowing us to observe multiple versions of Aurora PostgreSQL-Compatible running the same queries and to understand how different parameter configurations influence database health and query performance.

We performed several upgrade tests on clones created with Aurora Fast Database Cloning and crafted a runbook, aiming to minimize downtime. During our initial tests, the total downtime reached 8 hours.

Each of our Aurora PostgreSQL clusters had between approximately 900 thousand to 1 million tables, which is a significant number. During our upgrade testing, we discovered that the in-place upgrade downtime was highly correlated with the total number of tables. As a result, we decided to revisit any obsolete and unused tables and drop them. Additionally, before each upgrade, we made a point of dropping all temporary tables. By doing this, we managed to reduce the number of tables by half right before each upgrade and consequently cut our total downtime by half!

During the upgrade tests, we also identified that the versions of some installed extensions, such pg_repack, weren’t supported in the target Amazon Aurora PostgreSQL and had to be upgraded individually. Specifically for the pg_repack extension, we had to drop it before the ugprade and reinstall a supported version post-upgrade.

In parallel with the preparation of the upgrade runbook, we aimed to enhance our databases by making them run faster and more optimally, and to safeguard them. Therefore, we decided to lower the statement_timeout value and log our slow queries by reducing the log_min_duration_statement. In many cases, long-running queries are caused by poorly optimized SQL queries or inefficient indexes. By setting a low statement_timeout value, we were able to identify these problematic queries and take steps to optimize them. This greatly assisted us in eventually right-sizing our database instances to r7g.4xlarge. Moreover, because our customers are exclusively engaged in reading data, we decided to introduce one additional reader instance per cluster. Our initial topology consisted of one writer and one reader, which we altered to one writer and two readers.

In Zendesk Explore, customers can’t write any data; they can only read their aggregated data (reporting) that comes from Zendesk Suite. Consequently, the extract, transform, and load (ETL) process is the sole writer to these databases. To minimize disruptions to customer activities, we formulated a strategy predicated on traffic redirection to a database clone.

During off-peak hours for each respective cluster—with clusters situated in the Europe and US AWS Regions—we temporarily halted the ETL processes, not exceeding a duration of 3–4 hours. Subsequently, using Aurora cloning, we generated a clone of the database and redirected the customer read traffic to the clone by modifying the DNS entry in Amazon Route 53. Following the successful clone creation, we commenced the in-place upgrade on the original cluster. Upon completion of the upgrade, we redirected read traffic back to the original cluster and proceeded to evaluate the performance of the upgraded system. If the upgraded cluster met our performance criteria, we would then reinstate the write traffic. If issues arose, we had the clone ready to move to production and start all services there as part of our rollback plan.

As a final step after each upgrade, we reinstalled the previously deleted extensions with their new supported versions and initiated a manual ANALYZE of all our tables.

Results and learnings

Flat CPU and memory utilization indicate that it’s safe to right-size. To effectively right-size databases, we identified several factors that have the most significant influence on database health and performance:

Reducing I/O-intensive workloads improved the uptime of reader nodes – By using the technique of right-sizing, detection, reaction, we identified specific queries that could be rewritten on the server side to yield the same data to the customer, but in a more performant way. Through Amazon RDS Performance Insights and Amazon CloudWatch Logs, we extracted queries that consumed the most memory and often preceded some reader nodes restarts. By addressing these types of queries, we stabilized the use of hardware resources and made sure cold start scenarios no longer occurred. This is crucial because the pg_stat statistics counter is reset during reader nodes’ uptime reset scenarios (an immediate shutdown, a server crash, or starting from a backup or point-in-time recovery). With the newly introduced topology of one writer to two readers, a reset in uptime generally goes unnoticed by the client. However, when a query times out, we attempt a retry and serve its result from another reader. In such cases, our application workers experienced increased I/O wait times. Therefore, before proceeding with right-sizing, we had to first eliminate the factors leading to uptime resets as demonstrated in the following figure.

Improving the use of freeable memory – Improving the use of freeable memory is crucial, because not all memory is allocated for database needs. Some must be reserved for the underlying operating system (OS) and system tasks. Refining the Aurora PostgreSQL parameter group configuration to match the workload has helped stabilize memory usage patterns. The AWS teams conducted extensive work to help us find the optimal balance between key Aurora PostgreSQL parameters such as work_mem, max_parallel_workers_per_gather, log_temp_files, seq_page_cost, and random_page_cost. Those were the primary parameters, but we also made adjustments to approximately two dozen secondary yet important parameters. There is no singular perfect database configuration, but there exists a favorable setup that can align well with specific query patterns and the associated workload. As a result, as shown in the following figure, the occurrences of Aurora freeable memory dips became less frequent and had reduced impact on the database cluster’s health and performance.

Optimizing CPU tasks – CPU cycles are often consumed by maintenance tasks, such as autovacuum processes. By reconfiguring the autovacuum settings to make it less aggressive, and by performing upgrades with database clones followed by pg_dump and pg_restore, we managed to reset the large backlog of tuples and maintain a flat growth pattern, as shown in the following figure, where the Y-axis represents number of dead tuples.

Significant cost savings in AWS Cloud computing costs – This upgrade journey enabled us to gain a deeper understanding of our workload, refine our queries, implement effective changes in database parameters, decrease our technical debt, and reconsider our database topology—all leading to a significant reduction in costs. During the performance benchmarking phase, we also tested the AWS Graviton3 processors (r7g database instances) and observed a significant performance improvement. As a result, we decided to adopt r7g instances for future use. The following figure demonstrates the project’s timeline and instance type transition.

Additionally, with guidance from AWS, we identified that opting for Amazon Aurora I/O Optimized would be an ideal choice for our goal to further reduce costs. Several steps were taken: adding an extra reader instance, transitioning to AWS Graviton3 and Amazon Aurora I/O Optimized, and right-sizing to 4xlarge instances. Our performance metrics improved considerably, leaving us well-positioned and more confident than ever as we plan our further upgrades.

Conclusion

In this post, we showed you how Zendesk successfully upgraded their Aurora PostgreSQL clusters with minimal customer downtime, while also optimizing queries, right-sizing instances, and reducing technical debt. We demonstrated the techniques used to plan and implement the upgrade, including performance benchmarking, query optimization, and database configuration improvements. By adopting AWS Graviton3 processors, I/O optimized storage, and smaller r7g.4xlarge instances, Zendesk achieved significant cost savings and improved the cost-performance ratio of their Zendesk Explore product.

If you have any questions or want to share your own experiences with upgrading and optimizing Aurora PostgreSQL-Compatible, leave a comment or visit the AWS Database Blog to learn more about the latest best practices and customer success stories.

Special thanks to Zendesk’s engineering efforts of Bharat Sahu, Julien Champ, Jack Bracken, Neilson Zanotti, Amit Agarwal, Theo Gauchoux, and Théo Jeanjean. We also extend our gratitude to Zendesk’s engineering leadership for their support and guidance: Libo Cannici, Scott Ullrich, Daniel Rieder, Kalyan Wunnava, Hasan Ovuc, Ben Fitzgerald, Manus Gallagher, Clément Compas, and Raju Divakaran.

About the Authors

Anatoly Mikhaylov, Senior Staff Software Engineer, Performance and Capacity at Zendesk, is involved in scaling the system reliably and cost-efficiently. There is nothing he enjoys more than right-sizing databases, optimizing the query patterns, and providing better customer experience.

Eftychia Kitsou is a Sr DBA on the Relational Storage team at Zendesk, with a focus on improving MySQL and PostgreSQL databases. She is passionate about performance tuning and data management.

Heath Skarlupka is a Senior Staff Software Engineer on the FinOps team at Zendesk. Heath’s passion is optimizing cloud infrastructure to improve reliability and total cost of ownership. In his free time, Heath likes to hike at state parks and barbeque.

Ben Peterson is a Senior Technical Account Manager with AWS. He is passionate about enhancing the developer experience and driving customer success. In his role, he provides strategic guidance on using the comprehensive AWS suite of services to modernize legacy systems, optimize performance, and unlock new capabilities.

Randeep Singh is a Technical Account Manager at AWS, where he works closely with enterprise customers to help them design, implement, and optimize their cloud infrastructure. With his extensive experience in cloud computing and his deep understanding of AWS services, Randeep helps customers achieve their business goals by taking advantage of the power and flexibility of the AWS platform.

Vivek Singh is a Principal Database Specialist Technical Account Manager with AWS, focusing on Amazon RDS for PostgreSQL and Amazon Aurora PostgreSQL engines. He works with enterprise customers, providing technical assistance on PostgreSQL operational performance and sharing database best practices. He has over 17 years of experience in open source database solutions and enjoys working with customers to help design, deploy, and optimize relational database workloads on AWS.

Source: Read More