Prism is a Laravel package for integrating Large Language Models (LLMs) into your applications. Its driver pattern gives you a unified interface to popular AI providers, so you can switch between them easily. At the time of writing, Prism ships with three built-in providers (Anthropic, OpenAI, and Ollama) and lets you create custom drivers:

    // Anthropic
    $prism = Prism::text()
        ->using('anthropic', 'claude-3-5-sonnet-20240620')
        ->withSystemPrompt(view('prompts.nyx'))
        ->withPrompt('Explain quantum computing to a 5-year-old.');

    $response = $prism();

    echo $response->text;

    // OpenAI
    $prism = Prism::text()
        ->using('openai', 'gpt-4o')
        ->withSystemPrompt(view('prompts.nyx'))
        ->withPrompt('Explain quantum computing to a 5-year-old.');

    // Ollama
    $prism = Prism::text()
        ->using('ollama', 'qwen2.5:14b')
        ->withSystemPrompt(view('prompts.nyx'))
        ->withPrompt('Explain quantum computing to a 5-year-old.');
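To make the driver idea concrete, here is a minimal, framework-free sketch of the general pattern. It is not Prism's actual implementation: `TextDriver`, `DriverManager`, `extend()`, and the two fake drivers are hypothetical names invented for illustration, and the drivers return canned strings instead of calling a real API.

```php
<?php

// Hypothetical contract: every provider driver turns a prompt into text.
interface TextDriver
{
    public function generate(string $model, string $prompt): string;
}

// Stand-in drivers that return canned strings instead of calling a real API.
final class FakeAnthropicDriver implements TextDriver
{
    public function generate(string $model, string $prompt): string
    {
        return "[anthropic:{$model}] {$prompt}";
    }
}

final class FakeOllamaDriver implements TextDriver
{
    public function generate(string $model, string $prompt): string
    {
        return "[ollama:{$model}] {$prompt}";
    }
}

// Resolves a driver by name, so calling code never depends on a concrete provider.
final class DriverManager
{
    /** @var array<string, callable> factories keyed by driver name */
    private array $factories = [];

    // Registers a custom driver factory under a name (hypothetical method).
    public function extend(string $name, callable $factory): void
    {
        $this->factories[$name] = $factory;
    }

    public function driver(string $name): TextDriver
    {
        if (!isset($this->factories[$name])) {
            throw new InvalidArgumentException("Driver [{$name}] is not registered.");
        }

        return ($this->factories[$name])();
    }
}

$manager = new DriverManager();
$manager->extend('anthropic', fn (): TextDriver => new FakeAnthropicDriver());
$manager->extend('ollama', fn (): TextDriver => new FakeOllamaDriver());

// Switching providers only changes the driver name and model strings.
$targets = [['anthropic', 'claude-3-5-sonnet-20240620'], ['ollama', 'qwen2.5:14b']];
foreach ($targets as [$name, $model]) {
    echo $manager->driver($name)->generate($model, 'Hello'), PHP_EOL;
}
```

Because callers only see the `TextDriver` contract, adding a provider means registering one more factory rather than touching the code that builds prompts.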

Main Features

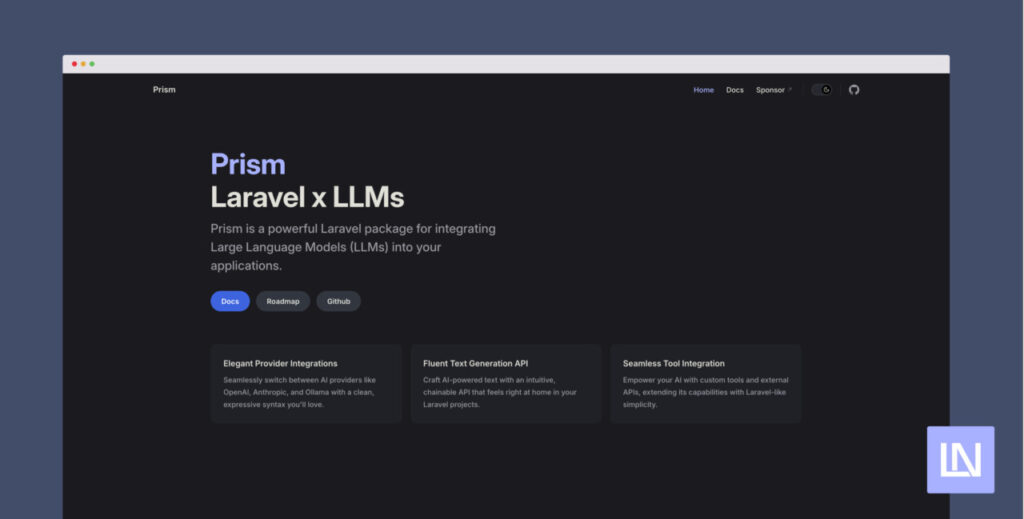

Elegant Provider Integrations – Seamlessly switch between AI providers like OpenAI, Anthropic, and Ollama with a clean, expressive syntax you’ll love.

Fluent Text Generation API – Craft AI-powered text with an intuitive, chainable API that feels right at home in your Laravel projects.

Seamless Tool Integration – Empower your AI with custom tools and external APIs, extending its capabilities with Laravel-like simplicity.

Prism Server – Use tools like ChatGPT web UIs or any OpenAI SDK to interact with your custom Prism models.

Seamless integration with Laravel’s ecosystem

Built-in support for AI-powered tools and function calling

Flexible configuration options to fine-tune your AI interactions
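The tool and function-calling features listed above rest on a simple idea: the model names a tool and supplies arguments, and your application runs the matching code. The sketch below shows that dispatch step in plain PHP. It does not use Prism's tool API; `ToolRegistry`, its methods, the `weather` tool, and the simulated tool call are all hypothetical, and the handler returns canned data.

```php
<?php

// Hypothetical registry mapping tool names to a description and a PHP callable.
final class ToolRegistry
{
    /** @var array<string, array{description: string, handler: callable}> */
    private array $tools = [];

    public function register(string $name, string $description, callable $handler): void
    {
        $this->tools[$name] = ['description' => $description, 'handler' => $handler];
    }

    // Tool metadata that would be sent to the model alongside the prompt.
    public function definitions(): array
    {
        $definitions = [];
        foreach ($this->tools as $name => $tool) {
            $definitions[] = ['name' => $name, 'description' => $tool['description']];
        }

        return $definitions;
    }

    // Runs the tool the model asked for and returns its result as a string.
    public function call(string $name, array $arguments): string
    {
        if (!isset($this->tools[$name])) {
            throw new InvalidArgumentException("Unknown tool [{$name}].");
        }

        return (string) ($this->tools[$name]['handler'])($arguments);
    }
}

$tools = new ToolRegistry();
$tools->register(
    'weather',
    'Get the current weather for a city',
    // Canned response for illustration; a real tool would call an external API.
    fn (array $args): string => "It is sunny in {$args['city']}."
);

// Simulated tool call, shaped like what a model might return.
$toolCall = ['name' => 'weather', 'arguments' => ['city' => 'Paris']];

echo $tools->call($toolCall['name'], $toolCall['arguments']), PHP_EOL;
```

In a real integration the tool's result would be passed back to the model so it can compose the final answer; that round trip is omitted here.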

You can learn more about this package and get full installation instructions in the official Prism documentation. The source code is available on GitHub at echolabsdev/prism.

The post Prism is an AI Package for Laravel appeared first on Laravel News.
