Introduction

Traditional depth estimation methods often require metadata, such as camera intrinsics, or involve additional processing steps that limit their applicability in real-world scenarios. These limitations make it challenging to produce accurate depth maps efficiently, especially for diverse applications like augmented reality, virtual reality, and advanced image editing. To address these challenges, Apple introduced Depth Pro, an advanced AI model designed for zero-shot metric monocular depth estimation, reshaping the field of 3D vision by providing sharp, high-resolution depth maps in a fraction of a second.

Bridging the Gap in Depth Estimation

Depth Pro aims to close this gap by producing metric depth maps with absolute scale in zero-shot conditions: it can produce detailed depth information for an arbitrary image without additional training on domain-specific data. Building on previous work such as MiDaS, Depth Pro generates a 2.25-megapixel depth map in just 0.3 seconds on a standard V100 GPU, which makes it practical for real-time applications such as image editing, virtual reality, and augmented reality.
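To put the resolution and latency figures in perspective, here is a small back-of-the-envelope calculation. It assumes the 2.25-megapixel output is a square 1536 x 1536 map and counts one megapixel as 2**20 pixels; neither detail is stated in this article, so treat both as assumptions.

```python
# Assumption: the 2.25-megapixel figure corresponds to a square 1536 x 1536
# depth map, with one megapixel counted as 2**20 pixels.
SIDE = 1536
LATENCY_S = 0.3  # V100 latency quoted in the article

pixels = SIDE * SIDE
megapixels = pixels / 2**20
pixels_per_second = pixels / LATENCY_S

print(f"{pixels} pixels = {megapixels:.2f} MP")
print(f"about {pixels_per_second / 1e6:.1f} million depth values per second")
```

Under these assumptions, the quoted latency works out to several million depth values per second on a single GPU.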

Architecture and Training

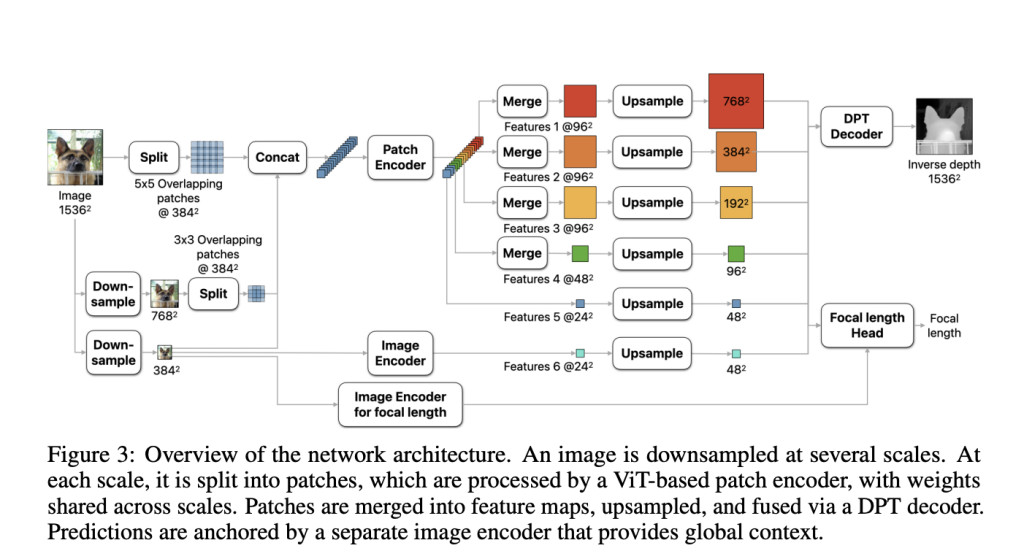

Depth Pro’s architecture is centered around a multi-scale vision transformer (ViT) designed to balance capturing global image context with preserving fine structures. Instead of running a transformer once over the image at a single resolution, Depth Pro applies a plain ViT backbone at multiple scales and fuses the predictions into a single high-resolution output, which also lets it benefit from ongoing advancements in ViT pretraining. This multi-scale approach yields sharp boundary delineation even in complex scenarios involving thin structures such as hair and fur, which are typically challenging for monocular depth estimation models.
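The multi-scale idea can be illustrated by computing where patches would sit at each scale. The sketch below is only an illustration: the 384-pixel patch size, the three image sizes, and the per-scale patch counts (5 x 5, 3 x 3, 1 x 1) are assumptions drawn from the paper's description rather than from this article, and the helper names are hypothetical.

```python
def patch_origins(size: int, patch: int, count: int) -> list[int]:
    """Evenly spaced top-left offsets for `count` patches spanning `size` pixels."""
    if count == 1:
        return [0]
    stride = (size - patch) / (count - 1)  # stride < patch means neighbors overlap
    return [round(i * stride) for i in range(count)]


def multiscale_patches(patch: int = 384, scales=((1536, 5), (768, 3), (384, 1))):
    """Return {image_size: [(x, y), ...]} with the patch origins at each scale.

    Each entry in `scales` is (image side length, patches per side); these
    values are assumptions, not figures from the article.
    """
    layout = {}
    for size, count in scales:
        offsets = patch_origins(size, patch, count)
        layout[size] = [(x, y) for y in offsets for x in offsets]
    return layout


layout = multiscale_patches()
for size, origins in layout.items():
    print(size, len(origins), origins[:3])
print("total patches:", sum(len(v) for v in layout.values()))
```

Every patch has the same size, so the same plain ViT can process all of them; the coarse scale sees the whole image for global context, while the fine scales keep the detail needed for thin structures.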

To train the model, Apple used both real and synthetic datasets in a two-stage training curriculum. In the first stage, Depth Pro was trained on a diverse mix of real-world and synthetic datasets to learn robust features that generalize well across domains. In the second stage, only synthetic datasets with pixel-accurate ground truth were used to sharpen the depth maps, focusing on high-quality boundary tracing. This curriculum helped Depth Pro achieve superior boundary accuracy, avoiding artifacts like “flying pixels” that degrade image quality in other models.
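The two-stage curriculum can be sketched as a small configuration plus a boundary-sensitive loss term. Everything named here is hypothetical: the dataset names, the stage names, the loss weights, and the gradient-based loss itself are stand-ins chosen for illustration, since the article does not specify which losses or datasets were used.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dataset:
    name: str        # hypothetical placeholder names
    synthetic: bool  # True when ground truth is rendered and pixel-accurate


@dataclass(frozen=True)
class Stage:
    name: str
    synthetic_only: bool
    gradient_loss_weight: float  # hypothetical weight on the edge-sharpening term


CURRICULUM = [
    Stage("stage1_generalize", synthetic_only=False, gradient_loss_weight=0.0),
    Stage("stage2_sharpen", synthetic_only=True, gradient_loss_weight=1.0),
]


def select_datasets(stage, datasets):
    """Stage 1 keeps everything; stage 2 keeps only synthetic data."""
    return [d for d in datasets if d.synthetic or not stage.synthetic_only]


def mean_abs_gradient_error(pred, target):
    """Mean absolute difference of horizontal depth gradients (2D lists)."""
    total, n = 0.0, 0
    for p_row, t_row in zip(pred, target):
        for x in range(len(p_row) - 1):
            total += abs((p_row[x + 1] - p_row[x]) - (t_row[x + 1] - t_row[x]))
            n += 1
    return total / n


datasets = [Dataset("real_scans", False), Dataset("rendered_scenes", True)]
for stage in CURRICULUM:
    print(stage.name, [d.name for d in select_datasets(stage, datasets)])

target = [[1.0, 1.0, 5.0, 5.0]]  # a crisp depth step
blurry = [[1.0, 2.0, 4.0, 5.0]]  # the same step smeared over three pixels
print(mean_abs_gradient_error(blurry, target))
```

A gradient-based term like this penalizes a smeared depth step even when the per-pixel error is small, which is why it only makes sense on data whose ground-truth edges are themselves pixel-accurate.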

Zero-Shot Focal Length Estimation

One of Depth Pro’s notable features is its zero-shot focal length estimation capability. Unlike many previous methods that rely on known camera intrinsics, Depth Pro estimates the focal length directly from the depth network’s features, enhancing its versatility for diverse real-world applications. This allows the model to synthesize views from arbitrary images, such as specifying a desired distance for rendering, without requiring metadata.
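The reason focal length matters for metric depth can be shown with a short calculation. The field-of-view relation below is the standard pinhole camera formula. The second function assumes the network outputs a scale-normalized ("canonical") inverse depth that is converted to meters as D = f_px / (w * C); that formula reflects the paper's formulation as best understood here and is not stated in this article, so treat it as an assumption. The function names are hypothetical.

```python
import math


def focal_length_px(fov_deg: float, width_px: int) -> float:
    """Pinhole relation between horizontal field of view and focal length in pixels."""
    return 0.5 * width_px / math.tan(0.5 * math.radians(fov_deg))


def metric_depth(canonical_inverse_depth: float, f_px: float, width_px: int) -> float:
    """Assumed conversion D = f_px / (w * C) from canonical inverse depth to meters."""
    return f_px / (width_px * canonical_inverse_depth)


width = 1000
f_px = focal_length_px(fov_deg=90.0, width_px=width)
depth_m = metric_depth(canonical_inverse_depth=0.25, f_px=f_px, width_px=width)
print(f"focal length: {f_px:.1f} px, depth: {depth_m:.2f} m")

# The same network output read with a narrower lens yields a larger distance.
f_tele = focal_length_px(fov_deg=45.0, width_px=width)
print(f"{metric_depth(0.25, f_tele, width):.2f} m at 45 degrees")
```

Under this assumed conversion, the same network output maps to different absolute distances depending on the focal length, so estimating the focal length from the image itself is what removes the dependence on camera metadata.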

Performance Evaluation

The model’s contributions are validated through extensive experiments, demonstrating superior performance in comparison to prior methods across multiple dimensions. Depth Pro excels particularly in boundary accuracy and latency, with evaluations showing that it offers unparalleled precision in tracing fine structures and boundaries, significantly outperforming other state-of-the-art models such as Marigold, Depth Anything v2, and Metric3D v2. For example, Depth Pro produced sharper depth maps and more accurately traced occluding boundaries, resulting in cleaner novel view synthesis compared to other methods.
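One way to quantify boundary accuracy is to mark an occluding edge wherever neighboring pixels differ by more than a given depth ratio, then compare predicted and ground-truth edges with precision and recall. The sketch below is a simplified illustration of that idea, not the evaluation protocol used for Depth Pro: the 15% ratio threshold, the horizontal-only neighborhood, and the function names are all assumptions.

```python
def occluding_edges(depth, threshold=0.15):
    """Set of (y, x) where pixel x and x+1 differ by more than a (1 + threshold) ratio.

    Depth values must be strictly positive.
    """
    edges = set()
    for y, row in enumerate(depth):
        for x in range(len(row) - 1):
            near, far = sorted((row[x], row[x + 1]))
            if far / near > 1.0 + threshold:
                edges.add((y, x))
    return edges


def boundary_f1(pred, target, threshold=0.15):
    """F1 score between predicted and ground-truth occluding edges."""
    p = occluding_edges(pred, threshold)
    t = occluding_edges(target, threshold)
    true_pos = len(p & t)
    if true_pos == 0:
        return 0.0
    precision, recall = true_pos / len(p), true_pos / len(t)
    return 2 * precision * recall / (precision + recall)


target = [[1.0, 1.0, 5.0, 5.0], [1.0, 1.0, 5.0, 5.0]]  # crisp foreground/background step
blurry = [[1.0, 2.0, 4.0, 5.0], [1.0, 2.0, 4.0, 5.0]]  # step smeared across pixels

print(boundary_f1(target, target))
print(boundary_f1(blurry, target))
```

A blurred prediction finds the true edge but also reports spurious edges on either side of it, so its precision drops and the score falls well below that of a crisp prediction.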

Efficiency and Limitations

The vision transformer’s efficiency is further highlighted in the speed comparison: Depth Pro is one to two orders of magnitude faster than models that focus on fine-grained boundary predictions, such as Marigold and PatchFusion. It manages this without compromising on accuracy, making it well-suited for real-time applications like interactive image generation and augmented reality experiences.

Despite its strong performance, Depth Pro has some limitations. The model struggles with translucent surfaces and volumetric scattering, where assigning a single depth value to a pixel becomes ambiguous. Nonetheless, its advancements mark a significant step forward in monocular depth estimation, providing a robust foundation model that is both highly accurate and computationally efficient.

Conclusion

Overall, Depth Pro’s combination of zero-shot metric depth estimation, high resolution, sharp boundary tracing, and real-time processing capability positions it as a leading model for a range of applications in 3D vision, from image editing to virtual reality. By removing the need for metadata and enabling sharp, detailed depth maps in less than a second, Depth Pro sets a new standard for depth estimation technology, making it a valuable tool for developers and researchers in the field of computer vision.

Check out the Paper and Model on HF. All credit for this research goes to the researchers of this project.


The post Apple AI Releases Depth Pro: A Foundation Model for Zero-Shot Metric Monocular Depth Estimation appeared first on MarkTechPost.
