As organizations increasingly rely on Amazon DynamoDB for their operational database needs, the demand for advanced data insights and enhanced search capabilities continues to grow. Leveraging the power of Amazon OpenSearch Service and Amazon Bedrock, you can now unlock generative artificial intelligence (AI) capabilities for your DynamoDB data.

In this post, we show how you can set up a zero-ETL integration between DynamoDB and OpenSearch Service along with model connectors in OpenSearch Service to automatically generate embeddings using Amazon Bedrock for incoming data. Amazon Bedrock simplifies generative AI application development by offering high-performing foundation models, seamless integration with AWS services, and secure customization options, all through a single API.

Service Introductions

Amazon DynamoDB is a serverless, NoSQL, fully managed database with single-digit millisecond performance at any scale, which customers use for high-throughput, low-latency workloads.

DynamoDB offers a zero-ETL integration with OpenSearch Service through the DynamoDB plugin for Amazon OpenSearch Ingestion. This integration offers a seamless, no-code solution for ingesting data from DynamoDB into OpenSearch Service. With this integration, you can use DynamoDB tables as sources for ingestion into OpenSearch Service indexes, adding rich-text and semantic search capabilities including enhanced real-time analytics in your applications.

The zero-ETL integration first uses DynamoDB export to Amazon S3 to create an initial snapshot for loading into OpenSearch Service. Real-time changes are then replicated using Amazon DynamoDB Streams, with each item treated as an event in OpenSearch Ingestion. Because each item is an event, you can modify its attributes in flight with processor plugins before it is indexed.

To use the zero-ETL integration, you first need to enable point-in-time recovery (PITR) on your table. After PITR is enabled, enable DynamoDB Streams on the table with the ‘new and old images’ option. The integration operates without impacting DynamoDB table throughput, so it is safe to use alongside production traffic. However, you should be mindful of considerations such as the limit on parallel consumers for streams, and follow best practices for DynamoDB integration.
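The two table settings described above can also be applied programmatically. The following is a minimal sketch using boto3, not the setup used by this post's CloudFormation template. The function names are illustrative, and the table name is whatever you pass in.

```python
from typing import Any, Dict


def pitr_request(table_name: str) -> Dict[str, Any]:
    """Parameters for UpdateContinuousBackups that turn on PITR."""
    return {
        "TableName": table_name,
        "PointInTimeRecoverySpecification": {"PointInTimeRecoveryEnabled": True},
    }


def stream_request(table_name: str) -> Dict[str, Any]:
    """Parameters for UpdateTable that enable a stream carrying new and old images."""
    return {
        "TableName": table_name,
        "StreamSpecification": {
            "StreamEnabled": True,
            "StreamViewType": "NEW_AND_OLD_IMAGES",
        },
    }


def enable_zero_etl_prerequisites(table_name: str) -> None:
    """Apply both settings. Requires boto3 and AWS credentials."""
    import boto3  # imported lazily so the helpers above work without it

    client = boto3.client("dynamodb")
    client.update_continuous_backups(**pitr_request(table_name))
    client.update_table(**stream_request(table_name))
```

If the table already has a stream enabled, the `update_table` call is rejected, so check the current stream settings first on an existing table.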

Amazon OpenSearch Service is a managed solution designed to simplify the deployment, management, and scalability of OpenSearch clusters within the AWS Cloud infrastructure. This service offers a versatile and scalable approach for indexing, searching, and analyzing extensive datasets in real time. With the inclusion of the Neural Search plugin, developers gain the capability to use OpenSearch Service for crafting search experiences enhanced by machine learning (ML) and for building generative AI applications.

Customers choose OpenSearch Service as their vector database due to its ability to efficiently run semantic searches, as well as its ability to generate embeddings in real time during data ingestion. This is achieved by sending pertinent data fields to Amazon Bedrock, obtaining the corresponding embeddings, and storing them.

Vectorization and generative AI

DynamoDB handles persistent data storage, while OpenSearch Service manages semantic search functionality. The data must be converted from its human-readable format in DynamoDB to an ML model-readable format in OpenSearch Service.

The data is converted to embeddings, which are n-dimensional vector representations that capture not only the words or images in your data but also their context. You can use embedding models such as Cohere Embed v3 and Amazon Titan Multimodal Embeddings G1 to convert text and images into embeddings. Models can be accessed through Amazon SageMaker JumpStart or Amazon Bedrock, or self-hosted on Amazon Elastic Compute Cloud (Amazon EC2) instances. In our solution, we use Amazon Bedrock, a fully managed service for accessing foundation models (FMs) through an API. This eliminates the need to host a model ourselves, and we pay only for invocations of the model. The data inserted into DynamoDB is passed to the model after it reaches OpenSearch Service, and the model returns an embedding. The data, along with its embedding, is stored in an OpenSearch Service index.
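In this solution, OpenSearch Service calls the model for you through a connector. To show what a single embedding request looks like on its own, here is a minimal sketch that calls the Amazon Titan Embeddings G1 – Text model directly with boto3. The helper names are illustrative, and the Region default is an assumption based on the prerequisites.

```python
import json
from typing import Any, Dict, List, Union

MODEL_ID = "amazon.titan-embed-text-v1"  # Amazon Titan Embeddings G1 - Text


def build_embedding_request(text: str) -> Dict[str, Any]:
    """Keyword arguments for the bedrock-runtime InvokeModel call."""
    return {
        "modelId": MODEL_ID,
        "contentType": "application/json",
        "accept": "application/json",
        "body": json.dumps({"inputText": text}),
    }


def parse_embedding(response_body: Union[str, bytes]) -> List[float]:
    """Extract the vector from the model's JSON response body."""
    return json.loads(response_body)["embedding"]


def embed(text: str, region: str = "us-east-1") -> List[float]:
    """Return the embedding for text. Requires boto3, credentials, and model access."""
    import boto3  # imported lazily so the helpers above work without it

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.invoke_model(**build_embedding_request(text))
    return parse_embedding(response["body"].read())
```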

Solution overview

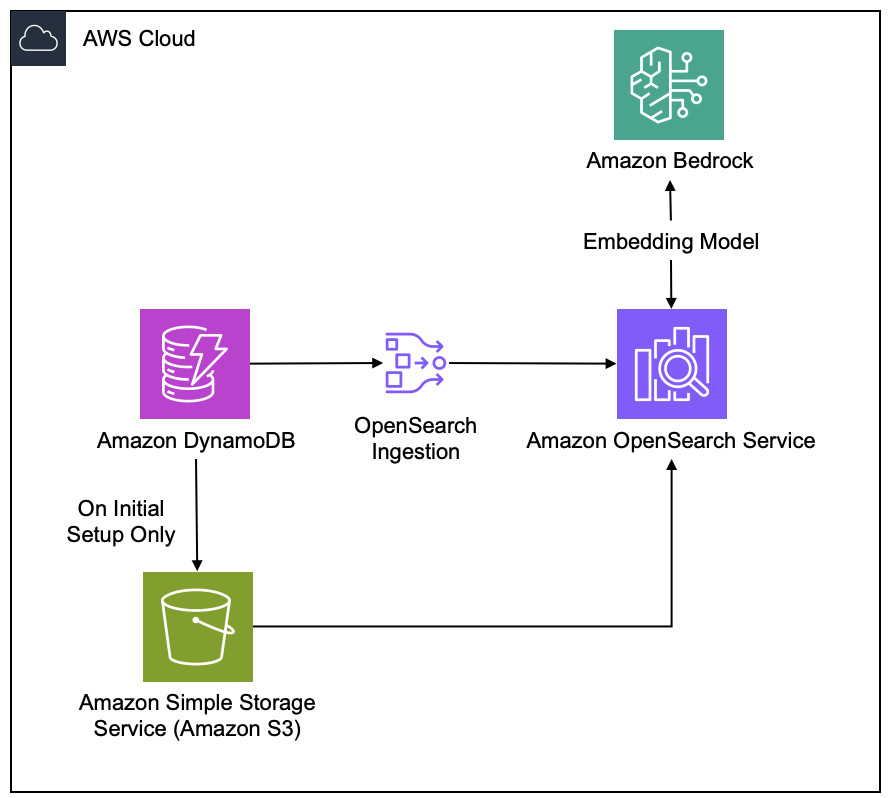

The following diagram illustrates the solution architecture.

This solution consists of the following services:

DynamoDB for persistent storage for your data.

An S3 bucket for the initial export of your DynamoDB data, which is needed if you are using this solution with a table that already exists.

DynamoDB Streams to send your data to an OpenSearch Ingestion pipeline.

Amazon Bedrock to embed the data stored in DynamoDB.

OpenSearch Service to store, index, and enable advanced search on your data.

Prerequisites

To get started, you must have the following prerequisites:

An AWS account

An AWS Identity and Access Management (IAM) user or role with permissions to use AWS CloudFormation, DynamoDB, AWS Lambda, Amazon Bedrock, and OpenSearch Service

Access to Amazon Titan Embeddings G1 – Text model within Amazon Bedrock for the us-east-1 AWS Region

Deploy the CloudFormation template

Deploy the CloudFormation template in us-east-1, the same Region in which you have access to the Amazon Titan Embeddings model.

This template provisions the necessary IAM roles and two AWS Lambda functions, in addition to the other resources noted in the solution architecture. One Lambda function creates and registers the Amazon Bedrock model in OpenSearch Service, and creates the ingestion pipeline, the k-NN index, and a test document. The other function is used to write data to the DynamoDB table.
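The template's Lambda code is not shown in this post. To give a rough idea of the kind of OpenSearch objects such a function creates, here is a minimal sketch of an ingest pipeline with a `text_embedding` processor and a k-NN index that uses it. The pipeline name, field names, and model ID placeholder are hypothetical. The 1,536 dimension assumes the Amazon Titan Embeddings G1 – Text model.

```python
import json
from typing import Any, Dict


def embedding_pipeline(model_id: str, source_field: str, vector_field: str) -> Dict[str, Any]:
    """Body for PUT /_ingest/pipeline/<name>: embed source_field into vector_field."""
    return {
        "description": "Generate embeddings for incoming documents",
        "processors": [
            {
                "text_embedding": {
                    "model_id": model_id,
                    "field_map": {source_field: vector_field},
                }
            }
        ],
    }


def knn_index(pipeline_name: str, vector_field: str, dimension: int) -> Dict[str, Any]:
    """Body for PUT /<index>: a k-NN index that runs the pipeline on every write."""
    return {
        "settings": {"index.knn": True, "default_pipeline": pipeline_name},
        "mappings": {
            "properties": {
                vector_field: {"type": "knn_vector", "dimension": dimension}
            }
        },
    }


# Hypothetical names, for illustration only.
pipeline = embedding_pipeline("<your-model-id>", "description", "description_embedding")
index = knn_index("product-embedding-pipeline", "description_embedding", 1536)
print(json.dumps(pipeline, indent=2))
print(json.dumps(index, indent=2))
```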

The CloudFormation stack takes approximately 20 minutes to create the resources.

View the OpenSearch Service index

Complete the following steps to view your index:

On the OpenSearch Service console, choose Domains in the navigation pane.

Choose the link under OpenSearch Dashboards URL (IPv4).

Log in with the credentials you specified during the CloudFormation stack creation (by default, the username is admin and the password is OpenSearch123!).

On the OpenSearch Dashboards home page, choose Dev Tools under Management in the navigation pane.

Run the following query to see the data now stored in your OpenSearch Service index:

You will see only one item in the index, which was added when the index was created.
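The query itself is not reproduced in this copy of the post. A match-all search against the index returns every document, which is enough to confirm what is stored. The following sketch prints a request you can paste into Dev Tools. The index name is a hypothetical placeholder, so substitute the index created by your stack.

```python
import json
from typing import Any, Dict


def dev_tools_request(method: str, path: str, body: Dict[str, Any]) -> str:
    """Format a request the way the OpenSearch Dashboards Dev Tools console expects."""
    return f"{method} {path}\n{json.dumps(body, indent=2)}"


INDEX_NAME = "my-products-index"  # hypothetical; use the index your stack created

print(dev_tools_request("GET", f"/{INDEX_NAME}/_search", {"query": {"match_all": {}}}))
```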

Upload data to DynamoDB

Complete the following steps to upload your data to DynamoDB:

On a separate tab, open the Lambda console.

Navigate to the DynamoDB-Upload-Data function.

Choose Test.

In the test event configuration, enter an event name and choose Save.

You should receive a response saying that the items were successfully written to the table.
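If you prefer to trigger the upload from a script instead of the console, the same function can be invoked with boto3. This is a minimal sketch that assumes the function accepts an empty test event, as in the console steps above.

```python
import json
from typing import Any, Dict

FUNCTION_NAME = "DynamoDB-Upload-Data"  # function name used in the walkthrough


def invoke_request(function_name: str = FUNCTION_NAME) -> Dict[str, Any]:
    """Keyword arguments for a synchronous Lambda Invoke call with an empty event."""
    return {
        "FunctionName": function_name,
        "InvocationType": "RequestResponse",
        "Payload": json.dumps({}).encode("utf-8"),
    }


def upload_sample_data() -> str:
    """Invoke the function and return its response payload as text."""
    import boto3  # imported lazily so invoke_request works without it

    response = boto3.client("lambda").invoke(**invoke_request())
    return response["Payload"].read().decode("utf-8")
```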

Query the new data in OpenSearch

Complete the following steps to query your data:

Return to OpenSearch Dashboards.

Run the same query to see the data now stored in your OpenSearch Service index:

The result will include information about the query as well as the hits, which will include the five products uploaded to DynamoDB. Each product will have the following information:

The embedding has 1,536 dimensions. This number depends on the model used to embed the data. Notice that the data uploaded to DynamoDB appeared in OpenSearch Service, together with its embeddings, almost immediately. This is the benefit of zero-ETL integration.

To run the next query, you need the model ID.

In the navigation pane, under OpenSearch Plugins, choose Machine Learning.

Choose Action for the Amazon Titan Multimodal Embeddings model, and copy the model ID.

Paste the model ID you copied into the following query, then run it to perform a neural search (semantic search):

The results will return the products in order of how related they are to the question asked in the query_text field. Even though the description of the Bed product didn’t mention the word “sleep”, it was found to be the most related product. This type of related retrieval is used in running Retrieval Augmented Generation (RAG), a common strategy for building useful generative AI applications.
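The query referred to above is not reproduced in this copy of the post. As a sketch of its general shape, the following builds a `neural` query body. The vector field name and question text are hypothetical, and the model ID is the one you copied from the Machine Learning page.

```python
import json
from typing import Any, Dict


def neural_query(vector_field: str, query_text: str, model_id: str, k: int = 5) -> Dict[str, Any]:
    """Search body that embeds query_text with model_id and returns the k nearest documents."""
    return {
        # Leave the long embedding vector out of the returned documents.
        "_source": {"excludes": [vector_field]},
        "query": {
            "neural": {
                vector_field: {
                    "query_text": query_text,
                    "model_id": model_id,
                    "k": k,
                }
            }
        },
    }


# Hypothetical field name and question, for illustration only.
body = neural_query("description_embedding", "What can help me sleep better?", "<your-model-id>")
print(json.dumps(body, indent=2))
```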

The previous query uses the neural query type, which provides fully managed semantic search. Neural search is an abstraction over vector search that removes the need for developers to orchestrate the generation of embeddings and the mapping of embeddings to text results. This is useful when your priority is ease of implementation and your workload fits within the supported media types. With Amazon OpenSearch Service v2.11 and later, you can use hybrid search, which normalizes and combines the scores of lexical and semantic queries to improve search relevance. The following is an example of a hybrid query that combines lexical and semantic search in a single query clause:
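A hybrid search needs two pieces: a search pipeline with a normalization processor, and a `hybrid` query that lists the sub-queries. The following sketch builds both bodies. The field names, weights, and normalization techniques are illustrative assumptions, not values taken from this post.

```python
import json
from typing import Any, Dict, List


def normalization_pipeline(weights: List[float]) -> Dict[str, Any]:
    """Body for PUT /_search/pipeline/<name>: normalize and combine sub-query scores."""
    return {
        "description": "Normalize and combine lexical and semantic scores",
        "phase_results_processors": [
            {
                "normalization-processor": {
                    "normalization": {"technique": "min_max"},
                    "combination": {
                        "technique": "arithmetic_mean",
                        "parameters": {"weights": weights},
                    },
                }
            }
        ],
    }


def hybrid_query(text_field: str, vector_field: str, query_text: str,
                 model_id: str, k: int = 5) -> Dict[str, Any]:
    """Search body that runs a lexical match and a neural query together."""
    return {
        "query": {
            "hybrid": {
                "queries": [
                    {"match": {text_field: {"query": query_text}}},
                    {"neural": {vector_field: {"query_text": query_text,
                                               "model_id": model_id, "k": k}}},
                ]
            }
        }
    }


# Weights are listed in the same order as the sub-queries (lexical, then semantic).
print(json.dumps(normalization_pipeline([0.3, 0.7]), indent=2))
print(json.dumps(hybrid_query("description", "description_embedding",
                              "What can help me sleep better?", "<your-model-id>"), indent=2))
```

To use the pipeline, pass its name in the `search_pipeline` query parameter of the search request.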

Another feature that lets you further fine-tune your results is post-filtering (Amazon OpenSearch Service v2.13+). A post-filter narrows the result set to your exact needs:
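A post-filter is applied to the hits after the query has been scored, so it trims the results without changing relevance scores. The following sketch adds a `post_filter` clause to an existing search body. The price threshold and field names are hypothetical.

```python
import json
from typing import Any, Dict


def with_post_filter(search_body: Dict[str, Any], filter_clause: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of search_body with a post_filter clause added."""
    filtered = dict(search_body)  # shallow copy so the caller's body is untouched
    filtered["post_filter"] = filter_clause
    return filtered


# Hypothetical neural query and price cap, for illustration only.
base = {
    "query": {
        "neural": {
            "description_embedding": {
                "query_text": "What can help me sleep better?",
                "model_id": "<your-model-id>",
                "k": 5,
            }
        }
    }
}
body = with_post_filter(base, {"range": {"price": {"lte": 500}}})
print(json.dumps(body, indent=2))
```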

View the data in DynamoDB

Complete the following steps to view the data in DynamoDB:

On the DynamoDB console, choose Explore items.

Choose your table to view the data populated in the previous step.

If no items are shown, choose Scan or query items, choose Scan, then choose Run.

You’ll see five products with a partition key, name, description, and price. There are no embeddings stored here. The description field is what was embedded.

Mutating operations done to the DynamoDB table also affect the OpenSearch Service index. For example, if the name of a product is updated in DynamoDB, it will be updated in the OpenSearch Service index in real time. If the field that is mapped to be embedded is updated, that field and the embeddings will be updated in the index. Lastly, if an item is deleted in DynamoDB, it will be deleted in the index.
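To try this yourself, update an item and then rerun the search in OpenSearch Dashboards. The following is a minimal sketch that renames a product with boto3. The table name, partition key name, and key value are hypothetical, and the key is assumed to be a string.

```python
from typing import Any, Dict


def rename_product_request(table_name: str, key_name: str, key_value: str,
                           new_name: str) -> Dict[str, Any]:
    """Keyword arguments for UpdateItem that set the item's name attribute."""
    return {
        "TableName": table_name,
        "Key": {key_name: {"S": key_value}},  # assumes a string partition key
        # "name" is a DynamoDB reserved word, so it is aliased as #n.
        "UpdateExpression": "SET #n = :new_name",
        "ExpressionAttributeNames": {"#n": "name"},
        "ExpressionAttributeValues": {":new_name": {"S": new_name}},
    }


def rename_product(table_name: str, key_name: str, key_value: str, new_name: str) -> None:
    """Apply the update. Requires boto3 and AWS credentials."""
    import boto3  # imported lazily so the request builder works without it

    boto3.client("dynamodb").update_item(
        **rename_product_request(table_name, key_name, key_value, new_name)
    )
```

For example, `rename_product("my-products-table", "pk", "product-1", "Queen Bed")` would change the name of one item. The change should then appear in the index through the stream.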

Clean up

To avoid continued charges in your AWS account, open the AWS CloudFormation console and delete the stack you created.

Conclusion

In this post, you set up a zero-ETL integration between DynamoDB and OpenSearch Service, while automatically embedding data from DynamoDB using an Amazon Titan model hosted on Amazon Bedrock. This provides the ability to gain deeper insight into your DynamoDB data and serves as a source for generative AI applications.

If you have any feedback or questions, leave them in the comments section.

About the authors

Lee Hannigan is a Sr. DynamoDB Specialist Solutions Architect based in Donegal, Ireland. He brings a wealth of expertise in distributed systems, backed by a strong foundation in big data and analytics technologies. In his role as a DynamoDB Specialist Solutions Architect, Lee excels in assisting customers with the design, evaluation, and optimization of their workloads leveraging DynamoDB’s capabilities.

Andrew Walko is a solutions architect at AWS working with enterprise customers in the gaming and automotive/manufacturing industries. He focuses on AI/ML technologies and utilizes his experience in analytics and generative AI to empower customers to modernize their businesses through data utilization. He enjoys sharing his expertise at conferences and through publishing technical blogs.

Muslim Abu Taha is a Sr. OpenSearch Specialist Solutions Architect dedicated to guiding clients through seamless search workload migrations, fine-tuning clusters for peak performance, and ensuring cost-effectiveness. With a background as a Technical Account Manager (TAM), Muslim brings a wealth of experience in assisting enterprise customers with cloud adoption and optimizing their varied workloads. Muslim enjoys spending time with his family, traveling, and exploring new places.
