Earlier approaches to generating 3D models from a single image each had limitations. Feed-forward architectures, trained on limited 3D data, tended to produce simplistic objects. Gaussian Splatting delivered coarse geometry quickly but lacked fine detail and view consistency. Densifying Gaussians by naive gradient thresholding caused excessive densification and swollen geometry. Regularisation improved geometric accuracy, and removing it led to structural defects. User studies pointed to problems with view consistency and overall quality. Together, these limitations in data availability, detail preservation, and consistency called for more robust frameworks. Vista3D targets them with a framework that balances speed and quality in single-image 3D generation.

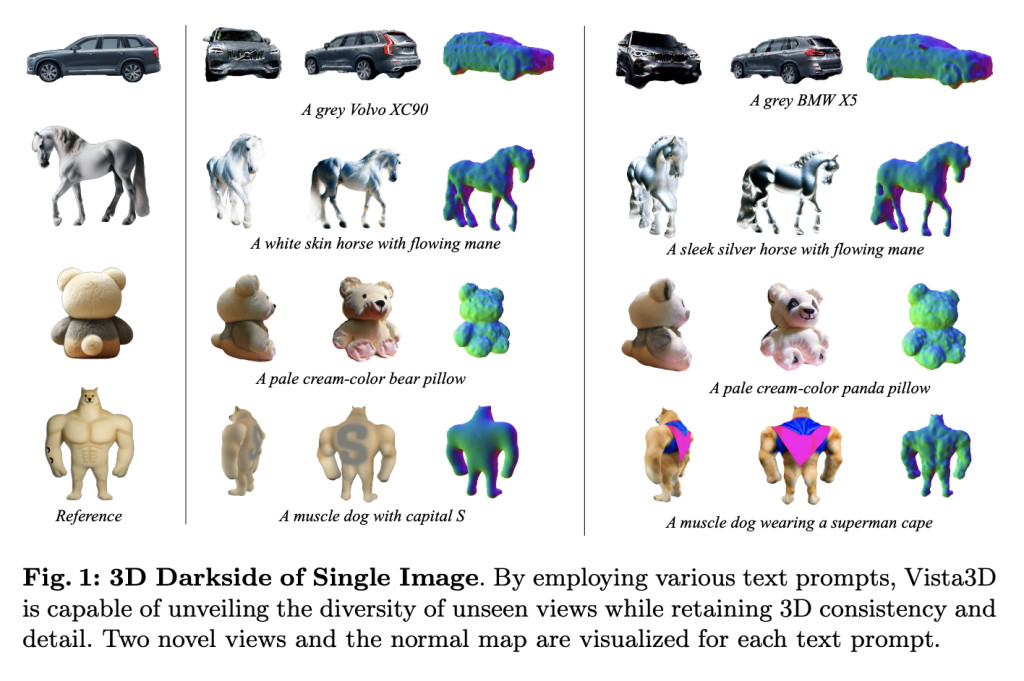

Researchers from the National University of Singapore and Huawei Technologies Ltd have introduced Vista3D, a novel framework for generating 3D representations from a single image. To reveal the parts of an object that the input image does not show, it works in two phases: a coarse phase that builds initial geometry with Gaussian Splatting, and a fine phase that extracts a Signed Distance Function (SDF) from that geometry and optimizes it to refine the surface. This lets the model capture both the visible and the occluded sides of the object. Vista3D also combines 2D and 3D-aware diffusion priors to balance consistency and diversity. The framework produces consistent 3D output within about five minutes and supports user-driven editing through text prompts, which could benefit fields such as gaming and virtual reality.

Vista3D generates 3D objects from a single image in several stages. It first produces coarse geometry with 3D Gaussian Splatting, which yields an initial 3D structure quickly. It then refines this geometry by converting it into a signed distance field and introducing a differentiable isosurface representation, which improves surface accuracy and visual quality. To generate diverse 3D content, the framework uses diffusion priors, constraining the magnitude of their gradients and combining them with an angle-based composition rule so that it can explore variations of the object while keeping the result consistent.
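The article does not give the exact composition rule, so the following is only an illustration under stated assumptions. It treats the two priors as gradient vectors on the same parameters, caps the 2D-prior gradient at a hypothetical multiple (`max_ratio`) of the 3D-aware gradient's norm, and, when the two point more than 90 degrees apart, removes the part of the 2D gradient that opposes the 3D one. That projection step is a PCGrad-style rule swapped in for illustration, not necessarily Vista3D's formula; `compose_priors` and its parameters are hypothetical.

```python
import numpy as np

def compose_priors(g3d, g2d, max_ratio=1.0, eps=1e-12):
    """Hypothetical composition of two score-distillation gradients.

    g3d: gradient from the 3D-aware prior, kept intact as the consistency anchor.
    g2d: gradient from the 2D prior, the source of extra detail and diversity.
    max_ratio: hypothetical cap on ||g2d|| relative to ||g3d||.
    """
    g3d = np.asarray(g3d, dtype=np.float64)
    g2d = np.asarray(g2d, dtype=np.float64)
    n3 = np.linalg.norm(g3d)
    n2 = np.linalg.norm(g2d)
    # Magnitude constraint: rescale g2d so its norm is at most max_ratio * ||g3d||.
    if n2 > max_ratio * n3:
        g2d = g2d * (max_ratio * n3 / (n2 + eps))
    # Angle-based rule (PCGrad-style, assumed): if the gradients are more than
    # 90 degrees apart, remove the component of g2d that opposes g3d.
    dot = float(np.dot(g2d, g3d))
    if dot < 0.0:
        g2d = g2d - (dot / (n3 * n3 + eps)) * g3d
    return g3d + g2d
```

For example, `compose_priors([1.0, 0.0], [-1.0, 1.0])` first scales the 2D gradient down to unit length, then strips its negative component along the 3D direction, returning roughly `[1.0, 0.707]`. In this sketch, leaving the 3D-aware gradient untouched is what preserves consistency, while the surviving orthogonal part of the 2D gradient is what adds variation.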

Mesh generation follows a coarse-to-fine strategy that employs top-K densification and regularisation, progressively refining the initial geometry into a high-fidelity output. By combining these geometry generation, refinement, and texture mapping steps, Vista3D addresses weaknesses of earlier single-image 3D methods. Its use of diffusion priors and of these representations improves detail, consistency, and output diversity, producing high-quality 3D models efficiently from a single 2D input.
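According to the article, naive gradient thresholding over-densifies and swells the geometry, which motivates a top-K rule. The sketch below contrasts the two selection rules on per-Gaussian accumulated gradient norms. It is a minimal illustration under assumptions: the function names are hypothetical, `k` is a free parameter (the article does not state Vista3D's value or schedule), and the densify step only clones the selected Gaussians' centers, ignoring the splitting and attribute updates a real Gaussian Splatting optimizer performs.

```python
import numpy as np

def select_by_threshold(grad_norms, threshold):
    """Baseline rule: every Gaussian whose accumulated gradient norm exceeds the threshold."""
    return np.flatnonzero(np.asarray(grad_norms) > threshold)

def select_top_k(grad_norms, k):
    """Top-K rule: at most k Gaussians with the largest accumulated gradient norms."""
    grad_norms = np.asarray(grad_norms)
    k = min(k, grad_norms.shape[0])
    if k <= 0:
        return np.empty(0, dtype=np.int64)
    # argpartition places the k largest values in the last k slots without a full sort
    idx = np.argpartition(grad_norms, -k)[-k:]
    return np.sort(idx)

def densify_top_k(positions, grad_norms, k):
    """Simplified densification: append copies of the selected Gaussians' centers."""
    idx = select_top_k(grad_norms, k)
    return np.concatenate([positions, positions[idx]], axis=0), idx
```

With `grads = np.array([0.9, 0.1, 0.5, 0.7, 0.05])`, thresholding at `0.05` selects four of the five Gaussians, whereas `select_top_k(grads, 2)` selects only indices `[0, 3]`. Bounding the number of new Gaussians per step keeps growth in check even when the overall gradient scale drifts during optimization.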

The reported results show clear gains in 3D object generation from single images. Vista3D-L achieved state-of-the-art results on PSNR, SSIM, and LPIPS, outperforming existing methods. CLIP-similarity scores of 0.831 for Vista3D-S and 0.868 for Vista3D-L indicate strong consistency between the generated 3D views and the reference images. The framework generates 3D objects in approximately 5 minutes, a notable improvement in processing time. Qualitative assessments show superior texture quality, particularly when the reference view is less informative. Ablation studies confirm the contribution of key components, and comparisons with methods such as One-2-3-45 and Wonder3D show Vista3D ahead in texture quality, geometry quality, and view consistency.
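For readers unfamiliar with the metrics, the sketch below shows how two of them are commonly computed. PSNR compares a rendered view with the ground-truth image pixel by pixel (higher is better), and CLIP similarity is typically the cosine similarity between CLIP image embeddings of a rendered view and the reference image. The embeddings are assumed to come from a CLIP image encoder not shown here, and images are assumed to be float arrays scaled to `[0, 1]`; this is a generic sketch, not Vista3D's evaluation code. SSIM and LPIPS need more machinery and are omitted.

```python
import numpy as np

def psnr(pred, target, max_val=1.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape."""
    pred = np.asarray(pred, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    mse = np.mean((pred - target) ** 2)
    if mse == 0.0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_val ** 2 / mse))

def cosine_similarity(a, b, eps=1e-12):
    """Cosine similarity between two embedding vectors (e.g. CLIP image features)."""
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b) + eps))
```

For instance, a prediction that is off by 0.1 at every pixel gives an MSE of 0.01 and therefore a PSNR of 20 dB.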

In conclusion, Vista3D introduces a coarse-to-fine approach for exploring the 3D aspects of a single image, supports user-driven editing, and uses image captions to improve generation quality. It starts with Gaussian Splatting for coarse geometry, then refines it with an isosurface representation and disentangled textures, producing textured meshes in about 5 minutes. The angular composition of diffusion priors increases diversity while preserving 3D consistency, and top-K densification together with regularisation yields accurate geometry and fine detail. Vista3D outperforms previous methods in realism and detail while balancing generation time against mesh quality. The authors expect the work to inspire further research on single-image 3D generation.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.

The post Vista3D: A Novel AI Framework for Rapid and Detailed 3D Object Generation from a Single Image Using Diffusion Priors appeared first on MarkTechPost.
