Video generation uses AI models to create moving images from text descriptions or static images. Research in this area aims to produce high-quality, realistic videos while keeping computational cost manageable. AI-generated videos have applications in fields such as filmmaking, education, and simulation, where they offer a way to automate video production. However, the computational demands of generating long, visually consistent videos remain a key obstacle, prompting researchers to develop methods that balance quality and efficiency.

A significant problem in video generation is the massive computational cost associated with creating each frame. The iterative process of denoising, where noise is gradually removed from a latent representation until the desired visual quality is achieved, is time-consuming. This process must be repeated for every frame in a video, making the time and resources required for producing high-resolution or extended-duration videos prohibitive. The challenge, therefore, is to optimize this process without sacrificing the quality and consistency of the video content.

Existing methods such as Denoising Diffusion Probabilistic Models (DDPMs) and Video Diffusion Models (VDMs) have successfully generated high-quality videos. These models refine video frames through repeated denoising steps, producing detailed and coherent visuals. However, each frame undergoes the full denoising process, which drives up computational cost. Approaches like Latent-Shift attempt to reuse latent features across frames, but they still leave room for improvement in efficiency. As a result, these methods struggle to generate long-duration or high-resolution videos without significant computational overhead.

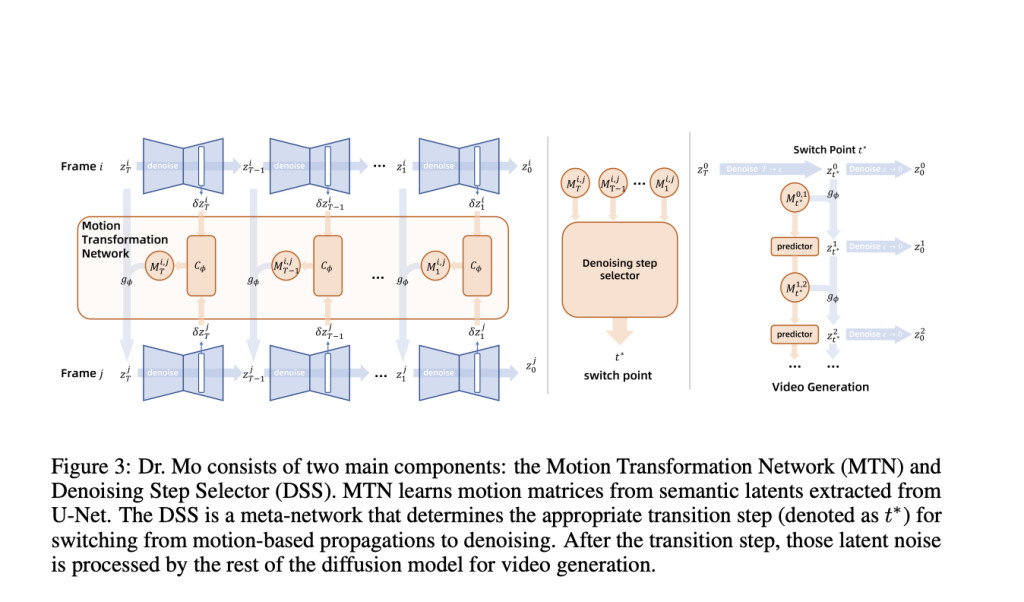

A research team has introduced the Diffusion Reuse Motion (Dr. Mo) network to address the inefficiency of current video generation models. Dr. Mo reduces the computational burden by exploiting motion consistency across consecutive video frames. The researchers observed that, in the early stages of denoising, noise patterns remain consistent across many frames. Dr. Mo uses this consistency to propagate coarse-grained noise from one frame to the next, eliminating redundant calculations. In addition, a meta-network called the Denoising Step Selector (DSS) dynamically determines the step at which to switch from motion propagation to traditional denoising.
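
To get a feel for why reusing early denoising steps saves work, here is a rough cost model in Python. It is only a sketch under stated assumptions: it counts denoiser calls, ignores the cost of the motion network and the DSS, and uses a single fixed `switch_step` for all frames, whereas the DSS is described as choosing the step dynamically. The function names and the example numbers (50 steps, switch at step 30) are hypothetical and do not come from the paper.

```python
def calls_full(num_frames: int, num_steps: int) -> int:
    """Denoiser calls when every frame runs the full denoising schedule."""
    return num_frames * num_steps


def calls_with_reuse(num_frames: int, num_steps: int, switch_step: int) -> int:
    """Denoiser calls when only the first frame runs all steps.

    Assumption: later frames inherit the coarse result of the first
    `switch_step` steps via motion propagation and only run the rest.
    """
    if not 0 <= switch_step <= num_steps:
        raise ValueError("switch_step must lie between 0 and num_steps")
    if num_frames < 1:
        raise ValueError("num_frames must be at least 1")
    remaining = num_steps - switch_step
    return num_steps + (num_frames - 1) * remaining


# Hypothetical setting: 16 frames, 50 denoising steps, switch at step 30.
baseline = calls_full(16, 50)            # 16 * 50 = 800
reused = calls_with_reuse(16, 50, 30)    # 50 + 15 * 20 = 350
print(baseline, reused)
```

Under this simplified model, the later the switch happens, the fewer denoiser calls remain per frame, which is the trade-off the DSS is meant to manage against video quality.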

In detail, Dr. Mo constructs motion matrices to capture semantic motion features between frames. These matrices are formed from the latent features extracted by a U-Net-like decoder, which analyzes the motion between consecutive video frames. The DSS then evaluates which denoising steps can reuse motion-based estimations rather than recalculating each frame from scratch. This approach allows the system to balance efficiency and video quality. Dr. Mo reuses noise patterns to accelerate the process in the early denoising stages. As the video generation nears completion, more fine-grained details are restored through the traditional diffusion model, ensuring high visual quality. The result is a faster system that generates video frames while maintaining clarity and realism.
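
The two-phase flow described above can be illustrated with a toy Python sketch. Everything here is a stand-in chosen for illustration, not the authors' implementation: the "denoiser" simply interpolates toward a known target, the "motion" is a cyclic shift of a short list rather than a learned motion matrix, and `switch_step` is fixed by hand instead of being selected by the DSS.

```python
import random


def denoise_step(latent, target, step, num_steps):
    """Toy denoiser call: close an equal share of the remaining gap to the target."""
    remaining = num_steps - step
    return [x + (t - x) / remaining for x, t in zip(latent, target)]


def apply_motion(latent, shift):
    """Toy motion: cyclically shift the latent (stand-in for a motion matrix)."""
    shift %= len(latent)
    return latent[-shift:] + latent[:-shift] if shift else list(latent)


def generate_first_frame(target, num_steps, switch_step, rng):
    """Run the full schedule and keep the coarse latent at the switch step."""
    if not 0 <= switch_step < num_steps:
        raise ValueError("switch_step must be in [0, num_steps)")
    latent = [rng.gauss(0.0, 1.0) for _ in target]
    coarse = None
    for step in range(num_steps):
        if step == switch_step:
            coarse = list(latent)  # snapshot before the fine-grained steps
        latent = denoise_step(latent, target, step, num_steps)
    return latent, coarse, num_steps


def generate_next_frame(coarse, shift, target, num_steps, switch_step):
    """Propagate the coarse latent by motion, then run only the late steps."""
    latent = apply_motion(coarse, shift)
    calls = 0
    for step in range(switch_step, num_steps):
        latent = denoise_step(latent, target, step, num_steps)
        calls += 1
    return latent, calls


rng = random.Random(0)
first_target = [1.0, 0.0, 0.0, 0.0]
next_target = apply_motion(first_target, 1)  # content moved by one position
frame0, coarse, calls0 = generate_first_frame(first_target, 50, 30, rng)
frame1, calls1 = generate_next_frame(coarse, 1, next_target, 50, 30)
print(calls0, calls1)  # 50 20
```

The second frame reaches its target with 20 denoiser calls instead of 50 because the first 30 steps are replaced by a single motion update on the saved coarse latent.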

The research team evaluated Dr. Mo on two widely used benchmarks, UCF-101 and MSR-VTT. The results show that Dr. Mo substantially reduces generation time while largely maintaining video quality. When generating 16-frame videos at 256×256 resolution, Dr. Mo finished in 6.57 seconds versus 23.4 seconds for Latent-Shift, a speedup of roughly 3.6 times (reported as about fourfold). For 512×512 resolution videos, Dr. Mo was roughly 1.5 times faster than competing models such as SimDA and LaVie, generating videos in 23.62 seconds compared to SimDA’s 34.2 seconds (a ratio of about 1.45). Despite this acceleration, Dr. Mo preserved 96% of the Inception Score (IS) and improved (lowered) the Fréchet Video Distance (FVD), indicating that the statistics of its generated videos stay close to those of real videos.
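
The speedups can be checked directly from the generation times quoted above. The short Python snippet below does only that arithmetic; the helper name `speedup` is ours.

```python
def speedup(baseline_seconds: float, method_seconds: float) -> float:
    """How many times faster the method is than the baseline."""
    return baseline_seconds / method_seconds


# Times quoted in the article (seconds per 16-frame video).
ratio_256 = speedup(23.4, 6.57)    # Latent-Shift vs. Dr. Mo at 256x256
ratio_512 = speedup(34.2, 23.62)   # SimDA vs. Dr. Mo at 512x512

print(round(ratio_256, 2))  # 3.56
print(round(ratio_512, 2))  # 1.45
```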

The quality metrics tell a similar story. On the UCF-101 dataset, Dr. Mo achieved an FVD of 312.81 (lower is better), compared with 360.04 for Latent-Shift. On the MSR-VTT dataset, it scored 0.3056 on CLIPSIM, a measure of semantic alignment between video frames and the text prompt. This score exceeded those of all other tested models, indicating strong performance in text-to-video generation. Dr. Mo also performed well in style transfer, where motion information from real-world videos was applied to style-transferred first frames, producing consistent and realistic results across the generated frames.
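
For intuition about what a CLIPSIM-style score measures, the sketch below averages the cosine similarity between one text embedding and a set of per-frame embeddings. This is an assumption-laden illustration: in practice the embeddings come from a CLIP text and image encoder, whereas the two-dimensional vectors here are made up, and the function name `clipsim_like` is hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def clipsim_like(text_embedding, frame_embeddings):
    """Mean text-to-frame cosine similarity over all frames of a video."""
    if not frame_embeddings:
        raise ValueError("need at least one frame embedding")
    sims = [cosine_similarity(text_embedding, f) for f in frame_embeddings]
    return sum(sims) / len(sims)


# Made-up embeddings: one frame matches the text exactly, one is orthogonal.
text = [1.0, 0.0]
frames = [[1.0, 0.0], [0.0, 1.0]]
score = clipsim_like(text, frames)
print(score)  # 0.5
```

A higher average means the frames are, on the whole, semantically closer to the prompt.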

In conclusion, Dr. Mo substantially reduces the computational cost of video generation while largely preserving video quality. By reusing motion information across frames and employing a dynamic denoising step selector, the system generates high-quality videos in less time. This balance between efficiency and quality is a meaningful step toward addressing the challenges of video generation.

The post Diffusion Reuse MOtion (Dr. Mo): A Diffusion Model for Efficient Video Generation with Motion Reuse appeared first on MarkTechPost.
