The development of Artificial Intelligence (AI) models, especially in specialized contexts, depends on how well they can access and use prior information. For example, legal AI tools need to be well-versed in a broad range of previous cases, while customer care chatbots require specific information about the firms they serve. Retrieval-Augmented Generation (RAG) is a method that developers frequently use to improve an AI model’s performance in such settings.

By retrieving pertinent information from a knowledge base and adding it to the user’s prompt, RAG can greatly improve an AI model’s performance. However, one significant drawback of traditional RAG approaches is that they often lose context during encoding, which makes it harder to retrieve the most pertinent information.

RAG’s dependence on splitting documents into smaller, easier-to-manage chunks for retrieval can unintentionally cause important context to be lost. For instance, a user might ask a financial knowledge base about a particular company’s sales growth in a given quarter. A conventional RAG system might retrieve a chunk stating, “The company’s revenue grew by 3% over the previous quarter.” On its own, however, this excerpt does not say which company or which quarter is being discussed, which makes the retrieved information far less useful.
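To make the context-loss problem concrete, here is a minimal sketch of naive fixed-size chunking. It assumes word-based chunks for simplicity (real systems usually chunk by tokens, often with some overlap); the function name `chunk_words` and the sample filing text are hypothetical. The chunk that contains the “3%” figure ends up without the company name.

```python
def chunk_words(text: str, chunk_size: int, overlap: int = 0) -> list[str]:
    """Split text into chunks of at most chunk_size words, with optional word overlap."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once a chunk reaches the end, so no tail chunk is fully redundant.
        if start + chunk_size >= len(words):
            break
    return chunks


# Hypothetical filing text used only for illustration.
filing = (
    "ACME Corp quarterly report for Q2 2023. "
    "The previous quarter's revenue was $314 million. "
    "The company's revenue grew by 3% over the previous quarter."
)
for i, chunk in enumerate(chunk_words(filing, chunk_size=10)):
    print(i, chunk)
```

With `chunk_size=10`, the middle chunk reads “revenue was $314 million. The company's revenue grew by 3%”, so a retriever that returns it alone has no way to tell the model which company the figure belongs to.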

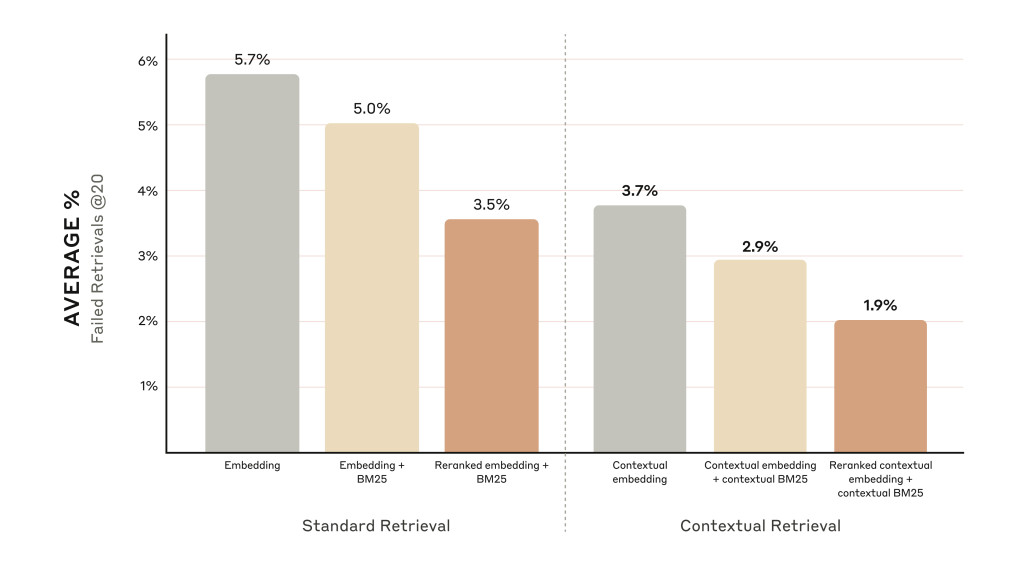

To address this problem, Anthropic has introduced Contextual Retrieval, a technique that substantially improves the retrieval accuracy of RAG systems. It rests on two sub-techniques: Contextual Embeddings and Contextual BM25. By changing how text chunks are processed and stored, Contextual Retrieval can reduce the rate of failed retrievals by 49%, and by 67% when combined with reranking. These gains carry over to better performance on downstream tasks, making AI models more effective and reliable.

For Contextual Retrieval to work, each chunk first receives chunk-specific explanatory context, which is added before the chunk is embedded and before the BM25 index is built. For instance, the excerpt “The company’s revenue grew by 3% over the previous quarter” might become “This excerpt is from an SEC filing on ACME Corp’s performance in Q2 2023; the previous quarter’s revenue was $314 million. The company’s revenue grew by 3% over the previous quarter.” With this extra context, the system finds it much easier to retrieve and apply the right information.
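A minimal sketch of the data side of this step, assuming each chunk stores its original text and its generated context separately and that the two are joined with the context first. The `Chunk` class and the `doc_id` value are hypothetical; the point is that the same contextualized string feeds both the embedding model and the BM25 index.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    doc_id: str        # hypothetical identifier of the source document
    text: str          # the original chunk text
    context: str = ""  # chunk-specific explanatory context

    def contextualized(self) -> str:
        """Text to embed and to index with BM25: context first, then the original chunk."""
        if not self.context:
            return self.text
        return f"{self.context.strip()} {self.text.strip()}"


chunk = Chunk(
    doc_id="acme-sec-filing-2023-q2",  # hypothetical
    text="The company's revenue grew by 3% over the previous quarter.",
    context=(
        "This excerpt is from an SEC filing on ACME Corp's performance in Q2 2023; "
        "the previous quarter's revenue was $314 million."
    ),
)
print(chunk.contextualized())
```

Keeping `text` and `context` as separate fields is a design choice, not a requirement: it lets you rebuild the index with a different context later without re-chunking the source documents.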

Developers can use a model such as Claude to apply Contextual Retrieval across large knowledge bases. By giving Claude precise instructions, they can have it write a brief, chunk-specific context for each chunk. This context is then prepended to the chunk’s text before embedding and BM25 indexing.
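The generation step can be sketched as a loop that sends the full document plus one chunk to a model and prepends the reply. The prompt wording below is an illustrative assumption, and the model call is abstracted as a hypothetical `generate(prompt) -> str` callable, so you can plug in Claude (or any other model client) without this sketch depending on a specific SDK.

```python
from typing import Callable

# Illustrative prompt template; the exact wording is an assumption.
CONTEXT_PROMPT = """<document>
{document}
</document>
Here is the chunk we want to situate within the whole document:
<chunk>
{chunk}
</chunk>
Give a short, succinct context that situates this chunk within the overall
document, to improve search retrieval of the chunk. Answer only with the
succinct context and nothing else."""


def build_context_prompt(document: str, chunk: str) -> str:
    return CONTEXT_PROMPT.format(document=document, chunk=chunk)


def contextualize_chunks(
    document: str,
    chunks: list[str],
    generate: Callable[[str], str],  # hypothetical stand-in for a model call
) -> list[str]:
    """Prepend a model-generated context to every chunk of one document."""
    out = []
    for chunk in chunks:
        context = generate(build_context_prompt(document, chunk)).strip()
        # Fall back to the bare chunk if the model returns nothing useful.
        out.append(f"{context} {chunk}" if context else chunk)
    return out


def fake_generate(prompt: str) -> str:
    # Offline stub used only for this example; replace with a real model call.
    return "From ACME Corp's Q2 2023 SEC filing."


print(contextualize_chunks(
    "ACME Corp quarterly report ...",
    ["The company's revenue grew by 3% over the previous quarter."],
    fake_generate,
))
```

Because the whole document is resent for every chunk, cost grows with document length times chunk count; if your model provider supports caching a repeated prompt prefix, caching the document portion is one way to reduce that cost.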

Conventional RAG uses embedding models to capture semantic relationships between text chunks. These models can occasionally miss important exact matches, particularly for queries that contain unique identifiers or technical terms. This is where BM25, a ranking function based on lexical matching, is useful. Because it scores chunks by exact word and phrase matches, BM25 is especially helpful for technical queries that require precise retrieval. By combining Contextual Embeddings with Contextual BM25, RAG systems can balance exact term matching against broader semantic understanding and retrieve the most pertinent information more reliably.
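The sketch below shows a minimal in-memory Okapi BM25 plus one way to merge its ranking with an embedding-based ranking. Assumptions: the common defaults `k1=1.5`, `b=0.75`; a simple `\w+` tokenizer; a non-negative IDF variant; and reciprocal rank fusion (RRF) as the merging technique, which is one widely used option rather than necessarily the one Anthropic used. The embedding ranking is passed in as a precomputed, hypothetical list of chunk indices.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"\w+", text.lower())


class BM25:
    """Minimal Okapi BM25 over an in-memory list of (contextualized) chunks."""

    def __init__(self, docs: list[str], k1: float = 1.5, b: float = 0.75):
        self.k1, self.b = k1, b
        self.doc_tfs = [Counter(tokenize(d)) for d in docs]
        self.doc_lens = [sum(tf.values()) for tf in self.doc_tfs]
        self.avgdl = sum(self.doc_lens) / len(docs) if docs else 0.0
        n = len(docs)
        df = Counter(term for tf in self.doc_tfs for term in tf)
        # Non-negative IDF variant: log(1 + (N - df + 0.5) / (df + 0.5)).
        self.idf = {t: math.log(1 + (n - f + 0.5) / (f + 0.5)) for t, f in df.items()}

    def score(self, query: str, i: int) -> float:
        tf, dl = self.doc_tfs[i], self.doc_lens[i]
        total = 0.0
        for term in tokenize(query):
            f = tf.get(term, 0)
            if f == 0:
                continue
            # Length normalization: longer-than-average chunks are penalized.
            norm = self.k1 * (1 - self.b + self.b * dl / self.avgdl)
            total += self.idf[term] * f * (self.k1 + 1) / (f + norm)
        return total

    def rank(self, query: str) -> list[int]:
        scores = [self.score(query, i) for i in range(len(self.doc_tfs))]
        return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)


def reciprocal_rank_fusion(rankings: list[list[int]], k: int = 60) -> list[int]:
    """Merge several rankings of chunk indices: each list adds 1 / (k + rank)."""
    fused: Counter = Counter()
    for ranking in rankings:
        for rank, doc in enumerate(ranking, start=1):
            fused[doc] += 1.0 / (k + rank)
    return [doc for doc, _ in fused.most_common()]


chunks = [
    "ACME Corp Q2 2023 SEC filing. The company's revenue grew by 3% over the previous quarter.",
    "Error code TS-999 indicates a timeout in the payment service.",
    "Globex Q2 2023 filing. Revenue declined by 2%.",
]
bm25 = BM25(chunks)
print(bm25.rank("error TS-999"))          # the exact-ID chunk ranks first
dense_ranking = [0, 2, 1]                  # hypothetical embedding-model ranking
print(reciprocal_rank_fusion([bm25.rank("ACME revenue growth"), dense_ranking]))
```

RRF only looks at rank positions, so it sidesteps the fact that BM25 scores and cosine similarities live on different scales; a weighted sum of normalized scores is a common alternative.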

For smaller knowledge bases, where the whole dataset fits into the AI model’s context window, a simpler approach such as placing the entire knowledge base in the prompt may be enough. Larger knowledge bases, however, call for techniques like Contextual Retrieval, which scales to collections far larger than a single prompt can hold while also improving retrieval accuracy significantly.

Adding a reranking step can improve Contextual Retrieval’s performance even further. Reranking takes the candidate chunks returned by the initial retrieval, re-scores them by their relevance to the user’s query, and keeps only the highest-scoring ones. Because the AI model then processes only the most relevant data, this step improves responses and reduces cost and latency. In tests, combining Contextual Retrieval with reranking lowered the top-20-chunk retrieval failure rate by 67%.
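A minimal sketch of the reranking step and of one way to measure a top-k retrieval failure rate. Assumptions: the reranker is abstracted as a hypothetical `relevance(query, chunk) -> float` callable (in practice a dedicated reranking model such as a cross-encoder; `overlap_score` below is only a toy stand-in), and the failure rate is computed as 1 minus recall@k, i.e. the share of relevant chunks that do not appear in the top k results.

```python
from typing import Callable


def rerank(
    query: str,
    candidates: list[str],
    relevance: Callable[[str, str], float],  # hypothetical (query, chunk) scorer
    top_k: int = 20,
) -> list[str]:
    """Re-score first-stage candidates and keep only the best top_k."""
    return sorted(candidates, key=lambda c: relevance(query, c), reverse=True)[:top_k]


def overlap_score(query: str, chunk: str) -> float:
    # Toy stand-in for a real reranking model: share of query words found in the chunk.
    q, c = set(query.lower().split()), set(chunk.lower().split())
    return len(q & c) / (len(q) or 1)


def retrieval_failure_rate(
    retrieved: list[list[str]], relevant: list[set[str]], k: int = 20
) -> float:
    """1 minus recall@k: fraction of relevant chunks missing from each query's top k."""
    missed = sum(len(gold - set(got[:k])) for got, gold in zip(retrieved, relevant))
    total = sum(len(gold) for gold in relevant)
    return missed / total if total else 0.0


candidates = [
    "Globex revenue declined.",
    "ACME Corp revenue grew 3% in Q2 2023.",
    "Payment timeouts.",
]
print(rerank("acme revenue q2 2023", candidates, overlap_score, top_k=2))
```

Reranking scores every candidate against the query, so it adds a model call per candidate; keeping the first-stage candidate pool modest and `top_k` small is the usual way to trade a little recall for lower latency and cost.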

In conclusion, Contextual Retrieval is a major improvement in the retrieval accuracy of AI systems, especially in specialized settings where precise information retrieval is essential. Combining Contextual Embeddings, Contextual BM25, and reranking can yield significant gains in retrieval accuracy and in overall AI performance.

The post Contextual Retrieval: An Advanced AI Technique that Reduces Incorrect Chunk Retrieval Rates by up to 67% appeared first on MarkTechPost.
