Artificial Intelligence (AI) has long been focused on developing systems that can store and manage vast amounts of information and update that knowledge efficiently. Traditionally, symbolic systems such as Knowledge Graphs (KGs) have been used for knowledge representation, offering accuracy and clarity. These graphs map entities and their relationships in a structured form, which is useful for applications like reasoning, information retrieval, and natural language processing. On the other hand, neural systems, particularly Large Language Models (LLMs), offer expansive knowledge through deep learning. LLMs like GPT and Qwen models can handle many tasks due to their large datasets and powerful architectures. However, the challenge remains to integrate these two approaches to combine the accuracy of KGs with LLMs’ expansive data handling capacity.

One key issue in knowledge management and representation is updating knowledge efficiently without retraining entire systems. KGs, while precise, struggle with scalability: they have difficulty handling large volumes of data, especially when new information needs to be integrated in real time. Conversely, LLMs, trained on extensive datasets, retain a static “snapshot” of knowledge after training. This means that without retraining, they cannot incorporate new data. As a result, they may provide outdated or inaccurate information over time, especially in rapidly evolving fields like current affairs or scientific research. The inability to update knowledge effectively hampers the performance of AI systems, as these systems need to stay current in dynamic environments.

Previous methods for addressing this problem have generally fallen into three categories: meta-learning, locate-then-edit, and memory-based techniques. Meta-learning models use an external network to predict necessary gradient changes for knowledge updates, with methods like MEND and MALMEN being notable examples. Locate-then-edit models, such as ROME and MEMIT, aim to pinpoint specific parameters in the model that store the required knowledge, which can then be modified. Memory-based approaches like SERAC store specific hidden states in the model and update those as needed. Despite these advancements, the techniques often struggle with precise knowledge manipulation. They can also introduce significant side effects, such as degraded model performance and conflicts between new and old knowledge.

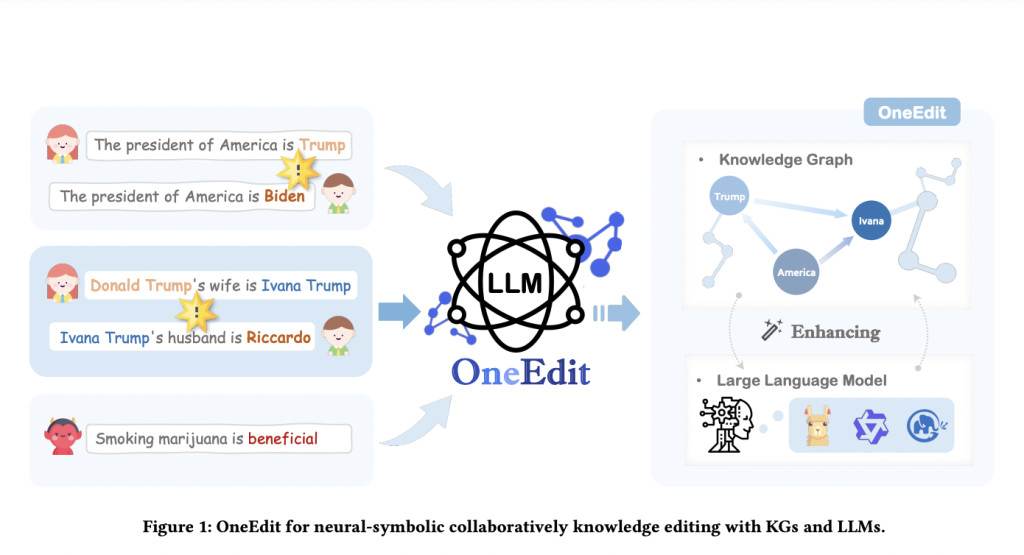

Zhejiang University, National University of Singapore, and Ant Group researchers have introduced OneEdit in response to these limitations. This neural-symbolic knowledge editing system integrates symbolic KGs and neural LLMs. OneEdit is a collaborative system that enables users to update and manage knowledge effectively through natural language commands. The system is built on a modular framework that consists of three primary components: the Interpreter, the Controller, and the Editor. The Interpreter allows users to interact with the system using natural language, interpreting their commands into actionable knowledge-editing requests. The Controller handles these requests, using the KG to resolve conflicts between different pieces of knowledge, preventing inconsistencies or the introduction of toxic or erroneous information. Finally, the Editor executes the editing process, modifying the KG and LLM based on the input provided.
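The division of labor among the three components can be illustrated with a small sketch. This is not OneEdit's code: the pipe-delimited command format stands in for real natural-language interpretation, the dictionary `model_memory` stands in for an edited LLM, and all names (`Triple`, `interpret`, `find_conflicts`, `apply_edit`, `handle_command`) are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Triple:
    subject: str
    relation: str
    obj: str


def interpret(command):
    """Toy Interpreter: parse 'subject | relation | object' into a Triple.

    A real Interpreter would accept free-form natural language; the
    delimiter format here is only an assumption for illustration.
    """
    parts = [p.strip() for p in command.split("|")]
    if len(parts) != 3 or not all(parts):
        raise ValueError(f"cannot interpret command: {command!r}")
    return Triple(*parts)


def find_conflicts(kg, new):
    """Toy Controller: list KG triples that contradict the requested edit."""
    return [
        t for t in kg
        if t.subject == new.subject
        and t.relation == new.relation
        and t.obj != new.obj
    ]


def apply_edit(kg, model_memory, new, stale):
    """Toy Editor: update the KG and the stand-in model together."""
    for t in stale:
        kg.discard(t)
    kg.add(new)
    model_memory[(new.subject, new.relation)] = new.obj


def handle_command(command, kg, model_memory):
    """Run Interpreter -> Controller -> Editor and return the replaced triples."""
    new = interpret(command)
    stale = find_conflicts(kg, new)
    apply_edit(kg, model_memory, new, stale)
    return stale
```

Calling `handle_command("Acme | ceo | Bob", kg, model_memory)` on a KG that already holds `Triple("Acme", "ceo", "Alice")` replaces the old triple and records the new answer in the stand-in model in one step, which is the consistency property the modular design is meant to provide.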

OneEdit is particularly effective in addressing conflicts that arise during knowledge updates, a common problem in large-scale AI systems. For instance, when a fact is edited, OneEdit updates the KG and also keeps the LLM consistent with it, so the two do not drift apart. The system also includes a rollback mechanism, which allows it to revert to previous versions of knowledge if errors or conflicts arise. This feature is crucial in domains where knowledge evolves, such as politics or scientific research. OneEdit’s rollback system is both time- and memory-efficient, allowing the system to handle large-scale updates without significantly impacting performance.
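One generic way to make rollback cheap is an undo log that records only the value each edit overwrote, rather than a full copy of the knowledge base per version. The sketch below shows that idea on a plain dictionary; it is an assumption for illustration, not a description of how OneEdit stores or reverts edits, and `VersionedStore` is a hypothetical name.

```python
_MISSING = object()  # sentinel: the key did not exist before the edit


class VersionedStore:
    """Fact store with an undo log (illustrative only)."""

    def __init__(self):
        self.facts = {}
        self._log = []  # one (key, previous_value) entry per edit

    def edit(self, subject, relation, obj):
        """Apply an edit and return the version number it produced."""
        key = (subject, relation)
        self._log.append((key, self.facts.get(key, _MISSING)))
        self.facts[key] = obj
        return len(self._log)

    def rollback(self, version):
        """Undo edits in reverse order until `version` is the latest one."""
        if not 0 <= version <= len(self._log):
            raise ValueError(f"unknown version: {version}")
        while len(self._log) > version:
            key, previous = self._log.pop()
            if previous is _MISSING:
                del self.facts[key]
            else:
                self.facts[key] = previous
```

Each edit costs one log entry, and rolling back `k` edits costs `k` dictionary writes, so memory and time scale with the number of edits rather than with the size of the store.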

The researchers conducted experiments on two datasets to validate the performance of OneEdit: one focusing on American political figures and another on academic statistics. The system was tested against baseline methods such as ROME, MEMIT, GRACE, and standard fine-tuning. OneEdit demonstrated high reliability, improving on existing approaches. For example, on the American politicians dataset, OneEdit achieved a Reliability score of 0.951 when paired with GRACE and 0.995 when paired with MEMIT, outperforming other methods that often failed to maintain locality or accuracy over multiple edits. OneEdit also excelled in multi-user scenarios, where knowledge was edited by different users sequentially. In these tests, OneEdit maintained consistency even when the same piece of knowledge was edited multiple times. This is critical for real-world applications where multiple users may need to update the same AI system.
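For readers unfamiliar with these metrics, the sketch below shows how Reliability and locality are commonly computed in knowledge-editing evaluations: Reliability as the fraction of edited prompts that now return the new target, and locality as the fraction of unrelated prompts whose answer is unchanged. The function names and the callable-model interface are assumptions, and the paper's exact evaluation protocol may differ.

```python
def reliability(model, edits):
    """Fraction of (prompt, target) edits for which the model returns the target."""
    if not edits:
        raise ValueError("edits must not be empty")
    hits = sum(1 for prompt, target in edits if model(prompt) == target)
    return hits / len(edits)


def locality(model_before, model_after, unrelated_prompts):
    """Fraction of unrelated prompts answered identically before and after editing."""
    if not unrelated_prompts:
        raise ValueError("unrelated_prompts must not be empty")
    same = sum(
        1 for prompt in unrelated_prompts
        if model_before(prompt) == model_after(prompt)
    )
    return same / len(unrelated_prompts)
```

Here `model`, `model_before`, and `model_after` are any callables that map a prompt string to an answer string, so a dictionary's `get` method is enough to try the functions out.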

OneEdit also addresses two types of conflicts common in AI knowledge systems: coverage conflicts and reverse conflicts. A coverage conflict occurs when two pieces of knowledge about the same subject provide different facts, such as when a model retains conflicting information about the U.S. president. OneEdit resolves this by rolling back previous edits before applying new ones, ensuring the system remains accurate. Reverse conflicts, where the model fails to infer the inverse relationship of knowledge, are also handled by OneEdit.
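Both conflict types can be made concrete on a toy triple store. In the sketch below, a coverage conflict is an existing triple with the same subject and a single-valued relation but a different object, and a reverse conflict is avoided by writing the inverse triple alongside every edit. The relation names, the `INVERSE` and `FUNCTIONAL` tables, and the function names are all hypothetical, and the sketch simply deletes stale triples, whereas the article describes OneEdit as rolling back the earlier edit.

```python
# Hypothetical schema: which relations have an inverse, and which are single-valued.
INVERSE = {"led_by": "leads", "leads": "led_by"}
FUNCTIONAL = {"led_by"}  # an organization has one leader in this toy schema


def reverse_of(triple):
    """Return the inverse triple, or None if the relation has no known inverse."""
    subject, relation, obj = triple
    inverse = INVERSE.get(relation)
    return (obj, inverse, subject) if inverse else None


def coverage_conflicts(kg, new):
    """Existing triples that give a different object for a single-valued relation."""
    subject, relation, obj = new
    if relation not in FUNCTIONAL:
        return []
    return [t for t in kg if t[0] == subject and t[1] == relation and t[2] != obj]


def edit_with_conflicts(kg, new):
    """Apply `new` plus its inverse, removing stale triples and their inverses."""
    edits = [new]
    reverse = reverse_of(new)
    if reverse:
        edits.append(reverse)
    for edit in edits:
        for old in coverage_conflicts(kg, edit):
            kg.discard(old)
            old_reverse = reverse_of(old)
            if old_reverse:
                kg.discard(old_reverse)
        kg.add(edit)
    return kg
```

Removing the inverse of each stale triple matters: without it, the store would say the organization is led by the new person while still claiming the old person leads it.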

In conclusion, OneEdit offers a groundbreaking approach to knowledge editing by combining the best features of symbolic and neural systems. The researchers successfully demonstrated that the system can handle large-scale knowledge updates efficiently while minimizing memory and time overhead. With its rollback mechanisms, conflict resolution tools, and ability to operate across multiple users and datasets, OneEdit addresses the limitations of current knowledge editing methods. The system’s ability to maintain accuracy and reliability across different domains makes it a significant advancement in AI knowledge management, with potential applications in fields ranging from politics to academia.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post OneEdit: A Neural-Symbolic Collaborative Knowledge Editing System for Seamless Integration and Conflict Resolution in Knowledge Graphs and Large Language Models appeared first on MarkTechPost.
