Cognitive psychology aims to understand how humans process, store, and recall information, with Kahneman’s dual-system theory providing an important framework. This theory distinguishes between System 1, which operates intuitively and rapidly, and System 2, which involves deliberate and complex reasoning. Language models (LMs), especially those using Transformer architectures like GPT-4, have made significant progress in artificial intelligence. However, a major challenge is determining whether LMs can consistently generate efficient and accurate outputs without explicit prompting for chain-of-thought (CoT) reasoning. Such an ability would indicate the development of an intuitive process similar to human System 1 thinking.

Several attempts have been made to enhance LMs’ reasoning abilities. CoT prompting has been a popular method, which helps models break down complex problems into smaller steps. However, this approach needs explicit prompting and can be resource-intensive. Other approaches have focused on fine-tuning models with additional training data or specialized datasets, but these methods do not completely overcome the challenge of developing intuitive reasoning capabilities. The goal remains to create models that can generate fast, accurate responses without relying on extensive prompting or additional training data.

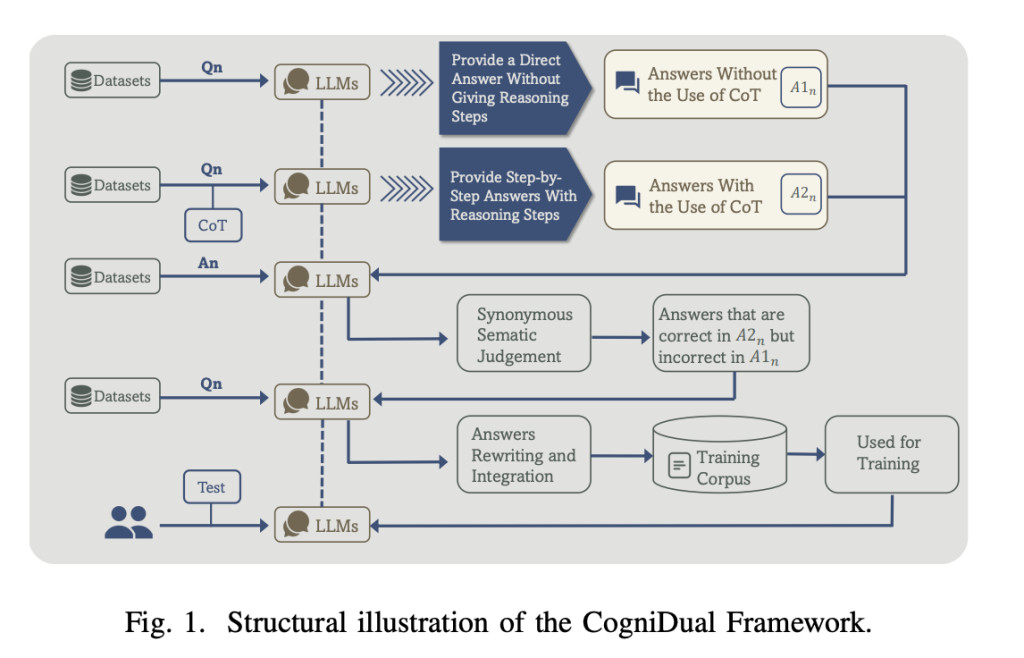

Researchers from Shanghai University of Engineering Science, INF Technology (Shanghai) Co., Ltd., Monash University, Melbourne, Australia, and Fudan University, Shanghai, have proposed the CogniDual Framework for LLMs (CFLLMs). This approach investigates whether language models can evolve from deliberate reasoning to intuitive responses through self-training, mirroring human cognitive development. CFLLMs shed light on the cognitive mechanisms behind LLMs’ response generation and offer a practical benefit by reducing computational demands during inference. Moreover, the researchers found significant differences in response accuracy between CoT and non-CoT approaches.

The proposed method is designed to investigate five key questions about the cognitive and reasoning capabilities of language models like Llama2. The experiments are conducted to determine if these models exhibit characteristics similar to the human dual-system cognitive framework and whether self-practice without Chain of Thought (CoT) guidance can improve their reasoning abilities. Moreover, the experiment investigates if the improved reasoning abilities generalize across different reasoning tasks. This detailed approach provides an in-depth evaluation of how well LLMs can develop intuitive reasoning, similar to human cognition.
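The comparison between CoT and non-CoT answering, and the idea of practicing without CoT, can be illustrated with a minimal sketch. This is one possible reading, not the authors’ implementation: the prompt suffixes, the `toy_model` stand-in, and the rule of keeping only questions where the two modes disagree are all assumptions made for illustration.

```python
from typing import Callable, Dict, List

# Hypothetical interface: takes a prompt, returns the model's final answer.
AnswerFn = Callable[[str], str]

COT_SUFFIX = "\nLet's think step by step."  # assumed CoT trigger phrase
DIRECT_SUFFIX = "\nAnswer directly."        # assumed no-CoT instruction


def accuracy(answer_fn: AnswerFn, questions: List[str],
             gold: List[str], suffix: str) -> float:
    """Fraction of questions answered correctly under one prompting mode."""
    if not questions:
        raise ValueError("questions must not be empty")
    correct = sum(answer_fn(q + suffix) == g for q, g in zip(questions, gold))
    return correct / len(questions)


def build_self_training_pairs(answer_fn: AnswerFn,
                              questions: List[str]) -> List[Dict[str, str]]:
    """Collect (direct prompt, CoT answer) pairs where the two modes disagree.

    Assumption: the model's own CoT answer serves as the training target,
    so no gold labels are needed at this stage.
    """
    pairs = []
    for q in questions:
        slow = answer_fn(q + COT_SUFFIX)     # System 2-like answer
        fast = answer_fn(q + DIRECT_SUFFIX)  # System 1-like answer
        if slow != fast:
            pairs.append({"prompt": q + DIRECT_SUFFIX, "target": slow})
    return pairs


# Toy stand-in for a real LLM: correct with CoT, always "A" without it.
TOY_GOLD = {"Q1": "B", "Q2": "A"}


def toy_model(prompt: str) -> str:
    question = prompt.split("\n")[0]
    return TOY_GOLD[question] if prompt.endswith(COT_SUFFIX) else "A"
```

With a real model, `answer_fn` would wrap an inference call and answer extraction, and the collected pairs would feed a fine-tuning step. Comparing `accuracy` with `DIRECT_SUFFIX` before and after that step gives a rough measure of how much reasoning ability carried over to direct answering.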

The CFLLMs demonstrated substantial performance improvements without Chain of Thought (CoT) prompting, especially on tasks that involve natural language inference. For example, on the LogiQA2.0 dataset, smaller models like Llama2-7B and Vicuna-7B demonstrated enhancements in accuracy without CoT after applying the framework. This indicates the potential for transforming System 2 capabilities into System 1-like intuitive responses through practice. However, the framework showed minimal improvement on the GSM8K dataset due to task contamination during training. In general, larger models needed fewer examples to reach their System 1 capacity, showing their greater ability to use limited data for improvement.

In conclusion, the researchers introduced the CogniDual Framework for LLMs (CFLLMs), an approach for investigating whether language models can evolve from slower, deliberate reasoning to intuitive responses. The experimental results demonstrate that LLMs can maintain enhanced problem-solving abilities after self-training without explicit CoT prompts. This supports the hypothesis that LLMs can transform System 2 reasoning into more intuitive System 1-like responses with the help of appropriate training. Future efforts should address current limitations and explore how CFLLMs affect the cognitive processing preferences of LLMs, aiming to develop more efficient and intuitive AI systems.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post CogniDual Framework for LLMs: Advancing Language Models from Deliberate Reasoning to Intuitive Responses Through Self-Training appeared first on MarkTechPost.
