News & Updates

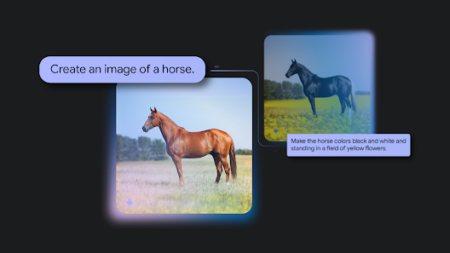

Native image output is available in Gemini 2.0 Flash for developers to experiment with in Google AI Studio and the Gemini API.

The most capable model you can run on a single GPU or TPU.

Introducing Gemini Robotics and Gemini Robotics-ER, AI models designed for robots to understand, act and react to the physical world.

Training Diffusion Models with Reinforcement Learning

We deployed 100 reinforcement learning (RL)-controlled cars into rush-hour highway traffic to smooth congestion and reduce fuel consumption for everyone. Our goal is to tackle “stop-and-go” waves, those frustrating slowdowns and speedups that usually have no clear cause but lead to congestion and significant…

Artificial Intelligence

PLAID is a multimodal generative model that simultaneously generates protein 1D sequence and 3D structure,…

Recent advances in Large Language Models (LLMs) enable exciting LLM-integrated applications. However, as LLMs have…

A new security robot at Northwood University seems more obsessed with tidiness than safety. When…

My life officially became a horror movie the day we moved to Maple Street. And…