News & Updates

Artificial Intelligence

PLAID is a multimodal generative model that simultaneously generates protein 1D sequence and 3D structure,…

Training Diffusion Models with Reinforcement Learning

We deployed 100 reinforcement learning (RL)-controlled cars into rush-hour…

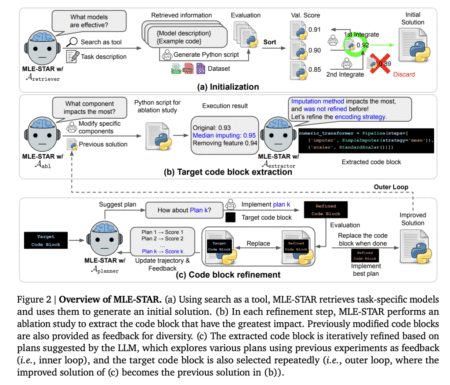

Recent advances in Large Language Models (LLMs) enable exciting LLM-integrated applications. However, as LLMs have…

In order to produce effective targeted therapies for cancer, scientists need to isolate the genetic…