Multiobjective optimization (MOO) is pivotal in machine learning, enabling researchers to balance multiple conflicting objectives in real-world applications such as robotics, fair classification, and recommendation systems. In these fields, practitioners must manage trade-offs between performance metrics, such as speed versus energy efficiency in robotics or fairness versus accuracy in classification models. Such problems call for optimization techniques that address several objectives simultaneously, so that no factor is overlooked in the decision-making process.

A significant problem in multiobjective optimization is the lack of scalable methods that can efficiently handle large models with millions of parameters. Traditional approaches, particularly evolutionary algorithms, are useful in certain scenarios but struggle when applied to large-scale machine learning problems. These methods generally do not exploit gradient information, which is critical for optimizing complex models. Without gradients, the computational burden increases sharply, making problems involving deep neural networks or other large models practically intractable.
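To make the cost argument concrete, here is a minimal sketch in plain Python (not LibMOON code) of estimating a gradient from function values alone using forward differences. The names `forward_difference_grad` and `sphere` are illustrative and introduced here. Each gradient estimate needs one extra objective evaluation per parameter.

```python
from typing import Callable, List, Tuple


def forward_difference_grad(
    f: Callable[[List[float]], float], x: List[float], h: float = 1e-6
) -> Tuple[List[float], int]:
    """Estimate the gradient of f at x using only function values.

    Returns the estimated gradient and the number of calls made to f.
    """
    fx = f(x)
    calls = 1
    grad = []
    for i in range(len(x)):
        x_step = list(x)  # copy so the caller's x is not modified
        x_step[i] += h
        grad.append((f(x_step) - fx) / h)
        calls += 1
    return grad, calls


def sphere(x: List[float]) -> float:
    """Toy objective sum(x_i^2); its exact gradient is 2 * x."""
    return sum(v * v for v in x)


x0 = [0.5, -1.0, 2.0, 0.0, 1.5]
grad, n_calls = forward_difference_grad(sphere, x0)
print(n_calls)  # 6: one baseline call plus one per parameter
print([round(g, 3) for g in grad])
```

With five parameters the estimate costs six evaluations; for a network with a million parameters it would cost more than a million forward passes per step. Reverse-mode automatic differentiation, as used by PyTorch, instead obtains the exact gradient for a small constant multiple of the cost of one forward pass.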

Currently, the most widely used MOO methods are evolutionary algorithms, such as those implemented in libraries like PlatEMO, Pymoo, and jMetal. These approaches are designed to explore diverse solutions, but they are limited by their zeroth-order nature: they generate and evaluate many candidate solutions without making effective use of gradient information. This inefficiency makes them less suitable for modern machine learning tasks that require fast, scalable optimization, and it highlights the need for a gradient-based alternative capable of handling the complexity of current machine learning models.
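The sketch below illustrates what "zeroth-order" means in practice: candidates are sampled, only their objective values are computed, and a Pareto-dominance filter keeps the non-dominated ones. It is a deliberately minimal random-search baseline, not an evolutionary algorithm from PlatEMO, Pymoo, or jMetal (those add variation operators and selection). The benchmark is VLMOP2, whose standard definition is used here; the function names and sample sizes are illustrative assumptions.

```python
import math
import random
from typing import List, Sequence, Tuple


def vlmop2(x: Sequence[float]) -> Tuple[float, float]:
    """VLMOP2 benchmark: two objectives to minimize, usually with x_i in [-2, 2]."""
    c = 1.0 / math.sqrt(len(x))
    f1 = 1.0 - math.exp(-sum((xi - c) ** 2 for xi in x))
    f2 = 1.0 - math.exp(-sum((xi + c) ** 2 for xi in x))
    return f1, f2


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if a is no worse than b in every objective and strictly better in one."""
    return all(ai <= bi for ai, bi in zip(a, b)) and any(ai < bi for ai, bi in zip(a, b))


def nondominated(points: List[Tuple[float, float]]) -> List[Tuple[float, float]]:
    """Keep the points that no other point dominates (simple O(N^2) filter)."""
    return [
        p
        for i, p in enumerate(points)
        if not any(dominates(q, p) for j, q in enumerate(points) if j != i)
    ]


def random_search(n_var: int = 2, n_samples: int = 500, seed: int = 0):
    """Zeroth-order baseline: sample, evaluate, and keep the nondominated objective vectors."""
    rng = random.Random(seed)
    samples = [[rng.uniform(-2.0, 2.0) for _ in range(n_var)] for _ in range(n_samples)]
    objectives = [vlmop2(x) for x in samples]  # only function values, no gradients
    return nondominated(objectives)


front = random_search()
print(f"{len(front)} nondominated points from 500 evaluations")
```

All 500 evaluations are spent without any directional information. A real MOEA uses the evaluations more cleverly, but it still relies only on function values, which is the limitation the paragraph above describes.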

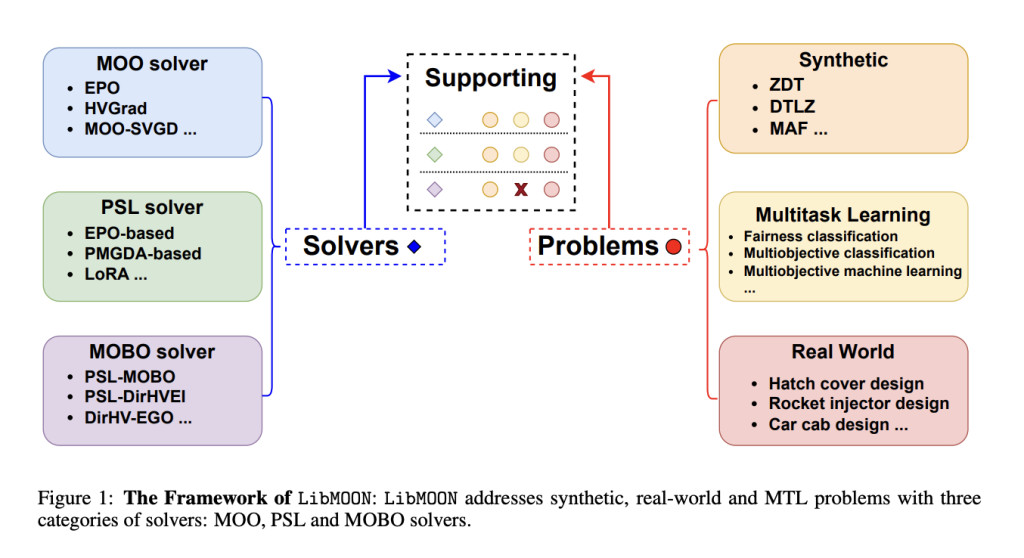

A research team from the City University of Hong Kong, SUSTech, HKBU, and UIUC introduced LibMOON, a library that fills this gap by providing a gradient-based multiobjective optimization framework. Implemented in PyTorch, LibMOON is designed to optimize large-scale machine learning models more effectively than previous methods. The library supports more than twenty state-of-the-art optimization methods and offers GPU acceleration, making it efficient for large-scale tasks. The researchers emphasize that LibMOON supports both synthetic and real-world multiobjective problems and enables extensive benchmarking, giving researchers a reliable platform for comparison and development.

The core of LibMOON’s functionality lies in three categories of solvers: multiobjective optimization (MOO) solvers, Pareto set learning (PSL) solvers, and multiobjective Bayesian optimization (MOBO) solvers. Each category is modular and allows new methods to be integrated easily, which makes LibMOON highly adaptable. MOO solvers find a finite set of Pareto optimal solutions, that is, solutions for which no objective can be improved without worsening at least one other. PSL solvers, in contrast, aim to represent the entire Pareto set with a single neural model. This makes PSL particularly useful for models with millions of parameters: rather than searching for many solutions one by one, it learns the whole set of Pareto optimal solutions at once. MOBO solvers target expensive problems in which each evaluation of the objective functions is costly. They use Bayesian optimization to reduce the number of function evaluations, making them well suited to real-world applications with limited computational resources.
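To illustrate how an aggregation-based MOO solver can produce a finite set of Pareto optimal solutions, the sketch below assigns one preference vector to each solution and runs plain gradient descent on a scalarized objective. The scalarization is the smooth Tchebycheff function, mu * log(sum_i exp(lambda_i * (f_i - z_i) / mu)), applied to VLMOP2 with hand-derived gradients. The function names, step size, smoothing parameter `mu`, and ideal point `z = (0, 0)` are assumptions for illustration. This is not LibMOON’s API, and LibMOON itself computes gradients with PyTorch.

```python
import math
from typing import List, Sequence, Tuple


def vlmop2_with_grads(x: Sequence[float]) -> Tuple[List[float], List[List[float]]]:
    """VLMOP2 objectives (minimized) and their analytic gradients with respect to x."""
    c = 1.0 / math.sqrt(len(x))
    s1 = sum((xi - c) ** 2 for xi in x)
    s2 = sum((xi + c) ** 2 for xi in x)
    f = [1.0 - math.exp(-s1), 1.0 - math.exp(-s2)]
    grads = [
        [2.0 * (xi - c) * math.exp(-s1) for xi in x],
        [2.0 * (xi + c) * math.exp(-s2) for xi in x],
    ]
    return f, grads


def smooth_tchebycheff(f, pref, ideal, mu):
    """Return the smooth Tchebycheff value and d(value)/d(f_i) for each objective."""
    a = [p * (fi - zi) / mu for p, fi, zi in zip(pref, f, ideal)]
    m = max(a)  # shift for a numerically stable log-sum-exp
    exps = [math.exp(ai - m) for ai in a]
    total = sum(exps)
    value = mu * (m + math.log(total))
    dvalue_df = [p * e / total for p, e in zip(pref, exps)]  # softmax weight * preference
    return value, dvalue_df


def solve_for_preference(pref, x0, ideal=(0.0, 0.0), mu=0.1, lr=0.1, steps=2000):
    """Gradient descent on the smooth Tchebycheff aggregation for one preference vector."""
    x = list(x0)
    for _ in range(steps):
        f, grads = vlmop2_with_grads(x)
        _, dv_df = smooth_tchebycheff(f, pref, ideal, mu)
        # Chain rule: d(value)/dx_k = sum_i d(value)/df_i * df_i/dx_k
        g = [sum(dv_df[i] * grads[i][k] for i in range(len(f))) for k in range(len(x))]
        x = [xk - lr * gk for xk, gk in zip(x, g)]
    return x


prefs = [(0.9, 0.1), (0.7, 0.3), (0.5, 0.5), (0.3, 0.7), (0.1, 0.9)]
x_start = [0.2, -0.3]
solutions = [solve_for_preference(p, x_start) for p in prefs]
for p, x in zip(prefs, solutions):
    f, _ = vlmop2_with_grads(x)
    print(p, [round(v, 3) for v in f])
```

Each preference vector is solved independently, so the result is a finite set of points spread along the Pareto front (for two-variable VLMOP2 they land on the diagonal x1 = x2). A PSL solver would instead train one model that maps any preference vector to a solution.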

LibMOON performs strongly across a range of optimization problems. Hypervolume (HV) measures the region of objective space dominated by a solution set and bounded by a reference point, so a higher HV indicates a front that is both closer to optimal and more spread out. On synthetic problems such as VLMOP2, LibMOON’s gradient-based solvers achieved higher HV scores than traditional evolutionary approaches, indicating a better ability to explore the solution space. Methods such as Agg-COSMOS and Agg-SmoothTche achieved high hypervolume values, with Agg-COSMOS reaching an HV of up to 0.752. LibMOON’s PSL methods also proved effective on multi-task learning problems, efficiently learning the entire Pareto front. In one test, the PSL method with the smooth Tchebycheff function found diverse Pareto solutions even for problems with highly non-convex Pareto fronts. The study also showed that LibMOON’s MOO solvers reduced computational costs while maintaining high optimization quality, outperforming traditional multiobjective evolutionary algorithm (MOEA) libraries.
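The hypervolume scores above depend on how HV is computed. For two minimized objectives it reduces to a sweep over the points sorted by the first objective, as in the sketch below. The function name and example points are illustrative; the reference point LibMOON used for its reported 0.752 is not stated in the article.

```python
from typing import Iterable, Sequence, Tuple


def hypervolume_2d(points: Iterable[Sequence[float]], ref: Tuple[float, float]) -> float:
    """Area dominated by `points` and bounded by `ref` (both objectives minimized)."""
    # Ignore points that do not strictly dominate the reference point.
    pts = sorted((p[0], p[1]) for p in points if p[0] < ref[0] and p[1] < ref[1])
    volume = 0.0
    best_f2 = ref[1]
    for f1, f2 in pts:  # sweep left to right in f1
        if f2 < best_f2:  # dominated points add nothing and are skipped
            volume += (ref[0] - f1) * (best_f2 - f2)
            best_f2 = f2
    return volume


front = [(0.1, 0.9), (0.5, 0.5), (0.9, 0.1)]
print(hypervolume_2d(front, ref=(1.0, 1.0)))
print(hypervolume_2d(front + [(0.6, 0.6)], ref=(1.0, 1.0)))  # dominated point: same value
```

Because HV changes with the reference point and with any normalization of the objectives, a score such as 0.752 is only comparable across methods evaluated under the same settings.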

The library also supports real-world applications such as fairness classification and other multiobjective machine learning tasks. In these tests, LibMOON’s MOO and PSL solvers outperformed existing methods, achieving higher hypervolume and diversity metrics with lower computational times. For instance, in a multi-task learning scenario involving fairness classification, LibMOON’s solvers reduced cross-entropy loss while simultaneously balancing fairness metrics. Because fairness classification typically involves balancing conflicting objectives such as fairness and accuracy, these results further underline the effectiveness of LibMOON’s gradient-based methods. LibMOON also substantially reduced optimization time, completing certain tasks in nearly half the time required by other libraries such as Pymoo or jMetal.
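The article does not say which fairness metric LibMOON optimizes. As a hedged illustration, the sketch below computes two objectives a fairness-classification setup might hand to a MOO or PSL solver: mean binary cross-entropy (for accuracy) and the absolute difference in mean predicted score between two groups, one common surrogate for demographic parity. The data, group labels, and function names are hypothetical.

```python
import math
from typing import Sequence


def binary_cross_entropy(probs: Sequence[float], labels: Sequence[int], eps: float = 1e-12) -> float:
    """Mean binary cross-entropy; eps guards against log(0)."""
    total = 0.0
    for p, y in zip(probs, labels):
        total -= y * math.log(p + eps) + (1 - y) * math.log(1.0 - p + eps)
    return total / len(labels)


def demographic_parity_gap(probs: Sequence[float], groups: Sequence[int]) -> float:
    """Absolute difference in mean predicted score between group 0 and group 1."""
    g0 = [p for p, g in zip(probs, groups) if g == 0]
    g1 = [p for p, g in zip(probs, groups) if g == 1]
    return abs(sum(g0) / len(g0) - sum(g1) / len(g1))


labels = [1, 1, 0, 0, 1, 0]
groups = [0, 0, 0, 1, 1, 1]  # hypothetical sensitive attribute
accurate = [0.9, 0.8, 0.3, 0.2, 0.7, 0.1]  # tracks the labels closely
constant = [0.5] * 6  # ignores the input entirely

for name, probs in [("accurate", accurate), ("constant", constant)]:
    objectives = (binary_cross_entropy(probs, labels), demographic_parity_gap(probs, groups))
    print(name, [round(v, 3) for v in objectives])
```

Neither predictor dominates the other: the accurate one has lower loss but a larger gap, while the constant one has zero gap but higher loss. A multiobjective solver searches for the trade-off solutions between such extremes.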

In conclusion, LibMOON offers a robust, gradient-based approach to multiobjective optimization that addresses key limitations of existing methods. Its ability to scale efficiently to large machine learning models and provide accurate Pareto sets makes it a valuable tool for machine learning researchers. Its modular design, GPU acceleration, and extensive support for state-of-the-art methods position it to become a standard tool for multiobjective optimization. As machine learning tasks continue to grow in complexity, tools like LibMOON are likely to play a critical role in enabling more efficient, scalable, and precise optimization.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.

The post LibMOON: A Gradient-Based Multiobjective Optimization Library for Large-Scale Machine Learning appeared first on MarkTechPost.
