Amazon DynamoDB is a serverless, NoSQL, fully managed database with single-digit millisecond performance at any scale. Customers often ask for guidance on how to obtain the count of items in a table or within specific partitions (item collections). In this post, we explore several methods to achieve this, each tailored to different use cases, with a focus on balancing accuracy, performance, and cost.

Use-case overview

Imagine you need to estimate how many users are currently using your application, because tracking overall engagement is key to understanding your user base. Or perhaps you need to enforce a quota, ensuring that users stay within defined limits when creating resources, borrowing a book, or purchasing concert tickets. These are common scenarios where obtaining item counts in DynamoDB is useful.

Count of items in a table:

Using DescribeTable: This method provides a quick and cost-effective way to retrieve item counts, suitable for scenarios where immediate precision isn’t critical. It’s a simple solution, though the count is only updated approximately every six hours.

Using Scan: For those needing accurate counts, the Scan operation reads every item in the table. This method is more precise, but it is slower and consumes read capacity in proportion to the size of the table. A parallel scan can reduce the elapsed time, although it doesn’t reduce the capacity consumed.

Count of items related to a partition key:

Using Query: This approach is effective for counting items associated with a specific partition key, particularly in smaller collections. It offers a straightforward and efficient way to get counts without scanning the entire table.

Using DynamoDB Streams and AWS Lambda for near real-time counts: For more dynamic needs, combining DynamoDB Streams with AWS Lambda lets you maintain up-to-date counts as items are added or removed. This method suits applications that need near real-time accuracy at scale.

Using DynamoDB transactions for idempotent updates: This option keeps item counts consistent and accurate, particularly in applications that require atomic operations and strong consistency. It provides robust data integrity, but transactional writes consume twice the write capacity of standard writes, so expect higher cost.

Each method balances accuracy, latency, and cost differently. Understanding these trade-offs will help you choose the most appropriate approach for your application’s needs.

Count of items in a table

In this section, we discuss two methods for obtaining the total count of items in a table and the scenarios each one suits best.

Using DescribeTable

The DescribeTable API provides a quick way to get the count of items in a table, returned in the ItemCount field of the table description. This method offers a low-latency response and is free of charge. However, DynamoDB updates the item count only approximately every six hours. This method is ideal for large tables where immediate accuracy is not a critical requirement.
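A minimal Python sketch of this call, assuming a low-level client from the AWS SDK for Python (Boto3) is passed in; the helper name approximate_item_count is hypothetical.

```python
def approximate_item_count(client, table_name: str) -> int:
    """Return the approximate item count that DescribeTable reports.

    DynamoDB refreshes Table.ItemCount roughly every six hours, so treat the
    value as an estimate. `client` is assumed to be a low-level Boto3 DynamoDB
    client, for example boto3.client("dynamodb").
    """
    response = client.describe_table(TableName=table_name)
    return response["Table"]["ItemCount"]
```

For example, approximate_item_count(boto3.client("dynamodb"), "MyTable"), where MyTable is a placeholder. The same value is available from the AWS CLI with aws dynamodb describe-table --table-name MyTable --query "Table.ItemCount".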


Using Scan

You can use the Scan operation to count items in a table by reading every item. This can be done through the AWS Management Console, AWS SDK, or AWS Command Line Interface (AWS CLI). Passing the Select=COUNT parameter makes DynamoDB return only the count of items, not the items themselves, but it doesn’t reduce the read capacity the Scan consumes. Each Scan request reads at most 1 MB of data, so for larger tables you must follow LastEvaluatedKey across pages and add up the Count value from each page. Although this method provides accurate counts, it consumes read capacity units (RCUs) and can be slow and costly for larger tables, because you must read (scan) the entire dataset.
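A minimal Python sketch of a counting Scan, assuming a low-level Boto3 DynamoDB client is passed in; the helper name scan_item_count is hypothetical. It shows the pagination step: each page reports its own Count, and the loop continues until LastEvaluatedKey is absent.

```python
def scan_item_count(client, table_name: str) -> int:
    """Count every item in a table with Scan and Select=COUNT."""
    kwargs = {"TableName": table_name, "Select": "COUNT"}
    total = 0
    while True:
        page = client.scan(**kwargs)
        # Count covers only this page (at most 1 MB of data read).
        total += page["Count"]
        last_key = page.get("LastEvaluatedKey")
        if not last_key:
            return total
        # Resume the next page where this one stopped.
        kwargs["ExclusiveStartKey"] = last_key
```

Boto3 also offers a built-in paginator, client.get_paginator("scan"), which can replace the manual loop.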


When dealing with very large tables, the standard Scan operation might be slow, because it processes items sequentially. To speed up the Scan process, you can use a parallel scan. A parallel scan divides the table into multiple segments and scans each segment concurrently. This can significantly reduce the time it takes to count all items in a large table.

The parallel scan process includes the following components:

Segments – The table is divided into multiple segments. Each segment can be scanned independently and in parallel.

Workers – Each segment is processed by a separate worker (a separate thread, process, or instance).

Aggregation – The counts from each segment are aggregated to get the total item count.

Complete the following steps to perform a parallel scan:

Determine the number of segments (workers) you will use. A common choice is the number of CPU cores available.

Initiate multiple Scan operations concurrently, each scanning a different segment of the table.

Aggregate the counts from all segments to get the total count.
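The three steps above can be sketched in Python with a thread pool, assuming a low-level Boto3 DynamoDB client (Boto3 documents low-level clients as thread-safe, so one client is shared by all workers). The function names are hypothetical, and the segment count defaults to the CPU count, as in the first step.

```python
import os
from concurrent.futures import ThreadPoolExecutor
from typing import Optional


def count_segment(client, table_name: str, segment: int, total_segments: int) -> int:
    """Count the items in one segment, following pagination within that segment."""
    kwargs = {
        "TableName": table_name,
        "Select": "COUNT",
        "Segment": segment,
        "TotalSegments": total_segments,
    }
    total = 0
    while True:
        page = client.scan(**kwargs)
        total += page["Count"]
        if "LastEvaluatedKey" not in page:
            return total
        kwargs["ExclusiveStartKey"] = page["LastEvaluatedKey"]


def parallel_scan_item_count(client, table_name: str, total_segments: Optional[int] = None) -> int:
    # Step 1: choose the number of segments; os.cpu_count() can return None, so fall back to 4.
    total_segments = total_segments or os.cpu_count() or 4
    with ThreadPoolExecutor(max_workers=total_segments) as pool:
        # Step 2: scan every segment concurrently, one worker thread per segment.
        futures = [
            pool.submit(count_segment, client, table_name, segment, total_segments)
            for segment in range(total_segments)
        ]
        # Step 3: aggregate the per-segment counts into the table total.
        return sum(future.result() for future in futures)
```

Parallelism shortens the elapsed time, but the total read capacity consumed is the same as for a sequential Scan.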


A Scan is not a point-in-time snapshot: items added or removed while the Scan is in progress may or may not be included, so the result can differ from the true item count at any single moment.

Count of items related to a partition key

In this section, we discuss three methods to obtain item counts related to a partition key (item collection).

Using Query

For counting items related to a specific partition key, the Query operation with the Select=COUNT parameter is a straightforward and efficient method. This is particularly useful for small item collections where the number of items is manageable within a single query response.
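A minimal Python sketch, assuming a low-level Boto3 DynamoDB client and a partition key that is a String attribute named PK (an assumption; pass a different pk_name if yours differs). The helper name query_item_count is hypothetical. The pagination loop only matters once a collection exceeds one 1 MB page.

```python
def query_item_count(client, table_name: str, pk_value: str, pk_name: str = "PK") -> int:
    """Count the items in one item collection with Query and Select=COUNT."""
    kwargs = {
        "TableName": table_name,
        "KeyConditionExpression": "#pk = :pk",
        "ExpressionAttributeNames": {"#pk": pk_name},
        "ExpressionAttributeValues": {":pk": {"S": pk_value}},
        "Select": "COUNT",
    }
    total = 0
    while True:
        page = client.query(**kwargs)
        total += page["Count"]
        # Small collections fit in one page; larger ones return LastEvaluatedKey.
        if "LastEvaluatedKey" not in page:
            return total
        kwargs["ExclusiveStartKey"] = page["LastEvaluatedKey"]
```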


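If your item collections contain many items, this method becomes less efficient. Each Query response covers at most 1 MB of data, so counting a large collection means paginating through multiple pages, which is both time-consuming and costly. Select=COUNT doesn’t reduce the capacity consumed: for eventually consistent reads, you are charged 1 RCU per 8 KB of data read, and strongly consistent reads cost 1 RCU per 4 KB.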
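To put these rates in perspective, the following hypothetical helper applies them to the total size of the data read. It is a simplification: it rounds the total once to a 4 KB boundary, whereas DynamoDB rounds each response separately, so real consumption can be slightly higher.

```python
def estimate_read_rcus(total_bytes: int, strongly_consistent: bool = False) -> float:
    """Approximate the RCUs needed to read total_bytes with Query or Scan."""
    # Read capacity is measured in 4 KB (4,096-byte) units, rounded up.
    units = (total_bytes + 4095) // 4096
    # Strongly consistent: 1 RCU per 4 KB. Eventually consistent: half that (1 RCU per 8 KB).
    return units * (1.0 if strongly_consistent else 0.5)


# A collection of 10,000 items of 1 KB each is 10,240,000 bytes, or 2,500 units of 4 KB.
print(estimate_read_rcus(10_000 * 1024))        # 1250.0
print(estimate_read_rcus(10_000 * 1024, True))  # 2500.0
```

Every count of that collection costs roughly 1,250 RCUs with eventually consistent reads, which is why repeated counting of large collections adds up.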

Using Amazon DynamoDB streams and AWS Lambda for near real-time counts

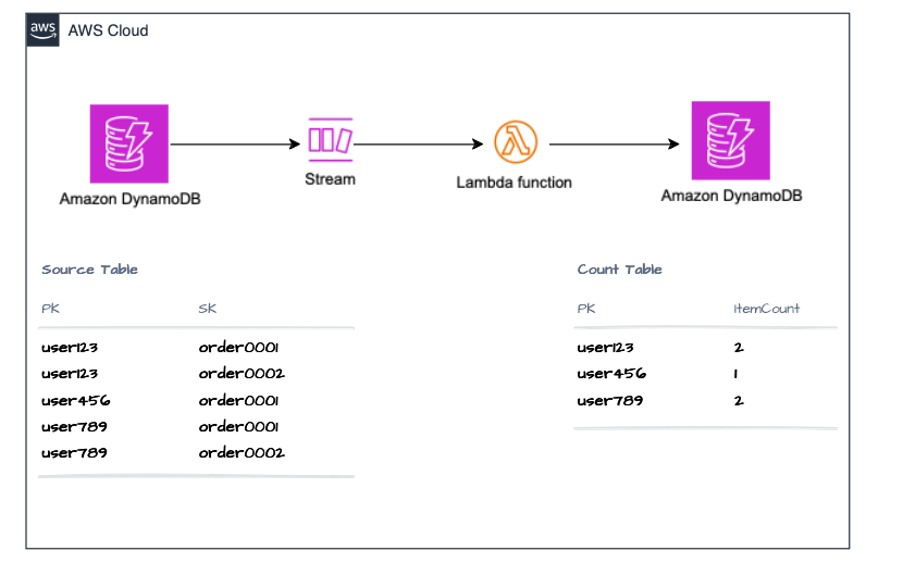

For more complex scenarios requiring near real-time item counts, using Amazon DynamoDB streams and AWS Lambda is an effective approach. By using a Lambda function invoked by DynamoDB streams, you can maintain an up-to-date count in a separate table. This method involves incrementing or decrementing the count based on item changes (additions, updates, deletions) in the source table.

Complete the following steps:

Enable DynamoDB streams on your table.

Create a Lambda function that processes stream records.

Update a separate count table within the Lambda function to reflect item additions and deletions.

The following diagram illustrates the solution architecture and workflow.

The following code is an example Lambda function (Python). The code assumes that the source table has a partition key named PK of type String.
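A minimal sketch of such a function, not the original code, assuming Boto3 (included in the Lambda Python runtime) and a count table keyed by the same String partition key PK that stores the count in a Number attribute. The COUNT_TABLE environment variable and the itemCount attribute are hypothetical names; countTable is the default table name. The record-processing logic is kept separate from the handler so it can be exercised with a stand-in client.

```python
import os

# Count table name; "countTable" matches the text, COUNT_TABLE is a hypothetical override.
COUNT_TABLE = os.environ.get("COUNT_TABLE", "countTable")
COUNT_ATTR = "itemCount"  # hypothetical Number attribute that holds the count

_dynamodb = None  # created on first invocation and reused while the environment stays warm


def update_count(client, pk_value: str, delta: int) -> None:
    """Atomically add delta (+1 or -1) to the count item for pk_value."""
    client.update_item(
        TableName=COUNT_TABLE,
        Key={"PK": {"S": pk_value}},
        # ADD treats a missing item or attribute as 0, so the first insert creates it.
        UpdateExpression="ADD #c :delta",
        ExpressionAttributeNames={"#c": COUNT_ATTR},
        ExpressionAttributeValues={":delta": {"N": str(delta)}},
    )


def process_records(client, records) -> None:
    for record in records:
        event_name = record["eventName"]
        if event_name not in ("INSERT", "REMOVE"):
            continue  # MODIFY changes an existing item, so the count is unchanged
        pk_value = record["dynamodb"]["Keys"]["PK"]["S"]
        # One UpdateItem call (1 WCU for a small count item) per insert or delete event.
        update_count(client, pk_value, 1 if event_name == "INSERT" else -1)


def lambda_handler(event, context):
    global _dynamodb
    if _dynamodb is None:
        import boto3  # included in the Lambda Python runtime

        _dynamodb = boto3.client("dynamodb")
    process_records(_dynamodb, event["Records"])
```

Because the function reads only the Keys of each stream record, the KEYS_ONLY stream view type is sufficient. ADD is not idempotent, so if Lambda retries a batch after a failure, some changes can be applied twice; this sketch does not guard against that.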

The preceding code performs an UpdateItem operation for each individual event in the stream, so every insert or delete event immediately triggers a write to update the item count in the countTable. Consequently, each insert or delete consumes 1 write capacity unit (WCU) in the countTable. This can increase cost and latency, especially when a batch contains many events with the same partition key, because each of those events produces a separate write to the same count item.

The following code snippet offers a more cost-effective and robust solution by accumulating changes locally before updating the countTable. Instead of performing an UpdateItem operation for every event, it aggregates the changes for each partition key in the batch and applies a single update per key. This reduces the number of write operations, lowering WCU consumption and cost, and avoids the latency of many sequential writes. You can also set the batching window on the Lambda event source mapping (MaximumBatchingWindowInSeconds) to control how long Lambda gathers stream records before invoking the function. A longer window produces larger batches, and therefore more aggregation, at the cost of slightly staler counts.
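A minimal sketch of this aggregation, not the original snippet, under these assumptions: Boto3, a String partition key named PK in the source table, and a count table (default countTable, overridable through a hypothetical COUNT_TABLE environment variable) that stores each count in a hypothetical Number attribute named itemCount.

```python
import os
from collections import defaultdict

COUNT_TABLE = os.environ.get("COUNT_TABLE", "countTable")  # hypothetical override
COUNT_ATTR = "itemCount"  # hypothetical Number attribute that holds the count

_dynamodb = None


def aggregate_deltas(records) -> dict:
    """Net change in item count per partition key across one batch of stream records."""
    deltas = defaultdict(int)
    for record in records:
        event_name = record["eventName"]
        if event_name == "INSERT":
            deltas[record["dynamodb"]["Keys"]["PK"]["S"]] += 1
        elif event_name == "REMOVE":
            deltas[record["dynamodb"]["Keys"]["PK"]["S"]] -= 1
        # MODIFY events don't change the number of items, so they are ignored.
    return dict(deltas)


def apply_deltas(client, deltas: dict) -> int:
    """Write one UpdateItem per partition key and return the number of writes made."""
    writes = 0
    for pk_value, delta in deltas.items():
        if delta == 0:
            continue  # inserts and deletes cancelled out, so skip the write
        client.update_item(
            TableName=COUNT_TABLE,
            Key={"PK": {"S": pk_value}},
            UpdateExpression="ADD #c :delta",
            ExpressionAttributeNames={"#c": COUNT_ATTR},
            ExpressionAttributeValues={":delta": {"N": str(delta)}},
        )
        writes += 1
    return writes


def lambda_handler(event, context):
    global _dynamodb
    if _dynamodb is None:
        import boto3  # included in the Lambda Python runtime

        _dynamodb = boto3.client("dynamodb")
    apply_deltas(_dynamodb, aggregate_deltas(event["Records"]))
```

For example, a batch with two inserts for key a, one delete for key b, and an insert plus a delete for key c results in two writes instead of five.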

This method keeps the item count close to real time: the count lags each change only by the time the stream record takes to reach Lambda and be processed. Because the count is maintained in a separate table, you can retrieve it with a single GetItem operation instead of scanning or querying the dataset, which is fast and inexpensive. This is especially beneficial for large tables and high-traffic applications that need quick access to item counts.
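A minimal read-side sketch, assuming a low-level Boto3 client and a count table keyed by a String partition key named PK that stores the count in a Number attribute named itemCount (both names are hypothetical). The helper name get_item_count is also hypothetical.

```python
def get_item_count(client, pk_value: str, table_name: str = "countTable") -> int:
    """Read the maintained count for one partition key with a single GetItem."""
    response = client.get_item(
        TableName=table_name,
        Key={"PK": {"S": pk_value}},
        ProjectionExpression="#c",
        ExpressionAttributeNames={"#c": "itemCount"},  # hypothetical attribute name
    )
    item = response.get("Item")
    # No count item (or attribute) yet means nothing has been counted for this key.
    if not item or "itemCount" not in item:
        return 0
    # The low-level API returns numbers as strings, for example {"N": "7"}.
    return int(item["itemCount"]["N"])
```

GetItem is eventually consistent by default; adding ConsistentRead=True to the call requests a strongly consistent read at twice the read cost.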

Using DynamoDB transactions for idempotent updates

For applications that require exact item counts, DynamoDB transactions provide a robust solution. With transactions, writing an item to your main table (table A) and updating the item count in a separate count table (table B) occur atomically: either both operations succeed or neither does, which maintains data integrity and consistency. Additionally, the ClientRequestToken parameter makes the request idempotent, which prevents accidental duplicate updates. However, this method can cost more, because transactional writes consume twice the write capacity of standard writes, such as the UpdateItem requests used in the DynamoDB Streams and Lambda method.

Using transactions offers the following benefits:

Strong consistency – You can make sure item counts are always accurate and consistent with the actual number of items in the table

Atomic operations – Both writing the item and updating the count are run as a single, atomic transaction

Error handling – If any part of the transaction fails, none of the operations are applied, preventing partial updates

Idempotency – Using ClientRequestToken makes sure repeated requests with the same token will not result in duplicate operations

Idempotency in distributed systems is necessary to prevent unintended side effects from repeated operations, such as duplicate updates. The ClientRequestToken parameter in DynamoDB transactions provides a unique identifier for the transaction request. If you send the same transaction request with the same ClientRequestToken multiple times within 10 minutes, DynamoDB processes the transaction only once; after 10 minutes, a request with the same token is treated as a new request. This means your item counts remain accurate and consistent even when clients retry because of network issues or other transient errors.

In this example, we demonstrate how to use DynamoDB transactions to write an item to table A and increment the item count in table B using the AWS CLI. The steps are as follows:

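Create a JSON file named transaction.json that defines the transaction: a Put of the new item into table A and an Update that increments the item count in table B.

Run the transaction with the AWS CLI transact-write-items command, passing the file with --transact-items file://transaction.json and a unique value with --client-request-token.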
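For comparison, the same transaction can be sketched with Boto3’s transact_write_items call. The table names, the PK key, and the itemCount attribute are hypothetical. The ConditionExpression on the Put is an addition not described above: without it, overwriting an item that already exists would still increment the count. The caller can pass in the token so that a retry reuses it; a fresh UUID (36 characters, the maximum token length) is generated only when none is given.

```python
import uuid
from typing import Optional


def put_item_and_increment(client, table_a: str, table_b: str, item: dict,
                           token: Optional[str] = None) -> str:
    """Write item to table A and increment its collection count in table B atomically.

    Returns the ClientRequestToken used, so the caller can reuse it on retry.
    """
    # Reuse the caller's token on retries; only mint a new one for a new logical request.
    token = token or str(uuid.uuid4())
    pk_value = item["PK"]["S"]
    client.transact_write_items(
        ClientRequestToken=token,
        TransactItems=[
            {
                "Put": {
                    "TableName": table_a,
                    "Item": item,
                    # Fail instead of overwriting an existing item, which would inflate the count.
                    "ConditionExpression": "attribute_not_exists(PK)",
                }
            },
            {
                "Update": {
                    "TableName": table_b,
                    "Key": {"PK": {"S": pk_value}},
                    "UpdateExpression": "ADD #c :one",
                    "ExpressionAttributeNames": {"#c": "itemCount"},
                    "ExpressionAttributeValues": {":one": {"N": "1"}},
                }
            },
        ],
    )
    return token
```

If the condition fails, DynamoDB cancels the whole transaction (Boto3 raises TransactionCanceledException), so neither the item nor the count changes.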

Conclusion

You can obtain item counts from DynamoDB through various methods, each with its own advantages and best use cases. For large tables where immediate accuracy isn’t critical, the DescribeTable API provides a quick and free solution. For accurate counts at the cost of read capacity units, the Scan operation is available. When dealing with specific partition keys, the Query operation with Select=COUNT is efficient for smaller item collections. For near real-time counts, using DynamoDB Streams and Lambda offers a robust and scalable solution. When counts must change atomically with each write, DynamoDB transactions keep items and counts in step, at roughly twice the write cost.

Choose the method that best fits your application’s requirements and DynamoDB usage patterns. By understanding the trade-offs and benefits of each approach, you can efficiently manage item counts in your DynamoDB tables.

If you’d like to understand how to monitor your table’s item count over time, check out “How to use Amazon CloudWatch to monitor Amazon DynamoDB table size and item count metrics”. If you have any questions or feedback, please leave a comment below; we’d love to hear from you.

About the Author

Lee Hannigan is a Sr. DynamoDB Specialist Solutions Architect based in Donegal, Ireland. He brings a wealth of expertise in distributed systems, backed by a strong foundation in big data and analytics technologies. In his role as a DynamoDB Specialist Solutions Architect, Lee excels in assisting customers with the design, evaluation, and optimization of their workloads using the capabilities of DynamoDB.
