Large language models (LLMs) have gained significant attention in the field of artificial intelligence, particularly in the development of model-based agents. These agents, equipped with probabilistic world models, can anticipate future environmental states and plan accordingly. While world models have shown promise in reinforcement learning, researchers are now exploring their potential to enhance agent controllability. The current challenge lies in creating world models that humans can easily modulate, as traditional models rely solely on observational data, which is not an ideal medium for conveying human intentions. This limitation hinders efficient communication between humans and AI agents, potentially leading to misaligned goals and an increased risk of harmful actions during environmental exploration.

Researchers have tried to incorporate language into artificial agents and world models. Traditional world models have evolved from feed-forward neural networks to Transformers, achieving success in robotic tasks and video games. Some approaches have experimented with language-conditioned world models, focusing on emergent language or incorporating language features into model representations. Other research directions include instruction following, language-based learning, and using language descriptions to improve policy learning. Recent work has also explored agents that can read text manuals to play games. However, existing approaches have not fully utilized human language to directly enhance environment models.

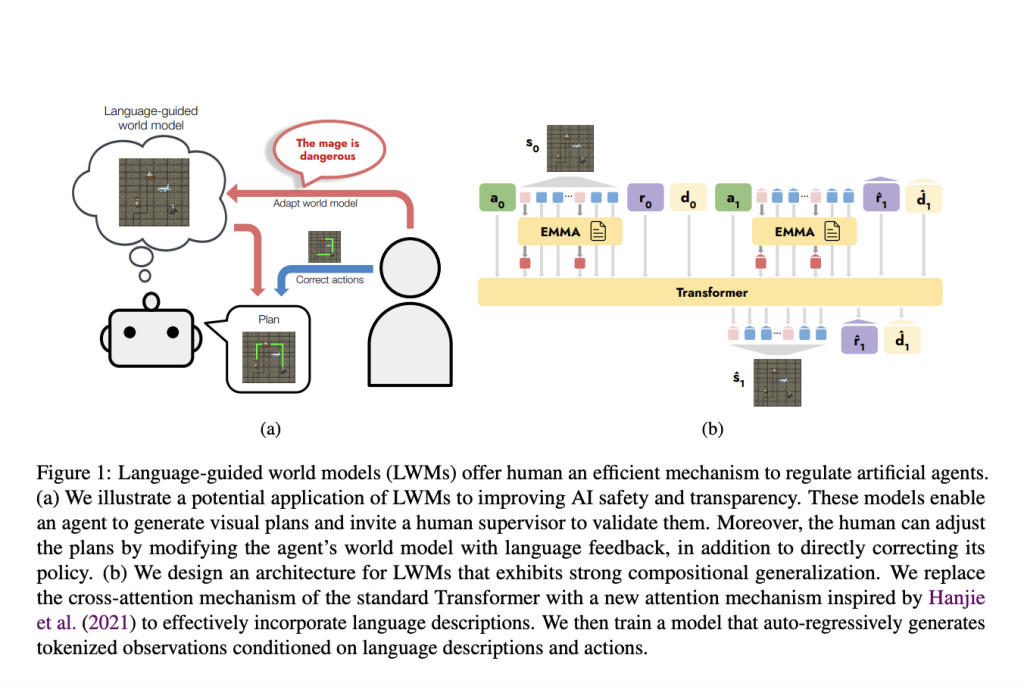

Researchers from Princeton University, the University of California, Berkeley, and the University of Southern California introduce Language-Guided World Models (LWMs), which offer a different approach to overcoming the limitations of traditional world models. These models can be steered through human verbal communication, incorporating language-based supervision while retaining the benefits of model-based agents. LWMs reduce human teaching effort and mitigate the risk of harmful agent actions during exploration. The proposed architecture replaces standard Transformer cross-attention with a new mechanism for incorporating language descriptions, and it exhibits strong compositional generalization. Building LWMs requires grounding language in environmental dynamics, a challenge the researchers study through a benchmark based on the MESSENGER game. In this benchmark, models must learn the grounded meanings of entity attributes from videos and language descriptions, and they must demonstrate compositional generalization by simulating environments with novel attribute combinations at test time.

LWMs are a class of world models designed to interpret language descriptions and simulate environment dynamics. They address the limitations of observational world models by letting humans adapt the models' behavior through natural communication. LWMs can also make use of pre-existing texts, reducing the need for extensive interactive experience and human fine-tuning effort.

The researchers formulate LWMs for entity-based environments, where each entity has a set of attributes described in a language manual. The goal is to learn a world model that approximates the true dynamics of the environment based on these descriptions. To test compositional generalization, they developed the MESSENGER-WM benchmark, which presents increasingly difficult evaluation settings.
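To make the idea of a compositional split concrete, here is a minimal sketch in Python. The entity names, attribute values, manual template, and the held-out pairs are all hypothetical and chosen for illustration; this is not the construction used by MESSENGER-WM, only one simple way to ensure that test-time entities combine familiar identities and attributes in pairings never seen during training.

```python
from itertools import product

# Hypothetical entity identities and attribute values, chosen for illustration.
IDENTITIES = ["mage", "bird", "sword"]
MOVEMENTS = ["chasing", "fleeing", "immovable"]
ROLES = ["enemy", "message", "goal"]


def compositional_split(held_out_pairs):
    """Split (identity, movement, role) triples into train and test sets.

    Any triple whose (identity, movement) pair is held out goes to test,
    so that pairing is never observed during training.
    """
    train, test = [], []
    for identity, movement, role in product(IDENTITIES, MOVEMENTS, ROLES):
        target = test if (identity, movement) in held_out_pairs else train
        target.append((identity, movement, role))
    return train, test


def describe(identity, movement, role):
    """Render one manual line for an entity (the template is an assumption)."""
    return f"The {identity} is {movement} and acts as the {role}."


# Hold out one movement type per identity.
held_out = {("mage", "fleeing"), ("bird", "chasing"), ("sword", "immovable")}
train_set, test_set = compositional_split(held_out)

print(len(train_set), len(test_set))
print(describe(*test_set[0]))
```

Every identity and every movement type still appears in the training set, so a model that grounds each word separately has what it needs to handle the held-out pairings, while a model that memorizes identity-behavior correlations does not.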

The proposed modeling approach uses an encoder-decoder Transformer architecture with a specialized attention mechanism called EMMA (Entity Mapper with Multi-modal Attention). This mechanism identifies entity descriptions and extracts relevant attribute information. The model is trained as a sequence generator, processing both the manual and trajectory data to predict the next token in the sequence.
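The core idea of matching an entity to its description can be illustrated with plain scaled dot-product attention. The sketch below is a simplified stand-in, not the paper's EMMA mechanism: the function names, the toy embeddings, and the choice to use separate key vectors (identity cues) and value vectors (attribute cues) per manual sentence are assumptions made for illustration.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def attend_to_descriptions(entity_query, description_keys, description_values):
    """Soft-select the manual sentence that matches an entity.

    entity_query: embedding of one entity symbol.
    description_keys: one key vector per manual sentence (identity cues).
    description_values: one value vector per sentence (attribute cues).
    Returns the attention weights and the weighted sum of the values.
    """
    scale = math.sqrt(len(entity_query))
    scores = [dot(entity_query, key) / scale for key in description_keys]
    weights = softmax(scores)
    dim = len(description_values[0])
    attended = [
        sum(w * value[i] for w, value in zip(weights, description_values))
        for i in range(dim)
    ]
    return weights, attended


# Toy embeddings (hypothetical numbers): the query is closest to the second key.
query = [0.0, 4.0]
keys = [[4.0, 0.0], [0.0, 4.0], [-4.0, 0.0]]
values = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
weights, attended = attend_to_descriptions(query, keys, values)
print([round(w, 3) for w in weights])
```

In this toy setup nearly all of the attention mass lands on the second sentence, so the attended vector is close to that sentence's attribute representation. A real model would learn the embeddings jointly with the sequence-prediction objective.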

The evaluation of LWMs on the MESSENGER-WM benchmark yielded several key findings:

Cross-entropy losses: EMMA-LWM consistently outperformed all baselines in the more difficult NewAttr and NewAll splits, approaching the performance of the OracleParse model.

Compositional generalization: The EMMA-LWM model demonstrated a superior ability to interpret previously unseen manuals and accurately simulate dynamics, compared to the Observational model which was easily fooled by spurious correlations.

Baseline performance: The Standard model showed sensitivity to initialization, while the GPTHard model underperformed expectations, possibly due to imperfect identity extraction and the benefits of jointly learning identity and attribute extraction.

Imaginary trajectory generation: EMMA-LWM outperformed all baselines in metrics such as distance prediction (∆dist), non-zero reward precision, and termination precision across all difficulty levels (NewCombo, NewAttr, NewAll).

These results highlight EMMA-LWM’s effectiveness in compositional generalization and accurate simulation of environment dynamics based on language descriptions, surpassing other approaches in the challenging MESSENGER-WM benchmark.
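For readers unfamiliar with the metrics mentioned above, the following sketch shows how a cross-entropy loss and a precision score are typically computed. The function names and toy data are hypothetical, and the precision function uses the standard definition (true positives over predicted positives); how the paper computes its reward and termination precision in detail is not stated here, so treat this as an assumption.

```python
import math


def cross_entropy(predicted_probs, target_tokens):
    """Average negative log-likelihood of the target tokens.

    predicted_probs: one dict per step mapping token -> probability.
    target_tokens: the ground-truth token at each step.
    """
    losses = [
        # Clamp to a small floor so a zero probability does not raise an error.
        -math.log(max(probs.get(token, 0.0), 1e-12))
        for probs, token in zip(predicted_probs, target_tokens)
    ]
    return sum(losses) / len(losses)


def precision(predicted_flags, true_flags):
    """Fraction of predicted events that are real events."""
    hits = [bool(t) for p, t in zip(predicted_flags, true_flags) if p]
    if not hits:
        return 0.0
    return sum(hits) / len(hits)


# Toy next-token predictions over a two-token vocabulary.
step_probs = [{"a": 0.5, "b": 0.5}, {"a": 0.25, "b": 0.75}]
loss = cross_entropy(step_probs, ["a", "b"])

# Toy per-step flags, e.g. "the model predicts a non-zero reward at this step".
predicted = [True, True, False, True]
actual = [True, False, True, True]
score = precision(predicted, actual)

print(round(loss, 4), round(score, 4))
```

Lower cross-entropy means the model assigns higher probability to what actually happened next, while higher precision means fewer false alarms when the model predicts a reward or an episode ending.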

LWMs offer a way to adapt world models through natural language rather than through observational data alone. Compared with traditional observational world models, they show promise in enhancing the controllability of artificial agents and in addressing the challenge of compositional generalization. Language-guided adaptation narrows the gap between human communication and machine understanding, allowing more natural and efficient interaction with artificial agents. As research in this area progresses, LWMs could play an important role in the development of more adaptable AI systems across various domains.


The post Language-Guided World Models (LWMs): Enhancing Agent Controllability and Compositional Generalization through Natural Language appeared first on MarkTechPost.
